Windows Azure and Cloud Computing Posts for 6/1/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in June 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

Shamelle posted Managing Azure Storage: 4 Free Azure Cloud Storage Explorer Tools on 5/30/2010:

1. Azure Storage Explorer

Azure Storage Explorer is a useful GUI tool for inspecting and altering the data in your Azure cloud storage projects including the logs of your cloud-hosted applications.

At a glance

- All three types of cloud storage can be viewed: blobs, queues, and tables.

- Blob items can be viewed as text, bytes, or images

- Can create or delete blob/queue/table containers and items.

- Text blobs can be edited and all data types can be imported/exported between the cloud and local files.

- Table records can be imported/exported between the cloud and spreadsheet CSV files.

- Support for multiple storage projects

Try out Azure Storage Explorer

2. CloudBerry Explorer for Azure Blob Storage

Similar to Azure Storage Explorer, CloudBerry Explorer provides a user interface to Microsoft Azure Blob Storage accounts, and files. CloudBerry lets you manage your files on cloud just as you would on your own local computer.

At a glance

- Has support for Dev Storage

- Has support for $root container

- Copy and move files between Microsoft Azure Blob Storage and your local computer

- Create, browse, and delete Microsoft Azure Blob Storage files, folders

- Ability to split blobs into multiple blocks

- Freeware version supports only 1 storage account and expires in 3 months.
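The block-splitting feature in the list above can be pictured with a small sketch. This is not CloudBerry's code. It assumes the Blob service convention that a large blob is uploaded as separately identified blocks whose IDs are Base64 strings of equal length; the function name `split_into_blocks` and the 4 MB default block size are illustrative assumptions.

```python
import base64


def split_into_blocks(data: bytes, block_size: int = 4 * 1024 * 1024):
    """Yield (block_id, chunk) pairs that cover `data` in order.

    Hypothetical helper: the name and the default block size are
    assumptions made for this illustration only.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    for index, offset in enumerate(range(0, len(data), block_size)):
        # A fixed-width counter keeps every encoded ID the same length.
        raw_id = f"{index:08d}".encode("ascii")
        block_id = base64.b64encode(raw_id).decode("ascii")
        yield block_id, data[offset:offset + block_size]


# Small demonstration with a tiny block size.
blocks = list(split_into_blocks(b"x" * 10, block_size=4))
```

Each chunk would then be uploaded on its own and the ordered list of IDs committed at the end, which is what makes pausing and retrying individual blocks possible.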

Try out CloudBerry Explorer for Azure Blob Storage

3. Cloud Storage Studio

Cloud Storage Studio is a Silverlight-based application for managing your Azure Storage account. This is what I use.

At a glance

- Ability to manage multiple storage accounts simultaneously

- Manage tables/entities, queues/messages, blob containers/blobs (create, update, delete)

- Support for container and blob ACL policies

- Automatically connect to Development Storage

- Upload blobs (multiple files, folders in one shot) with the ability to pause, resume and cancel.

- Download blobs (multiple blobs) with the ability to pause, resume and cancel.

- 30-day trial period with full functionality, after which you can either purchase a license or let it switch to a “developer” edition, which retains access to development storage.

Try out Cloud Storage Studio

4. Gladinet Cloud Desktop

Gladinet Cloud Desktop is somewhat different from the above tools: it allows you to manage Azure Blob Storage directly from Windows Explorer. The solution makes it possible to map Windows Azure Blob storage as a virtual network drive.

Try out Gladinet Free Starter Edition

What’s the tool that you use to manage your Azure storage?

(There can be more great ones not listed here. If you know any, please share in the comments.)

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Herve Roggero posted his Enzo SQL Shard SQL Server and SQL Azure Shard Library to CodePlex on 5/31/2010:

Enzo SQL Shard

This library provides a Shard technology that allows you to spread the load of database queries over multiple databases easily (SQL Server and SQL Azure). Uses the Task Parallel Library (TPL) and caching for high performance.

For more information about our company, visit http://www.bluesyntax.net.

Details

This library allows you to perform all the usual tasks in database management: create, read, update and delete records, except that your code can execute against two or more databases seamlessly. Using the usual SqlCommand object, spread the load of your commands to multiple databases to improve performance and scalability. This library uses a Horizontal Partition Shard, which requires your tables to be partitioned horizontally. The download comes with a sample application that shows you how to use the shard library and gives you the execution time of your commands in milliseconds.

Here is sample code that allows you to fetch records from the USERS table across multiple databases. As you can see, extension methods on the SqlCommand object make this program easy to read; new Shard methods have been added to SqlCommand that allow you to execute read, write and insert operations in parallel.

SqlCommand cmd = new SqlCommand();

// Set the parallel option

PYN.EnzoAzureLib.Shard.UseParallel = true;

PYN.EnzoAzureLib.Shard.UseCache = true;

cmd.CommandText = "SELECT * FROM USERS";

DataTable data = cmd.ExecuteShardQuery();

dataGridView2.DataSource = data;

Caching

This library allows you to selectively cache your records so you do not have to perform additional roundtrips to the database. The caching library used is from the Enterprise Library, which is now included in .NET 4.0.

Task Parallel Library (TPL)

And for additional performance, the library uses the TPL, which is also part of .NET 4.0. You can turn this feature on or off and see the impact on performance when you query your Shard using parallel threads.

Round Robin Inserts

And if you need to insert many records in the shard, you can use the ExecuteParallelRoundRobinLoad method, which takes a List of command objects and spreads their execution in a round-robin manner to all the underlying databases in the shard.

Requirements

This library runs only with the .NET 4.0 framework.
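To make the horizontal-partition idea concrete, here is a minimal Python sketch. It is not the Enzo library and does not use its API. In-memory SQLite databases stand in for the SQL Server and SQL Azure databases, and the names `make_shard`, `round_robin_insert` and `shard_query` are hypothetical.

```python
import sqlite3
from concurrent.futures import ThreadPoolExecutor
from itertools import cycle


def make_shard():
    """Create one stand-in database holding a horizontal slice of USERS."""
    # check_same_thread=False lets a worker thread use this connection.
    conn = sqlite3.connect(":memory:", check_same_thread=False)
    conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")
    return conn


def round_robin_insert(shards, rows):
    """Spread rows over the shards one at a time, in rotation."""
    for conn, row in zip(cycle(shards), rows):
        conn.execute("INSERT INTO users (id, name) VALUES (?, ?)", row)
    for conn in shards:
        conn.commit()


def shard_query(shards, sql, parallel=True):
    """Run the same query on every shard and concatenate the results."""
    def run(conn):
        return conn.execute(sql).fetchall()

    if parallel:
        # One worker per shard, so each connection is used by one thread.
        with ThreadPoolExecutor(max_workers=len(shards)) as pool:
            parts = list(pool.map(run, shards))
    else:
        parts = [run(conn) for conn in shards]
    return [row for part in parts for row in part]


shards = [make_shard() for _ in range(3)]
round_robin_insert(shards, [(i, f"user{i}") for i in range(7)])
all_users = shard_query(shards, "SELECT id, name FROM users")
```

Results come back grouped by shard, so a query that needs a global ordering has to re-sort the merged rows in the caller.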

Robin Bonin posted Bulk transfering data in MS SQL 2008 using BCP on 5/30/2010:

Recently I’ve been playing around with SQL Azure. One of the current limitations of Azure is the lack of support for replication. I’ve been looking into different solutions to try and sync Azure data with my local SQL Server. The BCP utility and bulk import seem to be promising. Here is a simple example to copy the contents of a table from one DB table / server to another.

To export data to a binary data file, use the following command:

bcp "[sql query]" queryout [filename].bin -S [serveraddress] -U [username] -P [password] -d [database] -n

To import the data via the BCP utility, you can use the following command line:

bcp [table] in [filename].bin -S [serveraddress] -U [username] -P [password] -d [database] -n

Alternatively, you can also import the data via a SQL Query by using BULK INSERT:

BULK INSERT [tablename]

FROM '[path and filename]'

WITH (DATAFILETYPE='native')

To use these commands with Azure, you must be using version 10.

bcp /v will tell you your version number.
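When the export and import are scripted, it can help to build the two command lines from one set of connection values. The sketch below assembles the argument lists using only the options quoted above (`queryout`, `in`, `-S`, `-U`, `-P`, `-d`, `-n`). The function names and the sample server, login and file values are hypothetical.

```python
def _connection_args(server, user, password, database):
    """Options shared by the export and import commands."""
    return ["-S", server, "-U", user, "-P", password, "-d", database]


def bcp_export_args(query, data_file, server, user, password, database):
    """Arguments for exporting a query result to a native-format file."""
    return (["bcp", query, "queryout", data_file]
            + _connection_args(server, user, password, database)
            + ["-n"])


def bcp_import_args(table, data_file, server, user, password, database):
    """Arguments for loading a native-format file into a table."""
    return (["bcp", table, "in", data_file]
            + _connection_args(server, user, password, database)
            + ["-n"])


# Placeholder values for illustration only.
export_args = bcp_export_args(
    "SELECT * FROM dbo.Users", "users.bin",
    "local-server", "local_user", "local_password", "SourceDb")
import_args = bcp_import_args(
    "dbo.Users", "users.bin",
    "example.database.windows.net", "cloud_user", "cloud_password", "TargetDb")
```

On a machine with bcp installed, each list could be passed to `subprocess.run(args, check=True)`. Building the options in code also avoids pasting a typographic dash in place of the plain hyphen in `-n`.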

<Return to section navigation list>

AppFabric: Access Control and Service Bus

See Clemens Vasters recommended Maggie Myslinska’s TechEd: ASI204 Windows Azure Platform AppFabric Overview session at TechEd North America 2010 in the Cloud Computing Events section below.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Dom Green recommends Deploying to the cloud as part of your daily build – update in this 6/1/2010 post:

Nearly a year ago I made a post about how to deploy your Windows Azure applications to the cloud as part of your daily build.

Well, times have changed and our tools for Windows Azure have got a little shinier, making cloud deployments much easier.

This update was something that I had been itching to do for a while but have been busy plotting world cloud domination with my current project.

Drum roll please…

However, Scott Densmore over at Microsoft’s P&P group has pipped me to the post (literally) with a two part blog series on how to do your daily deployments to Azure using the Windows Azure Service Management CmdLets and MSBuild (part1 & part2). Scott has even added in the ability to upload your certs for SSL during the deployment.

The addition of the PowerShell cmdlets makes this a much more concise set of build tasks, even updating your config to point to the correct storage and account using a custom task. I still prefer using the MSBuild Extension Pack, however.

Additions

One thing that I will be adding to my own set of build scripts is uploading the package and config to Azure storage before I run the deploy tasks. Keeping them in dated blob containers lets me roll back to prior releases at a moment’s notice.
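One possible naming scheme for such dated containers is sketched below. It is an illustration, not Dom's build script: the `releases` prefix and both function names are assumptions. ISO-style dates are used because they sort lexicographically, which makes finding the release to roll back to a simple comparison.

```python
import re
from datetime import date


def release_container_name(release_date, prefix="releases"):
    """Build a container name such as 'releases-2010-06-01'.

    The check mirrors the usual blob container naming rules: 3 to 63
    characters, lowercase letters, digits and single hyphens only.
    """
    name = f"{prefix}-{release_date:%Y-%m-%d}"
    valid = re.fullmatch(r"[a-z0-9](?:-?[a-z0-9])+", name)
    if not valid or not 3 <= len(name) <= 63:
        raise ValueError(f"invalid container name: {name!r}")
    return name


def previous_release(container_names, current):
    """Return the newest container older than `current`, or None."""
    older = sorted(name for name in container_names if name < current)
    return older[-1] if older else None


today = release_container_name(date(2010, 6, 1))
known = ["releases-2010-05-30", "releases-2010-05-31", today]
rollback_target = previous_release(known, today)
```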

Guidance

There’s some great Azure guidance coming from Scott and Eugenio in the Patterns and Practice team so keep a watch on their site for all manner of Azure related goodness.

The Microsoft Partner Network (MPN) released the Microsoft Platform Ready (MPR) project “Built on Windows® Azure™” on 6/1/2010:

About Microsoft Platform Ready

Microsoft Platform Ready (MPR) is designed to give you what you need to plan, build, test and take your solution to market. We’ve brought a range of platform development tools together in one place, including technical, support and marketing resources, as well as exclusive offers. With MPR, you can:

- Access training and webinars to help you get compatible.

- Test your application with online resources and testing tools.

- Utilize marketing toolkits including customizable templates for email, letters and presentations.

Supported Technologies

Windows 7, Windows Server 2008 R2, SQL Server 2008 R2, Microsoft Office 2010, SharePoint 2010, Exchange 2010 and Windows Azure including SQL Azure.

Eugenio Pace announced Windows Azure Architecture Guide – Part 1 – Live! on 6/1/2010:

While we wait for the book to be published, we packaged the entire guide to be available online. We are using a very cool tool from our colleagues at ContentMaster which allows us to deliver a richer experience.

Many of the diagrams in the guide are now “active”. When you hover with your mouse over these areas, you will get a more detailed explanation of what is interesting about them:

There are also a number of walkthrough videos (activate the script with the button below) [in Eugenio’s post.]

By the way, as you probably can guess from the URL, the entire content is hosted in Windows Azure storage as blobs.

Joe from the new Azure Miscellany blog describes how to program Web Role [Diagnostic] Crash Dumps in this detailed post of 6/1/2010:

After closer inspection, it appeared the role instance was not entirely unresponsive; it was still performing its duties (logging, sending heartbeat signals back to the fabric controller and processing HTTP requests) but because its CPU was maxed out, it was taking so long to process requests they were timing out. For this reason, the instance was not registered as ‘unresponsive’ by the Azure Fabric Controller and so was not recycled.

This caused us quite a headache because we have 2 web roles (for failover and scalability reasons) but the Azure load balancer which sends the HTTP traffic to the web role instances appears to be quite basic (round robin) and is not aware that one of the role instances has become (to all intents and purposes) unresponsive, so the ‘hung’ instance continued to receive approximately half of all incoming HTTP traffic rendering our cloud app effectively unavailable.
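The "approximately half" figure follows directly from round-robin distribution, as a short simulation shows. This is a toy model under the assumption of strict rotation with no health checks; the instance names are made up and it says nothing about how the Azure load balancer is actually implemented.

```python
from itertools import cycle, islice


def failed_fraction(instances, hung, request_count):
    """Fraction of requests sent to hung instances under strict rotation."""
    targets = islice(cycle(instances), request_count)
    failures = sum(1 for name in targets if name in hung)
    return failures / request_count


# Two web role instances, one of them pegged at 100% CPU.
two_roles = failed_fraction(["web_0", "web_1"], {"web_1"}, 1000)
# With four instances, a single hung one would take a quarter of the traffic.
four_roles = failed_fraction(["web_0", "web_1", "web_2", "web_3"], {"web_1"}, 1000)
```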

Furthermore, because the role instance was still sending heartbeat signals back to the Fabric Controller the instance was not automatically recycled, which meant we had to do it manually.

The big problem was how to debug this issue. We couldn’t recreate the issue on the dev fabric.

What we did

We use the Azure Diagnostics framework pretty extensively throughout our cloud app (which is how we can tell the problem is a CPU spike), but the usual steps of logging etc were not ideal, since we had no idea where the CPU spike was originating from.

What would be ideal is a crash dump of the web role VM instance while its CPU was spiking, then we could inspect the call stacks/number of threads/heap etc using WinDbg and isolate the cause. But it’s quite rare to get a web role crash dump organically since any unhandled exceptions that bubble up are handled by Global.asax’s default unhandled Exception handler.

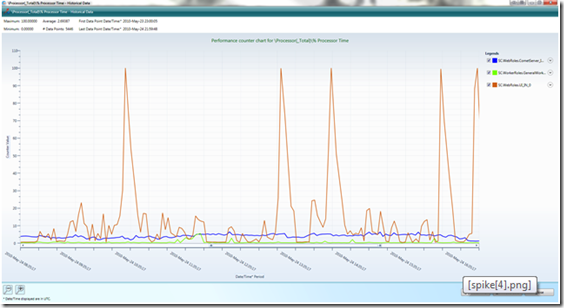

To work around this, we started a new Thread on the WebRole OnStart() method which monitored the Processor(_Total) perf counter for the web role instance.
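The general shape of such a watchdog can be sketched in a few lines. This is not Joe's C# code, which is in his post. The sketch assumes a caller-supplied function that returns the current CPU percentage and a callback to run once the reading has stayed above a threshold for several consecutive samples. All names and default values are hypothetical.

```python
import threading


def start_cpu_watchdog(read_cpu_percent, on_sustained_spike,
                       threshold=90.0, required_samples=5,
                       interval_seconds=1.0):
    """Start a background thread that fires once on a sustained spike.

    Returns the thread and an Event that stops the watchdog when set.
    """
    stop_event = threading.Event()

    def watch():
        consecutive = 0
        while not stop_event.is_set():
            if read_cpu_percent() >= threshold:
                consecutive += 1
            else:
                consecutive = 0  # a single normal reading resets the count
            if consecutive >= required_samples:
                on_sustained_spike()
                return
            stop_event.wait(interval_seconds)

    thread = threading.Thread(target=watch, daemon=True, name="cpu-watchdog")
    thread.start()
    return thread, stop_event


# Demonstration with canned readings instead of a real counter.
readings = iter([50, 95, 95, 40, 95, 95, 95])
fired = []
watchdog, stop = start_cpu_watchdog(
    lambda: next(readings, 0.0), lambda: fired.append(True),
    threshold=90.0, required_samples=3, interval_seconds=0.0)
watchdog.join(timeout=5)
```

Requiring several consecutive samples keeps a brief burst of load from triggering the callback; what the callback does is left to the caller.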

Joe continues with the C# code for the workaround and:

Conclusion

This meant we had a crash dump to analyse and it had the added bonus that the Web Role instance was marked as unhealthy and automatically recycled by the Fabric Controller.

If you’re interested, the cause of the CPU spike was some dodgy server side image resizing we were doing, but that’s another topic and another post.

Maarten Balliauw announced on 5/31/2010 that new version 1.1.2-dev of his php_azure.dll is available for download from CodePlex’s Windows Azure - PHP contributions project.

iLink Systems.com announced its County.gov Azure demo project on 6/1/2010. This project appears to have grown out of the company’s iLink GIS Framework app, which won third place in the Windows Azure public sector developer contest.

Maarten Balliauw posted on 5/31/2010 the Running in the Cloud - First Belgian Azure project slide deck that describes RealDolmen’s Windows Azure project for the ChronoRace’s web site upgrade to handle the traffic generated by the 20 km de Bruxelles event that drew 30,000 joggers to downtown Brussels on 5/31/2010:

Check out @maartenballiauw’s tweets about the autoscaling features of the Azure site.

Gaurav Mantri posted Announcing the launch of Azure Diagnostics Manager: A desktop client to visualize and manage Windows Azure diagnostics data on 5/31/2010:

I am pleased to announce that after nearly four months of private and public beta, Azure Diagnostics Manager is now available for purchase.

What is Azure Diagnostics Manager?

Azure Diagnostics Manager is a desktop client for visualizing and managing Windows Azure Diagnostics data logged by the applications running in Windows Azure.

At a very high level, Azure Diagnostics Manager has the following features:

Event Logs Viewer: The Event Logs viewer feature in Azure Diagnostics Manager allows you to view logged events in a “Windows Event Viewer”-like user interface. You can view both live and historic event data logged by your application. You can filter the data (both on the server and client side) as well as download the data in CSV format to your computer for further analysis.

Trace Logs Viewer: The Trace Logs viewer feature in Azure Diagnostics Manager allows you to view logged trace data in a “Windows Event Viewer”-like user interface. You can view both live and historic trace data logged by your application. You can filter the data (both on the server and client side) as well as download the data in CSV format to your computer for further analysis.

Azure Infrastructure Logs Viewer: The Infrastructure Logs viewer feature in Azure Diagnostics Manager allows you to view infrastructure data logged by Windows Azure in a “Windows Event Viewer”-like user interface. You can view both live and historic data logged by your application. You can filter the data (both on the server and client side) as well as download the data in CSV format to your computer for further analysis.

Performance Counters Viewer: The Performance Counters viewer in Azure Diagnostics Manager allows you to view performance counter data logged by your applications in a “Windows Perfmon”-like user interface. You can view charts of the performance counters (both live and historic) and a statistical summary (Average/Max/Min) of this data. You can also download the data in CSV format to your computer for further analysis.

Directory Logs (IIS/Failed Requests/Crash Dumps) Viewer: You can view/download IIS logs, IIS Failed Request logs and Crash Dump logs using Azure Diagnostics Manager.

On Demand Transfer: By default, Windows Azure Diagnostics keeps all the diagnostics data in your application’s VM and transfers it periodically to Windows Azure Storage based on a transfer schedule defined by your application. Using Azure Diagnostics Manager, you can perform an “On Demand” transfer from the application’s VM to Windows Azure Storage.

Remote Diagnostics Management: One way to configure the diagnostics in your application is by including the diagnostics information in your application code. Using Azure Diagnostics Manager’s remote diagnostics management feature, you can configure the diagnostics data on the fly thus eliminating the need for redeploying your application.

Dashboard: Dashboard feature in Azure Diagnostics Manager allows you to monitor LIVE data feed for performance counters, event logs and trace logs data of your applications.

Gaurav continues with pricing and trial details.

Scott Densmore adds his workaround for a problem running MVC 2 on .NET 4 in his How a Checkbox Saved Paving My Machine post of 5/28/2010:

Last week we started working on the code for part 2 of our Windows Azure Architecture Guidance. Eugenio has a great writeup of our start to Part 2. We started framing out the code for our project. We decided to use MVC 2, .NET 4.0 / VS2010 for this project. Once we started hosting the application in Azure using .NET 4.0 (yes there are tools coming for this), the application stopped working on my machine. At first I thought this was an Azure problem but come to find out it actually has to do with MVC 2 and IIS. The problem came down to a check box. The problem is actually documented in a KB and the ReadMe from the .NET 4 install. Both talk about returning 404 errors, but I found that my situation turned into blank pages. All this came down to a single checkbox in IIS: HTTP Redirection.

I will not bore you with my 3 days of fighting this. Most people will not run into this if you are not selective about what you install in IIS. I let the WebPI install the Azure Tools for Visual Studio 2008 and when I upgraded to Visual Studio 2010 this was not selected. I could run ASP.NET 4.0 applications, MVC 2 applications targeting 3.5, but not MVC 2 applications targeting .NET 4.0 when running under IIS. I hope this helps someone else that runs into this problem.

Thanks to Brad Wilson, Phil Haack and the rest of the team for helping me figure this out.

<Return to section navigation list>

Windows Azure Infrastructure

Lori MacVittie leads with Cloud and virtualization share a common attribute: dynamism. That dynamism comes at a price… for her The Rise of the Out-of-Band Management Network post of 6/1/2010:

Let’s talk about management. Specifically, let’s talk about how management of infrastructure impacts the network and vice-versa, because there is a tendency to ignore that the more devices and solutions you have in an infrastructure the more chatty they necessarily become.

In most organizations management of the infrastructure is accomplished via a management network. This is usually separate from the core network in that it is segmented out by VLANs, but it is still using the core physical network to transport data between devices and management solutions. In some organizations an “overlay management network” or “out-of-band” network is used. This network is isolated – physically – from the core network and essentially requires a second network implementation over which devices and management solutions communicate. This is obviously an expensive proposition, and not one that is implemented unless it’s absolutely necessary. Andrew Bach, senior vice president of network services for NYSE Euronext (New York Stock Exchange) had this to say about an “overlay management network” in “Out-of-band network management ensures data center network uptime”

Bach said out-of-band network management requires not only a separate network infrastructure but a second networking vendor. NYSE Euronext couldn't simply use its production network vendor, Juniper, to build the overlay network. He described this approach as providing his data center network with genetic diversity.

"This is a generalized comment on network design philosophy and not reflective on any one vendor. Once you buy into a vendor, there is always a possibility that their fundamental operating system could have a very bad day," Bach said. "If you have systemic failure in that code, and if your management platform is of the same breed and generation, then there is a very good chance that you will not only lose the core network but you will also lose your management network. You will wind up with absolutely no way to see what's going on in that network, with no way to effect repairs because everything is dead and everything is suffering from the same failure."

"Traditionally, in more conventional data centers, what you do is you buy a vendor's network management tool, you attach it to the network and you manage the network in-band – that is, the management traffic flows over the same pipes as the production traffic," Bach said.

Most enterprises will manage their data center network in-band by setting up a VLAN for management traffic across the infrastructure and dedicating a certain level of quality of service (QoS) to that management traffic so that it can get through when the production traffic is having a problem, said Joe Skorupa, research vice president at Gartner.

Right now most enterprises manage their infrastructure via a management network that’s logically separate but not physically isolated from the core network. A kind of hybrid solution. But with the growing interest in implementing private cloud computing that will certainly increase the collaboration amongst infrastructure components and a true out-of-band management implementation may become a necessity for more organizations – both horizontally across industries and vertically down the “size” stack.

Lori continues with INFRASTRUCTURE 2.0 WILL DRIVE OOB MANAGEMENT and THE WHOLE is GREATER THAN the SUM of the PARTS topics.

Edward L. Haletky proposed Defining Tenants for Secure Multi-Tenancy for the Cloud in this 6/1/2010 post to The Virtualization Practice blog:

The panel of the Virtualization Security Podcast on 5/27/2010 was joined by an attorney specializing in the Internet space. David Snead spoke at InfoSec and made it clear that there was more to secure multi-tenancy (SMT) than one would imagine. The first question was “how would you define tenant?” which I believe is core to the discussion of SMT as without definitions we have no method of communicating. Before we get to David’s response, we should realize that nearly everyone has their own definition of Tenant for a multi-tenant solution. If you talk to Cisco with respect to CVN, it is defined as entities requiring differing levels of QoS. If you talk to a storage vendor (NetApp or EMC) you really get an “encrypt data at rest” answer that is a small part of the entire Secure Multi-Tenancy discussion. You will also get answers related to storage availability. Cisco’s answer is actually all about Availability and guaranteeing that a tenant receives the assigned QoS throughput.

David Snead, an attorney, defined Tenant as “whatever definition is used within the contract”. If there is no definition within your contract then assumptions are made, so I tend to fall back to the definition of Tenant to be “the legal entity responsible for the data.” So you need to read your contracts carefully.

Regardless of how you define Tenant within a contract or using my own definition, there are governmental dictates that come into play for personally identifiable information (PII) which could impose a split personality within a given tenant. In Europe, for example, a Tenant is required to handle PII in a very specific ‘secure’ way, while the government may not care much about the rest of the data. When you state you are PCI or HIPAA compliant, you also sign on to treat PII data differently or at least in accordance with the respective regulatory compliance. This does not actually mean the data is secure, just that you follow some regulatory compliance.

Outside of regulatory compliance Tenant gets obfuscated further to include data classification within the tenant causing even further bifurcation of the term ‘tenant’.

Ed continues his search for the appropriate definition.

Chris Hoff (a.k.a. @Beaker) posted Incomplete Thought: The DevOps Disconnect on 5/31/2010:

DevOps — what it means and how it applies — is a fascinating topic that inspires all sorts of interesting reactions from people, polarized by their interpretation of what this term really means.

At CloudCamp Denver, adjacent to Gluecon, Aaron Pederson of OpsCode gave a lightning talk titled: ”Operations as Code.” I’ve seen this presentation on-line before, but listened intently as Aaron presented. You can see John Willis’ version on Slideshare here. Adrian Cole (@adrianfcole) of jClouds fame (and now Opscode) and I had an awesome hour-long discussion afterwards that was the genesis for this post.

“Operations as Code” (I’ve seen it described also as “Infrastructure as Code”) is really a fantastically sexy and intriguing phrase. When boiled down, what I extract is that the DevOps “movement” is less about developers becoming operators, but rather the notion that developers can be part of the process whereby they help enable operations/operators to repeatably and with discipline, automate processes that are otherwise manual and prone to error.

[Ed: great feedback from Andrew Shafer: "DevOps isn't so much about developers helping operations, it's about operational concerns becoming more and more programmable, and operators becoming more and more comfortable and capable with that." Further, John Allspaw (@allspaw) added some great commentary below - talking about DevOps really being about tools + culture + communication. Adam Jacob from Opscode *really* banged out a great set of observations in the comments also. All good perspective.]

Automate, automate, automate.

While I find the message of DevOps totally agreeable, it’s the messaging that causes me concern, not because of the groups it includes, but those that it leaves out. I find that the most provocative elements of the DevOps “manifesto” (sorry) are almost religious in nature. That’s to be expected as most great ideas are.

In many presentations promoting DevOps, developers are shown to have evolved in practice and methodology, but operators (of all kinds) are described as being stuck in the dark ages. DevOps evangelists paint a picture that compares and contrasts the Agile-based, reusable componentized, source-controlled, team-based and integrated approach of “evolved” development environments with that of loosely-scripted, poorly-automated, inefficient, individually-contributed, undisciplined, non-source-controlled operations.

You can see how this might be just a tad off-putting to some people.

In Aaron’s presentation, the most interesting concept to me is the definition of “infrastructure.” Take the example to the right, wherein various “infrastructure” roles are described. What should be evident to many, especially those in enterprise (virtualized or otherwise) or non-Cloud environments, is that these software-only components represent only a fraction of what makes up “infrastructure.”

Hoff continues his argument and concludes:

I only wish that (NetSec) DevOps evangelists — and companies such as Opscode — would address this divide up-front and start to reach out to the enterprise world to help make DevOps a goal that these teams aspire to rather than something to rub their noses in. Further, we need a way for the community to contribute things like Chef recipes that allow for flow-through role definition support for hardware-based solutions that do have exposed management interfaces (Ed: Adrian referred to these in a tweet as ‘device’ recipes)

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

Clemens Vasters recommended Maggie Myslinska’s TechEd: ASI204 Windows Azure Platform AppFabric Overview session at TechEd North America 2010 in this 6/1/2010 post:

Come learn how to use Windows Azure AppFabric (with Service Bus and Access Control) as building block services for Web-based and hosted applications, and how developers can leverage services to create applications in the cloud and connect them with on-premises systems.

- Room 398, Tuesday June 8

- 3:15pm-4:30pm

- Session Type: Breakout Session

- Track: Application Server & Infrastructure

- Speaker(s): Maggie Myslinska

- Level: 200 – Intermediate

If you are planning on seeing Juval’s and my talk ASI304 at TechEd and/or if you need to know more about how Windows Azure AppFabric enables federated cloud/on-premise applications and a range of other scenarios, you should definitely put Maggie’s talk onto your TechEd schedule as well.

The InformationWeek::Analytics Team leads off with Mike Healey’s Practical Guide to SaaS Success white paper in a 6/1/2010 flurry of SaaS publications (a SaaSathon?):

Software as a service is hot, and we have the stats to prove it: Fully 68% of the 530 respondents to our InformationWeek Analytics 2010 Outsourcing Survey use some form of SaaS. And they’re happy with that decision. Most say SaaS provided higher-quality results versus internal sourcing, with 37% pointing to the IT nirvana of “higher quality at a lower cost.” Forty-four percent plan to expand their use of SaaS this year.

Welcome to the Golden Age of SaaS. We’re using it, we like it and we’re poised to bet big that it will continue to deliver benefits. However, just like previous Golden Ages, there are some real problems that are being swept aside.

David Linthicum posits “While many cloud providers consider APIs as an afterthought, they should be front and center” and concludes The API is everything for cloud computing in this 6/1/2010 post to InfoWorld’s Cloud Computing blog:

I spoke at Glue Con last week, a developer-oriented conference held in Denver this year. What's the core message from the conference around cloud computing? You can answer that question with three letters: A-P-I.

APIs are nothing new to cloud computing; indeed, most cloud services are accessed using an API -- whether you're allocating compute or storage resources, placing a message on a queue, or remotely shutting down a virtual instance. However, the gap between what we have today and where we need to be is vaster than most cloud computing users understand.

For example, at the conference we heard Ryan Sarver, director of platform at Twitter, state that early on their APIs were "very simplistic." But now developers are looking to use Twitter in new and more exciting ways, so Twitter is enhancing its APIs -- for example, promoting the use of its API by creating a new developer portal, as well as providing more code samples, more documentation, and more tools. Oh yeah, they're also creating more APIs. Twitter is adding arbitrary metadata or structured data to tweets. (There are 140,000 applications that use the Twitter API, Sarver said.)

Beyond social networking services, you can count on the majority of the future features of cloud computing to be based on APIs, and thus developer-oriented, not user-oriented. While SaaS systems, such as Salesforce.com, were all about the visual interface initially (and that is still important for SaaS), more and more cloud computing users will consume both data and behavior using the APIs, so they can mix and match cloud services within traditional enterprise or composite applications. This blurs the line between what lives in the clouds and what does not.

However, we're not there yet, generally speaking, with cloud APIs. There are still two key issues that providers need to address:

First, APIs for most cloud providers are often an afterthought. Looping back and exposing their core services as APIs often requires rethinking how the core system is architected. That's why, in many instances, the providers go for quick and lower-value API solutions -- a mistake. Engineer APIs into your systems from the get-go.

Second, APIs are often not documented or supported as well as they should be. Developers will use the easiest API; therefore, providers' APIs had better be that path of easiest use, or they'll end up watching developers go elsewhere.

Brenda Michelson posted Dave and Brenda discuss top Cloud Computing Stories for May on 6/2/2010:

Last week, Dave Linthicum invited me back to his podcast to discuss top cloud computing stories for May. There were no set rules on what constituted “top”. We each picked 3 stories, which we didn’t reveal to each other beforehand.

As for the results, I will say that we had one story in common, two mainstream stories (one each) and two wildcards (one each). The format was fun, so we’ll do another at the end of June.

To listen to the podcast (16 minutes or so), go here.

The WCF Data Services Team announced OData Workshops in Raleigh, Charlotte, Atlanta, Chicago and NYC in a 6/1/2010 post:

Chris (aka Woody) Woodruff has organized a series of OData workshops to complement the official OData Roadshow.

This is highly recommended. So if for whatever reason you can’t make it to one of the OData Roadshow events, or you just can’t get enough OData, see if you can get along to one of Chris’ workshops.

The workshops start in Raleigh tomorrow and finish in NYC on June 28th.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

No significant articles today.