Windows Azure and Cloud Computing Posts for 7/5/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series.

Update 7/6/2010: Articles added 7/6/2010 are marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in June 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

• Wayne Walter Berry delivered an Introduction to Data Sync Service for SQL Azure on 7/6/2010:

Data Sync Service for SQL Azure allows you to perform bi-directional synchronization between two or more SQL Azure databases. This blog post will discuss the basics of Data Sync Services for SQL Azure.

Under the Covers

SQL Azure Data Sync Service runs on Windows Azure, using web and worker roles and the Microsoft Sync Framework. The Windows Azure account is … owned by the Microsoft Sync Team, and is managed by them; Data Sync Service for SQL Azure is not an application that you install within a role on you[r] Windows Azure account. This service is currently hosted in the South Central US data center. There is an online web interface that manages the configuration of your synchronization sets, allowing you to configure which databases you want to synchronize, on which servers, in which data centers, and how often. Currently, SQL Azure Data Sync Service is in CTP and is available in SQL Azure Labs.

Getting Started

In every synchronization scenario, there is a single hub database and many member databases. When you set up the synchronization group, you declare the hub database and assign as many empty member databases as you want.

On the first synchronization, Data Sync Service for SQL Azure provisions the member databases by copying the hub database’s schema to them. In other words, you do not have to generate the schema on member databases; this is done for you by Data Sync Service for SQL Azure. Changes are also made to the hub database to efficiently track data changes.

When the schema is copied from the hub database to the member databases the foreign key constraints are not copied. This allows the table data to be inserted in any order on the member database. By not enforcing the foreign key constraints on member databases Data Sync Service for SQL Azure doesn’t have to convert the hub database to read-only on the first synchronization, plus it works with data schemas that have circular foreign key references (for more about circular references see this blog post).

After the member databases have been provisioned, on the first synchronization, all the data from the hub database is copied to the member databases table by table. The order of the inserts doesn’t matter, since there are no foreign key constraints on the member databases.

Data Transfer Pricing

The member databases can be on servers that are not in the same data center as Data Sync Service (South Central US). If your databases are in different data centers, when you synchronize your databases (transfer data) you will be charged for bandwidth in both directions for databases that are not in South Central US.

The limitation of only being able to run Data Sync Service from South Central US is a limitation of the CTP. As with the other Windows Azure Platform services, if all your databases are in the same data center and the Data Sync Service is running there, there will be no fee for data exchange.

Only the data that has changed will be transferred on synchronization, not the whole database. However, since that data needs to be synchronized with all member databases and the hub, the number of members is a multiplier in the cost of the data transfer.

For more about pricing click here.

Partial Synchronization

Data Sync Service for SQL Azure allows you to pick the tables you would like to synchronize. You do not have to synchronize the whole database. This allows you to create a synchronization group where the member databases are a subset of the hub. This create[s] an interesting scenario where you can give permissions to a member database that are different from the permissions on the hub database, allowing users to access a subset of the data. I will discuss how to do this in a later blog post using PowerPivot.

Schema Changes

When Data Sync Service for SQL Azure creates the schema on the member databases it is a copy of the schema on the hub database. The hub database’s schema cannot be changed once the synchronization group has been established. In order to change the schema on the hub database you need to remove the synchronization group and recreate a new synchronization group which will reallocate your member databases; re-generating their schemas and moving the data again.

On Demand or Scheduled Synchronization

Synchronization can be done on demand or on a set schedule; unlike merge replication, there is no real-time synchronization. The smallest increment for scheduled synchronization in the current CTP is one hour; more frequent synchronization will be coming in the release version.

Bi-Directional Synchronization

Synchronization on Data Sync Service happens bi-directionally: you can write to the member databases and, when synchronization occurs, the hub and all member databases are updated. Or, you can write to the hub database and synchronize the member databases. In other words, all the databases are read-write.

Data Sync Service for SQL Azure doesn’t allow you to program the handling of conflict resolution as [you can with] the Microsoft Sync Framework. For this reason it is best, if you are inserting rows into multiple member databases, that you use [the] uniqueidentifier data type [for] primary keys…, instead of the int IDENTITY data type. This will prevent identity conflicts during the bi-directional synchronization. If you are working with a legacy database or do not want to use the uniqueidentifier data type, there are several other methods of ensuring a unique primary key across all the databases. I will blog more about this later.
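To make the identity-conflict point concrete, here is a minimal Python sketch. It does not call Data Sync Service or SQL Azure; the dictionaries standing in for member databases and all helper names are hypothetical. It only shows why independently numbered integer keys collide across databases while GUID-style keys do not.

```python
import itertools
import uuid


def insert_with_identity(db, counter, value):
    """Mimic an int IDENTITY column: each database numbers rows on its own."""
    key = next(counter)
    db[key] = value
    return key


def insert_with_guid(db, value):
    """Mimic a uniqueidentifier column: keys are unique across databases."""
    key = uuid.uuid4()
    db[key] = value
    return key


def conflicting_keys(db_a, db_b):
    """Return keys present in both databases that point at different rows."""
    return {k for k in db_a.keys() & db_b.keys() if db_a[k] != db_b[k]}


# Two hypothetical member databases receive inserts between synchronizations.
member_a, member_b = {}, {}
counter_a, counter_b = itertools.count(1), itertools.count(1)
insert_with_identity(member_a, counter_a, "order placed in member A")
insert_with_identity(member_b, counter_b, "order placed in member B")
identity_conflicts = conflicting_keys(member_a, member_b)

guid_a, guid_b = {}, {}
insert_with_guid(guid_a, "order placed in member A")
insert_with_guid(guid_b, "order placed in member B")
guid_conflicts = conflicting_keys(guid_a, guid_b)

print(identity_conflicts)  # both members used key 1 for different rows
print(guid_conflicts)      # no shared keys, so nothing to reconcile
```

With integer identities, both members assign key 1 to different rows, which a bi-directional merge cannot reconcile on its own; with GUID keys the two rows simply coexist.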

I consider synchronization between SQL Compact on Windows Phone 7’s Isolated Storage, on-premises SQL Server instances and SQL Azure to be equally interesting. Liam Cavanagh demonstrated “how the Sync Framework is being enhanced to expand our offline client-side reach beyond traditional Windows Presentation Foundation/Winforms applications by adding support for Microsoft Silverlight, HTML 5, Windows Phone 7, and other devices such as an iPhone” in his TechEd North America 2010 presentation, COS07-INT | Using Microsoft SQL Azure as a Datahub to Connect Microsoft SQL Server and Silverlight Clients (PDF slide deck.)

• Hilton Giesenow posted a 00:12:26 Use SQL Azure to build a cloud application with data access screencast to MSDN on 7/6/2010:

Microsoft SQL Azure provides a suite of great relational-database-in-the-cloud features. In this video, join Hilton Giesenow, host of The Moss Show SharePoint Podcast, as he explores how to sign up and get started creating a Microsoft SQL Azure database. In this video we also look at how to connect to the SQL Azure database using the latest release of Microsoft SQL Server Management Studio Express (R2) as well as how to configure an existing on-premises ASP.NET application to speak to SQL Azure.

• Gil Fink shows you how to use the OData Explorer in this 7/6/2010 post:

One nice tool to use with OData is the OData Explorer. The OData Explorer is a tool that helps to explore OData feeds in a visual way and not by exploring the Atom/JSON responses. You can download the tool from here. Another way to use the tool is by going to the following link: http://Silverlight.net/ODataExplorer.

OData Explorer Requirements: The tool is a Silverlight 4 project. In order to use it you’ll have to meet these requirements:

- Visual Studio 2010

- Silverlight 4 Developer runtime

- Silverlight 4 SDK

- Silverlight 4 tools for Visual Studio

- Latest Silverlight Toolkit release for Silverlight 4

or of course use it on-line in the link I provided previously.

Using the Tool: When the tool starts you’ll get to the Add New OData Workspace view:

Here you can address your feed or address public OData feeds.

After choosing the workspace you’ll get into the tool:

Gil continues with more tool details.

• Pluralcast posted its Pluralcast 19 : OData with Matt Milner podcast on 7/6/2010:

Listen to this Episode [45:12]

What’s all this OData talk? Find out in this episode with Matt Milner, who helps us understand this new way of publishing and consuming data on the web. Matt also tells us about a project he recently did for Pluralsight in which he published his first “real” OData feed.

Matt Milner

Matt is a member of the technical staff at Pluralsight, where he focuses on connected systems technologies (WCF, Windows WF, BizTalk, "Dublin" and the Azure Services Platform). Matt is also an independent consultant specializing in Microsoft .NET application design and development. As a writer Matt has contributed to several journals and magazines including MSDN Magazine where he currently authors the workflow content for the Foundations column. Matt regularly shares his love of technology by speaking at local, regional and international conferences such as Tech Ed. Microsoft has recognized Matt as an MVP for his community contributions around connected systems technology.

Show Links

- OData.org

- The Pluralsight OData Feed

- OData Q&A on MSDN

- Online OData Course

- @milnertweet – Matt on Twitter

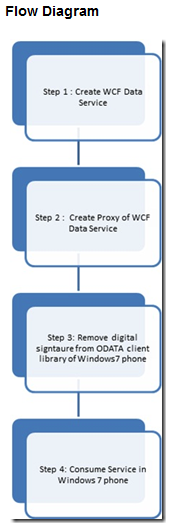

• Dhananjay Kumar posted a Walkthrough on Creating WCF Data Service (ODATA) and Consuming in Windows 7 Mobile application on 7/6/2010 to his Debug Mode … blog:

In this article, I will discuss:

- How to create a WCF Data Service

- How to remove the digital signature on System.Data.Services.Client and add it to a Windows Phone 7 application.

- How to consume the service in a Windows Phone 7 application and display the data.

You can see three video[s] here:

Dhananjay continues with a detailed, step-by-step tutorial.

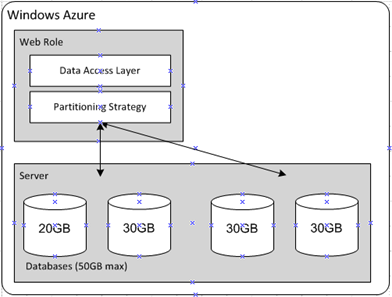

J. D. Meier recommends an SQL Azure Partitioning Pattern in this 7/4/2010 post:

While working with a customer at our Microsoft Executive Briefing Center (Microsoft EBC), one of the things we need[ed] to do was find a simple and effective way to partition SQL Azure.

The problem with SQL Azure is that, depending on your partitioning strategy, you can end up allocating increments that you don’t need and still pay for them. The distinction is that for Azure Storage, you pay for storage used, but with SQL Azure you pay for the allocated increments. See Windows Azure Pricing.

After checking with our colleagues, one of the approaches we liked is to partition by customer ID ranges. By adding this level of indirection, you can optimize or reduce unused increments because you already know the ranges and usage, and you can change the ranges and allocations if you need to. Here is a visual of the approach:

Thanks to our colleague Danny Cohen for the insight, clarification, and suggestions.
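The customer-ID-range indirection described in the excerpt can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the range boundaries, the database names and both helper functions are hypothetical, and nothing here talks to SQL Azure. The point is that routing goes through a small, editable range map rather than a fixed formula.

```python
import bisect

# Hypothetical range map: (first customer ID in the range, database name).
# Entries must stay sorted by the starting ID.
RANGE_MAP = [
    (1, "customers_db_01"),
    (10_000, "customers_db_02"),
    (50_000, "customers_db_03"),
]


def database_for_customer(customer_id, range_map=RANGE_MAP):
    """Return the database whose ID range contains customer_id."""
    starts = [start for start, _ in range_map]
    index = bisect.bisect_right(starts, customer_id) - 1
    if index < 0:
        raise ValueError(f"customer ID {customer_id} is below the first range")
    return range_map[index][1]


def split_range(range_map, new_start, new_database):
    """Return a new map with an extra range beginning at new_start."""
    return sorted(range_map + [(new_start, new_database)])


print(database_for_customer(42))
print(database_for_customer(10_000))
rebalanced = split_range(RANGE_MAP, 25_000, "customers_db_04")
print(database_for_customer(30_000, rebalanced))
```

Because callers only ever ask the map, a range that is filling up can be split (or an underused one merged) by editing the map and moving the affected rows, without changing application code.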

Selcin Turkarslan posted his 23-page, profusely illustrated Getting Started with SQL Azure white paper in *.docx format on 7/2/2010. The paper carries the following overview:

SQL Azure Database is a cloud based relational database service from Microsoft. SQL Azure provides relational database functionality as a utility service. Cloud-based database solutions such as SQL Azure can provide many benefits, including rapid provisioning, cost-effective scalability, high availability, and reduced management overhead.

This document provides guidelines on how to sign up for SQL Azure and how to get started creating SQL Azure servers and databases.

Selcin (@selchin) is a Microsoft programmer/writer.

Scott Hanselman gave an OData with Scott Hanselman presentation to the Phoenix .NET Users group on 5/19/2010, which I missed at the time. It showed up today on Google Alerts for “OData” for unknown reasons:

Scott Guthrie announced New Embedded Database Support with ASP.NET in this 6/30/2010 post:

Earlier this week I blogged about IIS Express, and discussed some of the work we are doing to make ASP.NET development easier from a Web Server perspective.

In today’s blog post I’m going to continue the simplicity theme, and discuss some of the work we are also doing to enable developers to quickly get going with database development. In particular, I’m pleased to announce that we’ve just completed the engineering work that enables Microsoft’s free SQL Server Compact Edition (SQL CE) database to work within ASP.NET applications. This enables a light-weight, easy to use, database option that now works great for ASP.NET web development.

Introducing SQL Server Compact Edition 4

SQL CE is a free, embedded, database engine that enables easy database storage. We will be releasing the first public beta of SQL CE Version 4 very shortly. Version 4 has been designed and tested to work within ASP.NET Web applications.

Works with Existing Data APIs

SQL CE works with existing .NET-based data APIs, and supports a SQL Server compatible query syntax. This means you can use existing data APIs like ADO.NET, as well as use higher-level ORMs like Entity Framework and NHibernate with SQL CE. Pretty much any existing data API that supports the ADO.NET provider model will work with it.

This enables you to use the same data programming skills and data APIs you know today.

No Database Installation Required

SQL CE does not require you to run a setup or install a database server in order to use it. You can now simply copy the SQL CE binaries into the \bin directory of your ASP.NET application, and then your web application can run and use it as a database engine. No setup or extra security permissions are required for it to run. You do not need to have an administrator account on the machine. It just works.

Applications you build can redistribute SQL CE as part of them. Just copy your web application onto any server and it will work.

Database Files are Stored on Disk

SQL CE stores databases as files on disk (within files with a .sdf file extension). You can store SQL CE database files within the \App_Data folder of your ASP.NET Web application - they do not need to be registered in order to use them within your application.

The SQL CE database engine then runs in-memory within your application. When your application shuts down the database is automatically unloaded.

Shared Web Hosting Scenarios Are Now Supported with SQL CE 4

SQL CE 4 can now run in “medium trust” ASP.NET 4 web hosting scenarios – without a hoster having to install anything. Hosters do not need to install SQL CE or do anything to their servers to enable it.

This means you can build an ASP.NET Web application that contains your code, content, and now also a SQL CE database engine and database files – all contained underneath your application directory. You can now deploy an application like this simply by using FTP to copy it up to an inexpensive shared web hosting account – no extra database deployment step or hoster installation required.

SQL CE will then run within your application at the remote host. Because it runs in-memory and saves its files to disk you do not need to pay extra for a SQL Server database.

Scott continues with a description of Visual Studio 2010 and Visual Web Developer 2010 Express Support and concludes:

SQL CE 4 provides an easy, lightweight database option that you’ll now be able to use with ASP.NET applications. It will enable you to get started on projects quickly – without having to install a full database on your local development box. Because it is a compatible subset of the full SQL Server, you write code against it using the same data APIs (ADO.NET, Entity Framework, NHibernate, etc).

You will be able to easily deploy SQL CE based databases to a remote hosting account and use it to run light-usage sites and applications. As your site traffic grows you can then optionally upgrade the database to use SQL Server Express (which is free), SQL Server or SQL Azure – without having to change your code. [Emphasis added.]

We’ll be shipping the first public beta of SQL CE 4 (along with IIS Express and several more cool things I’ll be blogging about shortly) next week.

Hope this helps.

Vincent-Philippe Lauzon posted An overview of Open Data Protocol (OData) to the Code Project on 6/28/2010:

Introduction

In this article I want to simply give an overview of the OData protocol: what it is, how it works, and where it makes sense to use it.

Background

Open Data Protocol (or OData, http://www.odata.org) is an open protocol for sharing data. It is built upon AtomPub, the Atom Publishing Protocol (RFC 5023), itself an extension of the Atom Syndication Format (RFC 4287). OData is a REST (Representational State Transfer) protocol; therefore a simple web browser can view the data exposed through an OData service. OData specs are under Microsoft Open Specification Promise (OSP).

The basic idea behind OData is to use a well-known data format (Atom feed) to expose a list of entities. AtomPub extends the basic Atom format by allowing not only reads but the whole set of CRUD operations. OData extends AtomPub by enabling simple queries over feeds. OData also typically exposes a collection of entity sets with a higher-level grouping feed where you see all the feeds available.
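As a rough illustration of "simple queries over feeds", the Python sketch below assembles an OData query URL from system query options such as $filter, $orderby and $top. The service root, the Products entity set, the property names and the build_odata_query helper are all hypothetical; only the standard library is used and no request is sent.

```python
from urllib.parse import quote, urlencode


def build_odata_query(service_root, entity_set, **options):
    """Build an OData query URL from system query options ($filter, $top, ...)."""
    # OData system query options are prefixed with "$" in the query string.
    params = {f"${name}": str(value) for name, value in options.items()}
    query = urlencode(params, quote_via=quote, safe="$")
    url = f"{service_root.rstrip('/')}/{entity_set}"
    return f"{url}?{query}" if query else url


url = build_odata_query(
    "https://example.com/odata.svc",  # hypothetical service root
    "Products",                       # hypothetical entity set
    filter="Price lt 20",
    orderby="Name",
    top=5,
)
print(url)
```

Because the query is just a URL, the same address can be pasted into a browser to inspect the resulting feed, which matches the point about REST made above.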

Vincent-Philippe continues with downloadable OData AtomPub and C# code samples.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

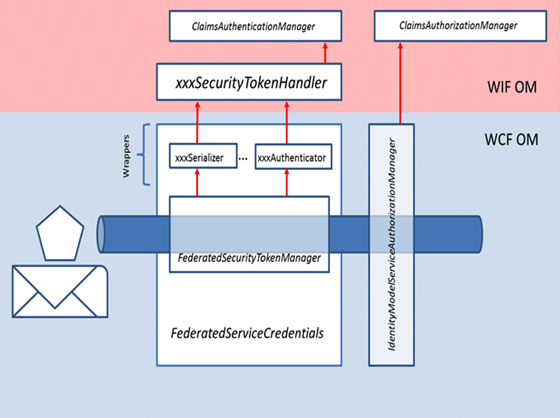

• Vittorio Bertocci (@vibronet) describes How WIF Wedges Itself in the WCF Pipeline by means of a pastel-colored diagram in this 7/6/2010 post:

[I]f it’s true that a picture is worth 10^3 words…

Without the carrot/stick of the book I would have never spent the cycles to put together a visual representation of it, but now that it’s done I am glad I did it. It’s so much simpler to think about how the flow goes, just by looking at the picture… but then again, that’s how I roll :-) Thanks Peter & Brent!

Amazon expects Vibro’s Programming Windows Identity Foundation book from Microsoft Press to be ready to ship on 9/15/2010.

• MSDN Events announced on 7/6/2010 MSDN Webcast: Windows Azure AppFabric: Soup to Nuts (Level 300) for 9/15/2010 at 9:00 AM PDT:

Event Overview

In this webcast, we demonstrate a web service that combines identity management via Active Directory Federation Services (AD FS) and Windows Azure AppFabric Access Control with cross-firewall connectivity via Windows Azure AppFabric Service Bus. We also discuss several possible architectures for services and sites that run both on-premises and in Windows Azure and take advantage of the features of Windows Azure AppFabric. In each case, we explain how the pay-as-you-go pricing model affects each application, and we provide strategies for migrating from an on-premises service-oriented architecture (SOA) to a Service Bus architecture.

Presenter: Geoff Snowman, Mentor, Solid Quality Mentors

Geoff Snowman is a mentor with Solid Quality Mentors, where he teaches cloud computing, Microsoft .NET development, and service-oriented architecture. His role includes helping to create SolidQ's line of Windows Azure and cloud computing courseware. Before joining SolidQ, Geoff worked for Microsoft Corporation in a variety of roles, including presenting Microsoft Developer Network (MSDN) events as a developer evangelist and working extensively with Microsoft BizTalk Server as both a process platform technology specialist and a senior consultant. Geoff has been part of the Mid-Atlantic user-group scene for many years as both a frequent speaker and user-group organizer.

• MSDN Events announced on 7/6/2010 MSDN Webcast: Developing for Windows Azure AppFabric Service Bus (Level 300) for 9/8/2010 at 9:00 AM PDT:

Event Overview: In this webcast, we drill into some technical aspects of the Windows Azure AppFabric Service Bus, including direct connections, message security, and the service registry. Although the simplest way to use Service Bus is as a message relay, you can improve performance by directly connecting between the client and the service if the firewalls permit. We review the steps that allow Service Bus to automatically promote your connections to direct connections to improve efficiency, share techniques for encrypting and protecting messages passing through the service bus, and take a look at ways to use the Service Bus as a registry to make your services discoverable.

Presenter: Geoff Snowman, Mentor, Solid Quality Mentors

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• David Aiken announced on 7/6/2010 the availability of the Windows Azure Architecture Guide – Part 1:

The Patterns & Practices team has just released the first part of the Windows Azure Architecture guide.

This book is the first volume in a planned series about the Windows® Azure™ platform and focuses on a migration scenario. It introduces a fictitious company named Adatum which step-by-step modifies its expense tracking and reimbursement system, aExpense, so that it can be deployed to Windows Azure. Each chapter explores different considerations: authentication and authorization, data access, session management, deployment, development life cycle and cost analysis.

You can view the guide online at http://msdn.microsoft.com/en-us/library/ff728592.aspx.

• Kevin Kell explained Worker Role Communication in Windows Azure – Part 1 in this 6/28/2010 post to Learning Tree’s Cloud Computing blog:

In an earlier post we talked about the “Asynchronous Web/Worker Role Pattern” in Windows Azure. In this pattern the web roles are “client facing” and expose an HTTP endpoint. In Azure a web role is essentially a virtual machine running IIS, while a worker role is a background process that is usually not visible to the outside world. The web roles communicate with the worker roles via asynchronous messages passed through a queue. Even though it is relatively simple, this basic architecture is at the heart of many highly scalable Azure applications.
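The shape of that web/worker pattern can be sketched in plain Python. This is not Azure code: an in-process queue.Queue stands in for the Azure queue, a thread stands in for the worker role, and every name below is hypothetical. It only shows the decoupling the paragraph describes, where the client-facing side enqueues a message and returns while the background side drains the queue.

```python
import queue
import threading

work_queue = queue.Queue()  # stands in for an Azure queue
results = {}                # stands in for storage the web role can read later


def web_role_submit(job_id, payload):
    """Client-facing side: enqueue a message and return immediately."""
    work_queue.put((job_id, payload))


def worker_role_loop():
    """Background side: process messages until a None sentinel arrives."""
    while True:
        message = work_queue.get()
        if message is None:
            work_queue.task_done()
            break
        job_id, payload = message
        results[job_id] = payload.upper()  # placeholder for real work
        work_queue.task_done()


worker = threading.Thread(target=worker_role_loop)
worker.start()
web_role_submit("job-1", "resize image")
web_role_submit("job-2", "send email")
work_queue.put(None)  # tell the worker to stop
work_queue.join()     # wait until every message has been processed
worker.join()
print(results)
```

Because the two sides share only the queue, either one can be scaled out independently, which is the property that makes the pattern attractive.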

There are, however, other ways in which roles can communicate internally or externally. This allows for considerable flexibility when implementing Azure-based solutions. A worker role, for example, could be directly exposed to the outside world (through a load balancer, of course!) and communicate over TCP (or another protocol). This might be useful in a solution which required, for some reason, a highly customized application server exposed over the Internet. It is also possible for worker roles to communicate directly with other worker roles internally. Often the internal communication is done over TCP but other protocols can be used internally as well.

In the first of this two-part series we will explore the basics of exposing an external (i.e. available over the Internet) endpoint to a worker role over TCP. We will use this to implement a simple client/server version of a “math service”. Note that this is not something you would ever necessarily want to do in practice! It is used here simply as an example of how to enable the communication. In the real world the “math service” could be replaced by whatever custom application service was required. …
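For readers who want a feel for what a TCP “math service” looks like before reading the tutorial, here is a minimal local sketch in Python. It is not the tutorial’s code and involves no Azure worker role or load balancer: the one-line text protocol ("add 2 3"), the handler class and the ask helper are all assumptions made for illustration, and the server listens only on the loopback interface.

```python
import socket
import socketserver
import threading


class MathHandler(socketserver.StreamRequestHandler):
    """Handle one request line of the form 'add 2 3' or 'mul 4 5'."""

    def handle(self):
        parts = self.rfile.readline().decode("utf-8").split()
        try:
            op, a, b = parts[0], float(parts[1]), float(parts[2])
            result = {"add": a + b, "mul": a * b}[op]
            reply = f"{result}\n"
        except (IndexError, KeyError, ValueError):
            reply = "error\n"
        self.wfile.write(reply.encode("utf-8"))


def ask(address, request):
    """Client side: send one request line and return the server's reply."""
    with socket.create_connection(address) as conn:
        conn.sendall((request + "\n").encode("utf-8"))
        return conn.makefile("r", encoding="utf-8").readline().strip()


# Port 0 asks the operating system for any free local port.
server = socketserver.TCPServer(("127.0.0.1", 0), MathHandler)
thread = threading.Thread(target=server.serve_forever, daemon=True)
thread.start()

answer_add = ask(server.server_address, "add 2 3")
answer_bad = ask(server.server_address, "pow 2 3")
server.shutdown()
server.server_close()
print(answer_add, answer_bad)
```

In a hosted deployment the listening address and port would come from the platform’s endpoint configuration rather than being chosen locally as they are here.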

Kevin continues with his tutorial.

Toddy Mladenov explains Allowing More Than One Developer to Manage Services in Windows Azure in this 7/5/2010 post:

This is more of a note to myself, but you can also use it as a suggestion for how to delegate Windows Azure service management tasks without compromising your Live ID.

At the moment the Windows Azure Developer Portal limits the access for managing services to only one LiveID – the System Administrator’s one. This limitation applies only to the Windows Azure portal – the SQL Azure and Windows Azure platform AppFabric portals allow access using both the Account Administrator’s and the System Administrator’s LiveIDs.

If you don’t want to share Live ID information between developers you should consider using the Service Management CmdLets for managing the services in Windows Azure. You are able to upload up to 5 (five) Management API certificates through the portal, which means that you can allow up to 6 (six) developers to manage your services in Windows Azure – 5 through the Management APIs using the CmdLets and 1 through the portal using the UI.

For details on how to configure the Windows Azure Service Management CmdLets, follow the How-To Guide.

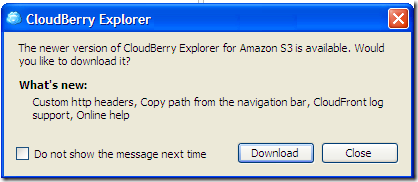

Nadezhda Lukyanova reports that Cloudberry Labs is Removing expiration from the freeware version of CloudBerry Explorer 2.1 and later:

As always we are adding features that our customers are requesting. We got quite a lot of feedback from our customers on our policy to expire the freeware version every three months. Even though we just wanted our customers to stay on the most recent version of the software, many customers found it inconvenient and even distracting.

As of version 2.1 the freeware version doesn’t expire and you can use it for as long as you like (until, for example, the AWS S3 console becomes mature enough that you don’t need a 3rd-party product any more).

However, we would like to take this opportunity to tell you that it is highly recommended that you regularly upgrade to the most recent version of the software. The automated check-for-updates feature will tell you when a new version becomes available. Believe it or not, with each version we fix some 100 issues, besides adding new exciting functionality to the product, and it is well worth upgrading.

CoreMotives reported Microsoft Publishes CoreMotives Case Study on 7/4/2010:

Microsoft selected CoreMotives as a case study of advanced use of cloud-based technology. Dynamics CRM and Windows Azure are at the core of our marketing automation suite. They enable instant access to powerful features that generate sales leads and marketing campaigns.

Microsoft’s case study on CoreMotives: Read it here

CoreMotives selected Windows Azure as its architecture platform because it provides a scalable, secure and powerful method of delivering software solutions. Windows Azure is a “cloud-based” infrastructure with processing and storage capabilities.

Our solutions require nothing to maintain, or support over time – thus lower costs. With zero-footprint, you can start immediately, regardless of your Microsoft CRM flavor: On Premise, Partner Hosted or Microsoft CRM Online.

Microsoft data centers meet compliance standards and other key accreditations for information security, such as the International Organization for Standardization (ISO) 27001:2005, the Sarbanes-Oxley Act, and the Statement on Auditing Standards (SAS) 70 Type II.

See Jay Heiser’s The SAS 70 Charade post of 7/5/2010 to the Gartner blogs in the Cloud Security and Governance section below.

<Return to section navigation list>

Windows Azure Infrastructure

• Cory Fowler (@SyntaxC4) wrote this You asked, Microsoft Delivered. Free Time on Windows Azure post on 7/6/2010:

If you were one of the developers at one of my talks who was tempted to try Windows Azure, but didn’t want to pay to give the platform a shot, here is your chance. Previously, the only way to get some time on Azure was to have an MSDN Subscription, but Microsoft noticed that a lot of their audience that wanted to try out the Azure Platform didn’t have a subscription.

Microsoft has announced the Windows Azure Introductory Special which offers 25 free hours per month on a Small instance, a Web Edition SQL Azure Database, 2 Service Bus connections to AppFabric, and 500MB of Transfers coming to, and going from the Cloud. This is a fairly decent starting point for someone trying to launch an existing application to Windows Azure.

How do I get started?

If you just came across my blog there are a few posts that you may want to check out to get up to speed on Windows Azure:

- My Presentations from PrairieDevCon

- How to get started with Windows Azure

- Synchronizing a local database to the Cloud using SQL Azure Sync Framework

- Setting up Windows Azure Development Storage

You can also get some tools and a free IDE to help you deploy your project to the cloud.

Where do I sign up?

Head on over to the Windows Azure Offers page, and click the Buy Now button under the Introductory Special.

What do I do next?

Head out to TechDays 2010 for some Training on Windows Azure.

Microsoft is getting closer to parity with Google App Engine in the cost of getting started with a hosted PaaS offering for students and independent developers. See Randy Bias stokes the IaaS vs. PaaS competitive fire with Rumor Mill: Google EC2 Competitor Coming in 2010? of 7/5/2010 in the Other Cloud Computing Platforms and Services section below.

Brocade Communications Systems reported European Businesses Taking Meaningful Strides Toward Cloud Computing as relayed by this 7/5/2010 post to the HPC in the Cloud blog:

GENEVA, July 5, 2010 -- European enterprises are beginning to embrace the business opportunities offered by virtualizing assets and accessing applications through the cloud, according to new research, commissioned by Brocade (NASDAQ: BRCD). The research shows that 60 percent of enterprises expect to have started the planning and migration to a distributed – or cloud – computing model within the next two years. Key business drivers for doing so are to reduce cost (30 percent), improve business efficiency (21 percent) and enhance business agility (16 percent).

The findings appear to confirm recent research by analyst firm IDC, which identified that cloud IT services are currently worth £10.7bn globally, a figure that is estimated to grow to around £27bn by 2013.

For enterprise organizations, investment in the majority of cases is in the development of a private cloud infrastructure due, in part, to concerns over security. Over a third of respondents cited security as the most significant barrier to cloud adoption, closely followed by the complexities of virtualizing data centers, network infrastructure and bandwidth. The research also analyzed the small-to-medium enterprise (SME) space, and while enterprises are embracing the cloud and the business advantages it brings, SMEs appear to be slightly slower to adopt with only 42 percent predicting a move to the cloud within the next two years; unsurprisingly, 63 percent of these plan to opt for a hosted solution.

Other key research findings include:

- More than a quarter of large organizations are planning to migrate to a cloud model within the next two years; 11 percent within one year

- A quarter of organizations stated that the ability to consolidate the number of data centers was also a critical driver

- The availability of bandwidth was also a deciding factor amongst 14 percent of respondents

The findings reinforce Brocade’s vision that data centers and networks will evolve to a highly virtualized, services-on-demand state enabled through the cloud. Brocade recently outlined its vision, called Brocade One, at its annual Technology Day. Brocade One is a unifying network architecture and strategy that enables customers to simplify the complexity of virtualizing their applications. By removing network layers, simplifying management and protecting existing technology investments, Brocade One helps customers migrate to a world where information and services are available anywhere in the cloud.

“As data centers become distributed, the network infrastructure must take on the characteristics of a data center. For the cloud to achieve its true promise, the network needs to deliver high performance, scalability and security,” said Alberto Soto, Vice President, EMEA, at Brocade. “What our research tells us is that companies are now recognizing the profound positive economic implications of adopting cloud solutions and are ready to make the journey of adoption, but only with a sound infrastructure in place.”

John Soat posted Five Predictions Concerning Cloud Computing to Information Week’s Plug into the Cloud blog on 6/30/2010:

It may be presumptuous to make predictions about cloud computing, since the cloud has such little history and is almost all about future potential. But here are a few prognostications, based on trends that have established the current cloud-computing paradigm.

- All applications will move into the cloud. There's been some discussion over whether certain applications -- those that are compute or graphics intensive, for instance -- may not be suited to the SaaS model. That controversy will fade as more applications come online. For example, Autodesk, the leading computer-aided design (CAD) software vendor, is already moving features and capabilities into the cloud.

- Platform-as-a-service (PaaS) will supplant software-as-a-service (SaaS) as the most important form of cloud computing for small and, especially, midsize businesses. PaaS is well suited to organizations with limited IT resources, in particular limited IT staff, that still want to design, implement, and support custom applications. (Check out the example of 20/20 Companies in this feature story.)

- Private clouds will be the dominant form of cloud computing in large enterprises for the next couple of years. The public cloud's gating factors -- security, performance, compliance -- will be enough to hold back its significant, widespread advance into the enterprise while internal IT organizations catch up in terms of virtualization, provisioning, application development, and service automation.

- Hybrid clouds eventually will dominate enterprise IT architectures. The advantages inherent in a technology architecture that combines specific internal capabilities with low-cost, flexible external resources is too compelling to ignore. This argues well for IT vendors with comprehensive portfolios of products/services. Microsoft, especially, will emerge as a major force in this new terrain.

- The term "cloud computing" will drop off the corporate lexicon. Once the cloud is firmly ensconced in the IT environment, there will be no need to make such a distinction.

I don't see these as long-term developments but more in the two-to-three-year range. What do you think? Have I missed anything?

<Return to section navigation list>

Cloud Security and Governance

• Paul Rubens posted a brief but useful Security Questions for Cloud Computing Providers article to Datamation.com on 7/6/2010:

There's also no obvious reason to assume that any service provider will be more able to provide good cloud security, and that means you will need to carry out due diligence, work out what the cloud security requirements are for your data, and check that a given cloud computing service provider can meet those requirements, according to Martin Blackhurst, a security specialist at UK-based consultancy Redstone Managed Solutions.

Although specific cloud security requirements are likely to vary from organization to organization, Blackhurst recommends, at the very least, asking cloud computing service providers under consideration the following questions. They can be broken down as relating to people, data, applications and infrastructure.

- Where will my data be stored?

- What controls do you have in place to ensure my sensitive business data is not leaving the virtual walls of your business?

- What are the borders of responsibility?

- How do you ensure my applications are not susceptible to emerging application security threats?

- How do you detect an application is being attacked in real-time, and how is that reported?

- How do you implement proactive controls over access to my applications -- and how can you prove to me that they are effective?

Scott Clark continued his Behind the Cloud blog with a Cloud is History: The Sum of Trust post of 7/5/2010:

To continue where we left off with the last blog, this time we are focusing the discussion around trust. In considering cloud, this is probably the largest barrier we will encounter.

If we look at history, the issues associated with trusting someone else to perform what we view as a critical element of our business have been faced and successfully addressed in the past. Semiconductor companies had to have the entire process of manufacturing under their direct oversight and control because portions of that process were considered business differentiating and proprietary, and close coupling between design process and manufacturing process was required for successful ASIC development (lots of iterative, back and forth process). As time marched on, capacity needs increased, complexity climbed, the cost increased with each of those dynamics, creating an ever higher barrier to entry for maintaining existing or creating new fabrication facilities. In the mid-1980s, we witnessed the birth of the first foundry, with TSMC coming onto the scene to create a differentiated business model (Fabless Semiconductor), where engineering companies could focus on just the process of design, and then hand their designs off to TSMC to be manufactured. The Fabless Semiconductor Industry is a $50B market today, and growing.

So, are the issues we face with datacenters today any different? Not really, just a slightly different view of the same picture. The dynamics are the same: a non-linear cost increase due to capacity and complexity increases is the driver for re-evaluating the current position. The function is considered critical, and sometimes differentiating and/or proprietary to the business, and is therefore internally maintained at present. And finally, the function deals directly with the core product of the company, therefore security is a paramount concern. What we witnessed with the fabrication facilities is that many companies were able to realize the cost benefits of outsourcing that function without damaging the business, so we should be able to follow that model to realize the cost benefits that cloud computing offers with respect to the datacenter. And we even have a recipe for success to look to and use as a template for what to do and how to do it.

What customers of cloud will be looking for from service providers is multi-faceted:

- Budget control – making sure they can continue to do the right thing for their company from a cost perspective and continue to come up with creative ways to keep budgets under control. This includes making sure they do not get locked into exclusive relationships, so they need to make sure that there are multiple vendor options so that there can be competition. In the same light, they need to make sure that the solution they consume is standards based, so that moving to another provider is simple, straight forward, and not costly.

- Do it my way – points to customer intimacy. The consumer company must understand the solution they are leveraging and the supplier must provide the solution in such a way that it makes sense to the customer. This sounds obvious, but in many cases, companies have been held hostage even by their own internal IT organizations through confusing terminology, overly complex descriptions of solutions, and territorial behavior. The customer should understand the solution on their terms, which implies that the service provider must intimately understand the customer’s core business. Customers should get the services and solution they need, which is something specific to their business, not something bootstrapped from another industry or something built for a different or generic purpose. And it is not sufficient to have really smart technology people on staff, and have the customer tell the service provider exactly what they need so the supplier can do the right thing – many times the customer doesn’t know what they need, they just want it to work right. That is why this needs to be domain specific, performed by domain experts in the customer’s space.

- Honesty – do I believe you? The customer needs to have faith and confidence that the supplier has the best interest of the consumer as a driver. Understanding intent and understanding positive behavioral characteristics as compared to negative ones. Any competitive or adversarial behavior will be the tip that trust should be called into question.

- Focus on my business, not yours (counter-intuitive concept). This is really the crux of the issue. If the customer can really believe that the supplier is looking out for customer interests first, and not only trying to tell the customer whatever they think they want to hear, only then will the customer allow the supplier to absorb responsibility from them for their infrastructure to help make them successful. This is key because if the customer has to continue to drive success and own all the responsibility, then nothing has really changed, and it is probably easier for the customer to continue keeping all the resource in-house where they have much more direct control over hire/fire, retention, resource caliber, etc. …

Scott continues his essay with what behaviors cloud service providers need to demonstrate to retain their customers’ trust.

Jay Heiser posted The SAS 70 Charade to the Gartner blogs on 7/5/2010:

SAS 70 is a) not a certification, b) not a standard, and c) isn’t meant to be applied the way it is being applied now. To be fair, all service providers are under huge customer pressure to provide SAS 70, but instead of explaining their security, continuity, and recovery capabilities in more appropriate terms, most vendors make the unfortunate decision to exaggerate the significance of their having undergone a SAS 70 evaluation.

Why should a potential customer accept SAS 70 as being proof of anything? They don’t know what was evaluated, they don’t know who evaluated it, or what form the evaluation took. Even if the evaluation did look at design and build considerations, it was almost certainly a very small part of the overall assessment, and do you really want an accounting firm evaluating security architectures and encryption implementations?

Jay is a Gartner research vice president specializing in the areas of IT risk management and compliance, security policy and organization, forensics, and investigation. Current research areas include cloud and SaaS computing risk and control, technologies and processes for the secure sharing of data.

Nicole Hemsoth asks Renting HPC: What's Cloud Got to Do with It? in this 7/5/2010 post that begins:

The following is a transcript of a public release of a discussion presented by Scott Charney, Corporate Vice President for Trustworthy Computing at Microsoft as given to members of the United States government as they debate the inherent value and weaknesses of the cloud computing model. This coincides nicely with a new post from Scott Clark "Cloud is History: The Sum of Trust" [See above article.]

-----

Thank you for inviting me here today to discuss the federal government's use of cloud computing.

My name is Scott Charney, and I am the Corporate Vice President for Trustworthy Computing at Microsoft Corporation. I also serve as one of four Co-Chairs of the Center for Strategic and International Studies (CSIS) Commission on Cybersecurity for the 44th Presidency. Prior to joining Microsoft, I was Chief of the Computer Crime and Intellectual Property Section in the Criminal Division of the United States (U.S.) Department of Justice. I was involved in nearly every major hacker prosecution in the U.S. from 1991 to 1999; worked on legislative initiatives, such as the National Information Infrastructure Protection Act that was enacted in 1996; and chaired the G8 Subgroup on High Tech Crime from its inception in 1996 until I left government service in 1999.

I currently lead Microsoft's Trustworthy Computing (TWC) group, which is responsible for ensuring that Microsoft provides a secure, private, and reliable computing experience for every computer user. Among other things, the TWC group oversees the implementation of the Security Development Lifecycle (which also includes privacy standards); investigates vulnerabilities; provides security updates through the Microsoft Security Response Center; and incorporates lessons learned to mitigate future attacks.

Microsoft plays a unique role in the cyber ecosystem by providing the software and services that support hundreds of millions of computer systems worldwide. Windows-based software is the most widely deployed platform in the world, helping consumers, enterprises, and governments to achieve their personal, business, and governance goals. Also, as Steve Ballmer, our Chief Executive Officer, stated, “we're all in” when it comes to the cloud. We already offer a host of consumer and business cloud services, including a wide array of collaboration and communications software.

We operate one of the largest online e-mail systems, with more than 360 million active Hotmail accounts in more than 30 countries/regions around the world. Microsoft's Windows Update Service provides software updates to over 600 million computers globally, and our Malicious Software Removal Tool cleans more than 450 million computers each month on average. We are a global information technology (IT) leader whose scale and experience shapes technology innovations, helps us recognize and respond to ever changing cyber threats, and allows us to describe the unique challenges facing the government as it moves to the cloud.

Cloud computing creates new opportunities for government, enterprises, and citizens, but also presents new security, privacy, and reliability challenges when assigning functional responsibility (e.g., who must maintain controls) and legal accountability (e.g., who is legally accountable if those controls fail). As a general rule, it is important that responsibility and accountability remain aligned; bifurcation creates a moral hazard and a legal risk because a “responsible” party may not bear the consequences for its own actions (or inaction) and the correct behavior will not be incentivized. With the need for alignment in mind, I will, throughout the rest of my testimony, use the word “responsibility” to reflect both responsibility and legal accountability. It must also be remembered that there is another type of accountability: political accountability. Citizens have certain expectations of governments (much like customers and shareholders have certain expectations of businesses) that may exceed any formally defined legal accountability.

As a cloud provider, Microsoft is responding to security, privacy, and reliability challenges in various ways, including through its software development process, service delivery, operations, and support. In my testimony today, I will (1) characterize the cloud and describe how cloud computing impacts the responsibility of the government and cloud providers; (2) discuss the responsibilities cloud computing providers and government must fulfill individually and together; and (3) examine the importance of trust and identity to cloud computing.

New Computing Models ("The Cloud") Create New Opportunities and Risks

See also the Voices of Innovation’s Creating Trust for the Government Cloud post of 7/2/2010.

<Return to section navigation list>

Cloud Computing Events

• Geva Perry reported on 7/6/2010 that he wants speakers and panelists for the QCon San Francisco 2010 Cloud Track to be held 11/1 through 11/5/2010 at the Westin San Francisco Market Street hotel:

This year I will be hosting the QCon San Francisco Cloud Track. If you haven't attended QCon before, it's a high quality conference that takes place twice a year in London and San Francisco. The attendees are usually senior software development and IT executives, software architects and senior developers from enterprises and startups. The conferences are organized by the fine folks at InfoQ.

The theme of the cloud track this year will be Real-Life Cloud Architectures, with the focus being on production apps by larger organizations in the cloud. There will be five sessions all of which will take place on Friday, November 5, 2010.

The first presentation will be given by yours truly on selecting the right cloud provider (more details to come). Another presentation will be given by Randy Bias (@randybias) of Cloudscaling on the topic of building a private cloud. I don't want to give it away but Randy has some controversial views on the subject (and a lot of experience), so it's sure to be an interesting session.

Informal Call for Speakers

Although I have a few candidates for the other sessions, I'm still looking for speakers and panelists. In particular, I am looking for two things: a company that has migrated an existing production application to a public cloud and a company that has implemented a hybrid cloud application (meaning it runs both on-premise and in the cloud).

If you would like to suggest yourself or someone else, please send me an email (if you have my address), leave a comment below, or contact me through Twitter or LinkedIn.

Hope to see you there!

• Pat Romanski reported “At Cloud Expo East, Microsoft Dist[inguished] Engineer Dr. Yousef Khalidi discussed Microsoft's cloud computing vision & investments” in his Microsoft's Cloud Services Approach post of 7/6/2010:

Twenty million businesses and over a billion people use Microsoft cloud services, and many of the Microsoft products that we know and trust are available in the cloud. Like SQL. Office. And Windows.

In his general session at Cloud Expo East, Yousef Khalidi, Distinguished Engineer and lead architect for Windows Azure, discussed Microsoft's cloud computing vision and investments. He also outlined Microsoft's cloud strategy and portfolio, then discussed the benefits to customers and partners such as business agility, and the array of choice from on-premise into the cloud. In addition, Khalidi detailed the Windows Azure platform pillars and how they fit into Microsoft's cloud computing initiatives.

Click Here to View This Session Now!

About the Speaker

Dr. Yousef Khalidi is a Distinguished Engineer and Lead Architect of Windows Azure at Microsoft. Before Azure, Khalidi led an advanced development team in Windows that tackled a number of related operating system areas, including application model, resource management, and isolation. He also served as a member of the Windows Core Architecture group. He has a PhD and a Master of Science in Information and Computer Science from Georgia Institute of Technology (Georgia Tech) in Atlanta, Georgia.

See • MSDN Events announced on 7/6/2010 MSDN Webcast: Windows Azure AppFabric: Soup to Nuts (Level 300) for 9/15/2010 at 9:00 AM PDT in the AppFabric: Access Control and Service Bus section.

See • MSDN Events announced on 7/6/2010 MSDN Webcast: Developing for Windows Azure AppFabric Service Bus (Level 300) for 9/8/2010 at 9:00 AM PDT in the AppFabric: Access Control and Service Bus section.

The Microsoft Developer Network launched a new MSDN Architecture Center site on 7/5/2010. The site includes a rogue’s gallery of Architect Evangelists, which includes many contributors to this site, and a list of Architecture-related events sorted by state:

Currently, there’s not much original content on the site.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

• James Governor analyzes EMC’s Greenplum purchase (see HPC in the Cloud article below) in his EMC Big Data Play Continues: Greenplum Acquisition post of 7/7/2010 to the MonkChips blog:

I wrote recently about VMware’s emerging Data Management play after the announcement the firm was hiring Redis lead developer Salvatore Sanfilippo[:]

While [CEO Paul] Maritz may say VMware isn’t getting into the database business, he means not the relational database market. The fact is application development has been dominated by relational- Oracle on distributed, IBM on the mainframe – models. Cloud apps are changing that. As alternative data stores become natural targets for new application workloads VMware does indeed plan to become a database player, or NoSQL player, or data store, or whatever you want to call it.

We have been forcing round holes into square pegs with object/relational mapping for years, but the approach is breaking down. Tools and datastores are becoming heterodox, something RedMonk has heralded for years.

Now comes another interesting piece of the puzzle. EMC is acquiring Greenplum – and building a new division around the business, dubbed Data Computing Product Division. While Redis is a “NoSQL” data store, Greenplum represents a massively parallel processing architecture designed to take advantage of the new multicore architectures with pots of RAM: it’s designed to process data into chunks for parallel processing across these cores. While Greenplum has a somewhat traditional “datawarehouse” play – it also supports MapReduce processing. EMC will be competing with firms like Hadoop packager Cloudera [client] and its partners such as IBM [client]. Greenplum customers include LinkedIn, which uses the system to support its new “People You May Know” function.

There is a grand convergence beginning between NoSQL and distributed cache systems (see Mike Gualtieri’s Elastic Cache piece). It seems EMC plans to be a driver, not a fast follower. The Hadoop wave is just about ready to crash onto the enterprise, driven by the likes of EMC and IBM. Chuck Hollis, for example, points out Greenplum would make a great pre-packaged component VBlock for VMware/EMC/Cisco’s VCE alliance – aimed at customers building private clouds. Of course Cisco is likely to make its own Big Data play anytime soon… That’s the thing with emergent, convergent markets- they sure make partnering hard. But for the customer the cost of analysing some types of data is set to fall by an order of magnitude, while query performance improves by an order of magnitude. Things are getting very interesting indeed.

• David Linthicum asserts “EMC's shutdown of the Atmos online cloud storage service is a good example of a provider cannibalizing its own market” as a preface to his Why some vendors regret becoming cloud providers post of 7/6/2010 to InfoWorld’s Cloud Computing blog:

I was not surprised to hear that EMC is shutting down Atmos, its cloud storage service. This was a case where moving to the cloud was good for technology but perhaps bad for business.

As Teri McClure, an analyst with Enterprise Strategy Group, puts it, "EMC chose to shutter its Atmos Online service to avoid competing with its software customers." Fair enough, but I'm not sure why EMC did not see that issue from the get-go.

The larger issue here is that large enterprise software and hardware companies, like EMC, that move into cloud computing could find themselves cannibalizing their existing market. Thus, they might end up selling cloud services to replace their more lucrative hardware and software solutions or -- in the case of EMC -- competing with their partners.

I suspect we'll be seeing more cloud pullbacks, considering the number of large enterprise software companies -- including Microsoft, Oracle, and IBM -- rushing into the cloud computing space. For instance, if you're selling cloud storage at 15 cents per gigabyte per month, but your customers end up spending $1 per gigabyte per month for your storage box offering, how do you expect your customers to react?

Consider this scenario: A customer adds a terabyte of cloud storage from a large software and hardware vendor now offering cloud storage, a few days before the sales rep comes in to pitch a DASD upgrade. But the upgrade does not happen. As a result, those in the traditional hardware and software marketing and sales side of the vendor soon learn that while it's good to have "cloud computing" in the sales pitch, they did not understand how it would affect the traditional business -- and their paychecks.
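To put numbers on Linthicum's scenario, here is a rough Python sketch of the monthly bill for the terabyte he describes. The two per-gigabyte rates come from his post; the function name and the choice of 1 TB = 1,024 GB are assumptions made for illustration only.

```python
# Monthly cost comparison for the storage scenario described above.
# Rates are the ones quoted in the post; 1 TB = 1,024 GB is an assumption.

CLOUD_RATE_PER_GB = 0.15  # dollars per GB per month (cloud storage)
BOX_RATE_PER_GB = 1.00    # dollars per GB per month (storage box offering)
GB_PER_TB = 1024


def monthly_cost(terabytes: float, rate_per_gb: float) -> float:
    """Return the monthly cost in dollars for the given capacity and rate."""
    return terabytes * GB_PER_TB * rate_per_gb


cloud = monthly_cost(1, CLOUD_RATE_PER_GB)
box = monthly_cost(1, BOX_RATE_PER_GB)
print(f"Cloud: ${cloud:,.2f}/month, box: ${box:,.2f}/month")
print(f"The box offering costs about {box / cloud:.1f}x as much")
```

Under these assumptions the hardware route costs between six and seven times as much per month, which is the gap the sales rep in the scenario has to explain.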

• The HPC in the Cloud blog reprinted the EMC to Acquire Greenplum press release on 7/6/2010:

EMC Corporation (NYSE: EMC), the world's leading provider of information infrastructure solutions, today announced it has signed a definitive agreement to acquire California-based Greenplum, Inc. Greenplum is a privately-held, fast-growing provider of disruptive data warehousing technology, a key enabler of "big data" clouds and self-service analytics. Upon completion of the acquisition, Greenplum will form the foundation of a new data computing product division within EMC's Information Infrastructure business.

Today, new forms of data—massive amounts of it—are emerging more quickly than ever before thanks to always-on networks, the Web, a flood of consumer content, surveillance systems, sensors and the like. In a recent report, IDC predicted that over the next 10 years the amount of digital data created annually will grow 44 fold. Companies are increasingly turning to new architectures and new tools to help make sense of this "big data" phenomenon.

Regarded by industry experts as a visionary leader, Greenplum utilizes a "shared-nothing" massively parallel processing (MPP) architecture that has been designed from the ground up for analytical processing using virtualized x86 infrastructure. Greenplum is capable of delivering 10 to 100 times the performance of traditional database software at a dramatically lower cost. Data-driven businesses around the world, including NASDAQ OMX, NYSE Euronext, Skype, Equifax, T-Mobile and Fox Interactive Media have adopted Greenplum for sophisticated, high-performance data analytics.

“Big data” is almost as hype-infested as “cloud computing.”
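For a sense of scale, the IDC forecast quoted in the press release (digital data created annually growing 44-fold over 10 years) can be restated as a yearly rate. The Python sketch below assumes smooth compound growth, which is a simplification the release does not claim; the function name is hypothetical.

```python
# Convert a cumulative growth multiple into an equivalent annual rate.
# The 44x and 10-year figures are from the press release; assuming smooth
# compound growth is a simplification for illustration.


def annual_growth_rate(multiple: float, years: int) -> float:
    """Return the constant yearly growth rate that compounds to `multiple`."""
    return multiple ** (1 / years) - 1


rate = annual_growth_rate(44, 10)
print(f"Implied annual growth: {rate:.1%}")
```

Under that assumption the implied rate is a little under 50 percent per year, compounding to the 44-fold total.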

Randy Bias stokes the IaaS vs. PaaS competitive fire with Rumor Mill: Google EC2 Competitor Coming in 2010? of 7/5/2010:

I’ve heard from a somewhat reliable source that Google is working on their Amazon EC2 competitor. Yes, some kind of on-demand virtual servers. I would have been the last person to guess that Google would take this direction[1], but you have to admit it makes a certain sense from their perspective. Consider:

- Amazon’s EC2 is clearly generating Real Revenue (TM) and could be at 500-750M in revenue for 2010

- Google has a massive global footprint and is north of one million servers

- The support structure for these servers includes a huge investment in datacenters, networking, and related

- The Googleplex houses an extremely large number of talented engineers in relevant areas: networking, storage, Linux kernel, server automation, etc.

- Google Storage recently went into BETA and is accepting developer signups

This last is perhaps one of the more telling signs. As you may be aware, Amazon’s Simple Storage Service (S3) pre-dates Amazon’s Elastic Compute Cloud (EC2). When Amazon launched in Europe they first deployed S3 followed by EC2. The same happened with their Asia/Pac deployment.

Amazon has built AWS in such a way that all of the services are synergistic, but in particular, EC2 is dependent on S3 as a persistent storage system of record. EC2 AMIs originate from and are stored in S3, it’s the long term backing store for Elastic Block Storage (EBS) and EBS snapshots, and it’s safe to assume that many other kinds of critical data that AWS relies on are stored there.

Would Google take a different approach? It’s doubtful. Amazon’s S3 is built to be a highly scalable storage platform[2]. Google’s own GoogleFS and BigTable serve similar purposes. It’s certain that Google would use related design principles and hence we could see Google Storage as a prelude to a Google on-demand virtual server service (Google Servers???).

Combined with the rumor I heard from a reasonably informed source I think we can look forward to an EC2 competitor, hopefully this year.

What I want to bring to folks’ attention here is that if another credible heavyweight enters into this market it will have a tremendous impact in further driving the utilitization of cloud services. In the medium term it will also threaten hosting providers and ‘enterprise clouds‘.

Why? I think what many hosting providers fail to understand is that Amazon and Google, particularly if fueled by direct competition, must grow up into the enterprise space. Just as in the Innovator’s Dilemma, they will eventually provide most of the features of any ‘enterprise’ cloud, which means that if you aren’t building to be competitive with Amazon and Google, you aren’t in the public cloud game.

Much more detail on this in a future posting.

[1] My bet would have been that Google put more weight behind PaaS solutions like Google App Engine (GAE) and related, which are more ‘google-y’.

I’m looking forward to Randy’s “future posting.”

Maureen O’Gara asserts “The infrastructure Planet is pitching initially at hosting companies & resellers is distinctly enterprise-class & purpose-built” as a preface to her The Planet Undercuts Amazon post of 7/5/2010:

The Planet, which, come to find out, is suitably named since it's the largest of the privately held U.S. hosters with eight data centers and 50,000-odd servers playing host to 18,000 SMB companies and 12 million web sites worldwide, has a new Server Cloud that undercuts Amazon.

Amazon jacks up its bandwidth charges 700%-800% over cost, Planet's practically scandalized senior product manager for cloud services Carl Meadows tsk-tsks.

The Planet is offering a far more reasonable prix fixe menu compared to Amazon's à la carte prices. Its bandwidth price alone is more like eight cents a gig to Amazon's 22 cents.

And the infrastructure Planet is pitching initially at hosting companies and hosting resellers is distinctly enterprise-class and purpose-built for web-based businesses: Sun SANs, rack-mounted dual-Nehalem-based Dell servers, a Cisco and Juniper powered network. None of your shoddy local disks, it says, that can leave customers vulnerable to hardware failures, downtime and data loss.

But, since it's going for lower cost, it's using the KVM hypervisor for virtualization, not VMware mojo, and Canonical's Ubuntu Server for its KVM platform. Xen, Meadows figures, at least the open source Xen project, is "dying."

For the price customers still get dedicated resources. They get fully guaranteed CPUs, RAM, storage and network capacity of their own that can't suddenly be usurped by some noisy neighbor. Redundancy and availability are built-in.

The widgetry, which supports both CentOS Linux and Windows platforms - Windows Server 2003 R2 or 2008 R2 run an extra 20 bucks a month - went into beta in February with 400 customers using 1,000 virtual servers. The production-ready Server Cloud instances provide completely automated provisioning, with login reportedly in as little as five minutes and round-the-clock service.

Prices start at $49 a month for a half-gig of memory and 60GB of storage with a dedicated half-core of CPU power and a free terabyte of bandwidth. From there it increases in steps depending on RAM and vCPUs to $339 a month for four vCPUs and 16GB of memory. Extra bandwidth costs 10 cents a GB or 80 bucks a month for another TB. Extra storage runs $25-$110 a month for another 160GB to 500GB.
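The bandwidth arithmetic in the prices above can be sketched in a few lines of Python. This is only a rough illustration, not The Planet's billing logic: it assumes 1 TB = 1,000 GB, assumes a customer may mix extra-TB blocks with per-GB metering in the same month, and uses hypothetical function names. Only the dollar figures come from the article. Amounts are kept in whole cents to avoid floating-point rounding.

```python
# Figures quoted in the article, expressed in US cents.
BASE_PLAN_CENTS = 4900      # $49/month entry plan
PER_GB_CENTS = 10           # extra bandwidth, 10 cents per GB
PER_TB_BLOCK_CENTS = 8000   # extra bandwidth, $80 per additional TB

# Assumptions (not stated in the article).
GB_PER_TB = 1000            # decimal terabyte
INCLUDED_GB = 1 * GB_PER_TB # the "free terabyte" bundled with the plan


def extra_bandwidth_cents(extra_gb):
    """Cheapest charge for extra_gb of transfer beyond the included TB.

    Assumes TB blocks and per-GB metering can be combined freely.
    """
    if extra_gb <= 0:
        return 0
    full_blocks, remainder_gb = divmod(extra_gb, GB_PER_TB)
    # The leftover is either metered per GB or covered by one more TB block,
    # whichever is cheaper.
    remainder_cents = min(remainder_gb * PER_GB_CENTS, PER_TB_BLOCK_CENTS)
    return full_blocks * PER_TB_BLOCK_CENTS + remainder_cents


def monthly_cents(total_gb):
    """Entry-plan monthly bill in cents for total_gb of transfer."""
    return BASE_PLAN_CENTS + extra_bandwidth_cents(total_gb - INCLUDED_GB)


if __name__ == "__main__":
    for gb in (800, 1500, 1900, 2500):
        print(f"{gb} GB -> ${monthly_cents(gb) / 100:.2f}")
```

Under these assumptions the extra-TB block becomes the better deal once the overage passes 800 GB, and a month with 2,500 GB of transfer on the entry plan works out to 17,900 cents, or $179.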

Hourly billing has flown out the proverbial window. There's a meter visible for the customer to see if he's exceeding any parameters. There are also supposed to be clear SLAs.

In The Planet's opinion "The industry is rife with cloud services hindered by inconsistency and a lack of redundancy. By contrast, our customers count on us for high availability, rapid provisioning and seamless scalability, which they'll find in our new Server Cloud. Customers are able to fully manage all aspects of their virtual servers, as well as include managed services for the platform, which provides the best value in the business."

It says Server Cloud is always available, since computing and storage resources are separate and independent. In the event of a complete host server failure, data is always protected.

The Planet offers service upgrades, including managed server monitoring and remediation, server security management and managed backup.

Looks to me as if Planet is undercutting Windows Azure, too.

<Return to section navigation list>