Windows Azure and Cloud Computing Posts for 7/16/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

![AzureArchitecture2H](http://lh5.ggpht.com/_GdO7DQgAn3w/TEDYtBKczmI/AAAAAAAACUA/IAM29rLoIic/AzureArchitecture2H_thumb35.png?imgmax=800)

Update 7/17/2010: Added update to My Using the SQL Azure Migration Wizard v3.1.3/3.1.4 with the AdventureWorksLT2008R2 Sample Database in the SQL Azure Database, Codename “Dallas” and OData section and added Bruno Terkaly’s Leverage Cloud Computing with Windows Azure and Windows Phone 7 – Step 1 to Infinity in the Windows Azure Infrastructure section, both marked ••.

Update 7/17/2010: Added items marked •.

Update 7/15/2010: Diagram above updated with the Windows Azure Platform Appliance, announced 7/12/2010 at the Worldwide Partner Conference, and Project “Sydney”.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Azure Blob, Drive, Table and Queue Services

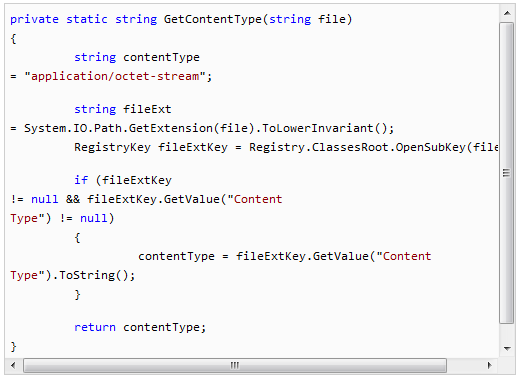

Ryan Dunn explains Getting the Content-Type from your Registry for Windows Azure blobs in this 7/16/2010 post:

For Episode 19 of the Cloud Cover show, Steve and I discussed the importance of setting the Content-Type on your blobs in Windows Azure blob storage. This was especially important for Silverlight clients. I mentioned that there was a way to look up a Content-Type from your registry as opposed to hardcoding a list. The code is actually pretty simple. I pulled this from some code I had lying around that does uploads.

Here it is:
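Ryan’s listing isn’t reproduced in this excerpt. As a rough illustration of the idea only, the following Python sketch reads the `Content Type` value that Windows stores under `HKEY_CLASSES_ROOT\<extension>` through the standard `winreg` module. On other platforms, or for unregistered extensions, it falls back to Python’s `mimetypes` table and then to `application/octet-stream`. The function names and the fallback order are assumptions, not Ryan’s code.

```python
import mimetypes
import os

try:
    import winreg  # standard library, but only available on Windows
except ImportError:
    winreg = None

DEFAULT_CONTENT_TYPE = "application/octet-stream"


def content_type_from_registry(extension):
    """Return the 'Content Type' value under HKEY_CLASSES_ROOT\\<extension>, or None."""
    if winreg is None:
        return None
    try:
        with winreg.OpenKey(winreg.HKEY_CLASSES_ROOT, extension) as key:
            value, _value_type = winreg.QueryValueEx(key, "Content Type")
            return value
    except OSError:
        # No key for this extension, or the key has no 'Content Type' value
        return None


def get_content_type(filename):
    """Pick a blob Content-Type: registry first, then mimetypes, then a safe default."""
    extension = os.path.splitext(filename)[1].lower()
    if not extension:
        return DEFAULT_CONTENT_TYPE
    return (content_type_from_registry(extension)
            or mimetypes.guess_type(filename)[0]
            or DEFAULT_CONTENT_TYPE)
```

You would assign the returned string as the blob’s Content-Type at upload time, so Silverlight and browser clients receive, for example, `image/png` rather than a generic binary type.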

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

•• Updated My Using the SQL Azure Migration Wizard v3.1.3/3.1.4 with the AdventureWorksLT2008R2 Sample Database post of 1/23/2010 on 7/18/2010 to reflect upgrade from v.3.1.3 to v3.3.3:

George Huey’s SQL Azure Migration Wizard (SQLAzureMW) offers a streamlined alternative to the SQL Server Management Studio (SSMS) Script Wizard for generating SQL Azure schemas that conform to the service’s current Data Definition Language (DDL) limitations. You can download the binaries, source code, or both from CodePlex; a Microsoft Public License (Ms-PL) governs use of the software. Detailed documentation in *.docx format is available. The recent versions use SQL Server’s bulk copy program (BCP) utility to populate SQL Azure tables with data from an on-premises database.
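For readers who haven’t used BCP, here is a hedged Python sketch of the two-step copy the wizard automates. It exports a table in native format (`-n`) from the on-premises server over a trusted connection (`-T`). It then imports the file into SQL Azure with SQL authentication, keeping identity values (`-E`) and committing in batches (`-b`). The sketch only builds the argument lists. The function names, table name and server name are hypothetical, and the exact options SQLAzureMW passes may differ. The `user@server` login form reflects SQL Azure’s requirements at the time of writing.

```python
def bcp_export_args(table, data_file, server):
    """Arguments to export `table` from an on-premises server in native format."""
    # -n: native format, -S: server, -T: trusted (Windows) authentication
    return ["bcp", table, "out", data_file, "-n", "-S", server, "-T"]


def bcp_import_args(table, data_file, azure_server, user, password, batch_size=1000):
    """Arguments to import a native-format file into a SQL Azure table."""
    # SQL Azure logins take the form user@servername (first label of the DNS name)
    login = f"{user}@{azure_server.split('.')[0]}"
    # -E keeps identity values from the file; -b commits every batch_size rows
    return ["bcp", table, "in", data_file, "-n", "-E",
            "-S", azure_server, "-U", login, "-P", password,
            "-b", str(batch_size)]
```

Passing each list to `subprocess.run(args, check=True)` would perform the copy. Keep in mind that `-P` exposes the password on the command line.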

• Update 7/18/2010 9:00AM: George Huey updated SQLAzureMW from v.3.3.2 to v.3.3.3 on 7/17/2010 with 5,227 downloads of the new version on its first day. The last documentation release on 7/6/2010 covers v.3.3.

Read more.

•• Phani Raju announced Windows Phone OData sample application updated for July 2010 Beta release on 7/13/2010:

With the latest release of the Windows Phone tools, the OData sample Netflix application has also been updated.

Here is where you get the latest Windows Phone Tools:

Windows Phone Developer Tools Beta

And here is where you get the latest OData sample application:

ODataOnWinPhoneSample_July2010Beta.zip

Original blog post here:

Developing a Windows Phone 7 Application that consumes OData

• Rick Negrin and Niraj Nagrani present their 01:01:12 [AP01] Building a Multi-Tenant SaaS Application with SQL Azure and AppFabric session video from the Worldwide Partner Conference 2010 (WPC10):

Come learn how SQL Azure, AppFabric, and the Windows Azure platform will enable you to grow your revenue and increase your market reach. Learn how to build elastic applications that will reduce costs and enable faster time to market using our highly available, self-service platform. These apps can easily span from the cloud to the enterprise. If you are either a traditional ISV looking to move to the cloud or a SaaS ISV who wants to get more capabilities and a larger geo-presence, this is the session that will show you how.

Note that video is missing from 00:00:00 to 00:03:30; use the slider.

• Wayne Walter Berry provides a link to Liam Cavanagh’s 00:06:42 Channel9 video segment in this Video: Extending SQL Server Data to the Cloud using SQL Azure Data Sync post of 7/16/2010:

In this first of a three-part webcast series, Liam Cavanagh (@liamca) will show you how SQL Azure Data Sync enables on-premises SQL Server data to be easily shared with SQL Azure, allowing you to extend your on-premises data to begin creating new cloud-based applications.

Using SQL Azure Data Sync’s bi-directional data synchronization support, changes made either on SQL Server or SQL Azure are automatically synchronized back and forth.
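To make “bi-directional” concrete, here is a toy Python model of two-way synchronization between a hub table and a member table. Rows carry a hypothetical logical timestamp, the newer change wins, and the hub wins ties. That conflict policy is an assumption for illustration, not a description of how SQL Azure Data Sync resolves conflicts, and deletes are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    value: str
    modified: int  # hypothetical logical timestamp of the last change


def sync_bidirectional(hub, member):
    """Merge two {key: Row} tables in place so that both end up identical.

    Assumed policy (illustration only): the newer change wins; the hub wins ties.
    """
    for key in set(hub) | set(member):
        h, m = hub.get(key), member.get(key)
        if h is None:
            winner = m  # row exists only on the member: copy it to the hub
        elif m is None:
            winner = h  # row exists only on the hub: copy it to the member
        else:
            winner = m if m.modified > h.modified else h
        hub[key] = member[key] = winner
```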

Video: View It

To learn more and register for access to SQL Azure Data Sync Service please visit: http://sqlazurelabs.com/.

Wayne Walter Berry announced the availability of a 00:06:45 Channel9 Video: Using SQL Azure Data Sync Service to provide Geo-Replication of SQL Azure databases by Liam Cavanagh. From the Channel9 abstract:

In this second of a three-part webcast series, I introduce you to the Data Sync Service for SQL Azure. This service extends the SQL Azure Data Sync tools’ ability to provide symmetry between SQL Server and SQL Azure, where data changes at either location are bi-directionally synchronized between on-premises and the cloud.

With SQL Azure Data Sync Service, we have extended that capability to allow you to easily geo-distribute that data to one or more SQL Azure data centers around the world. Now, no matter where you make changes to your data, it will be seamlessly synchronized to all of your databases whether that be on-premises or in any of the SQL Azure data centers. To learn more and register for access to SQL Azure Data Sync Service please visit: http://sqlazurelabs.com/.

Video: View It

• Also from WPC10: Video: New Business and Application Models with Microsoft SQL Azure (00:20:38, session EXLS22 by Sudhir Hasbe):

SQL Azure enables businesses to increase profitability, realize new revenue streams and expand segment reach. In this video Sudhir Hasbe talks about how SQL Azure enables first mover advantage by reducing the time required for you to build and deploy to market. Learn about the game-changing opportunities that SQL Azure provides with elastic scalability, a dynamic consumption model, business ready service levels, and web-based accessibility and flexibility.

Video: View It

Wayne Walter Berry describes Implementing Geographic Redundancy (a.k.a., Geolocation) in his post to the SQL Azure team blog of 7/16/2010:

Geographic redundancy is a fancy term for distributing your data across multiple data centers globally. One way to accomplish this is to spread your data across many SQL Azure data centers, using Data Sync Service for SQL Azure to synchronize a hub database to many global member databases. SQL Azure has six data centers worldwide.

In the future I will blog about a more complicated technique that provides real time writes and geographic redundancy with Data Sync Services.

Considerations

The technique that I will discuss in this blog post is best for databases with low writes and high reads where the write data doesn’t need to be synchronized in real time. One scenario that fits well with this technique is a magazine publishing web site that I discussed in this blog post.

The current CTP of Data Sync Service for SQL Azure limits synchronization to at most once an hour. This limitation restricts this specific technique to a small number of applications. The good news is that upcoming releases of Data Sync Services will offer more frequent synchronization.

Setting up Data Sync Service for SQL Azure

To get geographic redundancy, you need multiple SQL Azure service accounts with SQL Azure servers in different data centers. Currently, you are allowed only one server per account, which means that to have servers in multiple data centers, you need multiple accounts. The location of the data centers and the number of member databases depend on the needs of your application. Two databases in two different data centers are the minimum needed for geographic redundancy.

The next step is to set up Data Sync Service for SQL Azure. With this technique, the hub database is the database that you can read and write from, and the member databases are the ones you only read (SELECT) from. Having the member databases read-only simplifies the issues of data integrity and synchronization conflicts.
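The hub/member split reduces to a small routing rule. In this hypothetical Python sketch (the class name, server names and datacenter labels are made up), statements that start with SELECT go to the member database in the caller’s datacenter when one exists. Everything else goes to the hub, which keeps the members read-only as recommended above.

```python
class HubAndMemberRouter:
    """Hypothetical router: writes go to the hub, reads to a nearby read-only member."""

    def __init__(self, hub_server, members_by_datacenter):
        self.hub_server = hub_server
        self.members = dict(members_by_datacenter)  # datacenter -> member server

    def server_for(self, sql, local_datacenter):
        is_read = sql.lstrip().upper().startswith("SELECT")
        if not is_read:
            # INSERT, UPDATE, DELETE and anything unrecognized must go to the hub
            return self.hub_server
        # Prefer the member in our own datacenter; otherwise read from the hub
        return self.members.get(local_datacenter, self.hub_server)
```

The SELECT-prefix test is deliberately crude. Anything it doesn’t recognize as a read, such as a query that starts with a common table expression, is routed to the hub, which is the safe default.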

Modifying the Data Layer

The final step is to add some intelligence to your data layer code so that it first picks the SQL Azure datacenter local to the Windows Azure web role. If the local database is offline, the code fails over to a remote SQL Azure datacenter. This code expands on the connection handling code that was discussed in this blog post.

Connection Strings

Using the setup above, you now have multiple read-only member databases distributed globally. This means that you have multiple connection strings that your application can use. As a design exercise, let’s assume these applications are Windows Azure web roles and that they could exist in the same data centers as the SQL Azure database.

In this technique I am going to dynamically construct the connection string every time a read query is executed against the database. I want my code to have these goals:

- Return a connection string where the primary server is in the same datacenter as the Windows Azure web role is running. This will increase performance and reduce data transfer charges.

- Return a connection string where the failover partner is in a different data center than the primary server. This will give me geographic redundancy.

- Build on earlier code examples to try again at the local datacenter for transient errors, instead of calling the remote data center.

- Have one server per datacenter that has matching data.

- All the databases have the same name; this keeps the code simpler for this example, since we don’t have to maintain a list of database names for each server.

- Only try one remote data center. If the local data center and the random failover partner fail, abort the operation.
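The failover goals above can be sketched in Python: retry locally on transient errors, then try exactly one randomly chosen remote datacenter, then give up. `TransientError`, `run_read_query` and the caller-supplied `execute` function are hypothetical stand-ins, and the post’s own code is not reproduced here. Deciding which SQL Azure errors count as transient is left to the caller.

```python
import random


class TransientError(Exception):
    """Stand-in for a retryable SQL Azure failure (throttling, dropped connection)."""


def run_read_query(query, local_server, remote_servers, execute,
                   local_retries=3, rng=random):
    """Run a read query locally with retries, then fail over to one remote server.

    `execute(server, query)` is supplied by the caller. It should raise
    TransientError for retryable failures and any other exception otherwise.
    """
    for _ in range(local_retries):
        try:
            return execute(local_server, query)
        except TransientError:
            continue  # transient: try the local datacenter again
        except Exception:
            break  # local datacenter looks unavailable: fail over now
    remote = rng.choice(remote_servers)  # a single random remote partner
    try:
        return execute(remote, query)
    except Exception as err:
        # Only one remote attempt is allowed, so abort the operation
        raise RuntimeError(f"local server and remote {remote} both failed") from err
```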

The ConnectionStringManager class knows about the data centers and servers that hold the data. It also knows how to read the user ID and password from the configuration file. From this information, it can construct a connection string to a local SQL Azure database, or return a random failover partner to connect to remotely. The ConnectionStringManager class code can be found in the [Code Sample] download [below]. … [Source code excised for brevity.]
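Because the ConnectionStringManager source is excised, here is a hypothetical Python sketch of the same responsibilities. It assumes one server per datacenter and the same database name everywhere. It also assumes the credentials are passed in rather than read from a configuration file. The connection-string keywords follow the ADO.NET format that SQL Azure used, with the `user@server` login form. The server names, datacenter labels and database name are made up.

```python
import random


class ConnectionStringManager:
    """Hypothetical sketch, not the ConnectionStringManager from the code download."""

    def __init__(self, servers_by_datacenter, database, user_id, password,
                 local_datacenter):
        self.servers = dict(servers_by_datacenter)  # datacenter -> server name
        self.database = database  # same database name on every server
        self.user_id = user_id
        self.password = password
        self.local_datacenter = local_datacenter

    def _build(self, server):
        return (f"Server=tcp:{server}.database.windows.net;"
                f"Database={self.database};"
                f"User ID={self.user_id}@{server};"
                f"Password={self.password};"
                "Trusted_Connection=False;Encrypt=True;")

    def local(self):
        """Connection string for the server in the web role's own datacenter."""
        return self._build(self.servers[self.local_datacenter])

    def random_failover(self, rng=random):
        """Connection string for a randomly chosen server in another datacenter."""
        remote = [s for dc, s in self.servers.items() if dc != self.local_datacenter]
        return self._build(rng.choice(remote))
```

To make the failover choice reproducible in tests, pass `random.Random(seed)` as `rng`.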

• Jeff Krebsbach’s RESTful Applications and the Open Data Protocol post of 7/15/2010 provides a concise, third-party overview of REST architectural style and the OData protocol:

Before we can build these types of applications, we must answer two questions:

What is REST?

REST is an “architectural style” developed to provide a standard strategy for data interactions over the Internet (port 80, HTTP) using existing web technologies, chiefly the HTTP verbs we all know and love (GET, POST, PUT, DELETE).

How is it different than Web Services?

Web Services are designed to abstractly decouple systems. A call over port 80 can initiate an invoicing process, update warehouse inventory, or initiate a long-running transaction. Every time we have a different need for a web service, we start anew: each method is implemented separately, a WSDL is defined describing the specific method, and a contract is written describing what data must be provided and what data will be returned.

REST is designed to expose data. Web Services have contracts on methods: every Web Service interaction must be a method call, and every method call has a data contract. In contrast, REST uses a uniform, URL-based way of addressing and querying data for reading and writing. Take, for example, a site, example.com, that wants to expose its Users table.

http://example.com/users/

This will be our fundamental REST example. What this means is from the address “example.com”, we want to see all users. An XML result will be returned in a standard format that will enumerate the objects and their properties to then be used.

http://example.com/users/{user}

Taken one step further, if we provide a user ID in the request string, we receive only that user in the response instead of the whole list.

http://example.com/locations/

Now we will see all locations instead of all users.
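The URL patterns above map naturally onto a small dispatcher. This Python sketch handles only GET against hypothetical in-memory tables. It returns Python objects rather than the XML described above, and it omits POST, PUT and DELETE, which the post likewise leaves as an exercise.

```python
import re

# Hypothetical in-memory data standing in for example.com's tables
TABLES = {
    "users": {"1": {"name": "Ann"}, "2": {"name": "Bob"}},
    "locations": {"10": {"city": "Redmond"}},
}

# /{collection}/ or /{collection}/{key}
ROUTE = re.compile(r"^/(?P<collection>\w+)/(?P<key>[^/]+)?$")


def handle_get(path):
    """Map GET /{collection}/ and GET /{collection}/{key} to (status, body)."""
    match = ROUTE.match(path)
    if not match or match["collection"] not in TABLES:
        return 404, None
    rows = TABLES[match["collection"]]
    key = match["key"]
    if key is None:
        return 200, list(rows.values())  # the whole collection
    if key in rows:
        return 200, rows[key]  # one uniquely addressable resource
    return 404, None
```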

Along with this and a few other ways of querying the data, REST also exposes standard methods of exposing relationships, inserting, updating, and deleting data. Because we have one way to interact with the data, we do not need to define WSDL files or concern ourselves with how to interact with a specific version of a given web service. The implementation of those commands is left as an exercise to the reader.

What is OData?

OData, which WCF Data Services implements in .NET, fully automates the REST approach to exposing data. Any REST-enabled data source can therefore be consumed over an OData connection on the web. A data provider does not need to use OData; it simply needs to use REST. Likewise, a consumer can choose not to use OData and digest the REST however it sees fit. OData frees us from worrying about the specifics of implementing REST: it does the heavy lifting, so we can treat REST as an open standard for exposing tables. And for our security aficionados, OData allows us to restrict data selects, updates, and inserts at the most granular levels (i.e., this user group may select the data but not update it; that group may not interact with the data in any way).
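OData’s standard system query options ($filter, $select, $orderby, $top) are what make a single feed queryable without a custom method per department. This Python sketch builds such a URL with `urllib.parse.quote`. The service root, entity set and property names are hypothetical.

```python
from urllib.parse import quote

# Characters left unescaped so that filter expressions stay readable
SAFE_CHARS = "',()"


def odata_query_url(service_root, entity_set, filter_expr=None, select=None,
                    orderby=None, top=None):
    """Build an OData query URL from the standard system query options."""
    options = []
    if filter_expr:
        options.append(("$filter", filter_expr))
    if select:
        options.append(("$select", ",".join(select)))
    if orderby:
        options.append(("$orderby", orderby))
    if top is not None:
        options.append(("$top", str(top)))
    url = f"{service_root.rstrip('/')}/{entity_set}"
    if options:
        url += "?" + "&".join(f"{name}={quote(value, safe=SAFE_CHARS)}"
                              for name, value in options)
    return url
```

Calling `odata_query_url("http://example.com/odata.svc/", "Users", filter_expr="Country eq 'USA'", select=["Name", "Email"], top=10)` yields `http://example.com/odata.svc/Users?$filter=Country%20eq%20'USA'&$select=Name,Email&$top=10`.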

Most importantly to me: Because of the decoupled, uniquely addressable, and standardized approach of OData, I don’t have to worry about the five different GetUsers web service methods that invariably arise as each department has its own specific needs for what a “User” object must look like. The users table is simply available for all OData consumers to digest as they see fit.

Likewise, OData makes no presuppositions about what the data source truly is. Using WCF, with a few clicks I can publish an OData data source pointing directly at my SQL Server database, or I can create custom CLR entities that are based on CSLA objects, or the data can be pulled from an Access database on a Win NT Server. The only thing important to the OData consumers is that the REST endpoint is available. Everything else is seamless to the consumer.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

• The “Geneva” Team reports the availability of the AD FS 2.0 Step-by-Step Guide: Federation with CA Federation Manager guide in a 7/16/2010 post:

We have published a step-by-step guide on how to configure AD FS 2.0 and CA Federation Manager to federate using the SAML 2.0 protocol. You can view the guide either as a web page or in docx format. This is the first in a series of these guides; the guides are also available on the AD FS 2.0 Step-by-Step and How To Guides page.

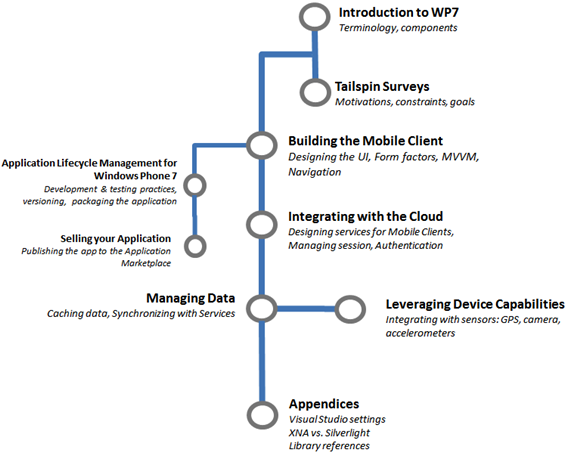

• Eugenio Pace’s What’s next? Tailspin goes mobile… post of 7/15/2010 reports the availability and content of the Windows Azure Architecture Guide – Part 2:

Now that the Windows Azure Architecture Guide – Part 2 is “done” (Done = content is complete and production is taking place. Production = index, covers, PDF conversion, etc), our team is ready for the next adventure.

Our fictitious ISV, Tailspin, is very innovative and wants to stay on the latest and greatest. Now they want to extend their flagship application, Tailspin Surveys, to mobile users. The essential idea is to allow its customers (Adatum, Fabrikam, etc.) to publish surveys to people with Windows Phone 7 devices, so they can capture information from the field.

This is a “mobile front-end” interacting with a “cloud backend” scenario.

Note: The Tailspin backend is mostly covered in the Windows Azure Guide, but we will extend it to support new user stories that are specific to the mobile client. So expect a different version in the new guide.

Detailed scenario (hidden agenda items in red [in the original post])

TailSpin: the “SaaS” ISV (this is covered in WAAG Part 2)

- Develops a multitenant “survey as a service” for a wide range of companies (with “Big IT” and with “Small IT”)

- Hosts the application on Windows Azure (service endpoint for WP7 app to interact: REST? SOAP? Chunky? Chatty?)

- Develops a WP7 application for “surveyors” (people taking surveys) (packaging the app)

- Publishes the WP7 application to the Application Marketplace (Publishing the app, certification process, versions, trials and betas)

Fabrikam & Adatum (companies subscribing to TailSpin’s service)

- (Self) design and launch surveys using TailSpin’s app (surveys now will have a target geography, used for “get surveys near me”)

- Wait for collected information

- Browse and analyze results (added data-points captured by the phone: geo-location, voice, pictures, barcodes)

People with WP7: “surveyors”

- Own a WP7 device (browse the Tailspin mobile website, download the app from the Application Marketplace)

- They “work from home”

- They subscribe to surveys that are targeted to where they are (location-based search, like “Surveys 40 miles from Redmond”)

- They are notified of new surveys available that match their subscription criteria (push notifications)

- They complete the surveys (UI design, app state, connectivity management, sending results back to the service-> service design, authenticating, being a “good citizen app”, resource management like battery levels)
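The “Surveys 40 miles from Redmond” search boils down to a distance filter. Here is a Python sketch that uses the haversine great-circle formula with a mean Earth radius of about 3,958.8 miles. The survey records and coordinates are illustrative. A real service would push this filter into the data store rather than scan a list.

```python
import math

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius


def miles_between(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two points (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def surveys_near(surveys, lat, lon, radius_miles=40):
    """Titles of surveys whose target point lies within radius_miles of (lat, lon)."""
    return [s["title"] for s in surveys
            if miles_between(lat, lon, s["lat"], s["lon"]) <= radius_miles]
```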

In this scenario, the “surveyors” could answer for themselves, but our current preference would be that they’ll interview others or would provide multiple answers (think someone surveying homes or traffic patterns in different places or someone going from home to home in a neighborhood collecting information).

You can imagine a mechanism where there’s compensation for surveyors from the companies that submit the survey: Adatum could pay for every “x submitted surveys” or there might even be a “coupon” system: “every 10 surveys you get a discount coupon for the supermarket”.

As usual, the surveys application is just an excuse to discuss the design patterns behind the solution (all the red things above). The entire app will be functionally incomplete, but technically sound.

The current (draft) structure for the guide is the following:

Feedback, of course, is greatly welcome!

• Zane Adam links brief video interviews in his Partners at WPC discuss benefits of SQL Azure and AppFabric post of 7/15/2010:

The Worldwide Partner Conference (WPC) is a great chance for us to sit down with industry partners and understand their latest plans for adopting cloud technologies and the opportunities they see with SQL Azure, Windows Azure AppFabric, and “Dallas” as they adopt the Windows Azure Platform and deliver value to customers in new ways. I’ll be sharing some of these stories and usage scenarios with you by linking to some very short partner interviews, presentations, and demos captured onsite here at the conference.

First up, here is a link to Brian Rose at Infor, discussing the benefits they see with SQL Azure and Windows Azure AppFabric. Infor is a $2 billion-per-year company with more than 70,000 customers worldwide. We are pleased to see Infor adopt the Windows Azure Platform!

Other interviewees are Darren Guarnaccia from Sitecore, Jason Popillion from GCommerce, Rainer Stropek from Software Architects and Rob McGreevy from Invensys.

• Rick Negrin and Niraj Nagrani present their 01:01:12 [AP01] Building a Multi-Tenant SaaS Application with SQL Azure and AppFabric session video from the Worldwide Partner Conference 2010 (WPC10):

Come learn how SQL Azure, AppFabric, and the Windows Azure platform will enable you to grow your revenue and increase your market reach. Learn how to build elastic applications that will reduce costs and enable faster time to market using our highly available, self-service platform. These apps can easily span from the cloud to the enterprise. If you are either a traditional ISV looking to move to the cloud or a SaaS ISV who wants to get more capabilities and a larger geo-presence, this is the session that will show you how.

Note that video is missing from 00:00:00 to 00:03:30; use the slider. (Repeated from the SQL Azure Database, Codename “Dallas” and OData section.)

• Pluralsight’s Matt Milner wrote for Microsoft an 11-page Service Bus Technologies from Microsoft – Better Together whitepaper, which became available for download on 7/16/2010. From the abstract:

Messaging for the enterprise and beyond

Applies to: The BizTalk ESB Toolkit 2.0 and the Windows Azure AppFabric Service Bus

Abstract: This whitepaper provides an overview of the Microsoft® BizTalk® Enterprise Service Bus Toolkit (BizTalk ESB Toolkit) 2.0 and the Windows® Azure AppFabric Service Bus. The BizTalk ESB Toolkit is a set of tools built on top of Microsoft BizTalk Server (starting with BizTalk Server 2009) that provides more loosely coupled messaging and service composition. The AppFabric Service Bus is a message relay service hosted in the cloud, and is one part of the Windows Azure AppFabric. This paper introduces each of these technologies and provides examples of how each can be applied. For a more detailed discussion of each technology, see the Additional Resources section at the end of this paper.
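The “message relay” idea can be modeled in a few lines. A service registers a listener at an address with the relay, and senders post to that address without knowing where the service runs. This toy Python class is an illustration only and is not the Windows Azure AppFabric Service Bus API. It ignores authentication, transport and the outbound-connection details that let real relays traverse firewalls.

```python
from collections import defaultdict


class InMemoryRelay:
    """Toy relay: listeners register at an address; senders post to that address."""

    def __init__(self):
        self._listeners = defaultdict(list)  # address -> list of handlers

    def register(self, address, handler):
        # A service "connects out" to the relay and claims an address
        self._listeners[address].append(handler)

    def send(self, address, message):
        # The relay forwards the message to every listener at the address
        handlers = self._listeners.get(address)
        if not handlers:
            raise LookupError(f"no listener registered at {address}")
        return [handler(message) for handler in handlers]
```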

Aaron Skonnard presented Introducing AppFabric: Moving .NET to the Cloud to Microsoft DevDays on 4/11/2010. Here’s the description of the 01:15:02 session:

Companies need infrastructure to integrate services for internal enterprise systems, services running at business partners, and systems accessible on the public Internet. And companies need to be able to start small and scale rapidly. This is especially important to smaller businesses that cannot afford heavy capital outlays up front. In other words, companies need the strengths of an ESB approach, but they need a simple and easy path to adoption and to scale up, along with full support for Internet-based protocols. These are the core problems that AppFabric addresses, specifically through the Service Bus and Access Control services.

This session is presented by Aaron Skonnard during Microsoft DevDays 2010 in The Hague in The Netherlands.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

•• Bruno Terkaly’s Leverage Cloud Computing with Windows Azure and Windows Phone 7 – Step 1 to Infinity post of 5/17/2010 begins:

I am about to discuss a series of technologies that will be around for the next 5 to 10 years. I am talking about Cloud and Mobile Computing.

You will see detailed code about how to build applications. I want to discuss how to create and expose data through service oriented approaches, hosted in Windows Azure. I want to show you how to consume SQL Azure. …

The Value Proposition of Windows Azure. Businesses can rent access to applications and IT infrastructure that reside on the Internet, pay for them on a subscription or per-use basis and provide employees with access to information from anywhere at any time with nothing more than a connected device. …

The World of Private Clouds. Private cloud services run in datacenters on company property managed by corporate IT staffs. Private clouds address the security concerns of large enterprises. They're scalable, growing and shrinking as needed. They're also managed centrally in a virtualized environment.

…

What are we going to cover? I am going to begin a series of posts that illustrate how to leverage the power of cloud computing on Windows Phone 7. My posts will show you how to make use of many technologies:

- SQL Azure

- Windows Azure

- WCF Services

- Silverlight LINQ

- And various framework and language technologies

I’d like to hear from you. Shoot me an email at bterkaly@microsoft.com and let me hear your thoughts.

Bruno’s a Microsoft developer evangelist for the San Francisco Bay Area. Read his complete post. Here’s a link to Design Resources for Windows Phone (as of 7/12/2010) from MSDN.

•• See Phani Raju announced Windows Phone OData sample application updated for July 2010 Beta release on 7/13/2010 in the SQL Azure Database, Codename “Dallas” and OData section.

Brian Hitney brings developers up to date with @home with Windows Azure: Behind the Scenes of 7/16/2010:

As over a thousand of you know (because you signed up for our Webcast series during May and June), my colleague Jim O’Neil and I have been working on a Windows Azure project – known as @home With Windows Azure – to demonstrate a number of features of the platform to you, learn a bit ourselves, and contribute to a medical research project to boot. During the series, it quickly became clear (…like after the first session) that the two hours was barely enough time to scratch the surface, and while we hope the series was a useful exercise in introducing you to Windows Azure and allowing you to deploy perhaps your first application to the cloud, we wanted (and had intended) to dive much deeper.

So enter not one but two blog series. This introductory post appears on both of our blogs, but from here on out we’re going to divide and conquer, each of us focusing on one of the two primary aspects of the project. Jim will cover the application you might deploy (and did if you attended the series), and I will cover the distributed.cloudapp.net application, which also resides in Azure and serves as the ‘mothership’ for @home with Windows Azure. Source code for the project is available, so you’ll be able to crack open the solutions and follow along – and perhaps even add to or improve our design.

You are responsible for monitoring your own Azure account utilization. This project, in particular, can amass significant costs for CPU utilization. We recommend your self-study be limited to using the Azure development fabric on your local machine, unless you have a limited-time trial account or other consumption plan that will cover the costs of execution.

So let’s get started. In this initial post, we’ll cover a few items:

- Project history

- Folding@home overview

- @home with Windows Azure high-level architecture

- Prerequisites to follow along

Project history

Jim and I have both been intrigued by Windows Azure and cloud computing in general, but we realized it’s a fairly disruptive technology and can often seem unapproachable for many of you who are focused on your own (typically on-premises) application development projects and just trying to catch up on the latest topical developments in WPF, Silverlight, Entity Framework, WCF, and a host of other technologies that flow from the fire hose at Redmond. Walking through the steps to deploy “Hello Cloud” to Windows Azure was an obvious choice (and in fact we did that during our webcast), but we wanted an example that’s a bit more interesting in terms of domain as well as something that wasn’t gratuitously leveraging (or not) the power of the cloud.

Originally, we’d considered just doing a blog series, but then our colleague John McClelland had a great idea: run a webcast series (over and over… and over again x9) so we could reach a crop of 100 new Azure-curious viewers each week. With the serendipitous availability of ‘unlimited’ use, two-week Windows Azure trial accounts for the webcast series, we knew we could do something impactful that wouldn’t break anyone’s individual pocketbook, something along the lines of a distributed computing project, such as SETI.

SETI may be the most well-known of the efforts, but there are numerous others, and we settled on one (http://folding.stanford.edu/, sponsored by Stanford University) based on its mission, longevity, and low barrier to entry (read: no login required and minimal software download). Once we decided on the project, it was just a matter of building up something in Windows Azure that would not only harness the computing power of Microsoft’s data centers but also showcase a number of the core concepts of Windows Azure and indeed cloud computing in general. We weren’t quite sure what to expect in terms of interest in the webcast series, but via the efforts of our amazing marketing team (thank you, Jana Underwood and Susan Wisowaty), we ‘sold out’ each of the webcasts, including the last two at which we were able to double the registrants - and then some!

For those of you that attended, we thank you. For those that didn’t, each of our presentations was recorded and is available for viewing. As we mentioned at the start of this blog post, the two hours we’d allotted seemed like a lot of time during the planning stages, but in practice we rarely got the chance to look at code or explain some of the application constructs in our implementation. Many of you, too, commented that you’d like to have seen us go deeper, and that’s, of course, where we’re headed with this post and others that will be forthcoming in our blogs.

Overview of Stanford’s Folding@Home (FAH) project

Stanford’s http://folding.stanford.edu/ was launched by the Pande lab at the Departments of Chemistry and Structural Biology at Stanford University on October 1, 2000, with a goal “to understand protein folding, protein aggregation, and related diseases,” diseases that include Alzheimer’s, cystic fibrosis, BSE (Mad Cow disease) and several cancers. The project is funded by both the National Institutes of Health and the National Science Foundation, and has enjoyed significant corporate sponsorship as well over the last decade. To date, over 5 million CPUs have contributed to the project (310,000 CPUs are currently active), and the effort has spawned over 70 academic research papers and a number of awards.

The project’s Executive Summary answers perhaps the three most frequently asked questions (a more extensive FAQ is also available):

What are proteins and why do they "fold"? Proteins are biology's workhorses -- its "nanomachines." Before proteins can carry out their biochemical function, they remarkably assemble themselves, or "fold." The process of protein folding, while critical and fundamental to virtually all of biology, remains a mystery. Moreover, perhaps not surprisingly, when proteins do not fold correctly (i.e. "misfold"), there can be serious effects, including many well known diseases, such as Alzheimer's, Mad Cow (BSE), CJD, ALS, and Parkinson's disease.

What does Folding@Home do? Folding@Home is a distributed computing project which studies protein folding, misfolding, aggregation, and related diseases. We use novel computational methods and large scale distributed computing, to simulate timescales thousands to millions of times longer than previously achieved. This has allowed us to simulate folding for the first time, and to now direct our approach to examine folding related disease.

How can you help? You can help our project by downloading and running our client software. Our algorithms are designed such that for every computer that joins the project, we get a commensurate increase in simulation speed.

FAH client applications are available for the Macintosh, PC, and Linux, and GPU and SMP clients are also available. In fact, Sony has developed a FAH client for its PlayStation 3 consoles (it’s included with system version 1.6 and later, and downloadable otherwise) to leverage its CELL microprocessor to provide performance at a 20 GigaFLOP scale.

As you’ll note in the architecture overview below, the @home with Windows Azure project specifically leverages the FAH Windows console client.

@home with Windows Azure high-level architecture

The @home with Windows Azure project comprises two distinct Azure applications, the distributed.cloudapp.net site (on the right in the diagram below) and the application you deploy to your own account via the source code we’ve provided (shown on the left). We’ll call this the Azure@home application from here on out.

… Brian continues with more details about the project.

The Windows Azure Team reported OCCMundial Named Second Most-Innovative Company [in Mexico] with Windows Azure Solution in a case study of 7/16/2010:

InformationWeek Mexico just named OCCMundial as the second most innovative company in Mexico in its annual "50 Most Innovative Companies" issue. OCCMundial developed the largest job-listing website in the Mexican employment market and has more than 15 million unique visitors annually and posts more than 600,000 jobs each year.

The company earned its innovator distinction from InformationWeek Mexico for its recommendation system, OCCMatch, that uses a powerful algorithm to match job openings to candidate resumes. Each day the algorithm that OCCMatch uses compares approximately 90,000 active job offers to a candidate pool represented by up to four million resumes. The system matches qualified applicants to job openings and sends email notifications to potential candidates. OCCMatch generates hundreds of thousands of email messages every single day.

To achieve the computing power necessary to process the powerful algorithm, and the storage capacity required for the popular service, OCCMundial relies on the Windows Azure platform. With on-premises infrastructure, the company was limited in the number of operations it could run each day, but by innovating with a cloud-based solution on the Windows Azure platform, it can now execute millions of OCCMatch operations in parallel, with as much processing power as the company needs. It currently uses more than 200 instances of Windows Azure for several hours each day, but can quickly scale up, or down, when the need arises; plus, it achieved impressive levels of scalability while still avoiding approximately U.S.$600,000 in capital and operational costs.
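The case study doesn't say how OCCMatch spreads its work across instances. As a minimal, hypothetical sketch of the fan-out idea, the Python below splits one day's roughly 90,000 job offers into near-equal batches, one per worker instance. The `partition` helper and the one-batch-per-instance scheme are assumptions for illustration, not OCCMundial's design.

```python
# Hypothetical sketch: divide a day's job offers among worker instances.
# The figures come from the case study; the batching scheme is assumed.

def partition(items, workers):
    """Split items into `workers` contiguous batches whose sizes differ by at most one."""
    if workers < 1:
        raise ValueError("workers must be >= 1")
    base, extra = divmod(len(items), workers)
    batches, start = [], 0
    for i in range(workers):
        size = base + (1 if i < extra else 0)  # the first `extra` batches get one more item
        batches.append(items[start:start + size])
        start += size
    return batches


offers = list(range(90_000))          # stand-ins for ~90,000 active job offers
batches = partition(offers, 200)      # one batch per instance, 200 instances
print(len(batches), len(batches[0]))  # 200 450
```

In this sketch each instance would compare its own batch against the resume pool independently, which is what lets throughput grow as instances are added and shrink again when they're released.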

Read the full story on how OCCMundial uses the Windows Azure platform at: www.microsoft.com/casestudies/Case_Study_Detail.aspx?CaseStudyID=4000005802

To read more Windows Azure customer success stories, visit www.windowsazure.com/evidence.

The OnWindows site posted a Connecting retailers to the cloud case study on 7/15/2010:

BEDIN Shop Systems has unveiled version 2.0 of aKite, a software services suite for retail stores developed on Windows Azure and SQL Azure. This announcement was made at the Microsoft Worldwide Partner Conference in Washington.

“An excellent example of Microsoft cloud momentum in the retail industry ecosystem, BEDIN’s service-oriented architecture solutions for retailers and the Windows Azure platform complement well and together deliver the benefits of the cloud and optimise the connected customer experience,” said Bill Gonzalez, general manager, Worldwide Distribution and Services Sector, Microsoft.

“Through alliances with industry leaders such as BEDIN, we are able to empower retailers to become ‘Connected Retailers’ by providing capabilities that enhance the connection between retailers and their customers, provide the critical connection needed between retailers and their suppliers, while greatly improving internal collaboration from the desktop to the data centre, and into the cloud,” added Gonzalez.

BEDIN Shop Systems smart clients are light Internet-centric desktop applications, deployed from a Web page. They are automatically updated from Retail Web Services, the intelligent ‘hub in the cloud’, designed as a service-oriented architecture for straightforward cooperation inside and outside a retail store chain. Retail Web Services fully leverages SQL Azure.

Another important new feature of BEDIN’s cloud-based retail stores services suite is aKite Document Interchange, a software service based on the Windows Azure platform AppFabric Service Bus. It simplifies integration with legacy enterprise resource planning systems and other on-premises systems.

“aKite from BEDIN Shop Systems is an example of how a partner can generate value for the end customers by leveraging the Windows Azure platform to create innovative solutions. These solutions enable fast time-to-market, simplicity to deploy, flexibility, expected service level agreements and instant integration with the existing on-premises assets,” said Anders Nilsson, Director Developer and Platform Evangelism, Microsoft Italy.

“The aKite reduction of store servers, the energy efficiency of Microsoft’s cloud computing platform, coupled with our capability to use the right level of resources, is our contribution to greentailing,” said Giorgio Betti, BEDIN Shop Systems sales and marketing manager.

Adron Hall’s Microsoft White Papers post of 7/15/2010 describes two new Windows Azure migration white papers written by Logic20/20:

Recently Microsoft updated their Windows Azure Site with some new whitepapers. The cool thing is, some of these papers I was directly involved with helping to create! That makes me a little biased in saying, I’m stoked that they’ve been released!

So if you’re in the market for some cloud technology and happen to be researching Windows Azure, check these out. I’ve included the links to the specific whitepapers I had considerable involvement in. Thanks Logic20/20 for providing the opportunity, it was tons of fun working on and researching Windows Azure! I look forward to future opportunities to work with the cloud more.

Here are the Microsoft links:

- Custom E-Commerce (Elasticity Focus) Application Migration Scenario

E-commerce applications that deal with uneven computing usage demands are frequently faced with difficult choices when it comes to growth. Maintaining sufficient capacity to expand offerings while continuing to support strong customer experiences can be prohibitively expensive, especially when demand is erratic and unpredictable. The Windows Azure Platform and Windows Azure Services can be used to help these organizations add computing resources on an as-needed basis to respond to uneven demand, and when it makes sense, these organizations can even implement programmatic system monitoring and parameters to help them achieve dynamic elasticity, which is only possible when organizations become unconstrained by the costs and complexity of building out their on-premises computing infrastructure. While programmatic elasticity may be non-trivial to achieve, for certain organizations it can become a competitive advantage and a differentiating capability enabling faster growth, greater profitability, and improved customer satisfaction. Learn about migrating custom e-commerce (elasticity focus) applications in this whitepaper.

- Custom Web (Rapid Scaling Focus) Application Migration Scenario

Organizations hosting custom web applications that serve extremely large numbers of users frequently need to scale very rapidly. These organizations may have little to no warning when website traffic spikes, and many organizations that have launched this type of custom application were unprepared for subsequent sudden popularity and explosive growth. For some organizations, their resources became overburdened, and their sites crashed; instead of riding a wave of positive publicity, their upward trajectory was halted, and they needed to repair their reputations and assuage angry customers and site visitors. The Windows Azure Platform and Windows Azure Services can help organizations facing these challenges achieve web application architecture that is both highly scalable and cost effective. Learn about migrating custom web (rapid scaling focus) applications in this whitepaper.
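Neither whitepaper summary spells out what "programmatic system monitoring and parameters" look like in practice. As a minimal sketch, assuming load is measured in requests per second and that each instance handles a known, fixed capacity (both hypothetical, and not part of any Windows Azure API), a threshold rule for sizing a deployment might look like this:

```python
import math

def desired_instances(requests_per_sec, capacity_per_instance,
                      min_instances=2, max_instances=50, headroom=0.2):
    """Return how many instances should run for the observed load.

    Hypothetical threshold rule: provision enough capacity for the current
    request rate plus a safety margin (`headroom`), clamped to [min, max].
    None of these parameters correspond to a real Windows Azure setting.
    """
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    needed = math.ceil(requests_per_sec * (1 + headroom) / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


# A quiet period, a moderate spike, and a surge beyond the cap.
for load in (50, 1_050, 100_000):
    print(load, desired_instances(load, capacity_per_instance=100))
```

A monitoring loop would call a rule like this periodically and request a new instance count only when the result differs from the current one. A real deployment would usually add a cooldown between changes so the count doesn't oscillate, and the `min_instances` floor keeps some capacity ready for the sudden spikes the rapid-scaling scenario describes.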

The Financial Post blog reported iStore to Launch Digital Oilfield Software as a Service [powered by Azure] in this 7/15/2010 post:

The Information Store (iStore) today announced Digital Oilfield Express (DOX), the first Digital Oilfield offering delivered online through a subscription price model. The new service will bring key exploration and production (E&P) business functionality to the thousands of small businesses that comprise the U.S. independent oil and gas producer market. This announcement was made at Microsoft’s Worldwide Partner Conference (WPC) 2010 in Washington, D.C.

“American independent producers are the backbone of domestic energy production,” said Barry Irani, CEO of iStore. “Because the vast majority of these companies are small businesses, they typically lack the infrastructure and IT expertise of the big players, which has kept Digital Oilfield solutions out of reach. Recognizing that the Digital Oilfield concept would allow these companies to better manage and use exploration and production data, we looked to Microsoft’s Azure Cloud computing platform as the means to deliver essential and affordable technology to this largely underserved market. And because independents produce 68 percent of the oil and 82 percent of the natural gas consumed domestically, what is good for them is good for our national energy security.”

iStore is creating DOX as a streamlined set of key E&P functions, such as document management and data visualization, utilizing SQL Azure and other components of the Windows Azure Services Platform. The Azure cloud platform provides Digital Oilfield technology in a software-as-a-service (SaaS) pricing model, eliminating the barriers created by the up-front capital requirements of a large software implementation and requisite hardware platform that can be prohibitive for a smaller company. Delivering Digital Oilfield in the cloud also allows iStore to bypass the long implementation cycles intolerable for smaller enterprises.

“An excellent example of Microsoft cloud momentum in the oil and gas industry partner ecosystem, iStore envisions a roadmap for E&P companies moving into the cloud,” said Simon Witts, corporate vice president, Enterprise & Partner Group (EPG), Microsoft. “iStore is leading the migration of data-rich Digital Oilfield applications from the data center to the cloud, which is aimed at creating a cost-effective software-as-a-service pricing model for end users. Microsoft’s Azure platform enables iStore to bring the power of the Digital Oilfield to a market tier that lacks the IT infrastructure of the larger players, allowing smaller E&P companies to focus on managing their business, not IT infrastructure.”

For larger oil & gas businesses, the cloud provides an essential fabric for Digital Oilfields of the future on which to combine both software and services, enabling petroleum enterprises to create the mix of online and on-premises data and software that fits the business purpose.

The Microsoft Windows Azure platform addresses the IT requirements of the Digital Oilfield by:

- Leveraging cloud services to act as the inter-organization integration layer.

- Providing a secured worldwide distributed run-time environment that is always on and supports auto provisioning and hosting of these services.

- Taking advantage of the built-in services available (compute, storage, access control, etc.) and the common development toolset to seamlessly connect to existing or new on-premise systems.

The Windows Azure platform, consequently, provides oil and gas solution providers and enterprise customers with the ability to extend and deploy their business services into the cloud and enables improved partner integration. These capabilities are poised to deliver greater levels of innovation and cooperation, further digitization of processes across global value chains and high levels of interactivity and engagement with end consumers.

About iStore

iStore helps petroleum companies access exploration and production (E&P) data wherever it resides and presents it in a useful form. As a result, customers improve asset performance, reduce cycle time and maximize return on investment. Most importantly, iStore's suite of software solutions puts the information E&P asset management teams need to make good decisions at their fingertips. Founded in 1994, the privately held company's headquarters are in Houston. For more information, visit www.istore.com.

Tim Aidlin described The Evolution of The Archivist in this 7/12/2010 second post of a series to MIX Online’s Opinion blog:

In an earlier post I talked about working on The Archivist Desktop version, and how the software began its evolution to the web and the cloud. In this post, part II of a series, I'll take a step back and explain how we concepted and executed The Archivist Web (alpha) and talk about what came before the design and code.

Audience Research

Once we decided to take on the project, we turned to our established group of experts for guidance. We already had a prototype of a similar application in The Archivist Desktop, so we had a cadre of fellow Microsoft employees, friends and colleagues in other companies, and current users we could tap for solid user-testing. We focused on two primary groups of users: our internal Microsoft team and users of The Archivist Desktop.

Getting Stakeholders and Internal Microsoft Team Members On Board

We started by discussing the project's intricacies and goals with our internal team members and stakeholders. We set up short individual meetings with people, gathered feedback on how they used The Archivist Desktop and asked what new features they wanted to see.

“Conversation” with current users

Again, because we had already released The Archivist Desktop, we had an already-established user-base. Over the years there had been a few “vocal” users – who either wrote in to thank us, or report problems and failures – who we felt could provide valuable feedback from real-world use-cases. At the beginning stages of building The Archivist Web we simply emailed a few of the users with whom we had been in contact and asked them a simple set of questions surrounding specific topics such as types of visualizations they would use, how often the tool needed to update, the way they were using the data and the like. By asking specific questions rather than asking for general feedback, users were able to focus on key issues and needs that we could act upon. Often, by asking a generalized question such as “What would you like to change,” you get very generalized answers that are hard to turn into actionable tasks.

We also communicated our plans openly, which helped us make contacts in other parts of the company and take advantage of Microsoft's existing technologies and knowledge-base. Discussing our plans with the Windows Azure team, for example, helped us overcome some serious hurdles with our data-storage methodology. Without their help, we might have been derailed at the beginning.

What Current Users Want

At the beginning stages of the project, we simply emailed a few of our most vocal users and asked them questions about what types of visualizations they might use, how often the tool needed to update, how they were using data, etc. Asking specific questions instead of relying on general feedback helped us identify key issues and needs we could easily act on.

User personas

From our sit-downs and email conversations, we were able to segment our audience into 'personas', defined as representations of real users, including their habits, goals and motivation.

There are many ways to approach user personas. Some designers spend a long time fleshing out the behaviors and market segmentations of a target audience. We, on the other hand, took a more rudimentary approach, following the example of Smashing Magazine’s great article on the same subject.

Drive-by vs. Return Users

We know that most users will come by the site once, do a quick search, check out some visualizations, and then leave. That’s just the nature of building websites, and especially one geared toward a niche audience.

We wanted to provide an engaging experience with few barriers to entry for the drive-by user, but it was more important to concentrate on bringing people back. So, we decided to focus on our return users: Marketing Managers and academics.

The Marketing Manager

Marketing Managers primarily care about tracking sentiment—who is tweeting about what, how often, and how people feel about a brand or site over time. But up until recently, there were only a few ways to gain access to targeted Twitter data, most of which was presented in a very raw format that was difficult to extrapolate from.

Our plan was to change all this with The Archivist, and make it easy to find, share and interpret large swaths of data suited to the Marketing Manager's needs.

The Academic

Because of our experience with The Archivist Desktop, we knew that there was a surprisingly large demand for the Archivist in academia. During the last year or so, we’ve talked with numerous professors and students who have found The Archivist Desktop useful for their research.

Whereas the Marketing Manager wants to track 'sentiment', the academic is interested in raw data that's accurate, easy to access and appropriate for use in research.

Wireframes

I thought it might be useful to provide the full PowerPoint walk-through of our wireframe set, which gave our team an understanding of how different users might come to and interact with the site.

What we couldn't do (Feature Scoping)

Coming to agreement on the wireframes was easy, but brought up questions about what data we could actually display to users. As I noted earlier, I spent a lot of time thinking about the frequent requests for filtering by date, secondary keywords and other items. Because of how Twitter works, some of this data wasn't consistently available. Other items were so gnarly we couldn't scope them into this release.

Visualization explorations

As Karsten and I were wrapping up the early Archivist Desktop version, I explored additional visualizations I thought we might be able to incorporate into the downloadable WPF application. Since we were working in WPF at that point, I felt free to explore visualizations that might be useful to our audience. Some that I came up with:

For a few specific reasons, we decided to ship The Archivist using the ASP.NET charting controls, and to feature the following charts:

- Tweets over time

- Top Users

- Top Words

- Top URLs

- Tweet vs. Retweet

- Top Sources (software)

- Use ASP.Net Charting Controls
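Behind the ASP.NET chart controls, each of the charts listed above comes down to a simple aggregation over a tweet archive. A minimal sketch of that counting in Python, assuming hypothetical tweet records with `user`, `date`, `source`, and `text` fields (this is not The Archivist's actual code or data model):

```python
from collections import Counter

# Hypothetical archived tweets; the field names are illustrative only.
tweets = [
    {"user": "alice", "date": "2010-07-12", "source": "web",
     "text": "RT @bob: Azure storage tips http://example.com/a"},
    {"user": "bob", "date": "2010-07-12", "source": "TweetDeck",
     "text": "Azure storage tips http://example.com/a"},
    {"user": "alice", "date": "2010-07-13", "source": "web",
     "text": "Trying The Archivist today"},
]

def is_url(token):
    return token.startswith(("http://", "https://"))

def summarize(tweets, n=3):
    """Compute the counts behind each chart: over time, users, words, URLs, retweets, sources."""
    tokens = [w for t in tweets for w in t["text"].split()]
    # Skip URLs, @mentions and the "RT" marker when counting words.
    words = Counter(w.lower() for w in tokens
                    if not is_url(w) and not w.startswith("@") and w != "RT")
    retweets = sum(1 for t in tweets if t["text"].startswith("RT @"))
    return {
        "tweets_over_time": sorted(Counter(t["date"] for t in tweets).items()),
        "top_users": Counter(t["user"] for t in tweets).most_common(n),
        "top_words": words.most_common(n),
        "top_urls": Counter(w for w in tokens if is_url(w)).most_common(n),
        "tweet_vs_retweet": (len(tweets) - retweets, retweets),
        "top_sources": Counter(t["source"] for t in tweets).most_common(n),
    }

summary = summarize(tweets)
for chart, data in summary.items():
    print(chart, data)
```

Treating tweets that start with "RT @" as retweets is a simplifying assumption; a real archive would likely also filter stop words such as "the" before ranking top words.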

In my next post, I'll talk about taking the site from wireframes and explorations to a functional HTML + CSS3 + jQuery site, and how I worked with Karsten, who made the beauty of the backend happen in the cloud.

Do you use the same process as us, moving from problem to persona to wireframes to design? Leave a comment and be sure to follow us on Twitter @Mixonline

See my Archive and Mine Tweets In Azure Blobs with The Archivist Application from MIX Online Labs (updated 7/11/2010) for more details about the architecture of The Archivist and live links to archives of my tweets and those for SQL Azure and OData.

Windows Azure Infrastructure

• Tom Simonite asserts “Tools that benchmark performance promise to reveal the strengths and weaknesses of competing cloud providers” as a preface to his Pitting Cloud against Cloud post of 7/16/2010 to the MIT Technology Review blog:

New software developed to measure the performance of different cloud computing platforms could make it easier for prospective users to figure out which of these increasingly popular services is right for them.

Right now, developers have little means of comparing cloud providers, which lease access to computing power based in vast and distant data centers. Until actually migrating their software to a cloud service, they can't know exactly how fast that service will perform calculations, retrieve data, or respond to sudden spikes in demand. But Duke University computer scientist Xiaowei Yang and her colleague Ang Li are trying to make the cloud market more like the car market, where, as Yang says, "you can compare specifications like engine size or top speed."

Working with Srikanth Kandula and Ming Zhang of Microsoft Research in Redmond, WA, Yang and Li have developed a suite of benchmarking tools that make it possible to compare the performance of different cloud platforms without moving applications between them. These tools use algorithms to measure the speed of computation, and shuttle data around to test the speed at which new copies of an application are created, the speed at which data can be stored and retrieved, the speed at which it can be shuttled between applications inside the same cloud, and the responsiveness of a cloud to network requests from distant places. The researchers used the software to test the services offered by six providers: Amazon, Microsoft, Google, GoGrid, RackSpace and CloudSites. Results of those tests were combined with the providers' pricing models to allow for quick comparisons. [Emphasis added.]
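CloudCmp's tools hadn't been released at the time of writing, so the following is not their code. It is a minimal, generic sketch of the kind of latency measurement described above: time an operation repeatedly and report the median and 95th-percentile latency, because the spread matters as much as the typical value. The `time_operation` name and the stand-in workload are hypothetical; a real comparison would substitute calls to each provider's storage or compute service.

```python
import math
import statistics
import time

def time_operation(op, trials=50):
    """Call `op` `trials` times; return (median, 95th percentile) latency in ms.

    The 95th percentile uses the nearest-rank method on the sorted samples.
    """
    if trials < 1:
        raise ValueError("trials must be >= 1")
    samples = []
    for _ in range(trials):
        start = time.perf_counter()
        op()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95 = samples[math.ceil(0.95 * trials) - 1]
    return statistics.median(samples), p95

# Stand-in workload; replace with a storage read, queue operation, etc.
median_ms, p95_ms = time_operation(lambda: sum(range(10_000)))
print(f"median {median_ms:.3f} ms, p95 {p95_ms:.3f} ms")
```

Reporting a high percentile alongside the median is what surfaces the "lot of latency variation" Yang describes, which an average alone can hide.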

The results are among the first attempts to compare the performance of several cloud platforms, says Yang. "We found that it's very hard to find a provider that is best in all metrics," she says. "Some are twice as fast for just 10 percent extra cost, which is a very good deal, but at the same time their storage service is actually very slow and has a lot of latency variation." Another provider showed good computation speeds but was less quick at spawning new instances of an application, something that might be necessary for a service that experiences peaks in demand, as a video site does when some of its content goes viral. "It seems like in today's market it is hard to pick a provider that is good at everything," says Yang.
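Yang's "twice as fast for just 10 percent extra cost" example can be checked with one line of arithmetic: if you pay for the time a fixed job runs, the relative cost per job is the price ratio divided by the speed ratio. A minimal sketch, assuming billing proportional to run time and a purely compute-bound workload (the function name is illustrative):

```python
def relative_cost_per_job(price_ratio, speed_ratio):
    """Cost to finish a fixed job on provider B relative to provider A.

    price_ratio: B's price per instance-hour divided by A's.
    speed_ratio: B's throughput divided by A's (2.0 means twice as fast).
    Assumes billing is proportional to the time the job runs.
    """
    if speed_ratio <= 0:
        raise ValueError("speed_ratio must be positive")
    return price_ratio / speed_ratio

# "Twice as fast for just 10 percent extra cost"
print(relative_cost_per_job(1.10, 2.0))  # 0.55
```

Under those assumptions the faster provider finishes the same job for about 45 percent less, even though its hourly price is higher. Coarse billing increments (for example, rounding partial hours up) or a storage-bound workload would change the picture, which is the trade-off Yang points to.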

See my Ang Li and Xiaowei Yang of Duke University and Srikanth Kandula and Ming Zhang of Microsoft Research co-wrote a recent CloudCmp: Shopping for a Cloud Made Easy item in Windows Azure and Cloud Computing Posts for 6/21/2010+ for an abstract and more details about the project.

It’s disappointing that “The researchers aren't yet willing to disclose the performance scores of specific providers.” However, “they plan to make their tools publicly available.”

• “CIO.com's Bernard Golden discusses what cloud agility means and shares examples of the kind of business agility that is fostered by cloud computing” in Cloud Computing: Two Kinds of Agility of 7/16/2010:

A key benefit often discussed about cloud computing is how it enables agility. This benefit is real and powerful. However, the term agility is used to describe two different kinds of benefit; both are real, but one of them will, ultimately, be seen as offering the greatest impact. This post will discuss the two types of agility and provide some examples of how compelling the second type is.

What does cloud agility mean? It's tied to the rapid provisioning of computer resources. Cloud environments can usually provide new compute instances or storage in minutes, a far cry from the very common weeks (or months, in some organizations) the same provisioning process can take in typical IT shops.

As one could imagine, the dramatic shortening of the provisioning timeframe enables work to commence much more quickly. No more submitting a request for computing resources and then anxiously watching e-mail for a fulfillment response. As agility may be defined as "the power of moving quickly and easily; nimbleness," it's easy to see why this rapid provisioning is described as advancing agility.

But here is where the definition gets a bit muddled. People conflate two different things under the term agility: engineering resource availability, and business response to changing conditions or opportunity.

Both types of agility are useful, but the latter type will ultimately prove to be the more compelling and will come to be seen as the real agility associated with cloud computing.

The problem with delivering compute resources to engineers more quickly is it is a local optimization — it makes a portion of internal IT processes more agile, but doesn't necessarily shorten the overall application supply chain, which stretches from initial prototype to production rollout.

In fact, it's all too common for cloud agility to enable developers and QA to get started on their work more quickly, but for the overall delivery time to remain completely unchanged, stretched by slow handover to operations, extended shakedown time in the new production environment, and poor coordination with release to the business units.

Moreover, if cloud computing comes to be seen as an internal IT optimization with little effect on how quickly compute capability rolls out into mainline business processes, the potential exists for IT to never receive the business unit support it requires to fund the shift to cloud computing. It may be that cloud computing will end up like virtualization, which in many organizations is stuck at 20 percent or 30 percent penetration, unable to garner the funding necessary to support wider implementation. If the move to cloud computing is presented as "helps our programmers program faster," necessary funding will probably never materialize.

• Audrey Watters asks Will The Cloud Mean the End of IT As We Know It? in this 7/15/2010 post to the ReadWriteCloud blog:

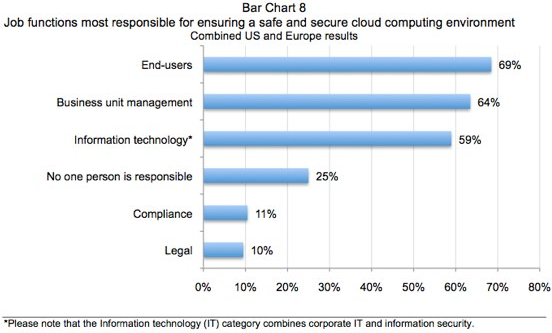

![]() A study published earlier this year by CA and the Ponemon Institute, a privacy and security group surveyed over 900 IT professionals in the U.S. and Europe about their perceptions, predictions, and practices as on-site systems migrate to the cloud.

A study published earlier this year by CA and the Ponemon Institute, a privacy and security group, surveyed over 900 IT professionals in the U.S. and Europe about their perceptions, predictions, and practices as on-site systems migrate to the cloud.

The results are particularly striking as they reveal some of the obstacles that cloud computing faces from those who are often responsible for helping implement and maintain a company's technology infrastructure.

Key findings from the study include the following:

- IT practitioners lack confidence in their organizations' ability to secure data and applications deployed in cloud computing environments (especially public clouds).

- IT practitioners in both the US and Europe admit they do not have complete knowledge of all the cloud computing resources deployed within their organizations today.

- Because cloud computing deployment decisions are decentralized (especially SaaS), respondents see end-users (or business management) as more responsible for ensuring a safe cloud computing environment than corporate IT.

- IT practitioners in both the US and Europe rate the security posture of on-premise computing resources as substantially higher than comparable computing resources in the cloud.

- IT practitioners believe the security risks most difficult to curtail in the cloud computing environment include securing the physical location of data assets and restricting privileged user access to sensitive data.

In a recent article, Venkat S. Devraj looks at the ways in which IT departments are going to have to prepare themselves for the cloud, whether they want to make the move or not. He contends that part of the struggle with cloud implementation is not the technical obstacles. "The struggles have not emanated necessarily from lack of desire, budget or technology leadership," he writes, "but from cultural challenges."

These challenges include a perceived shift in power dynamics as self-service models muddy the line between IT operations and end users. He believes that IT personnel fear that end users will be granted administrative privileges that will make their activities difficult to oversee and control and will, in turn, increase IT's workload. He also suggests that the cloud will blur some of IT's internal silos, as cloud-based delivery models challenge some of the separations between people in charge of servers, storage, databases, software, and so on.

As organizations adopt cloud technologies - either sanctioned by IT or not - it remains to be seen how this will impact IT departments. Will it be, as some have suggested, the end of IT as we know it?

• Timothy Prickett Morgan asserted Dell OEMs server management from Microsoft in a 7/15/2010 post to the Channel Register UK blog:

If you use Dell PowerEdge servers in conjunction with Microsoft's Windows operating system, Dell is going to make your life a little bit easier while also saving you some dough.

Like its peers in the x64 server racket, Dell has service processors and box management tools to manage its PowerEdge rack and tower servers and the blades in its PowerEdge blade chassis. But to get higher level management at the operating system or application level, Dell likes to partner, sometimes loosely, sometimes tightly with OEM deals, as it does with Egenera for PAN Manager and Microsoft for System Center. More recently, Dell has started to snap up management tool companies, such as KACE for PC lifecycle management or Scalent for physical and virtual server provisioning.

Microsoft released System Center Essentials 2010 to manufacturing at the end of April and it was generally available on June 1. The tool meshes its Windows system management console with the Virtual Machine Manager 2008 R2 plug-in for the Hyper-V hypervisor, giving system administrators a single console from which they can manage both physical and virtual servers. Before, you had to buy these tools separately and plug them together.

Because it wants tight integration between the Windows software stack and its PowerEdge servers, Dell has gone one step further and OEMed the SCE 2010 software from Microsoft. The server maker has worked with Microsoft to integrate its Windows management console with its own OpenManage tools, which include Pro Packs, Management Packs, and Update Catalogs.

The integration between OpenManage and SCE 2010 is also available to customers who don't buy the Dell version because, according to Enrico Bracalente, senior strategist for systems management product marketing at Dell's Enterprise Product Group, Dell is not trying to lock PowerEdge customers into its OEM version to get tighter integration. The OEM version is supported by Dell, not Microsoft, which gives SMB shops one throat to choke and one support bill instead of two (just as Dell front-ends support for Windows Server licenses at SMBs).

And according to Bracalente, Dell's pricing "is a bit more aggressive than Microsoft's own pricing" for SCE 2010. Exactly how much, he did not want to say, but prices start at $5,000 from Dell for a license spanning 50 physical or virtual servers or up to 500 client devices. Microsoft charges $103 for the SCE 2010 management server, and $870 if you don't already have a license to the SQL Server 2008 Standard Edition database it requires. It costs $103 per server node and $17 per PC client on top of this. So we're talking about a 15 per cent discount for users, and probably some profits for Dell, since it undoubtedly gets SCE 2010 at a much deeper discount from Microsoft as part of its OEM agreement.
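A quick check of the quoted figures for the 50-server case, as a sketch only. It assumes the $870 figure replaces (rather than adds to) the $103 management-server price when no SQL Server 2008 license is owned, and it ignores the per-client pricing; neither assumption is stated in the article.

```python
# Figures quoted in the Channel Register article (US dollars).
MGMT_SERVER = 103            # SCE 2010 management server, SQL Server license already owned
MGMT_SERVER_WITH_SQL = 870   # assumption: replaces the $103 price when no SQL Server 2008 license exists
PER_SERVER_NODE = 103        # per managed server node
DELL_BUNDLE = 5000           # Dell OEM starting price covering 50 servers
NODES = 50


def microsoft_list_price(nodes: int, needs_sql: bool) -> int:
    """List price for one management server plus `nodes` managed server nodes."""
    server = MGMT_SERVER_WITH_SQL if needs_sql else MGMT_SERVER
    return server + nodes * PER_SERVER_NODE


def discount(list_price: int, dell_price: int = DELL_BUNDLE) -> float:
    """Fractional saving of the Dell bundle relative to the Microsoft list price."""
    return 1 - dell_price / list_price


if __name__ == "__main__":
    for needs_sql in (False, True):
        price = microsoft_list_price(NODES, needs_sql)
        print(f"needs SQL license={needs_sql}: list ${price:,}, Dell saves {discount(price):.1%}")
```

Under these assumptions the saving is about 5 per cent for a shop that already owns SQL Server and about 17 per cent for one that doesn't, so the "15 per cent" estimate appears to correspond to the latter case.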

The management server of the OEM version of SCE 2010 can run on PowerEdge servers spanning the 9G to 11G generations, and can control machines that go back as far as the 8G generation.

As far as Bracalente knows, Dell is the first and only server maker to offer an OEM version of SCE 2010. Dell had an OEM deal for the prior System Center 2007 tools, and Fujitsu had an OEM version that implemented parts of that earlier Microsoft management toolset.

A variety of articles and blog posts have identified Dell as a major hardware supplier to Microsoft data centers.

The HPC in the Cloud blog’s Platform Computing Survey: Private Clouds High on IT Execs Agenda in 2010 post of 7/15/2010 reports:

Demand for private clouds remains undiminished in 2010, with 28 percent of organizations planning a deployment in 2010, according to the third annual benchmark survey of delegates by Platform Computing at the International Supercomputing Conference (ISC'10) in June. While the level of intent to deploy is the same as in 2009 (also 28 percent), the main drivers have changed dramatically, reflecting improved awareness and understanding of the benefits of private clouds. As the leader in cluster, grid and cloud management software, Platform Computing has been tracking adoption of private clouds and the drivers for uptake. [Link added.]

While improving efficiency was the main motivator in 2009 (41 percent), the 2010 survey reveals that drivers for deploying private cloud have evened out: efficiency (27 percent), cost cutting (25 percent), experimenting with cloud (19 percent), resource scalability (17 percent) and IT responsiveness (6 percent). This suggests that there has been an improvement in the level of understanding of the benefits which private clouds can deliver. Furthermore, the increased significance placed on cost cutting (25 percent compared to 17 percent in 2009) also suggests that the cautious economic climate has influenced drivers for adoption.

When asked for the first time whether they consider cloud to be a new concept, 62 percent of executives said they believe it to be an extension of clusters and grids, with only 17 percent thinking it is a new technology. This indicates that greater awareness of private clouds has resulted in recognition that private clouds are the natural next step for organizations already using clusters or grids. While the appetite for private cloud in 2010 remains as strong as in 2009 and general understanding is better, IT executives seem unconvinced about the benefits of using an external service provider for 'cloud bursting' – where the public cloud is tapped into when a company's own resources reach capacity – with over three quarters (79 percent) stating that they have no plans to do this in 2010.

With greater understanding of what private clouds can deliver, executives feel that the barriers to adopting private cloud have also softened. Organizational culture was cited as the biggest inhibiting factor in 2009 (37 percent), but this is perceived by executives to be less of an issue in 2010 (26 percent). Instead IT departments have become more focused on the detail behind private clouds: with security (26 percent), complexity of managing (25 percent), application software licensing (12 percent) and upfront costs (6 percent) cited as potential barriers.

Randy Clark, CMO, Platform Computing, commented: "What's interesting is that private cloud deployment intent continues to be strong, independent of public cloud intentions. That cost is growing as a business driver while organizational culture becomes less of a barrier, speaks to maturing use cases, pilots and deployments. We expect that private clouds will continue to outpace public cloud models but that the correlation between private clouds and hybrid use-cases such as 'cloud bursting' will increase over time. We will continue to measure the market qualitatively and quantitatively to see if this happens."

<Return to section navigation list>

Windows Azure Platform Appliance

• Gavin Clark reported Rackspace's interest in hosting WAPA in Cloud fluffer ready to lighten Microsoft's load, a 7/15/2010 article for the Channel Register blog:

Web-hosting giant Rackspace could be among the first to deliver private versions of Microsoft's Azure cloud, free of Redmond's control.

Rackspace has had discussions with Microsoft to run Azure in its own data centers, the company's told The Reg.

Already a Microsoft hosting and cloud partner, Rackspace believes the world is too big and too complicated with different security standards and regional requirements for Microsoft to deliver everything on its own.

Microsoft this week announced the Windows Azure Platform Appliance containing Windows Azure compute and SQL Azure storage.

Rackspace chief technology officer John Engates told us: "I think it's a great idea to allow private versions of Azure because realistically, Microsoft can't run all the world's IT in its own data centers."

He added: "If and when we get strong demand for Azure in our datacenter, we'll certainly consider offering it."

The service provider also believes it's got the experience in hosting and .NET to make Azure usable and to help ease customers' concerns over the newness of the architecture – most, for example, won't even know what SQL Azure is or be willing to commit at this stage.

Lew Moorman, Rackspace's president for cloud and chief strategy officer, said: "It's like house hunting and buying the house and every single element in it. You can't customize or do your own thing, and that's a big commitment for people... In time we could host it, but people are nervous about giving everything to Microsoft."

Windows Azure Platform Appliance came to light after The Reg broke the news on Microsoft's Project Talisker, a plan to let Microsoft customers and service providers run their own, private versions of Azure. Until Talisker, Microsoft had said only its datacenters would host Azure.

The appliance software will be tested on servers by Dell, Hewlett-Packard, and Fujitsu in their data centers, with plans to deliver the appliance on hardware with services from Dell and HP once they've got a clearer idea of what the product should be, how it might work, pricing, and licensing.

Engates said the appliance concept would let customers run an Azure-like capability to meet the specific needs of their own environments or let Rackspace run Azure for them in its own datacenters.

Rackspace claims 80,000 cloud customers, but installing Azure would mean a change. Rackspace seems to define cloud by server virtualization, as it offers VMware's vSphere with its Windows Server and Red Hat Linux stacks in addition to services and support.

Rackspace has been agnostic on the software side: while it hosts specific applications as services, such as Microsoft's email and SharePoint, Rackspace also offers vanilla .NET and Linux/Apache/MySQL/PHP (LAMP) stacks that customers can launch code on.

Running Azure, though, would mean commitment to the complete Azure compute and storage fabric and Rackspace would not be allowed to customize the code.

Azure comprises a layer to cluster servers running on Microsoft's hypervisor, SQL Azure storage, and the .NET Framework using Microsoft's Common Language Runtime and ASP.NET.

Engates said he had "high confidence" Rackspace can run Azure when it's ready to ship, noting the company's already very familiar with hosting Microsoft's applications and operating systems.

• Krishnan Subramanian answered Why Windows Azure Is Not Available For On-Premise Deployments? with an ancient Microsoft post in a 7/15/2010 post to the Cloud Ave blog:

When Microsoft announced the Windows Azure appliance a few days back at WPC 2010, I was critical of their plans to sell the technology bundled with hardware from their partners.

What I expected from Microsoft was the availability of the Azure stack for use with existing infrastructure, something like what one can do with Eucalyptus, for example. Expecting enterprises to buy physical compute infrastructure along with the platform stack is a big downer for me. I guess they are taking this approach to protect their existing cash cow (sales of Windows Server OSes and other tools). Forcing potential customers to buy a hardware appliance defeats the very idea of drastically cutting capital costs by moving to the cloud. Even though private clouds are capital intensive compared to public clouds, I feel that letting enterprises use their existing infrastructure while embracing cloud-like features is a more sensible approach than forcing them to buy new hardware along with the platform stack. Their decision not to offer the stack for installation on existing compute infrastructure is the biggest disappointment of this announcement.

Immediately after I posted this one, James Urquhart, the author of the famous Wisdom Of The Clouds blog, told me that the reason they are not offering it could be that Azure is a combination of software and data center architecture. The position I took in my post was based on the fact that Microsoft had not explicitly talked about the issue. However, James' comment made me think about that possibility. Now, it has officially come out of Microsoft.

According to a blog post by Steve Martin, a senior manager at Microsoft, Azure cannot run in just any datacenter. He clearly highlights how the implementation of Windows Azure depends on Microsoft's global data-center hardware design and large-scale multi-tenancy. Since these cannot be replicated in many existing datacenters, an offering similar to Eucalyptus doesn't make any sense.

We don’t envision something on our price list called “Windows Azure” that is sold for on-premises deployment. Some implementation details aren’t going to be practical for customers, such as our global data-center hardware design and large scale multi-tenancy features which are integral to Windows Azure and the Azure Services Platform.

In the same post, he also clearly highlights how they are going to address this market:

We will continue to evolve Windows Server and System Center focusing significantly on technologies like virtualization, app and web server capabilities, single-pane management tools for managing on-premise and cloud in the same way, etc. which extend the enterprise data center in significant ways. We’ll continue to license Windows Server and System Center (and therefore the shared innovation derived from Windows Azure) to hosters through our SPLA program.

If you are interested in understanding Microsoft's strategy on the private cloud side, I urge you to read his post.

Update: The above post by Steve Martin was more than a year old. I overlooked the date stamp and assumed it was a clarification after the announcement of Azure appliance. It was an oversight on my part. However, the post does offer an official clarification for my comments after the announcement of Azure appliance. My apologies for making it appear as if the blog post is a recent one.

A year is an eternity in the cloud computing business.

Microsoft’s LukaD puts the interdependence of software and hardware into more recent perspective in his Bringing the Windows Azure Cloud On-Premise! post of 7/16/2010:

Yep, it looks like, by popular demand, Microsoft is enabling the Windows Azure Platform inside your datacenter.

Windows Azure Appliance was announced during the keynote of the Worldwide Partner Conference; here is a brief description of the functionality:

“Windows Azure™ platform appliance is a turnkey cloud platform that customers can deploy in their own datacenter, across hundreds to thousands of servers. The Windows Azure platform appliance consists of Windows Azure, SQL Azure and a Microsoft-specified configuration of network, storage and server hardware. This hardware will be delivered by a variety of partners.”

Nice!

<Return to section navigation list>

Cloud Security and Governance

• David Tesar posted a 00:28:52 Cloud Security Panel Interview at TechEd NA 2010 Channel9 video segment to Microsoft TechNet on 7/16/2010:

Mark Russinovich, Andy Malone, and Patrick Hevesi give us insight into the cloud security space at TechEd North America with Ch9 Live. We dig into quite a few areas such as:

- What are some of the security tradeoffs among the different types of cloud offerings?

- What are the biggest concerns in regards to security with moving to the cloud?

- Can you trust the data security boundaries with cloud technologies like Windows Azure?