Windows Azure and Cloud Computing Posts for 1/4/2011+

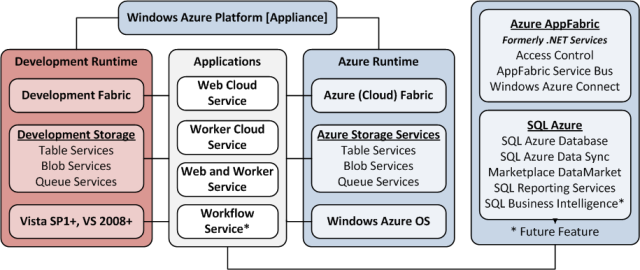

Windows Azure

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), VM Role, Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles so far today.

<Return to section navigation list>

SQL Azure Database and Reporting

Steve Yi described SQL Azure - The Year in Review in a 1/4/2011 post to the SQL Azure Blog:

Happy New Year everybody!

The year 2010 was an exciting one for developers, customers, and Microsoft as we all made the dive into the cloud with SQL Azure and the Windows Azure platform. The year 2011 is already shaping up to be even more exciting with what the industry is doing with cloud data.

The last year has been eventful – on February 1, 2010, SQL Azure went live. We also unveiled previews of SQL Azure Data Sync and SQL Azure Reporting to extend data and BI everywhere. The innovations we’re seeing from customers and partners are fantastic – you can learn more about how people are using the service by clicking here.

Rather than have me tell you how much we believe in our vision for cloud data, I encourage you to read the Forrester analyst report, “SQL Azure Raises the Bar On Cloud Databases” by Noel Yuhanna. It highlights SQL Azure’s ability to take on enterprise workloads and specifically calls out its unique multi-tenant, highly available architecture as a competitive advantage. Since then, we’ve been hard at work improving the core product and building out additional training and reference material.

Our new year’s resolution in 2011 is to make it even easier to use SQL Azure and directly impact your business, the project you’re working on, or the website you’re building. Did you know you can try SQL Azure for free? Visit here for more details. It literally takes less than 15 minutes to sign up.

As you embark on your journey to cloud data, here are a few sites you might want to bookmark:

- SQL Azure website: offers, case studies and videos

- SQL Azure blog: for the latest news

- SQL Azure MSDN developer center: for reference and technical information

- SQL Azure TechNet Wiki: for whitepapers and technical guidance

- Windows Azure Platform Training Kit: a great free developer resource with labs for learning how to use SQL Azure and the Windows Azure platform

Some great interviews in 2010 with SQL Azure subject matter experts are here:

- Kent McNall, CEO of Quosal & Stephen Yu, VP of Development, Quosal

- Vittorio Polizzi, CTO of EdisonWeb

- David Robinson, SQL Azure Sr PM, Microsoft

- Faisal Mohamood, Sr PM Entity Framework, Microsoft

- David Robinson, SQL Azure Sr PM, Microsoft (TechEd New Zealand)

Whitepapers and Wiki Articles for learning more about SQL Azure:

- Sharding with SQL Azure

- Inside SQL Azure

- Developing Applications for the Cloud on Microsoft Windows Azure Platform

- SQL Azure FAQ on the TechNet Wiki

In 2011, look for a series of instructional videos on how to develop and manage SQL Azure databases, more interviews with product team members and customers alike as well as breaking news on SQL Azure developments. If you’d like to see something unique from us, leave a comment.

Mark Kromer (@mssqldude) posted What 2011 Will Bring in Microsoft SQL Server Land on 1/3/2011:

In this blog, on SearchSQLServer, on the SQL Magazine BI Blog and on Twitter, I am going to continue to focus on these core areas that those of you who follow Microsoft SQL Server should watch in 2011: Oracle integration, best practices, BI visualizations and SQL 11 (Denali).

But what are Microsoft’s new investment areas, and what is new and exciting beyond the classic SQL Server 2005/2008/2011 topics? Let’s focus on these for 2011, because it is clear this is the direction in which Microsoft will take us:

- Cloud Computing (SQL Azure & Windows Azure)

- Database scale-out and load balancing with virtualization, SQL Azure and Parallel Data Warehouse

- Silverlight BI hyper-cool visualizations on PCs, Slate and Windows Phone 7

- How to take things to the next level with SQL 11

- Cloud BI

What else interests you in the SQL world in 2011?

<Return to section navigation list>

Marketplace DataMarket and OData

Malcolm Sheridan explained Using Helper Methods with ASP.NET Web Matrix Beta 3 on 1/4/2011 with the Northwind OData service as an example:

One of the great features of ASP.NET Web Pages is the ability to create reusable code through Helpers. Helpers are written either in Razor syntax or as class libraries in C# or VB.NET. Helpers always return IHtmlString, which represents an HTML-encoded string that should not be encoded again. Helpers can also be created in ASP.NET MVC 3 RC, but I thought I’d show you how to create and use these powerful language features in WebMatrix.

If you are new to ASP.NET WebMatrix, check out my article ASP.NET WebMatrix Beta 2 - Getting Started.

Before you begin you need to install the latest version of WebMatrix. The current release is Beta 3 and it can be installed easily through the Web Platform Installer. Once the install is finished you’re ready to begin.

Helpers reduce the code you need to write by implementing the Don’t Repeat Yourself (DRY) principle. For example, take the following scenario, where you want to print out a list of numbers.

In the scenario above, a variable called numbers holds a collection of integers, then using a foreach loop, they’re rendered in the browser. This is fine if you use this functionality in one place, but if you have to repeat it, then you should create a helper method. Helper methods can be created inside of WebMatrix, or alternatively they can be created in Visual Studio and referenced by your WebMatrix project.

Creating Helpers in WebMatrix

If you create Helpers inside of WebMatrix, you must first create a new folder called App_Code.

Any files inside of App_Code are compiled at runtime. The next step is to create the Helper. Start by adding a new cshtml or vbhtml file to the App_Code folder. For this example I’m going to create a Helper called CollectionHelper. This file can be called anything you like.

Now you’re ready to start coding! All Helpers are defined by the @helper keyword. Here’s the Helper method for the previous example.

The Helper method is Print. It accepts a collection of integers and renders them in the browser. A Helper’s return type is IHtmlString, and because Razor HTML-encodes the values you output with @ inside the Helper, you won’t be opening yourself up to XSS attacks. To use the new Helper, you reference it as if it were a static class.

Now this Helper can be called from any page and we won’t have to repeat ourselves.
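The Razor helper itself isn't reproduced here, but the idea works in any language: put the rendering loop in one static method and HTML-encode every value it emits. The following Java sketch is only an analogue of the Print helper, not WebMatrix code. The class name CollectionHelper mirrors the article, and htmlEncode is a hypothetical stand-in for the encoding Razor performs.

```java
import java.util.List;

// Java analogue of the article's Razor "Print" helper (illustrative sketch only).
final class CollectionHelper {
    private CollectionHelper() {} // static members only, called like a static class

    // Escapes the characters that are significant in HTML text and attribute values.
    static String htmlEncode(String s) {
        StringBuilder sb = new StringBuilder(s.length());
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '<': sb.append("&lt;"); break;
                case '>': sb.append("&gt;"); break;
                case '&': sb.append("&amp;"); break;
                case '"': sb.append("&quot;"); break;
                case '\'': sb.append("&#39;"); break;
                default: sb.append(c);
            }
        }
        return sb.toString();
    }

    // Renders every item of the collection as an encoded list item.
    static String print(List<?> items) {
        StringBuilder html = new StringBuilder("<ul>");
        for (Object item : items) {
            html.append("<li>").append(htmlEncode(String.valueOf(item))).append("</li>");
        }
        return html.append("</ul>").toString();
    }
}
```

Any page can then call CollectionHelper.print(List.of(1, 2, 3)), which returns `<ul><li>1</li><li>2</li><li>3</li></ul>`, instead of repeating the loop. A string item such as `<b>` comes back as `&lt;b&gt;`.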

Creating Helpers in Visual Studio

There are times when you need more functionality than rendering numbers, for example when you want to call a web service and return some data. This can be done in WebMatrix, but it’s much simpler to do in Visual Studio. To create Helpers in Visual Studio, you need to create a class library and then add that assembly to the bin folder of the WebMatrix project. The Helper I’m going to create returns data about the Northwind database via a public OData service. The public address is http://services.odata.org/OData/OData.svc/. First of all, open Visual Studio 2010 and create a new Class Library project. Add a Service Reference to the Northwind OData service. Here's the resulting code.

The GetProducts method returns an IHtmlString, which is filled with the description of each product from the database. Before using this assembly, jump back into WebMatrix and add a bin folder. Next, copy the Class Library assembly and paste it into the bin folder inside the WebMatrix project. By default, WebMatrix creates projects under C:\Users\{user id}\Documents\My Web Sites.

Using this Helper works just like using the Helper in the previous example.

And the results are below.

So now we're using WebMatrix to call a Helper that in turn calls a WCF OData service. Brilliant!
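A service reference like the one in the class library sends each query to the OData service as an HTTP GET on a URL that carries system query options. This Java sketch builds a URL of that shape using the standard OData options $select and $top. It is an illustration only, not the article's C# code, and it does not claim these are the exact options GetProducts sends. The ODataQuery class and its build method are hypothetical names.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Builds OData query URLs (illustrative sketch; URLEncoder.encode(String, Charset) needs Java 10+).
final class ODataQuery {
    private ODataQuery() {}

    // Returns e.g. serviceRoot + "Products?$select=Description&$top=5".
    // Option values are percent-encoded, so a comma in a $select list becomes %2C.
    static String build(String serviceRoot, String entitySet, String select, int top) {
        String root = serviceRoot.endsWith("/") ? serviceRoot : serviceRoot + "/";
        StringBuilder url = new StringBuilder(root).append(entitySet);
        char separator = '?';
        if (select != null && !select.isEmpty()) {
            url.append(separator).append("$select=")
               .append(URLEncoder.encode(select, StandardCharsets.UTF_8));
            separator = '&';
        }
        if (top > 0) {
            url.append(separator).append("$top=").append(top);
        }
        return url.toString();
    }
}
```

For example, `ODataQuery.build("http://services.odata.org/OData/OData.svc/", "Products", "Description", 5)` returns `http://services.odata.org/OData/OData.svc/Products?$select=Description&$top=5`. A browser or any HTTP client can request that URL directly.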

Helpers are a great way to stop yourself from duplicating code. Hopefully you use them.

The entire source code of this article can be downloaded here.

The Slangin’ Code blog explained Adding Basic Authentication to a WCF OData service (and authenticating via an odata4j client if you’re into that sort of thing) on 12/30/2010:

I’ve been slowly building my first Android application over the last several months. Along the way, I’ve also jumped headfirst into Java development. My C# background and excellent online documentation have made the transition quite simple, but consuming a WCF OData service from an Android application added a whole new set of challenges.

First, which format should I use to retrieve the data? At first, I looked into getting JSON back and using one of the various JSON libraries to do the parsing. This seemed messy and early experiments were not promising. After going down a few more rabbit holes, I stumbled upon the fledgling yet excellent odata4j library. odata4j did a great job of retrieving data from my WCF OData service and parsing it wasn’t too difficult. There are several examples on the project home page to help you get started. The Netflix OData Catalog was invaluable in getting the query syntax just right.

I won’t go into too much detail about the specifics of my Android application’s architecture (at least not in this post), but will mention that all of the data downloading and syncing is done via a background account sync process. This allows the application to access and edit the data without hanging during sync operations.

Now that the scene has been set, let’s talk WCF OData service authentication. First, I’ll save us both some time and credit the code for securing the WCF service to this great MSDN blog post. That post walks you through the creation of an HTTP module and a custom authentication provider. The user name and password are Base64 encoded and passed to the service via the request header with each request. The custom authentication provider handles verifying credentials against a database, etc. If that’s all you were looking for, that blog has everything you need. Case closed. If you then need to authenticate against that WCF OData service using odata4j, read on playa.

I knew that to add the Base64-encoded credentials to the request header, I’d have to somehow hook into the point where the odata4j ODataConsumer is created. After reading a bit about the OClientBehavior parameter in one of the overloads of ODataConsumer.create(), I looked for examples. The only one I could find was the AzureTableBehavior class. I studied the class a bit and used it as a template for implementing the AuthenticatedTableBehavior class below.

```java
import android.accounts.Account;
import android.accounts.AccountManager;
import android.content.Context;

import org.apache.commons.codec.binary.Base64;

// The odata4j types OClientBehavior and ODataClientRequest must also be imported;
// their package depends on the odata4j version you use.

public class AuthenticatedTableBehavior implements OClientBehavior {
    private final String userId;
    private final String password;

    public AuthenticatedTableBehavior(Context context, Account account) {
        // Read the stored credentials for this account from the AccountManager.
        AccountManager am = AccountManager.get(context);
        this.userId = account.name;
        this.password = am.getPassword(account);
    }

    @Override
    public ODataClientRequest transform(ODataClientRequest request) {
        try {
            // HTTP Basic authentication: "Basic " + Base64("user:password").
            String credentials = userId + ":" + password;
            byte[] credentialBytes = credentials.getBytes("UTF-8");
            String encodedCredentials = base64Encode(credentialBytes);
            // Return the request produced by header() so the added header is kept.
            return request.header("Authorization", "Basic " + encodedCredentials);
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }

    private static String base64Encode(byte[] value) {
        // Some commons-codec versions append a line separator; trim() removes it.
        return Base64.encodeBase64String(value).trim();
    }
}
```

Once you have added the above class to your project, simply pass an instance of the AuthenticatedTableBehavior class to the ODataConsumer.create() method, similar to:

```java
ODataConsumer consumer = ODataConsumer.create(ROOT_URL, new AuthenticatedTableBehavior(context, account));
```

Note that you’ll have to pass an instance of android.content.Context and android.accounts.Account. Both will be passed to your sync service if you’re using account synchronization to retrieve your data. You’ll need to adjust the AuthenticatedTableBehavior class to fit your needs if you’re doing something different.
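Both ends of this scheme are plain HTTP Basic authentication. The client sends `Authorization: Basic` followed by the Base64 encoding of `user:password`, and the server-side module decodes that value before checking the credentials. The following Java sketch shows both halves using java.util.Base64 (Java 8+) instead of Apache Commons Codec. The BasicAuth class is a hypothetical name, and this is not the MSDN post's .NET module. Base64 is an encoding, not encryption, so these headers belong on HTTPS connections only.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// HTTP Basic authentication helpers (illustrative sketch, not the MSDN post's code).
final class BasicAuth {
    private BasicAuth() {}

    private static final String PREFIX = "Basic ";

    // Client side: value for the "Authorization" request header.
    static String header(String user, String password) {
        String token = user + ":" + password;
        return PREFIX + Base64.getEncoder().encodeToString(token.getBytes(StandardCharsets.UTF_8));
    }

    // Server side: returns {user, password}, or null if the header is missing,
    // is not a Basic header, or is malformed.
    static String[] parse(String headerValue) {
        if (headerValue == null
                || !headerValue.regionMatches(true, 0, PREFIX, 0, PREFIX.length())) {
            return null;
        }
        byte[] decoded;
        try {
            decoded = Base64.getDecoder().decode(headerValue.substring(PREFIX.length()).trim());
        } catch (IllegalArgumentException e) {
            return null; // not valid Base64
        }
        String credentials = new String(decoded, StandardCharsets.UTF_8);
        int colon = credentials.indexOf(':'); // the user id may not contain ':', the password may
        if (colon < 0) {
            return null;
        }
        return new String[] { credentials.substring(0, colon), credentials.substring(colon + 1) };
    }
}
```

For example, `BasicAuth.header("Aladdin", "open sesame")` returns `Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ==`, and passing that value to `parse` yields the original user name and password.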

Let me know if this is helpful to you and thanks in advance for any feedback.

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

Buck Woody posted his Windows Azure Learning Plan - Application Fabric on 1/4/2011:

This is one in a series of posts on a Windows Azure Learning Plan. You can find the main post here. This one deals with the Application Fabric for Windows Azure, which serves three main purposes: Access Control, Caching and Service Bus.

Overview and Training

Overview and general information about the Azure Application Fabric: what it is, how it works, and where you can learn more.

General Introduction and Overview

Access Control Service Overview

Microsoft Documentation

http://msdn.microsoft.com/en-gb/windowsazure/netservices.aspx

Learning and Examples

Sources for online and other Azure Application Fabric training

Application Fabric SDK

Application Fabric Caching Service Primer

Hands-On Lab: Building Windows Azure Applications with the Caching Service

Architecture

Azure Application Fabric Internals and Architectures for Scale Out and other use-cases.

Azure Application Fabric Architecture Guide

Windows Azure AppFabric Service Bus - A Deep Dive (Video)

Access Control Service (ACS) High Level Architecture

Applications and Programming

Programming Patterns and Architectures for SQL Azure systems.

Various Examples from PDC 2010 on using Azure Application Fabric as a Service Bus

Creating a Distributed Cache using the Application Fabric

Azure Application Fabric Java SDK

<Return to section navigation list>

Windows Azure Virtual Network, Connect, RDP and CDN

Michael Desmond described how BizTalk Server 2010 exposes LOB functionality beyond the firewall via Windows Azure AppFabric Connect in his Line-of-Business Dev in 2011 article of 1/1/2011 for Visual Studio Magazine:

With all the activity around mobile and Web technologies, it's easy to think that Microsoft might take its eye off the ball in the area of line-of-business (LOB) development. However, according to Rob Sanfilippo, analyst for research firm Directions on Microsoft, business developers actually have a lot to look forward to in 2011.

BizTalk 2010, launched in October, provides new ways to expose LOB functionality beyond the firewall via Windows Azure AppFabric Connect.

"This service can reduce the development burden by allowing Windows Workflow Foundation activities to be dropped into a workflow designer and tied into LOB applications through BizTalk adapters," Sanfilippo wrote in an e-mail interview. "Workflows can be hosted and managed with less effort now using Windows Server AppFabric hosting."

The upcoming SQL Azure Reporting Services will also enable remote access to LOB data stored in the cloud or on-premises.

Office 2010 Business Connectivity Services (BCS) also earned mention. Sanfilippo said the new Duet Enterprise product, which links SAP LOB applications to Office via SharePoint BCS, illustrates how BCS can link diverse data sources. "Duet itself is not new, but its use of BCS is, and since Duet Enterprise could find a decently sized customer base, it will be a notable step toward legitimizing the BCS technology," Sanfilippo wrote.

One technology we might see less of in 2011, according to Sanfilippo, is Windows Presentation Foundation (WPF). "With the introduction of out-of-browser support in Silverlight 3, WPF may have lost a lot of its remaining loyalists."

Michael is the editor in chief of the Redmond Developer News online magazine.

Full disclosure: I’m a contributing editor for Visual Studio Magazine and write articles for Redmond Developer News.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Riccardo Becker described Things to consider when migrating to Azure part 2 in a 1/4/2011 post:

Here are some other issues I stumbled upon while teaching myself about, and researching, the migration of on-premises apps to Azure. As mentioned before, just having things run in the cloud is not that difficult, but having them run in a scalable, well-designed and cost-efficient way that fully uses the possibilities of Azure is something different. Here are some other things to consider.

- To qualify for the SLAs, you need to ensure that your app runs at least two instances of each role (an instance count of 2 in the service configuration file, ServiceConfiguration.cscfg, for each web, worker or VM role you deploy).

- To make things as easy as possible and keep changes to a minimum, consider using the SQL Azure Migration Wizard to migrate on-premises databases to SQL Azure databases (http://sqlazuremw.codeplex.com/).

- Moving your intranet applications to Azure probably requires changes to your authentication code. Intranet apps commonly use Active Directory (AD) for authentication. Web apps in the cloud can still use your AD information, but you need to set up AD federation or use a mechanism such as Azure Connect to enable the use of AD in your cloud environment.

- After migrating your SQL Server database to the cloud, you need to change your connection string. Also realize that you connect directly to a specific database: SQL Azure does not support the USE statement for switching databases in your code. It is also not possible to use Windows Authentication. Encrypt your web.config or any other config files that contain your connection strings.

- It’s a good habit to treat your application as "insecure" at all times and to use a proper threat model to pinpoint possible security breaches. This keeps you alert in every design decision you make regarding security. See http://msdn.microsoft.com/en-us/library/ms998283.aspx for how to encrypt your configuration files using RSA.

- You can add security to your SQL Azure assets by using the SQL Azure firewall, which lets you specify the IP addresses that are allowed to connect to your SQL Azure database.
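To make the connection-string points concrete, here is the shape of a typical SQL Azure connection string. It names the target database directly, uses a SQL Server login in the login@server form rather than Windows Authentication, and requests encryption. The Java sketch below only assembles such an ADO.NET-style string. The class and method names are hypothetical, and the server, database and login values are placeholders.

```java
// Assembles an ADO.NET-style SQL Azure connection string (illustrative sketch).
final class SqlAzureConnectionString {
    private SqlAzureConnectionString() {}

    static String build(String server, String database, String login, String password) {
        for (String value : new String[] { server, database, login, password }) {
            // This sketch does not quote values, so it rejects the ';' separator outright.
            if (value == null || value.isEmpty() || value.contains(";")) {
                throw new IllegalArgumentException("missing value or value containing ';'");
            }
        }
        return "Server=tcp:" + server + ".database.windows.net,1433;"
                + "Database=" + database + ";"            // connect straight to the database (no USE)
                + "User ID=" + login + "@" + server + ";" // SQL login, not Windows Authentication
                + "Password=" + password + ";"
                + "Trusted_Connection=False;"
                + "Encrypt=True;";
    }
}
```

Keep the resulting string in an encrypted configuration section rather than in source code, as the bullets above recommend.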

I'll post more on this blog when I stumble upon more...

I agree with Riccardo’s choice of the SQL Azure Migration Wizard to migrate on-premises SQL Server databases to SQL Azure. The SQL Server Migration Assistants (SSMAs) for Access and MySQL support direct migration to SQL Azure; the SSMAs for Oracle and Sybase probably will be upgraded to support SQL Azure migration in 2011.

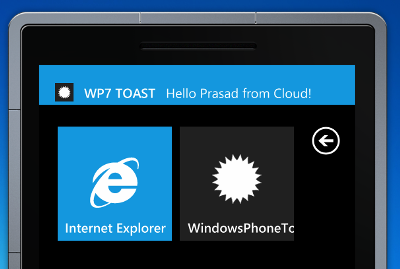

Renuka Prasad (@prasad02) updated his Windows Phone 7 – Toast Notification Using Windows Azure Cloud Service Code Project app on 12/26/2010 (missed when updated):

Windows Phone 7 – Step by step: how to create a WP7 toast notification using a Windows Azure Cloud Service with a WCF Service Web Role

Content

- Introduction

- MyCloudService - Windows Azure Cloud Service With WCF Service Web Role

- WpfAppNotificationComposer - WPF Application To Send Notification

- WindowsPhoneToast – Windows Phone Application To Receive Notification

- History

- My Other Posts In CodeProject

Introduction

In this post I am going to show, step by step, how to create a toast notification on Windows Phone 7.

Before you start, you need to understand Microsoft Push Notification. To understand Windows Phone 7 notifications and their flow, please read my earlier post:

Microsoft Push Notification in Windows Phone 7 - In Code Project

Please watch the 2-minute YouTube demo:

Toast - Microsoft Push Notification In Windows Phone 7 (WP7)

MyCloudService - Windows Azure Cloud Service With WCF Service Web Role

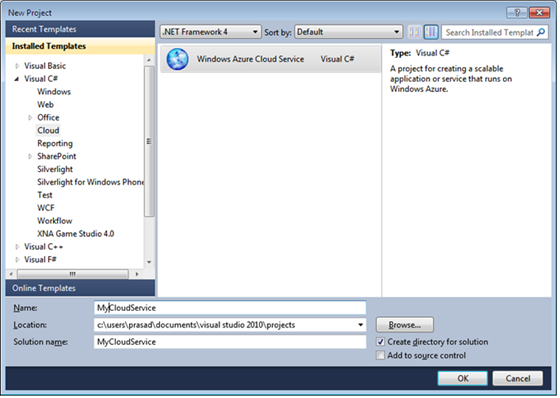

First, let’s start by creating the “Windows Azure Cloud Service with WCF Service Web Role”.

Start Visual Studio 2010 as administrator (UAC).

From the File->New->Project menu, choose the “Windows Azure Cloud Service” project template and name it MyCloudService.

In the New Cloud Service Project dialog, select WCF Service Web Role, add it to the cloud service solution list and click OK.

In the IService1.cs interface file, delete the auto-generated code for the composite type (the [DataContract]) and its methods (the [OperationContract] members), and create two [OperationContract] interface methods as shown below. One is used by the Windows Phone 7 app to subscribe, and the other is used by the WPF application to send the notification.


```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Runtime.Serialization;
using System.ServiceModel;
using System.ServiceModel.Web;
using System.Text;

namespace WCFServiceWebRole1
{
    [ServiceContract]
    public interface IService1
    {
        [OperationContract]
        void Subscribe(string uri);

        [OperationContract]
        string SendToastNotification(string message);
    }
}
```

Create the DeviceRef class with a public ChannelURI property.


```csharp
public class DeviceRef
{
    public DeviceRef(string channelURI)
    {
        ChannelURI = channelURI;
    }

    public string ChannelURI { get; set; }
}
```

In the Service1.svc.cs file, implement the interface methods. Below is the complete code:


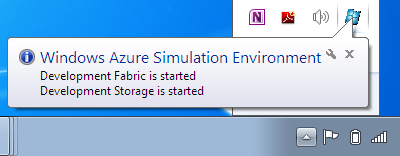

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Runtime.Serialization;
using System.ServiceModel;
using System.ServiceModel.Web;
using System.Text;
// add the following references
using System.Collections.ObjectModel; // for Collection<T>
using System.Net;                     // for HttpWebRequest, HttpWebResponse
using System.IO;                      // for Stream
using System.Security;                // for SecurityElement.Escape

namespace WCFServiceWebRole1
{
    public class Service1 : IService1
    {
        // static collection that holds the subscribed channel URIs
        static Collection<DeviceRef> DeviceRefCollection = new Collection<DeviceRef>();
        // WCF can call the service on several threads, so guard the shared collection
        static readonly object SyncRoot = new object();

        // implementation of the Subscribe method, which accepts a channel URI;
        // in this example it is called from the Windows Phone 7 application
        public void Subscribe(string channelURI)
        {
            lock (SyncRoot)
            {
                DeviceRefCollection.Add(new DeviceRef(channelURI));
            }
        }

        // implementation of the SendToastNotification method, which accepts a message;
        // in this example it is called from the WPF application
        public string SendToastNotification(string message)
        {
            string notificationStatus = "";
            string notificationChannelStatus = "";
            string deviceConnectionStatus = "";

            // take a snapshot so the lock is not held during network calls
            List<DeviceRef> devices;
            lock (SyncRoot)
            {
                devices = DeviceRefCollection.ToList();
            }

            // send the toast to every subscribed device/Windows Phone app
            foreach (DeviceRef item in devices)
            {
                // create the HttpWebRequest to the Microsoft Push Notification Service
                // (this requires an Internet connection)
                HttpWebRequest request = (HttpWebRequest)WebRequest.Create(item.ChannelURI);
                request.Method = "POST";
                request.ContentType = "text/xml; charset=utf-8";
                request.Headers["X-MessageID"] = Guid.NewGuid().ToString();
                request.Headers.Add("X-NotificationClass", "2");
                request.Headers.Add("X-WindowsPhone-Target", "toast");

                // toast message template; the message is escaped so it cannot break the XML
                string notificationData = "<?xml version=\"1.0\" encoding=\"utf-8\"?>" +
                    "<wp:Notification xmlns:wp=\"WPNotification\">" +
                    "<wp:Toast>" +
                    "<wp:Text1>WP7 TOAST</wp:Text1>" +
                    "<wp:Text2>" + SecurityElement.Escape(message) + "</wp:Text2>" +
                    "</wp:Toast>" +
                    "</wp:Notification>";

                // encode as UTF-8 to match the declared charset
                byte[] contents = Encoding.UTF8.GetBytes(notificationData);
                request.ContentLength = contents.Length;

                // write to the request stream
                using (Stream requestStream = request.GetRequestStream())
                {
                    requestStream.Write(contents, 0, contents.Length);
                }

                // read the status headers returned by the Microsoft Push Notification Service
                using (HttpWebResponse response = (HttpWebResponse)request.GetResponse())
                {
                    notificationStatus = response.Headers["X-NotificationStatus"];
                    notificationChannelStatus = response.Headers["X-SubscriptionStatus"];
                    deviceConnectionStatus = response.Headers["X-DeviceConnectionStatus"];
                }
            }

            // return the status to the WPF application
            return notificationStatus + " : " + notificationChannelStatus + " : " + deviceConnectionStatus;
        }
    }

    public class DeviceRef
    {
        public DeviceRef(string channelURI)
        {
            ChannelURI = channelURI;
        }

        public string ChannelURI { get; set; }
    }
}
```

Build and run the Windows Azure Cloud Service project. The icon below, for the development fabric and development storage, appears in the system tray.

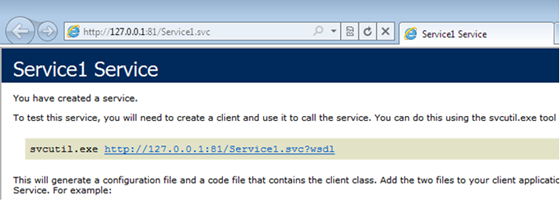

Verify the IP address and port in the development fabric.

The browser launches with the same IP address and port number. If Service1.svc is not found, append the service name (for example, Service1.svc) to the end of the URL.

Now the “Windows Azure cloud service with WCF web role” is ready to serve.
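The toast request that SendToastNotification posts to the push notification service is just an XML body plus a few headers. This Java sketch rebuilds the same payload and header set outside .NET, for example so you can inspect or unit-test it. The ToastPayload class is a hypothetical name. The sketch XML-escapes the text fields so that characters such as < or & cannot break the document.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Rebuilds the Windows Phone 7 toast request used by Service1 (illustrative sketch).
final class ToastPayload {
    private ToastPayload() {}

    // Escapes the characters that are significant in XML text.
    static String xmlEscape(String s) {
        return s.replace("&", "&amp;") // must run first
                .replace("<", "&lt;")
                .replace(">", "&gt;")
                .replace("\"", "&quot;")
                .replace("'", "&apos;");
    }

    // Same XML template as the C# service, with both text fields escaped.
    static String body(String title, String message) {
        return "<?xml version=\"1.0\" encoding=\"utf-8\"?>"
                + "<wp:Notification xmlns:wp=\"WPNotification\">"
                + "<wp:Toast>"
                + "<wp:Text1>" + xmlEscape(title) + "</wp:Text1>"
                + "<wp:Text2>" + xmlEscape(message) + "</wp:Text2>"
                + "</wp:Toast>"
                + "</wp:Notification>";
    }

    // Headers the C# service sets on its HttpWebRequest.
    static Map<String, String> headers(String messageId) {
        Map<String, String> h = new LinkedHashMap<>();
        h.put("Content-Type", "text/xml; charset=utf-8");
        h.put("X-MessageID", messageId);
        h.put("X-NotificationClass", "2"); // toast class 2: deliver immediately
        h.put("X-WindowsPhone-Target", "toast");
        return h;
    }
}
```

To send the toast, you would POST `body(...)`, encoded as UTF-8, to the device's channel URI with these headers. The Java sketch stops at building the request.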

WpfAppNotificationComposer - WPF Application To Send Notification

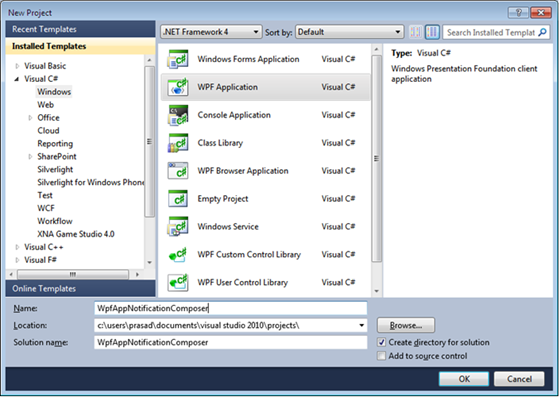

Second, let’s create a new project: the “WPF Application to Send Notification”.

Create a new WPF Application named “WpfAppNotificationComposer”, as shown below.

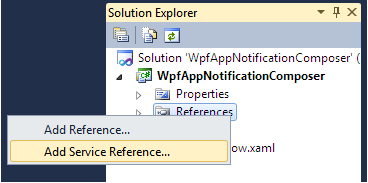

Go to reference in the solution explorer and right click on that and choose Add Service Reference… In the popup menu as shown below.

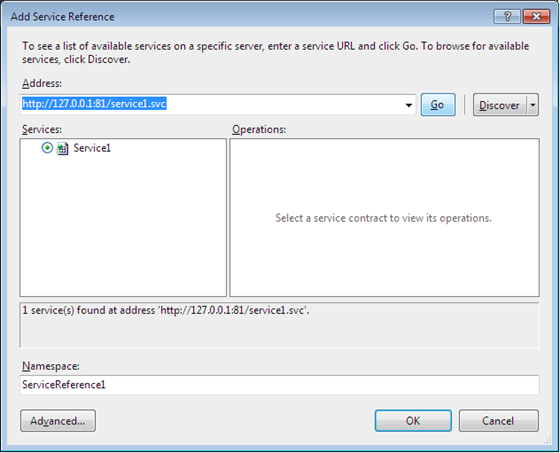

In the Add Service Reference Dialog, Enter the URL of the cloud service in the Address:, in this case it is http://127.0.0.1:81/Service1.svc

Give the name to the Namespace: ServiceReference1

Click on Go button and then Click on Ok button once the Service1 is listed as below in the Services:

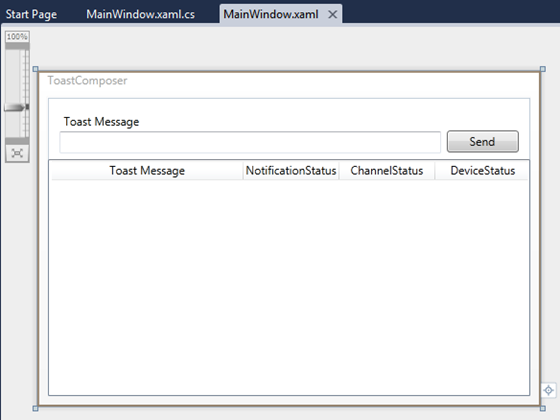

MainWindow.xaml

In MainWindow.xaml, add four controls (a Label, a TextBox, a Button and a ListView) and lay them out as shown below.

Below is the complete MainWindow.xaml code:


```xml
<Window x:Class="WpfAppNotificationComposer.MainWindow"
        xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
        xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
        Title="ToastComposer" Height="350" Width="525">
    <Grid>
        <TextBox Height="23" HorizontalAlignment="Left" Margin="12,35,0,0" Name="txtToast" VerticalAlignment="Top" Width="398" />
        <Button Content="Send" Height="23" HorizontalAlignment="Right" Margin="0,34,12,0" Name="btnSend" VerticalAlignment="Top" Width="75" Click="btnSend_Click" />
        <Label Content="Toast Message" Height="28" HorizontalAlignment="Left" Margin="12,12,0,0" Name="lblToast" VerticalAlignment="Top" />
        <ListView Margin="0,64,0,0" x:Name="lvStatus">
            <ListView.View>
                <GridView>
                    <GridViewColumn Width="200" Header="Toast Message" DisplayMemberBinding="{Binding Path=ToastMessage}" />
                    <GridViewColumn Width="100" Header="NotificationStatus" DisplayMemberBinding="{Binding Path=NotificationStatus}" />
                    <GridViewColumn Width="100" Header="ChannelStatus" DisplayMemberBinding="{Binding Path=ChannelStatus}" />
                    <GridViewColumn Width="100" Header="DeviceStatus" DisplayMemberBinding="{Binding Path=DeviceStatus}" />
                </GridView>
            </ListView.View>
        </ListView>
    </Grid>
</Window>
```

In MainWindow.xaml.cs, create a ToastStatus class and a Service1Client object for the cloud service. The Send button’s click event handler calls the cloud service and adds the returned status to the ListView.


```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Windows;
using System.Windows.Controls;
using System.Windows.Data;
using System.Windows.Documents;
using System.Windows.Input;
using System.Windows.Media;
using System.Windows.Media.Imaging;
using System.Windows.Navigation;
using System.Windows.Shapes;

namespace WpfAppNotificationComposer
{
    /// <summary>
    /// Interaction logic for MainWindow.xaml
    /// </summary>
    public partial class MainWindow : Window
    {
        // Service1 client for the cloud service
        private ServiceReference1.Service1Client proxy = null;

        public MainWindow()
        {
            InitializeComponent();
        }

        private void btnSend_Click(object sender, RoutedEventArgs e)
        {
            if (proxy == null)
            {
                proxy = new ServiceReference1.Service1Client();
            }

            // the service returns "notificationStatus : subscriptionStatus : deviceStatus"
            string status = proxy.SendToastNotification(txtToast.Text);
            string[] words = status.Split(':');
            lvStatus.Items.Add(new ToastStatus(txtToast.Text.Trim(), words[0].Trim(), words[1].Trim(), words[2].Trim()));
        }
    }

    public class ToastStatus
    {
        public ToastStatus(string toastMessage, string notificationStatus, string channelStatus, string deviceStatus)
        {
            ToastMessage = toastMessage;
            NotificationStatus = notificationStatus;
            ChannelStatus = channelStatus;
            DeviceStatus = deviceStatus;
        }

        public string ToastMessage { get; set; }
        public string NotificationStatus { get; set; }
        public string ChannelStatus { get; set; }
        public string DeviceStatus { get; set; }
    }
}
```

Build and run the project.

Now the “WPF Application to send notification” is ready to compose and send toast messages.

WindowsPhoneToast – Windows Phone Application To Receive Notification

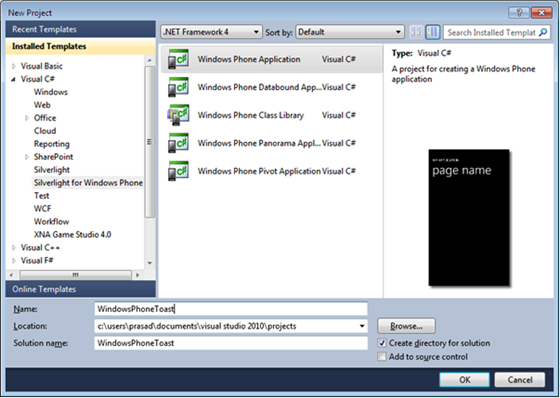

Third and finally, let’s create a new project: the “Windows Phone Application to Receive Notification”.

Start Visual Studio 2010 as administrator (UAC).

In the New Project dialog, choose the “Windows Phone Application” template, name it “WindowsPhoneToast” and click OK.

Add a service reference to the cloud service in the same way as in the WpfAppNotificationComposer project, and enter MyCloudServiceReference1 as the Namespace.

In App.xaml.cs, add the following using directives:


```csharp
// add namespaces
using Microsoft.Phone.Notification; // for HttpNotificationChannel
using System.Diagnostics;           // for Debug.WriteLine
```

Declare a class-level channel variable:


```csharp
// declare the NotificationChannel variable
public static HttpNotificationChannel channel;
```

In the public App() constructor, add the notification-related code below the call to InitializePhoneApplication():


```csharp
// Phone-specific initialization
InitializePhoneApplication();

// Find the existing channel by name ("MyChannel" is our own channel name);
// if it is not found, create a new channel.
channel = HttpNotificationChannel.Find("MyChannel");
if (channel == null)
{
    // create a new channel
    channel = new HttpNotificationChannel("MyChannel");

    // After Open(), the phone contacts the Microsoft Push Notification Service
    // (an Internet connection is required) and raises ChannelUriUpdated
    // asynchronously with the unique channel URI. Attaching the handler before
    // Open() is enough; there is no need to block the UI thread with Thread.Sleep.
    channel.ChannelUriUpdated += new EventHandler<NotificationChannelUriEventArgs>(channel_ChannelUriUpdated);
    channel.Open();
}

if (!channel.IsShellToastBound)
    channel.BindToShellToast();

channel.ErrorOccurred += new EventHandler<NotificationChannelErrorEventArgs>(channel_ErrorOccurred);
} // end of the App() constructor
```

Add the following event handlers:


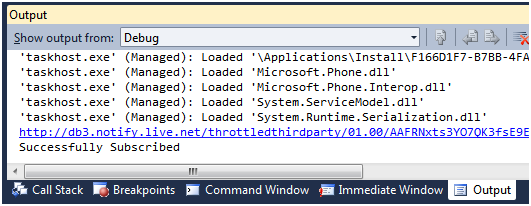

```csharp
void channel_ErrorOccurred(object sender, NotificationChannelErrorEventArgs e)
{
    Debug.WriteLine("An error occurred while initializing the notification channel");
    Debug.WriteLine(e.Message);
}

void channel_ChannelUriUpdated(object sender, NotificationChannelUriEventArgs e)
{
    MyCloudServiceReference1.Service1Client proxy = new MyCloudServiceReference1.Service1Client();

    // write the channel URI to the Output window
    Debug.WriteLine(e.ChannelUri.AbsoluteUri);

    // register the channel URI with the cloud service
    proxy.SubscribeCompleted += new EventHandler<System.ComponentModel.AsyncCompletedEventArgs>(proxy_SubscribeCompleted);
    proxy.SubscribeAsync(e.ChannelUri.AbsoluteUri);
}

void proxy_SubscribeCompleted(object sender, System.ComponentModel.AsyncCompletedEventArgs e)
{
    // write the subscription status to the Output window
    if (e.Error != null)
        Debug.WriteLine("Subscription failed: " + e.Error.Message);
    else
        Debug.WriteLine("Successfully Subscribed");
}
```

We’re done with the coding. Now launch the Windows Phone 7 app in the emulator.

While this Windows Phone 7 application runs, it obtains a unique channel URI from the Microsoft Push Notification Service, as shown below, and subscribes that URI to the cloud service written above. If that cloud service is not already running, launch it first.

Once the application is running on the emulator, pin it to the Start (home) screen. For the steps, see my earlier post:

How to Pin/Un Pin applications to Start/Home Screen on Windows Phone 7/Tiles on Windows Phone 7

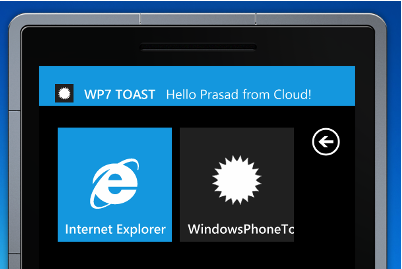

Stay on the Start screen of the Windows Phone 7 emulator after pinning the WindowsPhoneToast application.

Now run ToastComposer, the WPF application created above.

Enter the toast message and click the Send button.
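ToastComposer's source is not reproduced in this part of the post. As a hedged sketch of what a sender does when you click Send, assuming the documented Windows Phone 7 toast format: it POSTs a small XML payload to the device's channel URI with the headers `X-WindowsPhone-Target: toast` and `X-NotificationClass: 2` (deliver immediately). The sketch uses `HttpRequestMessage` from `System.Net.Http`, which may not be what ToastComposer itself uses; `BuildToastXml`, `BuildToastRequest` and the example URI are hypothetical.

```csharp
using System;
using System.Linq;
using System.Net.Http;
using System.Security;
using System.Text;

// Builds the XML body of a Windows Phone 7 toast notification.
// Text1 is the bold title and Text2 the message; both are XML-escaped.
string BuildToastXml(string title, string message) =>
    "<?xml version=\"1.0\" encoding=\"utf-8\"?>" +
    "<wp:Notification xmlns:wp=\"WPNotification\">" +
    "<wp:Toast>" +
    "<wp:Text1>" + SecurityElement.Escape(title) + "</wp:Text1>" +
    "<wp:Text2>" + SecurityElement.Escape(message) + "</wp:Text2>" +
    "</wp:Toast>" +
    "</wp:Notification>";

// Wraps the payload in the HTTP POST that is sent to the device's channel URI.
HttpRequestMessage BuildToastRequest(Uri channelUri, string title, string message)
{
    var request = new HttpRequestMessage(HttpMethod.Post, channelUri)
    {
        Content = new StringContent(BuildToastXml(title, message), Encoding.UTF8, "text/xml")
    };
    // Tells the push service this is a toast (not a tile or raw notification).
    request.Headers.Add("X-WindowsPhone-Target", "toast");
    // Class 2 = send immediately (12 and 22 batch within 450 or 900 seconds).
    request.Headers.Add("X-NotificationClass", "2");
    return request;
}

// Hypothetical URI; in practice use the one printed by channel_ChannelUriUpdated.
var req = BuildToastRequest(new Uri("http://example.invalid/channel"), "ToastComposer", "Hello & welcome");
Console.WriteLine(req.Content.ReadAsStringAsync().Result);
```

Sending it is then a matter of `await new HttpClient().SendAsync(req)` and reading the status code and `X-NotificationStatus` header from the response. Keeping the payload builder separate from the network call makes the XML easy to inspect and test.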

To interpret the status returned, see Push Notification Service Response Codes for Windows Phone on MSDN.

Now go back to the Windows Phone 7 emulator and verify the toast message. Even when the application is not running, it still receives the toast as long as it is pinned to Start.
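The MSDN page on response codes maps each combination of HTTP status and response headers to a meaning. As a rough sketch (my own summary of that table, so verify it against MSDN before relying on it), a sender such as ToastComposer might turn the HTTP status code and the `X-NotificationStatus` response header into a next action like this; `NextAction` is a hypothetical helper.

```csharp
using System;

// Hypothetical helper: maps the HTTP status code and the X-NotificationStatus
// header returned by the push service to what the sender should do next.
string NextAction(int httpStatus, string notificationStatus) => (httpStatus, notificationStatus) switch
{
    (200, "Received")   => "Accepted by MPNS and queued for delivery",
    (200, "QueueFull")  => "Retry later",
    (200, "Suppressed") => "Dropped by MPNS for this notification class (e.g. toast not bound)",
    (400, _)            => "Fix the request (malformed XML payload or headers)",
    (401, _)            => "Fix authentication (certificate problem)",
    (404, _)            => "Stop sending and discard the channel URI (subscription expired)",
    (405, _)            => "Send the notification with HTTP POST",
    (406, _)            => "Throttled: per-day limit for unauthenticated services reached",
    (412, _)            => "Device inactive: retry later, no more than once an hour",
    (503, _)            => "Service unavailable: retry later with back-off",
    _                   => "Unexpected response: log it and investigate"
};

Console.WriteLine(NextAction(404, "Dropped"));
```

The important design point is the 404 case: an expired subscription means the stored channel URI is dead, so the cloud service should remove it rather than keep retrying.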

<Return to section navigation list>

Visual Studio LightSwitch

Kunal Chowdhury continued his LightSwitch series with a Beginners Guide to Visual Studio LightSwitch (Part - 5) post of 1/4/2011 to the Silverlight Show blog:


This article is Part 5 of the series “Beginners Guide to Visual Studio LightSwitch”:

- Beginners Guide to Visual Studio LightSwitch (Part – 1) – Working with New Data Entry Screen

- Beginners Guide to Visual Studio LightSwitch (Part – 2) – Working with Search Screen

- Beginners Guide to Visual Studio LightSwitch (Part – 3) – Working with Editable DataGrid Screen

- Beginners Guide to Visual Studio LightSwitch (Part – 4) – Working with List and Details Screen

- Beginners Guide to Visual Studio LightSwitch (Part – 5) – Working with Custom Validation

Visual Studio LightSwitch is a new tool for building data-driven Silverlight applications using the Visual Studio IDE. It automatically generates the user interface for a data source without your writing any code, although you can write a small amount of code to meet your requirements.

In my previous chapter, “Beginners Guide to Visual Studio LightSwitch (Part – 4)”, I guided you step by step through creating a List and Details screen and demonstrated how to integrate two or more tables in a single screen.

In this chapter, I am going to demonstrate how to do custom validation using Visual Studio LightSwitch. This time we will write some code to extend the functionality.

Background

If you are new to Visual Studio LightSwitch, I suggest you first read the previous four chapters of this tutorial, where I demonstrated it in detail. In my 4th chapter, I discussed the following topics:

- Creating the List and Details Screen

- UI Screen Features

- Adding a New Table

- Creating the Validation Rules

- Adding Relationship between two tables

- Creating the new List and Details Screen

- Application in Action

In this chapter we will learn how to write custom validation for our table fields. Read it and start implementing your own logic. For the first time in this series, we will jump into code. Enjoy the tutorial.

Setting up the basic application

I hope you have already read the first four chapters mentioned above; if not, read them first, as they give you the basics. Let’s start creating our new LightSwitch project. You know how to do that: select the “LightSwitch Application for C#” template in the New Project dialog, give the project a proper name and location, and click “OK” to create the basic project.

Give Visual Studio 2010 some time to create the project. Once it has been created, you will see the screen below in the Visual Studio IDE:

Now create a new table to start the application: a simple table to which we can add some custom validation logic. Choose your fields as per your requirements. For our sample, we will create an Employee table with four custom columns: “Firstname” (String), “Lastname” (String), “InTime” (DateTime) and “OutTime” (DateTime), to store each employee’s in and out times for a particular day.

Here is the table structure for your reference:

Check “Required” for a field to have the tool do the null validation automatically, so we don’t have to do it explicitly. I demonstrated this in my earlier chapters; read them to understand it.

Validating Fields

Now it’s time to set up the validation for each field, starting with “Firstname”. Click the row marked in the screenshot below, then go to the Properties panel and scroll to the end. There you will find a group named “Validation”, which holds all the validation settings for the selected field. Have a look at it here:

As the “Firstname” field is a String, you will see only “Maximum Length” as the default validation rule; you can change the field’s maximum length here. To write a custom validation rule, click the “Custom Validation” link in the Properties panel. See the screenshot above for details.

Let us check another field, this time a DateTime field. Select InTime as shown below and check the Properties panel. In the Validation group you will now see two fields, “Minimum Value” and “Maximum Value”, where you can specify your own values.

As mentioned above, there is also a “Custom Validation” link here, which lets you write your own validation logic. Let’s do it: this is the first time we are going to write code for a LightSwitch application.

Click the “Custom Validation” link in the Properties panel. This opens the familiar code editor window in the IDE, with a class file named “Employee” (the name of the table) opened for you. It contains a single event, which looks like this:

You can put whatever validation logic you want here. Before doing anything, let me show you the API for reporting a validation error. The parameter “results”, of type “EntityValidationResultsBuilder”, exposes several methods. To report a property validation error, use the “AddPropertyError()” method to return the error message to the end user.

Have a look at the APIs in the screenshot below:

As per our application’s requirements, an employee’s OutTime must be after the InTime; an employee can’t go out without first coming in. So, let’s write the logic for that: if InTime is greater than OutTime (through an end user’s mistake, of course), we will show an error message using AddPropertyError(), which takes the message as a string.
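The code screenshots are not reproduced here, so as a hedged sketch of the rule itself (not LightSwitch code), the two comparisons can be written as plain C# functions that return an error message or null. In the LightSwitch project the same comparison would sit inside the validation method the designer generates for each field (I assume it is named along the lines of `InTime_Validate(EntityValidationResultsBuilder results)`; check the generated code), with the message passed to `results.AddPropertyError(...)`. The function names and message texts below are hypothetical.

```csharp
using System;

// The rule only: returns an error message, or null when the values are valid.
// In LightSwitch, a non-null message would be passed to results.AddPropertyError(...).
string? ValidateInTime(DateTime inTime, DateTime outTime) =>
    inTime > outTime ? "InTime must be earlier than OutTime." : null;

// The same comparison seen from the OutTime field, so the error shows
// on whichever field the user edits.
string? ValidateOutTime(DateTime inTime, DateTime outTime) =>
    outTime < inTime ? "OutTime must be later than InTime." : null;

var now = DateTime.Now;
// A future InTime (later than an OutTime of "now") fails...
Console.WriteLine(ValidateInTime(now.AddDays(1), now) ?? "valid");
// ...while an earlier InTime passes.
Console.WriteLine(ValidateInTime(now.AddDays(-1), now) ?? "valid");
```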

Have a look at the implementation here:

I hope you got it. Now do the same for the OutTime field: go to the table designer, select the “OutTime” row, and click “Custom Validation” in the Properties panel, as we did earlier. You will see the generated event in the code editor window.

Here, check whether OutTime is less than InTime and, if so, report the error as shown below:

We are done with the custom validation. Try out the various default validation rules for the different field types as well. Custom validation works the same way in all cases; if you run into any issue, let me know and I will try to answer as soon as possible.

See it in Action

Before closing this tutorial, let’s see the demo in action and check whether we met our requirement. Create a screen to test it; for simplicity, we will create an Editable Grid Screen. Add the screen, and don’t forget to choose the Employees table from the Screen Data dropdown.

Click “OK” and the IDE will create the screen for you. We don’t need any UI customization for this demo. Build the project; it should build successfully, but if there are errors, fix them. Now run the application. You will see it start with the editable data grid screen open in the main tab.

Try adding some records and you will notice that the InTime and OutTime fields are automatically populated with the current date and time. Save the record; it saves without any error because the DateTime values are valid.

Now add a new record and click the InTime column. You will see a calendar control: because the column is of DateTime type, the tool added it for you automatically. Choose an earlier date from the calendar for InTime, leave OutTime unchanged, and save the record. This time, too, the record saves successfully, because the validation rule passes.

Now let’s do something wrong: select a future date for InTime. The validation rule fails, and the error message appears on screen with a red border around the InTime field. Have a look at it here:

If you now place the cursor in the date field, you will see the error message we wrote in the code window for the InTime field.

Now change the OutTime to an earlier date. The validation rule fails here too, and the field is automatically marked with a red border.

If you place the cursor inside the field, you will see the error message we entered for the OutTime field.

Now try to save some records with empty Firstname and Lastname fields. Validation fails here too, because we marked those fields as Required, so an empty value raises a validation error.

Correct the validation errors, and you will be able to save the records without any issue.

End Note

You can see that throughout the application (in all four previous chapters) I never wrote a single line of code, not even XAML for the UI; the tool’s templates generated it all. The framework handles a great deal automatically, from UI design to add, update and delete, and even sorting and filtering.

Only in this chapter did I introduce code, to extend the validation rules. I hope you enjoyed this chapter of the series too. I used a large number of figures so that you can follow each step easily. If you liked this article, please share your feedback here; I appreciate your feedback, comments, suggestions and votes.

Paul Patterson (@PaulPatterson) posted a list of his LightSwitch articles, categorized as pre- and post-Beta 1, in LightSwitch Article Index of 1/4/2011:

The number of LightSwitch articles on my blog is starting to grow. This landing “page” should provide you an easy reference to all the articles I have published about LightSwitch.

Articles are listed in order of most recent first.

After Beta 1 Release

- Send an Email from LightSwitch – Leverage the System.Net.Mail namespace to send an email from your LightSwitch application.

- Creating and Using a Query – Create better contextual data visualization using LightSwitch queries.

- Creating Table Relationships – A primer on relational database management systems and how to implement relationships in LightSwitch.

- Entity Field Custom Validation – This article shows you how to perform custom validation on an entity field.

- Computed Field Property – Create an entity field that displays a value that is computed.

- Customizing Field Properties – Use the LightSwitch designer to customize the presentation of your field and its data.

- Adding Telerik Controls – An example of how to use Telerik Silverlight controls in your LightSwitch application.

- Adding Custom Silverlight Controls – This article is actually a reference to a Michael Washington article at aDefWebServer.com.

- Customizing a Data Entity – Use the designer to customize a LightSwitch data entity.

- Solution Explorer Logical View and File View – Know when to switch between both views when developing a LightSwitch application.

- Quick Start – An example scenario where I create a quick, and dirty, LightSwitch application.

- First Use – An article illustrating what happened when I selected to create a LightSwitch application using Visual Studio 2010.

- My First #fail at the Beta 1 Installation – Just like the title says. Hopefully you can learn from my mistakes :)

- Beta 1 Available for MSDN Subscribers – Where to download LightSwitch Beta 1

Before Beta 1 Release

- A Value Proposition for the Enterprise - A former Business Analyst’s take on what LightSwitch can bring to the table.

- CodeCast (Episode 88) Takeaway Notes – Some notes I took when listening to Jay Schmelzer on a CodeCast episode.

- A Data Driven Approach – Some notes and thoughts about how a data driven approach is used for developing LightSwitch applications.

- Beyond the Basics (Channel 9 Video) Takeaway Notes – More notes from a Channel 9 video where Beth Massi interviews Joe Binder from the LightSwitch team.

- Behind the Pretty Face – A reiteration of a LightSwitch team blog article on the architecture behind LightSwitch.

- Visual Studio IDE – This is an article that examines the Visual Studio IDE used for designing LightSwitch applications.

- An Introduction – This is my first article which introduces LightSwitch.

<Return to section navigation list>

Windows Azure Infrastructure

Kevin Timmons described Microsoft’s 2011 plans for new and expanded data centers in his Shedding Light on Our New Cloud Farms post of 1/4/2011 to the Global Foundation Services blog:

It’s an exciting time for Microsoft’s datacenter program. In addition to operating one of the largest global datacenter footprints in the industry, we have been super busy working on multiple next-generation, modular facilities that are in various phases of construction.

One of our most innovative new datacenters is set to open in Quincy, WA in early 2011 and incorporates key learnings from award-winning facilities that Microsoft opened last year in Chicago and Dublin. The Dublin facility uses server PODs and outside air economization to cool the servers, which significantly reduces cooling expense and infrastructure costs. We took a slightly different approach with our Chicago datacenter, which uses water-side economization for cooling and improves scalability by using IT Pre-Assembled Components (ITPACs). An ITPAC is a pre-manufactured, fully-assembled module that can be built with a focus on sustainable materials such as steel and aluminum and can house as few as 400 servers and as many as 2,000 servers, significantly increasing flexibility and scalability. You can learn more about our ITPACs by watching the ITPAC video.

View our Modular Datacenter slide deck.

The expansion in Quincy takes these ideas a step further by extending the flexibility of PACs across the entire facility using modular “building blocks” for electrical, mechanical, server and security subsystems. This increase in flexibility enables us to even better support the needs of what can often be a very unpredictable online business and allows us to build datacenters incrementally as capacity grows. Our modular design enables us to build a facility in significantly less time while reducing capital costs by an average of 50 to 60 percent over the lifetime of the project.

When Phase 1 opens in Quincy it will be located adjacent to our existing 500,000-square-foot facility. However, the new datacenter is radically different. The building will actually resemble slightly more modern versions of the tractor sheds I spent so much time around during my childhood in rural Illinois.

Tractor shed in my home town of Mt. Pulaski, IL

The building’s utilitarian appearance belies its many hidden innovations. The structure is virtually transparent to ambient outdoor conditions, allowing us to essentially place our servers and storage outside in the cool air while still protecting them from the elements. The interior layout is specifically designed to allow us to further innovate in the ways that we deploy equipment in future phases of the project. And, like any good barn, the protective shell serves to keep out critters and tumbleweeds. Additional phases have been planned for the Quincy site and will be built based on demand. Those phases will incorporate even more cutting-edge methods to deploy servers and storage in ways that have never been seen before in the industry.

We will open other modular datacenters later in 2011 in Virginia and Iowa and I’ll be sharing more information about those facilities at that time. Our modular approach to design and construction with these facilities will allow us to substantially lower cost per megawatt to build and run our datacenters while significantly reducing time to market. This is the holy grail for most datacenter professionals…. fast, cheap and reliable – what more could you ask for? [Links to related Data Center stories in mid-2010 added.]

We’ve been sharing our research and best practices around modularity with our partners and others in the industry for a number of years and I’m thrilled to see the industry begin moving in this direction. By sharing our learnings, we’ve helped others build more sustainable facilities and reduce our collective carbon footprint.

Stay tuned for more information about our future datacenter projects in 2011.

Kevin is General Manager of Microsoft’s Datacenter Services.

It appears that Microsoft settled its beef with the Washington state legislature about charging state sales tax on computing equipment used in data centers. Computing equipment was exempt from state sales taxes when Microsoft built the Quincy, WA (Northwest US region) datacenter, but the legislature let the exemption lapse in 2010. Quincy supported testers of Windows Azure and SQL Azure CTPs, but the South Central US (San Antonio, TX) and North Central US (Chicago, IL) regions took over for North American users when the Windows Azure Platform entered commercial (paid) operation in early 2010.

Mary Jo Foley (@maryjofoley) posted a Microsoft set to 'turn on' new Washington datacenter article about the same subject to ZDNet’s All About Microsoft blog on 1/4/2011.

Lori MacVittie (@lmacvittie) asserted Sometimes it’s not about how many resources you have but how you use them as an introduction to her Achieving Scalability Through Fewer Resources post of 1/4/2011 to F5’s Dev Central blog:

The premise upon which scalability through cloud computing and highly virtualized architectures is built is the rapid provisioning of additional resources as a means to scale out to meet demand. That premise is a sound one and one that is a successful tactic in implementing a scalability strategy.

But it’s not the only tactic that can be employed as a means to achieve scalability and it’s certainly not the most efficient means by which demand can be met.

WHAT HAPPENED to EFFICIENCY?

One of the primary reasons cited in surveys regarding cloud computing drivers is that of efficiency. Organizations want to be more efficient as a means to better leverage the resources they do have and to streamline the processes by which additional resources are acquired and provisioned when necessary. But somewhere along the line it seems we’ve lost sight of enabling higher levels of efficiency for existing resources and have, in fact, often ignored that particular goal in favor of simplifying the provisioning process.

After all, if scalability is as easy as clicking a button to provision more capacity in the cloud, why wouldn’t you?

The answer is, of course, that it’s not as efficient and in some cases it may be an unnecessary expense.

The danger with cloud computing and automated, virtualized infrastructures is in the tendency to react to demand for increases in capacity as we’ve always reacted: throw more hardware at the problem. While in the case of cloud computing and virtualization this has morphed from hardware to “virtual hardware”, the result is the same – we’re throwing more resources at the problem of increasing demand. That’s not necessarily the best option and it’s certainly not the most efficient use of the resources we have on hand.

There are certainly efficiency gains in this approach, there’s no arguing that. The process for increasing capacity can go from a multi-week, many man-hour manual process to an hour or less, automated process that decreases the operational and capital expenses associated with increasing capacity. But if we want to truly take advantage of cloud computing and virtualization we should also be looking at optimizing the use of the resources we have on hand, for often we have more than enough capacity; it simply isn’t being fully used.

CONNECTION MANAGEMENT

Discussions of resource management generally include compute, storage, and network resources. But they often fail to include connection management. That’s a travesty, as TCP connection usage increases dramatically with modern application architectures and TCP connections are resource heavy; they consume a lot of RAM and CPU on web and application servers to manage. In many cases the TCP connection management duties of a web or application server are by far the largest consumers of resources; the application itself actually consumes very little on a per-user basis.

Optimizing those connections – or the use of those connections – then, should be a priority for any efficiency-minded organization, particularly those interested in reducing the operational costs associated with scalability and availability. As is often the case, the tool that makes the use of TCP connections more efficient is likely already in the data center and has merely been overlooked: the application delivery controller.

The reason for this is simple: most organizations acquire an application delivery controller (ADC) for its load balancing capabilities and tend to ignore all the bells and whistles and additional features (value) it can provide. Load balancing is but one feature of application delivery; there are many more that can dramatically impact the capacity and performance of web applications if they are employed as part of a comprehensive application delivery strategy.

An ADC provides the means to perform TCP multiplexing (a.k.a. server offload, a.k.a. connection management). TCP multiplexing allows the ADC to maintain millions of connections with clients (users) while requiring only a fraction of that number to the servers. By reusing existing TCP connections to web and application servers, an ADC eliminates the overhead in processing time associated with opening, managing, and closing TCP connections every time a user accesses the web application. If you consider that most applications today are Web 2.0 and employ a variety of automatically updating components, you can easily see that eliminating the TCP management for the connections required to perform those updates will decrease not only the number of TCP connections required on the server side but will also eliminate the time associated with such a process, meaning better end-user performance.
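To make the arithmetic behind TCP multiplexing concrete, here is a deliberately simplified counting model (not how any particular ADC is implemented): client requests arrive in waves of simultaneous requests, and the proxy either opens a fresh backend connection per request or reuses idle connections from a pool. The function name and the numbers in the example are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Counts the backend (server-side) TCP connections opened when 'requests' client
// requests arrive in waves of up to 'concurrency' simultaneous requests.
// reuse = true: finished backend connections stay open in an idle pool and are
// handed to the next request (multiplexing). reuse = false: every request opens,
// and then closes, its own backend connection.
int BackendConnectionsOpened(int requests, int concurrency, bool reuse)
{
    if (concurrency < 1) throw new ArgumentOutOfRangeException(nameof(concurrency));

    var idle = new Stack<int>(); // ids of open but currently idle backend connections
    int opened = 0;
    int remaining = requests;

    while (remaining > 0)
    {
        int wave = Math.Min(concurrency, remaining);
        var inUse = new List<int>(wave);

        // Reuse an idle connection if allowed; otherwise open a new one.
        for (int i = 0; i < wave; i++)
            inUse.Add(reuse && idle.Count > 0 ? idle.Pop() : ++opened);

        // When the wave finishes, pooled connections return to the idle set;
        // without reuse they are simply closed (dropped here).
        if (reuse)
            foreach (int conn in inUse) idle.Push(conn);

        remaining -= wave;
    }
    return opened;
}

Console.WriteLine($"Without multiplexing: {BackendConnectionsOpened(10_000, 50, reuse: false)} connections opened");
Console.WriteLine($"With multiplexing:    {BackendConnectionsOpened(10_000, 50, reuse: true)} connections opened");
```

In this model the server-side cost scales with peak concurrency rather than with total requests, which is the capacity gain the post describes: 10,000 requests need 10,000 connection setups without reuse but only 50 with it.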

INCREASE CAPACITY by DECREASING RESOURCE UTILIZATION

Essentially we’re talking about increasing capacity by decreasing resource utilization without compromising availability or performance. This is an application delivery strategy that requires a broader perspective than is generally available to operations and development staff. The ability to recognize a connection-heavy application and subsequently employ the optimization capabilities of an application delivery controller to improve the efficiency of resource utilization for that application requires a more holistic view of the entire architecture.

Yes, this is the realm of devops and it is in this realm that the full potential of application delivery will be realized. It will take someone well-versed in both network and application infrastructure to view the two as part of a larger, holistic delivery architecture in order to assess the situation and determine that optimization of connection management will benefit the application not only as a means to improve performance but to increase capacity without increasing associated server-side resources.

Efficiency through optimization of resource utilization is an excellent strategy for improving the overall delivery of applications whilst simultaneously decreasing costs. It doesn’t require cloud or virtualization, it simply requires a better understanding of applications and their underlying infrastructure and optimizing the application delivery infrastructure such that the innate behavior of such infrastructure is made more efficient without negatively impacting performance or availability. Leveraging TCP multiplexing is a simple method of optimizing connection utilization between clients and servers that can dramatically improve resource utilization and immediately increase capacity of existing “servers”.

Organizations looking to improve their bottom line and do more with less ought to closely evaluate their application delivery strategy and find those places where resource utilization can be optimized as a way to improve the efficiency of existing resources before embarking on a “throw more hardware at the problem” initiative.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), VM Role, Hyper-V and Private Clouds

Brian Ehlert posted The Azure VM Role reboot reset – understanding the pale blue cloud on 1/4/2011:

Here is a bit more insight into the behavior of VMs in Azure – and one more point that VM Role is NOT a solution for Infrastructure as a Service.

Let’s begin with a very simple, one VM scenario:

With a VM Role VM, you (the developer, the person that wants to run your VM on Azure) upload a VHD into what is now VHD-specific storage, in a specific datacenter.

You then create an application in the tool of your choice and define the VHD with the service settings – this links your VHD to a VM definition, firewall configuration, load balancer configuration, etc.

You deploy your VM Role centric service and sit back and wait – then test and voila! it works.

You do stuff with your VM, its state changes, and all is happy. Or is it?

Now, you – being a curious individual – click the “Reboot” button in the Azure portal. You think, cool, I am rebooting my VM – but you aren’t; you are actually resetting your service deployment. You return to your VM Role VM to find changes missing.

This takes us into the behaviors of the Azure Fabric. On a quick note – if you want some type of persistence, you need to use Azure storage for that. Back to the issue at hand – your rolled-back VM. Let’s explore a possible reason for this.

BTW - This behavior is the same for Web Roles, and Worker Roles as well – but it is the Azure base OS image, not yours.

Basically what happened was no different than a revert to a previous snapshot using Hyper-V, or the old VirtualPC rollback mode. When a VM is deployed there is a base VHD (this can be a base image – or your VM Role VHD) and there is a new differencing disk that is spawned off.

You selecting reboot actually tossed out the differencing disk which contains your latest changes and created a new one, thus reverting your VM Role VM. This is all fine and dandy, however my biggest question is: What are the implications upon authentication mechanism such as Active Directory – AD does not deal with rollbacks of itself or of domain joined machines very well at all.
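The revert behavior described above follows from how a base image plus differencing disk works. As a conceptual model only (it has nothing to do with the actual VHD on-disk format, and the keys, values and the "CONTOSO" domain are hypothetical), think of the base image as a read-only dictionary and the differencing disk as an overlay that receives every write:

```csharp
using System;
using System.Collections.Generic;

// Conceptual model: a read-only base image plus a differencing layer
// that captures every write made after deployment.
var baseImage = new Dictionary<string, string>
{
    ["hostname"] = "vmrole-0",   // hypothetical settings baked into the uploaded VHD
    ["domain"] = "WORKGROUP",
};
var differencing = new Dictionary<string, string>();

// Reads prefer the differencing layer, then fall back to the base image.
string? Read(string key) =>
    differencing.TryGetValue(key, out var changed) ? changed
    : baseImage.TryGetValue(key, out var original) ? original
    : null;

// Writes never touch the base image.
void Write(string key, string value) => differencing[key] = value;

// What the post describes the portal's "Reboot" doing: discard the
// differencing disk and start a fresh, empty one on top of the same base.
void DiscardDifferencingDisk() => differencing.Clear();

Write("domain", "CONTOSO");          // e.g. a domain join over Azure Connect
Console.WriteLine(Read("domain"));   // CONTOSO
DiscardDifferencingDisk();
Console.WriteLine(Read("domain"));   // WORKGROUP: the domain join is gone
```

Anything that must survive the reset therefore has to live outside the VM, in Azure storage or back in the enterprise, which is the persistence point made below.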

My scenario is that you are using Azure Connect Services connecting back to a domain controller in your environment – you join the domain, someone clicks reboot and your machine is no longer domain joined or you have a mess of authentication errors. Again, this is not the Azure model.

The Azure model in this case is that your VM reboots at the fabric layer back to the base image (they recommend that you prepare it with sysprep) and re-joins your domain as a new machine – with all of the pre-installed software.

This is all about persistence and where that persistence resides. In the VMs of your service there is no persistence, the persistence resides within your application and its interaction with Azure storage or writing back to some element within the enterprise.

This is important to understand, especially if you think of Azure as IaaS - which you need to stop doing. It is a platform. It is similar to a hypervisor but it is not a hypervisor in your interaction with it as a developer or ITPro.

Tim Greene asserted “Before jumping in you need to master virtualization, garner C-level support and have a business case” as a deck for his 2011 tech priorities: Private cloud beckons article of 1/3/2011 for NetworkWorld’s Data Center blog:

The indisputable economic benefits of cloud computing for certain applications drive businesses to consider building clouds of their own, but they need to make sure they are prepared before jumping into the cloud.

Consideration of people, governance, price and technology all need to come into play, says Alan Boehme, senior vice president of IT strategy and enterprise architecture for ING Americas, a user of cloud technology and a founder of the Cloud Security Alliance.

Plus organizations need to climb a virtualization-maturity curve before they have what they need to take on the challenges of a private cloud, says James Staten, a vice president and principal analyst for Forrester Research.

Climbing the curve calls for going through a four-step process consisting of selling virtualization to reluctant users, deploying it in earnest, optimizing the use rates of the server pool, and creating incentives that encourage sharing virtual infrastructure across the business, he says.

During the first stage, which most IT departments have already completed, IT goes begging to find developers within their organizations who will try the technology. In the next stage IT makes it easy to get a virtual machine outside the approval process needed for a physical machine.

Staten calls this the hero phase because IT can reduce the time and cost of adding new resources. During this phase the ratio between the number of physical servers that would be needed with and without virtualization is reduced and becomes a benchmark for success.

In Stage 3, that ratio becomes less relevant as the business tries to maximize utilization rates of server resources across the pool of physical servers, and the key metric is that utilization rate. During this phase, tools and procedures must be put in place to minimize virtual machine sprawl, making sure that virtual machines that aren't being used are shut down so the server resources can be reused, Staten says.

The final stage calls for active marketing of virtualization to divisions of the business and convincing them it's best for the organization as a whole to share resources. The most effective means is to show the dramatic difference in time it takes to authorize and turn on a physical server (a matter of weeks) vs. turning on a new virtual machine in the data center (a day to a week) vs. turning up a VM in the cloud using a standard template (immediately). When a central store of servers can be shared among business units, the business is ready for adopting a private cloud, he says.

For businesses that haven't climbed very high yet on the maturity curve, there are faster ways, Boehme says. For example, a group within IT can be created to set up a relationship with a cloud service provider to carve out a dedicated cloud for the business customer within the provider's cloud infrastructure. That relieves many of the burdens of creating a business-owned and -maintained cloud. …

The Paimail Computer Info blog posted Private Cloud Computing: A Game Changer for Disaster Recovery on 12/18/2010, which PrivateCloud.com reprinted on 1/4/2011:

Private cloud computing offers a number of significant advantages – including lower costs, faster server deployments, and higher levels of resiliency. What is often overlooked is how the private cloud can dramatically change the game for IT disaster recovery, with significantly lower costs, faster recovery times, and enhanced testability.

Before we talk about the private cloud, let’s explore the challenges of IT disaster recovery for traditional server systems.

Most legacy IT systems are composed of a heterogeneous set of hardware platforms – added to the system over time – with different processors, memory, drives, BIOS, and I/O systems. In a production environment, these heterogeneous systems work as designed, and the applications are loaded onto the servers and maintained and patched over time.

Backups of these heterogeneous systems can be performed and safely stored at an offsite location. There are really two options for backing up and restoring the systems:

1) Back up the data only – where the files are backed up from the local server hard drives to the offsite location on tape, online, or between data centers over a dedicated fiber connection. The goal is to ensure that all of the data is captured and recoverable. To recover the server in the case of a disaster, the operating system needs to be reloaded and patched to the same level as the production server, the applications need to be reloaded, re-patched, and configured, and then the backed-up data can be restored to the server. Reloading the operating system and applications can be a time-consuming process, and ensuring that the system and applications are patched to the same levels as the production server depends on human memory and is prone to error – both of which can lengthen the recovery time.

2) Bare Metal Restore (BMR) – a much faster way to recover the entire system. BMR creates a snapshot of the entire operating system, applications, system registry and data files, and restores the entire system on similar hardware exactly as it was configured in the production system. The gotcha is the “similar hardware” requirement. This often requires the same CPU version, BIOS, and I/O configuration to ensure the recovery will be operational. In a heterogeneous server environment, duplicate servers need to be on hand to execute a bare metal restoration for disaster recovery. As a result, IT disaster recovery for heterogeneous server systems either sacrifices recovery time or requires that the hardware investment be fully duplicated for a bare metal restoration to succeed.
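The weak point of each traditional option can be expressed as a check that would otherwise rely on memory: option 1 needs the rebuilt server to match production patch levels, and option 2 needs the recovery hardware to match the production hardware. In this Python sketch the manifest format (component name mapped to a version string) and the set of hardware fields a bare metal image is assumed to depend on are hypothetical; real BMR tools apply their own, more detailed compatibility rules.

```python
from typing import Optional

# Hypothetical: hardware attributes assumed to matter for a bare metal image.
BMR_CRITICAL_FIELDS = ("cpu_model", "bios_version", "storage_controller", "nic_model")


def patch_drift(production: dict[str, str],
                rebuilt: dict[str, str]) -> dict[str, tuple[str, Optional[str]]]:
    """Option 1 (data-only backup): components whose version on the rebuilt
    server differs from, or is missing relative to, a recorded production
    manifest. Recording the manifest replaces reliance on human memory."""
    return {name: (version, rebuilt.get(name))
            for name, version in production.items()
            if rebuilt.get(name) != version}


def bmr_mismatches(source: dict[str, str], target: dict[str, str]) -> list[str]:
    """Option 2 (bare metal restore): hardware fields on which the recovery
    server differs from the production server. An empty list suggests the
    "similar hardware" requirement is met (under the assumed field list)."""
    return [attr for attr in BMR_CRITICAL_FIELDS if source.get(attr) != target.get(attr)]


if __name__ == "__main__":
    prod = {"os": "SP2 + KB123", "erp_app": "5.1.3"}
    print(patch_drift(prod, {"os": "SP2", "erp_app": "5.1.3"}))
    # {'os': ('SP2 + KB123', 'SP2')}
    server = {"cpu_model": "cpu-A", "bios_version": "1.2",
              "storage_controller": "raid-X", "nic_model": "nic-Y"}
    print(bmr_mismatches(server, dict(server, bios_version="1.4")))  # ['bios_version']
```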

Enter disaster recovery for private cloud computing. First, with all of the discussion about “cloud computing”, let me define what I mean by private cloud computing. Private Cloud computing is a virtualized server environment that is:

• Designed for rapid server deployment – as with both public and private clouds, one of the key advantages of cloud computing is that servers can be turned up & spun down at the drop of a hat.

• Dedicated – the hardware, data storage and network are dedicated to a single client or company and not shared between different users.

• Secure – Because the network is dedicated to a single client, it is connected only to that client’s dedicated servers and storage.

• Compliant – with the dedicated secure environment, PCI, HIPAA, and SOX compliance is easily achieved.

As opposed to public cloud computing paradigms, which are generally deployed as web servers or development systems, private cloud computing systems are preferred by midsize and large enterprises because they meet the security and compliance requirements of these larger organizations and their customers.

When production applications are loaded and running on a private cloud, they enjoy three key attributes that dramatically redefine the approach to disaster recovery:

1) The servers are virtualized, thereby abstracting the operating system and applications from the hardware.

2) Typically (but not required) the cloud runs on a common set of hardware hosts – and the private cloud footprint can be expanded by simply adding an additional host.

3) Many larger private cloud implementations are running with a dedicated SAN and dedicated cloud controller. The virtualization in the private cloud provides the benefits of bare metal restoration without being tied to particular hardware. The virtual server can be backed up as a “snapshot” including the operating system, applications, system registry and data – and restored on another hardware host very quickly.

This opens up four options for disaster recovery, depending on the recovery time objective (RTO).

1) Offsite Backup – The simplest and fastest way to ensure that the data is safe and offsite is to back up the servers to a second data center that is geographically distant from the production site. If a disaster occurs, new hardware will need to be located to run the system on, which can extend the recovery time depending on hardware availability at the time of the disaster.

2) Dedicated Warm Site Disaster Recovery – This involves placing hardware servers at the offsite data center. If a disaster occurs, the backed up virtual servers can be quickly restored to the host platforms. One advantage to note here is that the hardware does not need to match the production hardware. The disaster recovery site can use a scaled down set of hardware to host a select number of virtual servers or run at a slower throughput than the production environment.

3) Shared Warm Site Disaster Recovery – In this case, the private cloud provider delivers the disaster recovery hardware at a separate data center and “shares” the hardware among a number of clients on a “first declared, first served” basis. Because most disaster recovery hardware sits idle and clients typically don’t experience a production disaster at the same time, the warm site servers can be offered at a fraction of the cost of a dedicated solution by sharing the platforms across customers.

4) Hot Site SAN-SAN Replication – Although more expensive than warm site disaster recovery, SAN-SAN replication between clouds at the production and disaster recovery sites provides the fastest recovery and lowest data latency between systems. Depending on the recovery objectives, the secondary SAN can be more cost-effective in terms of the amount and type of storage, and the number and size of physical hardware servers can also be scaled back to accommodate a lower performance solution in case of a disaster.
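Options 2 and 3 both rely on restoring virtual servers onto whatever recovery hardware is available, which may be less than production. The Python sketch below models a shared warm site under stated assumptions: capacity is counted only in CPU cores, each client lists its VMs in priority order, and declarations are served strictly in the order received. The names are hypothetical, and a real provider's allocation policy may differ.

```python
from dataclasses import dataclass


@dataclass
class Declaration:
    client: str
    vms: list[tuple[str, int]]  # (vm_name, cores_needed), highest priority first


def allocate_shared_pool(declarations: list[Declaration],
                         pool_cores: int) -> dict[str, list[str]]:
    """First declared, first served: walk declarations in arrival order and
    place each client's VMs, in priority order, while capacity remains.
    A VM that does not fit is skipped (scaled-down recovery), and smaller,
    lower-priority VMs may still be placed after it."""
    remaining = pool_cores
    placed: dict[str, list[str]] = {}
    for decl in declarations:          # list order == declaration order
        placed[decl.client] = []
        for name, cores in decl.vms:
            if cores <= remaining:
                placed[decl.client].append(name)
                remaining -= cores
    return placed


if __name__ == "__main__":
    decls = [Declaration("acme", [("db", 8), ("web", 4), ("reports", 8)]),
             Declaration("globex", [("erp", 8), ("mail", 2)])]
    print(allocate_shared_pool(decls, 18))
    # {'acme': ['db', 'web'], 'globex': ['mail']}
```

With an 18-core pool the first client recovers `db` and `web` but not `reports`; the second client's `erp` no longer fits, though its small `mail` VM does. That is the trade-off of a shared warm site: a fraction of the cost, with no capacity guarantee if several clients declare at once.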

An often overlooked benefit of private cloud computing is how it changes the IT disaster recovery game. Once applications are in production in a private cloud, disaster recovery across data centers can be done at a fraction of the cost compared to traditional heterogeneous systems, and deliver much faster recovery times.

This is another example of what appears to be a dedicated, virtualized data center rather than a private cloud.

<Return to section navigation list>

Cloud Security and Governance

No significant articles so far today.

<Return to section navigation list>

Cloud Computing Events

Jeff Barr (@jeffbarr) announced on 1/4/2011 a Webinar - Analytics in the Cloud With Deepak Singh on 1/27/2011:

On January 27, 2011, Deepak Singh, PhD (Senior Business Development Director for AWS and author of the Business, Bytes, Genes, and Molecules blog) will discuss Analytics in the Cloud in a live webinar. Deepak will explain how businesses of any size can rapidly and cost-effectively analyze data sets of any size.

The webinar is free but you need to register!

Bruno Terkaly announced on 1/3/2011 a New Meetup: The Windows Azure AppFabric - Possibly the most compelling part of Azure to be held 1/27/2011 at 6:30 PM in Microsoft’s San Francisco office:

Announcing a new Meetup for Azure Cloud Computing Developers Group!

- What: The Windows Azure AppFabric - Possibly the most compelling part of Azure

- When: Thursday, January 27, 2011 6:30 PM

- Where: Microsoft San Francisco (in Westfield Mall where Powell meets Market Street) 835 Market Street Golden Gate Rooms - 7th Floor San Francisco, CA 94103

There is no question that there is a growing demand for high-performing, connected systems and applications. Windows Azure AppFabric has an interesting array of features today that ISVs and other developers can use to architect hybrid on-premises/in-cloud applications. Components like the Service Bus, Access Control Service and Caching Service are very useful in their own right when used to build hybrid applications.

The AppFabric Service Bus is key to integration in Windows Azure. The AppFabric Service Bus is an extremely powerful way to connect systems and content outside the firewalls of companies, unifying it with internal, often legacy systems’ data. The AppFabric facilitates transmitting content to any device, anywhere in the world, at any time.

So why do you think we need the AppFabric?

- Operating systems are still located—trapped is often a better word—on a local computer, typically behind a firewall and perhaps network address translation (NAT) of some sort. This problem is true of smart devices and phones, too.

- As ubiquitous as Web browsers are, their reach into data is limited to an interactive exchange in a format they understand.

- Code re-use is low for many aspects of software. Think about server applications, desktop or portable computers, smart devices, and advanced cell phones. Microsoft leads the way in one developer experience across multiple paradigms.

- Legacy apps are hard to rewrite, due to security concerns or privacy restrictions. Often, the codebase lacks the support of the original developers.

- The Internet is not always the network being used. Private networks are an important part of the application environment, and their insulation from the Internet is a simple fact of information technology (IT) life.
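The firewall and NAT points above are the core of the Service Bus relay idea: instead of opening an inbound port, the on-premises service makes an outbound connection to a relay in the cloud, and remote clients reach it through that relay. The toy Python sketch below simulates the pattern in a single process, with queues standing in for network connections. It is not the AppFabric Service Bus API; the `Relay` class, the `contoso/orders` address and the message format are hypothetical.

```python
import queue
import threading


class Relay:
    """In-process stand-in for a cloud relay. Both parties only ever call
    into the relay (the analogue of an outbound connection), so neither
    needs to accept inbound traffic through a firewall or NAT."""

    def __init__(self) -> None:
        self._channels: dict[str, queue.Queue] = {}
        self._lock = threading.Lock()

    def _channel(self, address: str) -> queue.Queue:
        with self._lock:
            return self._channels.setdefault(address, queue.Queue())

    def listen(self, address: str) -> queue.Queue:
        # A listener registers an address and receives messages sent to it.
        return self._channel(address)

    def send(self, address: str, message) -> None:
        self._channel(address).put(message)


def on_premises_service(relay: Relay, address: str) -> None:
    """Hypothetical behind-the-firewall service that handles one request."""
    inbox = relay.listen(address)
    request = inbox.get(timeout=5)
    relay.send(request["reply_to"], request["body"].upper())


def call_through_relay(relay: Relay, address: str, body: str) -> str:
    """Hypothetical remote client: sends a request, waits for the reply."""
    reply_to = address + "/reply"
    replies = relay.listen(reply_to)
    relay.send(address, {"body": body, "reply_to": reply_to})
    return replies.get(timeout=5)


if __name__ == "__main__":
    relay = Relay()
    worker = threading.Thread(target=on_premises_service, args=(relay, "contoso/orders"))
    worker.start()
    print(call_through_relay(relay, "contoso/orders", "ping"))  # PING
    worker.join()
```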

Come join me for some non-stop hands-on coding on January 27th. I've created a series of hands-on labs that will help you get started with the Windows Azure Platform, with an emphasis on the AppFabric.

RSVP to this Meetup: http://www.meetup.com...

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Adron Hall started a new apples-to-oranges cloud-comparison series with Cloud Throw Down: Part 1 – Operating Systems & Languages of 1/4/2011:

The clouds available from Amazon Web Services, Windows Azure, Rackspace and others have a few things in common. They’re all providing storage, APIs, and other bits around the premise of the cloud. They all also run on virtualized operating systems. In this blog entry I’m going to focus on some key features and considerations I’ve run into over the last year or so working with two of these cloud stacks, AWS and Windows Azure. I’ll discuss a specific feature and then rate it and declare a winner.

Operating System Options

AWS runs Amazon Machine Images (AMIs) with Linux, Windows, or whatever OS you may want, and lets you launch these images as EC2 instances.

Windows Azure runs on Hyper-V with Windows Server 2008, and its VMs run Windows Server 2008. Windows Azure allows you to run roles that execute .NET, Java, PHP, or other code, and allows you to boot a Windows Server 2008 OS image on a VM and run whatever that OS can run.

Rating & Winner: Operating Systems Options goes to Amazon Web Services

Development Languages Supported

AWS has .NET, PHP, Ruby on Rails, and other SDK options. Windows Azure also has .NET, PHP, Ruby on Rails, and other SDK options. AWS provides first-class support for development in any of these languages; Windows Azure does not, but is changing that for Java. Overall, both platforms support what 99% of developers build applications with; however, Windows Azure does have a few limitations on non-.NET languages.

Rating & Winner: Development Languages Supported is a tie. AWS & Windows Azure both have support for almost any language you want to use.

Operating System Deployment Time

AWS can deploy any of hundreds of operating system images with a few clicks in the administration console. Linux instances take about a minute or two to start up, while Windows instances take somewhere between 8 and 30 minutes. Windows Azure boots up a web, service, or CGI role in about 8-15 minutes. The Windows Azure VM Role reportedly boots up in about the same amount of time that AWS takes to boot a Windows OS image.

The reality of the matter is that both clouds provide similar boot-up times; the deciding factor is which operating system you are using. If you’re using Linux you’ll get a boot-up time that is almost 10x faster than a Windows boot-up time. Windows Azure doesn’t offer Linux, so it doesn’t gain any benefit from this operating system. So even though I’m rating the cloud, this is really a rating of which operating system boots faster.
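The "almost 10x" figure can be checked against the EC2 boot times quoted above (Linux about 1 to 2 minutes, Windows about 8 to 30 minutes). The short Python calculation below uses only those ranges; the helper names are illustrative.

```python
def ratio_range(slow: tuple[float, float], fast: tuple[float, float]) -> tuple[float, float]:
    """Smallest and largest possible slow/fast ratio for two (min, max) ranges."""
    return slow[0] / fast[1], slow[1] / fast[0]


def midpoint_ratio(slow: tuple[float, float], fast: tuple[float, float]) -> float:
    """Ratio of the midpoints of the two ranges."""
    return (sum(slow) / 2) / (sum(fast) / 2)


LINUX_EC2_MIN = (1.0, 2.0)     # minutes, as quoted above
WINDOWS_EC2_MIN = (8.0, 30.0)  # minutes, as quoted above

if __name__ == "__main__":
    print(ratio_range(WINDOWS_EC2_MIN, LINUX_EC2_MIN))               # (4.0, 30.0)
    print(round(midpoint_ratio(WINDOWS_EC2_MIN, LINUX_EC2_MIN), 1))  # 12.7
```

On those figures Linux boots roughly 4 to 30 times faster, about 12.7 times at the midpoints, so "almost 10x" is in the right range, if on the conservative side.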

Rating & Winner: Operating System Deployment Time goes to AWS.

Today’s winner is hands down AWS. Windows Azure, being limited to Windows OS at the core, has some distinct and problematic disadvantages in boot-up time and operating system support options. However, I must say that both platforms offer excellent language support for development.


That’s the competition for today. I’ll have another throw down tomorrow when the tide may turn against AWS – so stay tuned! :)