Windows Azure and Cloud Computing Posts for 1/8/2011+

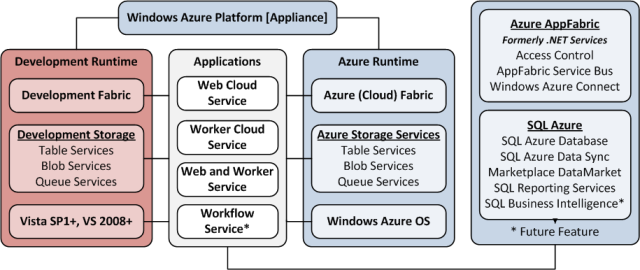

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

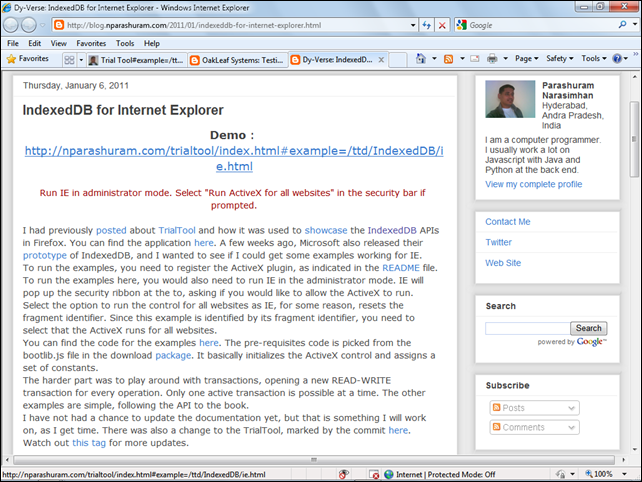

Updated my Testing IndexedDB with the Trial Tool Web App and Microsoft Internet Explorer 8 or 9 Beta of 1/7/2011 with screen captures from a corrected version of Trial Tool:

Update 1/9/2011: Parashuram eliminated spurious “Transaction Complete undefined” messages in the console response of the Save Data, Get Data and Create Index examples.

Narasimhan Parashuram (@nparashuram) added this comment to my Testing IndexedDB with the SqlCeJsE40.dll COM Server and Microsoft Internet Explorer 8 or 9 Beta post (updated 1/6/2011) about his TrialTool test pages for IE8 and IE9:

Here is a showcase of the IndexedDB API for IE - http://blog.nparashuram.com/2011/01/indexeddb-for-internet-explorer.html

- that opens this page:

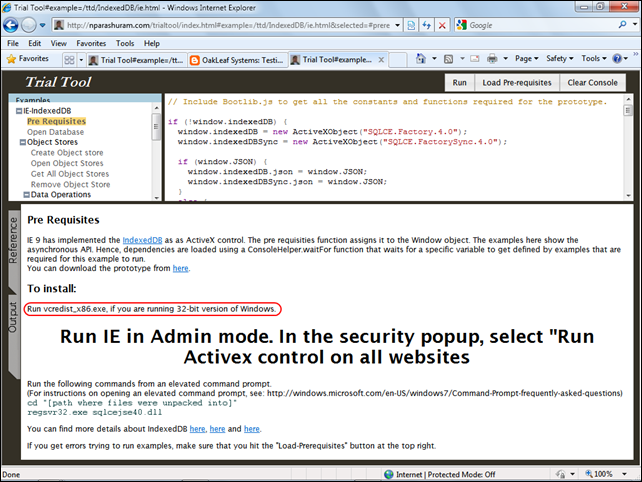

Following the Demo link opens a page that’s identical to that described in my Testing IndexedDB with the Trial Tool Web App and Mozilla Firefox 4 Beta 8 (updated 1/6/2011). Clicking the Pre-Requisites link in the Examples list opens this page with additional instructions in the console window:

Important: Note the requirement for running vcredist_x86.exe, which you’ll find in the IndexedDB install directory, from a command prompt if you’re using 32-bit Windows. You must run vcredist_x86.exe before you run regsvr32.exe sqlcejse40.dll. Close and reopen IE after registering the COM server. You must run commands and IE with an Administrator account and enable running the two ActiveX controls from all Websites when prompted.

This version of the Trial Tool provides automated equivalents of the COM server package’s CodeSnippets, as well as many examples that the snippets don’t cover.

The post continues with the results of running several examples.


SQL Azure Database and Reporting

Rob Tiffany posted Reducing SQL Server I/O Contention during Sync :: Tip 2 on 1/7/2011:

In my last Sync/Contention post, I beat up on a select group of SAN administrators who aren’t willing to go the extra mile to optimize the very heart of their organization, SQL Server. You guys know who you are.

This time, I want to look at something more basic, yet often overlooked.

All DBAs know that Joining tables on non-indexed columns is the most expensive operation SQL Server can perform. Amazingly, I run into this problem over and over with many of my customers. Sync technologies like the Sync Framework, RDA and Merge Replication allow for varying levels of server-side filtering. This is a popular feature used to reduce the size of the tables and rows being downloaded to Silverlight Isolated Storage or SQL Server Compact.

It’s also a performance killer when tables and columns participating in a Join filter are not properly indexed. Keeping rows locked longer than necessary creates undue blocking and deadlocking. It also creates unhappy slate and smartphone users who have to wait longer for their sync to complete.

Do yourself a favor and go take a look at all the filters you’ve created and make sure that you have indexes on all those Joined columns.
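As a minimal sketch of that advice, the following C# snippet emits one T-SQL `CREATE NONCLUSTERED INDEX` statement per column that takes part in a join filter. The helper name, the `dbo` schema, and the table and column names are all hypothetical; they do not come from Rob's post, and the generated statements should be reviewed before running them against a real database.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: builds T-SQL index definitions for join-filter columns.
static class JoinFilterIndexes
{
    public static string CreateIndexSql(string schema, string table, string column)
    {
        // Bracket-quote identifiers; a doubled ']' escapes it inside brackets.
        Func<string, string> quote = name => "[" + name.Replace("]", "]]") + "]";
        string indexName = "IX_" + table + "_" + column;
        return string.Format(
            "CREATE NONCLUSTERED INDEX {0} ON {1}.{2} ({3});",
            quote(indexName), quote(schema), quote(table), quote(column));
    }

    static void Main()
    {
        // Assumed example: two tables whose join columns appear in a sync filter.
        var joinColumns = new List<KeyValuePair<string, string>>
        {
            new KeyValuePair<string, string>("Orders", "CustomerID"),
            new KeyValuePair<string, string>("OrderDetails", "OrderID"),
        };

        foreach (var pair in joinColumns)
        {
            Console.WriteLine(CreateIndexSql("dbo", pair.Key, pair.Value));
        }
    }
}
```

Generating the statements rather than typing them by hand makes it easier to cover every filtered table consistently, but whether each index actually helps still depends on the query plans on the server.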

Rob’s earlier post is Reducing SQL Server I/O Contention during Sync :: Tip 1 of 1/5/2011:

Sync technologies like Merge Replication and the Sync Framework track changes on SQL Server using triggers, stored procedures and special tracking tables. The act of tracking changes made by each SQL Server Compact or Silverlight sync subscriber can cause a lot of locking and blocking on the server. This diminishes performance and sometimes leads to deadlocks.

Therefore, don’t listen to your SAN administrator when he says RAID 5 will do. RAID 1 or 10 must always be used for all databases, tempdb, and transaction logs. Furthermore, each of these database objects must be placed on its own dedicated RAID array. No sharing! Remember, as a DBA and sync expert, knowledge of SAN configuration must always be part of your skillset.

Although Rob addresses on-premises SQL Server instances in these posts, they’re also applicable to SQL Azure and SQL Azure Data Sync.

MS-Certs.com continued its SQL Azure series with Migrating Databases and Data to SQL Azure (part 7) on 1/5/2011:

2.4. Executing Your Migration Package

You're now ready to test your SSIS package. In Visual Studio, click the green arrow on the toolbar to begin package execution. Execution starts at the Clear Data task, which, as you recall, deletes all the data from the UserDocs, Users, and Docs tables. Execution next goes to the first data flow, which queries data from the local Docs table (source) and copies it to the Docs table of the TechBio database in SQL Azure (destination). Execution then goes to the second and third data flow tasks.

When execution is complete, all the tasks are green, as shown in Figure 15, letting you know that they executed successfully. Any tasks that are yellow are currently executing. Red tasks are bad: that means an error occurred during the execution of the task, regardless of whether the task was in the control flow or data flow, and execution stops.

If your tasks are all green, you can go back to your root beer. Otherwise, the best place to start debugging is the Output window. All output, including errors, is written to this window. You can find errors easily by looking for any line that starts with Error: toward the end of the list.

Errors you receive may be SSIS specific or SQL Azure specific. For example, did you define your connections correctly? Microsoft makes testing connections very simple, and this doesn't mean the Test Connection button. The Source Editors dialog, regardless of whether it's an OLE DB or ADO.NET Editor, includes a Preview button that provides a small preview of your data, up to 200 rows. This ensures that at least your source connection works correctly.

2.5. Verifying the Migration

When you have everything working and executing smoothly, in Visual Studio click the blue square button on the toolbar to stop execution. Go back to SSMS, and query the three tables in your SQL Azure instance to verify that the data was indeed copied successfully. As shown in Figure 16, you should see roughly 100 rows in the Users table, two rows in the Docs table, and two rows in the UserDocs table.

Figure 16. Viewing migrated data in SSMS

2.6. Other Cases to Consider

This example was simple; the source and destination tables were mirrors of each other, including column names and data types. This made data migration easy. However, in some cases the source and destination tables differ in column names and data types. There are tasks that help with this, such as the Derived Column, Data Conversion, and Lookup tasks. If you're using these tasks and are getting errors, start by looking at these tasks to make sure they aren't the source of data-truncation or data-conversion errors.

Again, this section isn't intended to be an SSIS primer. Great books about SSIS are available that focus on beginner topics all the way to advanced topics. Brian Knight is a SQL Server MVP who has written numerous books on SSIS; his books are highly recommended if you're looking for SSIS information and instruction.

So far we have talked about SSIS and the SQL Server Generate and Publish Scripts wizard, both of which offer viable options for migrating your data, but with a few differences. For example, SSIS doesn't migrate schema while the Scripts wizard does. Let's talk about the third tool, Bcp, which also provides a method for migrating data to SQL Azure.

Other members of this series:

- Migrating Databases and Data to SQL Azure (part 9)

- Migrating Databases and Data to SQL Azure (part 8)

- Migrating Databases and Data to SQL Azure (part 6) - Building a Migration Package

- Migrating Databases and Data to SQL Azure (part 5) - Creating an Integration Services Project

- Migrating Databases and Data to SQL Azure (part 4) - Fixing the Script

- Migrating Databases and Data to SQL Azure (part 3) - Reviewing the Generated Script

- Migrating Databases and Data to SQL Azure (part 2)

- Migrating Databases and Data to SQL Azure (part 1) - Generate and Publish Scripts Wizard


Marketplace DataMarket and OData

Chris Sells recommended Be Careful with Data Services Authentication + Batch Mode in a 1/7/2011 post:

I was doing something quite innocent the other day: I was trying to provide authentication on top of the .NET 4.0 WCF Data Services (DS) on a per method basis, e.g. let folks read all they want but stop them from writing unless they’re an authorized user. In the absence of an authorized user, I threw a DataServiceException with a 401 and the right header set to stop execution of my server-side method and communicate to the client that it should ask for a login.

In addition, on the DS client, also written in .NET 4.0, I was attempting to use batch mode to reduce the number of round trips between the client and the server.

Once I’d cleared away the other bugs in my program, it was these three things in combination that caused the trouble.

The Problem: DataServiceException + HTTP 401 + SaveChanges(Batch)

Reproducing the problem starts by turning off forms authentication in the web.config of a plain vanilla ASP.NET MVC 2 project in Visual Studio 2010, as we’re going to be building our own Basic authentication:

Next, bring in the Categories table from Northwind into an ADO.NET Entity Data Model:

The model itself doesn’t matter – we just need something to allow read-write. Now, to expose the model, add a WCF Data Service called “NorthwindService” and expose the NorthwindEntities we get from the EDMX:

    public class NorthwindService : DataService<NorthwindEntities>
    {
        public static void InitializeService(DataServiceConfiguration config)
        {
            config.SetEntitySetAccessRule("Categories", EntitySetRights.All);
            config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
        }
        ...
    }

Notice that we’re allowing complete read/write access to categories on our service, but what we really want is to let everyone read and only allow authenticated users to write. We can do that with a change interceptor:

    [ChangeInterceptor("Categories")]
    public void OnChangeCategory(Category category, UpdateOperations operation)
    {
        // Authenticate
        string[] userpw = GetCurrentUserPassword();
        if (userpw == null ||
            !userpw[0].Equals("admin", StringComparison.CurrentCultureIgnoreCase) ||
            !userpw[1].Equals("pw"))
        {
            HttpContext.Current.Response.AddHeader("WWW-Authenticate", "Basic realm=\"Northwind\"");
            throw new DataServiceException(401, "Unauthorized");
        }
    }

    // Use HTTP Basic authentication
    string[] GetCurrentUserPassword()
    {
        string authorization = HttpContext.Current.Request.Headers["Authorization"];
        if (string.IsNullOrEmpty(authorization)) { return null; }
        if (!authorization.StartsWith("Basic")) { return null; }
        byte[] base64 = Convert.FromBase64String(authorization.Substring(6));
        string[] userpw = Encoding.ASCII.GetString(base64).Split(':');
        if (userpw.Length != 2) { return null; }
        return userpw;
    }

The change interceptor checks whether the client program provided a standard HTTP Basic authentication header and, if so, pulls out the admin user name/password pair. If it isn’t found, we set the “WWW-Authenticate” header and throw a DataServiceException, which will turn into an HTTP error response, letting the client know “I need some credentials, please.”

The code itself is very simplistic and if you want better code, I recommend Alex James’s most excellent blog series on Data Services and Authentication. However, it’s good enough to return a 401 Unauthorized HTTP error back to the client. If it’s the browser, it’ll prompt the user like so:

The browser isn’t a very interesting program, however, which is why I added a service reference for my new service to my plain vanilla console application and wrote this little program:

    class Program
    {
        static void Main(string[] args)
        {
            var service =
                new NorthwindEntities(new Uri(@"http://localhost:14738/NorthwindService.svc"));
            service.Credentials = new NetworkCredential("admin", "pw");

            var category = new Category() { CategoryName = "My Category" };
            service.AddToCategories(category);
            //service.SaveChanges(); // works
            service.SaveChanges(SaveChangesOptions.Batch); // #fail

            Console.WriteLine(category.CategoryID);
        }
    }

Here we’re setting up the credentials for when the service asks, adding a new Category and calling SaveChanges. And this is where the trouble started. Actually, this is where the trouble ended after three days of banging my head and 4 hours with the WCF Data Services team (thanks Alex, Pablo and Phani!). Anyway, we’ve got three things interacting here:

- The batch mode SaveChanges on the DS client which bundles your changes into a single OData round-trip for efficiency. You should use this when you can.

- The DataServiceException which bundles extra information about your server-side troubles into the payload of the response so that a knowledgeable client, like the .NET DS client, can pull it out for you. You should use this when you can.

- The HTTP authentication scheme which doesn’t fail when it doesn’t get the authentication it needs, but rather asks for the client to provide it. You should use this when you can.

Unfortunately, as of .NET 4.0 SP0, you can’t use all of these together.

What happens is that non-batch mode works just fine when our server sends back a 401 asking for login credentials, pulling the credentials out of the service reference’s Credentials property. And so does batch mode.

However, where batch mode falls down is with the extra payload data that the DataServiceException packs into the HTTP error response, which confuses it enough so that the exception isn’t handled as a request for credentials, but rather reflected back up to the client code. It’s the interaction between all three of these that causes the problem, which means that until there’s a fix in your version of .NET, you need a work-around. Luckily, you’ve got three to choose from.

Work-Around #1: Don’t Use DataServiceException

If you like, you can turn off the extra information your service endpoint is providing with the DataServiceException and just set the HTTP status, e.g.

    HttpContext.Current.Response.AddHeader("WWW-Authenticate", "Basic realm=\"Northwind\"");
    //throw new DataServiceException(401, "Unauthorized");
    HttpContext.Current.Response.StatusCode = 401;
    HttpContext.Current.Response.StatusDescription = "Unauthorized";
    HttpContext.Current.Response.End();

The only place this fix doesn’t work is Cassini, but Cassini doesn’t work well in the face of HTTP authentication anyway, so moving to IIS7 should be one of the first things you do when facing an authentication problem.

Personally, I don’t like this work-around as it puts the onus on the service to fix a client problem and it throws away all kinds of useful information the service can provide when you’re trying to test it.

Work-Around #2: Don’t Use Batch-Mode

If you use “SaveChanges(SaveChangesOptions.None)” or “SaveChanges()” (None is the default), then you won’t be running into the batch-mode problem. I don’t like this answer, however, since batch-mode can significantly reduce network round-trips and therefore not using it decreases performance.

Work-Around #3: Pre-Populate the Authentication Header

Instead of doing the “call an endpoint,” “oops I need credentials,” “here you go” dance, if you know you’re going to need credentials (which I argue is most often the case when you’re writing OData clients), why not provide the credentials when you make the call?

    var service =
        new NorthwindEntities(new Uri(@"http://localhost/BatchModeBug/NorthwindService.svc"));

    service.SendingRequest += delegate(object sender, SendingRequestEventArgs e)
    {
        var userpw = "admin" + ":" + "pw";
        var base64 = Convert.ToBase64String(Encoding.ASCII.GetBytes(userpw));
        e.Request.Headers.Add("Authorization", "Basic " + base64);
    };

Notice that we’re watching for the SendingRequest event on the client-side so that we can pre-populate the HTTP Authentication header so the service endpoint doesn’t have to even ask. Not only does this work around the problem but it reduces round-trips, which is a good idea even if/when batch-mode is fixed to respond properly to HTTP 401 errors.
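The header that the client builds in work-around #3 and that the change interceptor parses on the server can be exercised in isolation, without a service. The sketch below is not Chris's code: the `BasicAuthHeader` class and its method names are hypothetical, and it makes two deliberate changes to the parsing shown earlier. It splits on the first colon only, so a password that contains `:` survives the round trip (the original `Split(':')` would reject it), and it returns null instead of throwing when the Base64 payload is malformed.

```csharp
using System;
using System.Text;

// Hypothetical helper for building and parsing HTTP Basic Authorization headers.
static class BasicAuthHeader
{
    // Builds the header value the client sends, e.g. "Basic <base64(user:password)>".
    public static string Build(string user, string password)
    {
        byte[] raw = Encoding.ASCII.GetBytes(user + ":" + password);
        return "Basic " + Convert.ToBase64String(raw);
    }

    // Returns { user, password }, or null if the header is missing or malformed.
    public static string[] Parse(string authorization)
    {
        const string prefix = "Basic ";
        if (string.IsNullOrEmpty(authorization) ||
            !authorization.StartsWith(prefix, StringComparison.OrdinalIgnoreCase))
        {
            return null;
        }

        string decoded;
        try
        {
            byte[] raw = Convert.FromBase64String(authorization.Substring(prefix.Length).Trim());
            decoded = Encoding.ASCII.GetString(raw);
        }
        catch (FormatException)
        {
            return null; // payload is not valid Base64
        }

        // Split on the first colon only, so the password may itself contain ':'.
        int colon = decoded.IndexOf(':');
        if (colon < 0) { return null; }
        return new[] { decoded.Substring(0, colon), decoded.Substring(colon + 1) };
    }

    static void Main()
    {
        string header = Build("admin", "p:w");
        string[] userpw = Parse(header);
        Console.WriteLine(header);
        Console.WriteLine(userpw[0] + " / " + userpw[1]);
    }
}
```

Keeping both halves in one small class makes it easy to check that whatever the `SendingRequest` handler sends is something the interceptor will accept. Basic authentication only encodes the credentials, so it should be paired with HTTPS in anything beyond a demo.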

Chris is a Program Manager in the Distributed Systems Group at Microsoft.

Julian Scopinaro posted Exposing Data on the Web via OData to the Southworks blogs on 1/5/2011:

Online auction leader eBay is always on the lookout for new technologies to introduce to the community of developers consuming their exposed APIs. Recently it took another step in this direction: with the new eBay OData API, developers can now build applications leveraging search from eBay Findings API using the Open Data Protocol (OData).

But why expose information via OData? Because OData applies web technologies such as HTTP, Atom Publishing Protocol (AtomPub) and JSON to provide access to information from a variety of applications and services, leveraging the most widespread standards, navigational and caching infrastructure of the internet. This way it offers eBay a standardized way for programmable data to be made available across the web, freeing it from silos that still exist in applications today.

eBay’s OData implementation was recently presented in public at Microsoft’s PDC 10 event during Steve Ballmer’s keynote. To learn how this was accomplished and understand how OData works you can also watch Pablo Castro’s session on how to create custom OData services. Also useful is eBay’s OData API documentation which was recently released.

Tableau Software described What's New in Tableau 6.0 in a 1/2011 page:

Tableau 6.0 is the end of business intelligence as you know it...

... and the beginning of something extraordinary.

From the speed of the Data Engine to the I-can't-believe-it ease of Data Blending, Tableau 6 will change what you can achieve with data. And with more than 60 new features including beautiful combination charts and page trails, you'll never forget the first time you had 6.

Tableau is an alternative to Excel PowerPivot as a BI manipulation tool for Marketplace DataMarket.

The Microsoft Case Studies team wrote Software Developers Gain the Ease of LINQ Data Queries to Compelling Cloud Content on 12/29/2010 (missed when posted):

Developers want to write, test, and tune data queries interactively. LINQPad has long offered this ability for Language-Integrated Query (LINQ) technology, enabling developers to interactively query databases in LINQ. Now, LINQPad extends its support to DataMarket, part of the Windows Azure Marketplace, giving developers the same interactive access to data in the cloud, for more compelling, high-performance applications.

Situation

Software developer Joseph Albahari wanted an alternative to traditional SQL for writing database queries. Complex SQL could be awkward to use. The differences between programming languages such as C# and Microsoft Visual Basic, and SQL databases, made it difficult to use the former to query the latter, a problem that developers call an “impedance mismatch.” When Albahari learned about Language-Integrated Query (LINQ) from Microsoft, he knew that he had found an alternative.

LINQ is a set of extensions to the Microsoft .NET Framework that encompass language-integrated query, set, and transform operations. It extends C# and Microsoft Visual Basic with native language syntax for queries and provides class libraries to take advantage of these capabilities. “LINQ is a significantly more productive querying language,” Albahari says. “It’s simpler, tidier, and higher level. Not having to worry about lower-level details when I write my queries is a big win. LINQ is one of the best technologies that Microsoft has ever produced.”

Albahari was so impressed with LINQ that he built a tool to take advantage of it. He describes that tool, LINQPad, as a “miniature integrated development environment,” a code scratchpad that developers can use to quickly and interactively write and test LINQ queries and general-purpose code snippets. LINQPad is designed to complement the Microsoft Visual Studio development system: Developers can use LINQPad to rapidly write, test, and tune working code snippets, which they can paste directly into Visual Studio.

Developers have responded enthusiastically. Over the past three years, LINQPad has been downloaded 250,000 times and has what Albahari estimates to be tens of thousands of active users.

Meanwhile, people have found a new way to store and access data: in the cloud. Albahari wanted to keep LINQPad relevant by using it to give developers easy, streamlined access to data sources in the cloud.

Solution

Albahari has met that aim by building support for DataMarket, which is part of the Windows Azure Marketplace, into LINQPad. DataMarket is a service that makes it possible for developers and information workers to discover, purchase, and manage premium data subscriptions in the Windows Azure platform, the Microsoft cloud-based computing platform.

Figure 1: The DataMarket connection window in LINQPad makes it easy for developers to access DataMarket, create an account, and enter an authentication key.

DataMarket brings together data, imagery, and real-time web services from leading commercial data providers and authoritative public data sources into one location, under a unified provisioning and billing framework. The service also includes application programming interfaces that make it possible for developers and information workers to consume this premium content with virtually any platform, application, or business workflow.

One of the keys to facilitating support for DataMarket in LINQPad is the Open Data (OData) Protocol. OData is a protocol for querying and updating data across the Internet. It is based on web standards and technologies that enable it to expose data from databases, web services, and applications and to make that data available to websites, databases, and content management systems.

As part of their commitment to standards, both Microsoft and Albahari have built OData support into their products. OData is part of the .NET Framework and is supported in both DataMarket and LINQPad.

Figure 2: Queries to DataMarket using LINQPad can be written more simply than traditional queries, and return plain-English results.

The support for OData in LINQPad made it easy for Albahari to write a DataMarket driver for his product. As a result, developers get LINQPad support for DataMarket out of the box. In a typical example of use, a developer uses LINQPad in the production of a new application and, in particular, in developing and testing an OData query to a data source in DataMarket that the developer then incorporates into the new application.

As a first step, a developer working in LINQPad adds a connection to DataMarket by choosing it as a data source with a couple of mouse clicks. That action also makes it possible for the developer to use LINQPad to subscribe to DataMarket and to locate an account key, if needed (see Figure 1).

After connecting to DataMarket, the developer inserts the URL of the desired data source—perhaps climate information—into LINQPad. The developer’s data set then shows up in LINQPad just like any other data source. The developer then uses the standard functionality in LINQPad to compose a query and run it against the data set—perhaps requesting user-specified climate measures for user-specified locations and date ranges.

The results of the query are returned to LINQPad. Once the query is proven successful, the developer can add it to the application, which can be anything from a simulation game to a climate modeling application (see Figure 2).

Benefits

By adopting support for DataMarket in LINQPad, Albahari says he is making his own product more useful to developers, giving them an easy way to access compelling content and helping to speed the performance of the data queries they develop.

Supports Development of Compelling Applications

Albahari expects the easy access to DataMarket from LINQPad to make LINQPad increasingly useful to developers. As more of the market for data moves to cloud-based services, developers will be able to use LINQPad to access that data seamlessly, giving them another way to make their applications increasingly useful to end users.

“DataMarket is becoming the premier source of data in the cloud,” says Albahari. “Developers want easy access to that data. LINQPad gives it to them without the need to learn new tools. It greatly lowers the bar for developers to experiment with, and take advantage of, the tremendous resources available on DataMarket.”

By making it easier for developers to access data in DataMarket through LINQPad, Albahari expects that developers will be more likely to use DataMarket data. The interoperability that LINQPad has with Microsoft development tools, especially Visual Studio, will facilitate the incorporation of DataMarket queries into applications created with those Microsoft tools.

Speeds Performance of Data Queries

According to Albahari, LINQPad not only encourages the development of applications that consume content from DataMarket, but also speeds the development and performance of those applications.

“Using LINQPad to access DataMarket lets developers write, test, and tune queries without having to create any classes or projects,” Albahari says. “It’s a faster, more streamlined process: developers can see the results instantly, without going through a save-compile-run cycle.”

Albahari also points out that the use of OData in LINQPad means that queries execute on the server rather than on the client, so data is filtered at source before being returned to the client system. The result is that the data download to the client is smaller and faster than it otherwise would be, boosting performance and reducing network bandwidth consumption.
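To illustrate what filtering at the source looks like on the wire, here is a minimal sketch that builds an OData query URI with the `$filter` and `$top` system query options, which is the kind of request a LINQ `Where` and `Take` against an OData feed typically turns into. The feed address, the `Observations` entity set and the `Station` and `Year` properties are hypothetical stand-ins, not an actual DataMarket offering, and the `ODataQuery` helper is an assumption made for this example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: appends OData system query options to a feed URI.
static class ODataQuery
{
    public static Uri Build(string feedUri, string filter, int top)
    {
        var options = new List<string>
        {
            // The filter expression is evaluated by the service, not the client.
            "$filter=" + Uri.EscapeDataString(filter),
            // Cap the number of entities the service returns.
            "$top=" + top,
        };
        return new Uri(feedUri + "?" + string.Join("&", options.ToArray()));
    }

    static void Main()
    {
        Uri query = Build(
            "https://example.com/ClimateService.svc/Observations", // hypothetical feed
            "Station eq 'SEA' and Year ge 2000",                   // hypothetical properties
            50);
        Console.WriteLine(query.AbsoluteUri);
    }
}
```

Because the filter and row limit travel in the URI, only the matching entities come back in the response, which is where the smaller download described above comes from.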


Windows Azure AppFabric: Access Control and Service Bus

Alik Levin explained Windows Azure AppFabric Access Control Service v2 - Adding Identity Provider Using Management Service on 1/8/2011:

This is a quick intro to using the Windows Azure AppFabric Access Control Service (ACS) v2 Management Service. In this sample I will show you what’s needed to add ADFS as an identity provider.

Summary of steps:

- Step 1 - Collect configuration information.

- Step 2 - Add references to required services and assemblies.

- Step 3 – Create Management Service Proxy

- Step 4 - Add identity provider

- Step 5 - Test your work

The full sample with other functionalities is available here - Code Sample: Management Service.

The simplified sample I used for this walkthrough is here.

Other ACS code samples available here - Code Samples Index.

Step 1 - Collect configuration information.

- Obtain the Management Client password using the ACS Management Portal. Log in to the Access Control Service management portal. In the Administration section, click the Management Service link. On the Management Service page, click the ManagementClient link (ManagementClient is the actual username for the service). In the Credentials section, click either the Symmetric Key or the Password link. The value in each is the same. This is the password.

- Obtain the signing certificate from https://<<YOURNAMESPACE>>.accesscontrol.appfabriclabs.com/FederationMetadata/2007-06/FederationMetadata.xml. It should appear as a very long string in the XML document.

- Make a note of your namespace and AppFabric host name (for the lab environment it is accesscontrol.appfabriclabs.com).

- You should have these handy.

    string serviceIdentityUsernameForManagement = "ManagementClient";
    string serviceIdentityPasswordForManagement = "QL1nPx/iuX...YOUR PASSWORD FOR MANAGEMENT SERVICE GOES HERE";
    string serviceNamespace = "YOUR NAMESPACE GOES HERE";
    string acsHostName = "accesscontrol.appfabriclabs.com";
    string signingCertificate = "MIIDIDCCAgigA... YOUR SIGNING CERT GOES HERE";
    //WILL BE USED TO CACHE AND REUSE OBTAINED TOKEN.
    string cachedSwtToken;

Step 2 - Add references to required services and assemblies.

- Add reference to System.Web.Extensions.

- Add service reference to Management Service. Obtain Management Service URL (https://<<YOURNAMESPACE>>.accesscontrol.appfabriclabs.com/v2/mgmt/service).

- Add the following declarations

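    using System.Web;
    using System.Net;
    using System.Text;                  // Encoding, used when reading the token response
    using System.Collections.Generic;   // Dictionary<,>, used when parsing the JSON response
    using System.Data.Services.Client;
    using System.Collections.Specialized;
    using System.Web.Script.Serialization;

Step 3 – Create Management Service Proxy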

To create Management Service proxy:

- Using configuration information collected earlier instantiate the proxy:

    string managementServiceHead = "v2/mgmt/service/";
    string managementServiceEndpoint = string.Format("https://{0}.{1}/{2}", serviceNamespace, acsHostName, managementServiceHead);
    ManagementService managementService = new ManagementService(new Uri(managementServiceEndpoint));
    managementService.SendingRequest += GetTokenWithWritePermission;

- Implement GetTokenWithWritePermission and its helper methods. It adds the SWT OAuth token to the Authorization header of the HTTP request.

    public static ManagementService CreateManagementServiceClient()
    {
        string managementServiceHead = "v2/mgmt/service/";
        string managementServiceEndpoint = string.Format("https://{0}.{1}/{2}", serviceNamespace, acsHostName, managementServiceHead);
        ManagementService managementService = new ManagementService(new Uri(managementServiceEndpoint));
        managementService.SendingRequest += GetTokenWithWritePermission;
        return managementService;
    }

    public static void GetTokenWithWritePermission(object sender, SendingRequestEventArgs args)
    {
        GetTokenWithWritePermission((HttpWebRequest)args.Request);
    }

    /// <summary>
    /// Helper function for the event handler above, adding the SWT token to the HTTP 'Authorization' header.
    /// The SWT token is cached so that we don't need to obtain a token on every request.
    /// </summary>
    public static void GetTokenWithWritePermission(HttpWebRequest args)
    {
        if (cachedSwtToken == null)
        {
            cachedSwtToken = GetTokenFromACS();
        }
        args.Headers.Add(HttpRequestHeader.Authorization, string.Format("OAuth {0}", cachedSwtToken));
    }

    /// <summary>
    /// Obtains a SWT token from ACSv2.
    /// </summary>
    private static string GetTokenFromACS()
    {
        // request a token from ACS
        WebClient client = new WebClient();
        client.BaseAddress = string.Format("https://{0}.{1}", serviceNamespace, acsHostName);

        NameValueCollection values = new NameValueCollection();
        values.Add("grant_type", "password");
        values.Add("client_id", serviceIdentityUsernameForManagement);
        values.Add("username", serviceIdentityUsernameForManagement);
        values.Add("client_secret", serviceIdentityPasswordForManagement);
        values.Add("password", serviceIdentityPasswordForManagement);

        byte[] responseBytes = client.UploadValues("/v2/OAuth2-10/rp/AccessControlManagement", "POST", values);
        string response = Encoding.UTF8.GetString(responseBytes);

        // Parse the JSON response and return the access token
        JavaScriptSerializer serializer = new JavaScriptSerializer();
        Dictionary<string, object> decodedDictionary = serializer.DeserializeObject(response) as Dictionary<string, object>;
        return decodedDictionary["access_token"] as string;
    }

Step 4 - Add identity provider

To add an identity provider:

- Add your identity provider as an issuer (svc is an instance of a proxy to the Management Service):

    Issuer issuer = new Issuer { Name = identityProviderName };
    svc.AddToIssuers(issuer);
    svc.SaveChanges(SaveChangesOptions.Batch);

- Add identity provider:

    IdentityProvider identityProvider = new IdentityProvider()
    {
        DisplayName = identityProviderName,
        Description = identityProviderName,
        WebSSOProtocolType = "WsFederation",
        IssuerId = issuer.Id
    };
    svc.AddObject("IdentityProviders", identityProvider);

- Create identity provider’s signing key based on the certificate obtained earlier:

    IdentityProviderKey identityProviderKey = new IdentityProviderKey()
    {
        DisplayName = "SampleIdentityProviderKeyDisplayName",
        Type = "X509Certificate",
        Usage = "Signing",
        Value = Convert.FromBase64String(signingCertificate),
        IdentityProvider = identityProvider,
        StartDate = startDate,
        EndDate = endDate,
    };
    svc.AddRelatedObject(identityProvider, "IdentityProviderKeys", identityProviderKey);

- Update identity provider’s sign in address:

    IdentityProviderAddress realm = new IdentityProviderAddress()
    {
        Address = "http://yourdomain.com/sign-in/",
        EndpointType = "SignIn",
        IdentityProvider = identityProvider,
    };
    svc.AddRelatedObject(identityProvider, "IdentityProviderAddresses", realm);
    svc.SaveChanges(SaveChangesOptions.Batch);

- Make the identity provider available to relying parties, except the Management relying party:

    foreach (RelyingParty rp in svc.RelyingParties)
    {
        // Skip the built-in management RP.
        if (rp.Name != "AccessControlManagement")
        {
            svc.AddToRelyingPartyIdentityProviders(new RelyingPartyIdentityProvider()
            {
                IdentityProviderId = identityProvider.Id,
                RelyingPartyId = rp.Id
            });
        }
    }
    svc.SaveChanges(SaveChangesOptions.Batch);

Step 5 - Test your work

To test your work:

- Log on to the Access Control Service Management Portal.

- On the Access Control Service page, click the Rule Groups link in the Trust Relationships section.

- Click any of the available rule groups.

- On the Edit Rule Group page, click the Add Rule link.

- On the Add Claim Rule page, choose the newly added identity provider from the dropdown list in the Claim Issuer section.

- Leave the rest at their default values.

- Click the Save button.

- You have just created a passthrough rule for the identity provider; if no error was returned, chances are you are good to go.

Related Books

- Programming Windows Identity Foundation (Dev - Pro)

- A Guide to Claims-Based Identity and Access Control (Patterns & Practices) – free online version

- Developing More-Secure Microsoft ASP.NET 2.0 Applications (Pro Developer)

- Ultra-Fast ASP.NET: Build Ultra-Fast and Ultra-Scalable web sites using ASP.NET and SQL Server

- Advanced .NET Debugging

- Debugging Microsoft .NET 2.0 Applications

Related Info

- Windows Identity Foundation (WIF) and Azure AppFabric Access Control (ACS) Service Survival Guide

- Videos: Windows Azure Security Essentials For Decision Makers, Security Architecture, Access, and Secure Development

- Video: What’s Windows Azure AppFabric Access Control Service (ACS) v2?

- Video: What Windows Azure AppFabric Access Control Service (ACS) v2 Can Do For Me?

- Video: Windows Azure AppFabric Access Control Service (ACS) v2 Key Components and Architecture

- Video: Windows Azure AppFabric Access Control Service (ACS) v2 Prerequisites

Attachment:

ACSManagementService.zip

<Return to section navigation list>

Windows Azure Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Corrado Cavalli (@corcav) explained How to inspect Azure package contents in a 1/9/2011 post:

Sometimes you need to inspect what’s inside an Azure package (.cspkg file) before it gets published to the cloud, because you need to be sure that some required assemblies are included, or maybe simply because you need to figure out why your app doesn’t work when running from the cloud.

A common case is when you use WCF RIA Services and you forget to include both System.ServiceModel.DomainServices.Hosting and System.ServiceModel.DomainServices.Server inside the package by marking both with CopyLocal=true (any data access related libraries must be included too, BTW…)

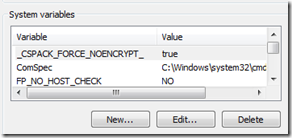

The trick to allow package inspection is to add an environment variable named _CSPACK_FORCE_NOENCRYPT_ with value set to true.

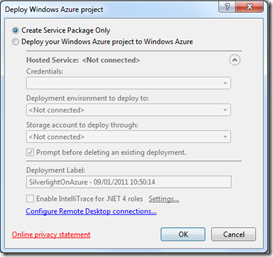

After this, open (or restart) Visual Studio and create a package by right-clicking the Azure project and selecting the Publish option:

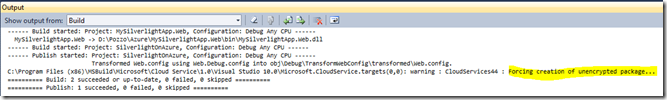

You can see in the Visual Studio output window that the package has been created without encryption, and this allows us to treat it as a normal .zip file

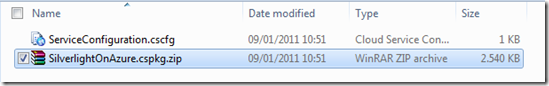

Now you can inspect your package by simply renaming the .cspkg file to .zip

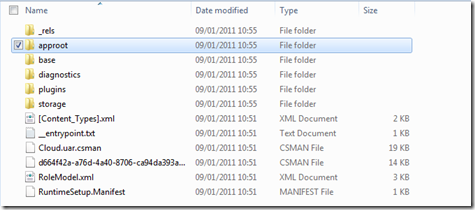

Inside the .cspkg you have several files; rename the one that has the .cssx extension to .zip (it is probably the biggest one…)

And inside approot\ClientBin you’ll find (finally!) the xap file that I’m sure you know how to open
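Once a package has been built without encryption, the rename-and-browse steps above can also be scripted. The following is a minimal Python sketch and is not part of Corrado's post. It assumes, as described above, that the unencrypted .cspkg and the .cssx file inside it are ordinary zip archives; the function name `list_package_contents` is hypothetical.

```python
import io
import zipfile


def list_package_contents(package_path):
    """Return {outer entry: [inner entries]} for an unencrypted .cspkg.

    Assumes the package was built with _CSPACK_FORCE_NOENCRYPT_ set to
    true, so the .cspkg and each .cssx inside it are plain zip archives.
    """
    contents = {}
    with zipfile.ZipFile(package_path) as package:
        for name in package.namelist():
            inner = []
            if name.lower().endswith(".cssx"):
                # The role payload is itself a zip; open it from memory.
                data = io.BytesIO(package.read(name))
                with zipfile.ZipFile(data) as role:
                    inner = role.namelist()
            contents[name] = inner
    return contents
```

Calling `list_package_contents` with the path of a published package returns every top-level entry, with the file list of each .cssx role archive attached, so a missing assembly or .xap file can be spotted without renaming anything.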

Ron Jacobs (@ronljacobs) described how you can contribute to Workflow on Windows Azure Research in a 1/6/2011 post to his MSDN blog:

Our team is busily working on some great new stuff to bring first class support for Workflow to Windows Azure. We want to hear from you so we can be sure to deliver on what you need.

Drop me a line if you want to chat and you

- Have workflow (WF3/ WF4) solutions running on Azure or other cloud systems today

- Have workflow (WF3/ WF4) solutions on-premises and are moving that solution to Azure in the next 3 months

- Have solutions running on Azure today and are contemplating adding Workflow to your solution

<Return to section navigation list>

Visual Studio LightSwitch

Frans Bouma (@FransBouma) explained How to find and fix performance problems in ORM powered applications on 1/8/2011:

Once in a while we get requests about how to fix performance problems with our framework. As it comes down to following the same steps and looking into the same things every single time, I decided to write a blogpost about it instead, so more people can learn from this and solve performance problems in their O/R mapper powered applications. In some parts it's focused on LLBLGen Pro but it's also usable for other O/R mapping frameworks, as the vast majority of performance problems in O/R mapper powered applications are not specific for a certain O/R mapper framework.

Too often, the developer looks at the wrong part of the application, trying to fix what isn't a problem in that part, and getting frustrated that 'things are so slow with <insert your favorite framework X here>'. I'm in the O/R mapper business for a long time now (almost 10 years, full time) and as it's a small world, we O/R mapper developers know almost all tricks to pull off by now: we all know what to do to make task ABC faster and what compromises (because there are almost always compromises) to deal with if we decide to make ABC faster that way. Some O/R mapper frameworks are faster in X, others in Y, but you can be sure the difference is mainly a result of a compromise some developers are willing to deal with and others aren't. That's why the O/R mapper frameworks on the market today are different in many ways, even though they all fetch and save entities from and to a database.

I'm not suggesting there's no room for improvement in today's O/R mapper frameworks; there always is. But it's no longer a matter of 'the slowness of the application is caused by the O/R mapper'. Perhaps query generation can be optimized a bit here, row materialization can be optimized a bit there, but it mainly comes down to milliseconds. Still worth it if you're a framework developer, but it's not much compared to the time spent inside databases and in user code: if a complete fetch takes 40ms or 50ms (from call to entity object collection), it won't make a difference for your application, as that 10ms difference won't be noticed. That's why it's very important to find the real locations of the problems, so developers can fix them properly and don't get frustrated because their quest for a fast, well-performing application failed.

Performance tuning basics and rules

Finding and fixing performance problems in any application is a strict procedure with four prescribed steps: isolate, analyze, interpret and fix, in that order. It's key that you don't skip a step or make assumptions: these steps help you find the cause of a problem which seems to be there, and decide whether to fix it or leave it as-is. Skipping a step, or assuming things will be bad/slow without doing analysis, leads down the path of premature optimization and won't actually solve your problems; it will only create new ones.

The most important rule of finding and fixing performance problems in software is that you have to understand what 'performance problem' actually means. Most developers will say "when a piece of software / code is slow, you have a performance problem". But is that actually the case? If I write a Linq query which will aggregate, group and sort 5 million rows from several tables to produce a resultset of 10 rows, it might take more than a couple of milliseconds before that resultset is ready to be consumed by other logic. If I solely look at the Linq query, the code consuming the resultset of the 10 rows and then look at the time it takes to complete the whole procedure, it will appear to me to be slow: all that time taken to produce and consume 10 rows? But if you look closer, if you analyze and interpret the situation, you'll see it does a tremendous amount of work, and in that light it might even be extremely fast. With every performance problem you encounter, always do realize that what you're trying to solve is perhaps not a technical problem at all, but a perception problem.

The second most important rule you have to understand is based on the old saying "Penny wise, Pound Foolish": the part which takes e.g. 5% of the total time T for a given task isn't worth optimizing if you have another part which takes a much larger part of the total time T for that same given task. Optimizing parts which are relatively insignificant for the total time taken is not going to bring you better results overall, even if you totally optimize that part away. This is the core reason why analysis of the complete set of application parts which participate in a given task is key to being successful in solving performance problems: No analysis -> no problem -> no solution.
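The arithmetic behind this rule is easy to check. The sketch below is an illustration added here, not code from Frans' article; the function names are hypothetical. It computes the upper bound on the overall gain from optimizing one part, which is the familiar Amdahl's-law calculation.

```python
def best_case_speedup(fraction_of_total):
    """Upper bound on the overall speedup if a part taking
    `fraction_of_total` of the total time T were optimized away entirely."""
    if not 0 <= fraction_of_total < 1:
        raise ValueError("fraction_of_total must be in [0, 1)")
    return 1.0 / (1.0 - fraction_of_total)


def time_after_optimizing(total_time, fraction_of_total, part_speedup):
    """Total time after making one part `part_speedup` times faster."""
    untouched = total_time * (1.0 - fraction_of_total)
    optimized = total_time * fraction_of_total / part_speedup
    return untouched + optimized
```

For a task taking 1000 ms, making a part that accounts for 5% of the time ten times faster gives 955 ms, while merely doubling the speed of a part that accounts for 80% gives 600 ms.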

One warning up front: hunting for performance will always include making compromises. Fast software can be made maintainable, but if you want to squeeze as much performance out of your software, you will inevitably be faced with the dilemma of compromising one or more from the group {readability, maintainability, features} for the extra performance you think you'll gain. It's then up to you to decide whether it's worth it. In almost all cases it's not. The reason for this is simple: the vast majority of performance problems can be solved by implementing the proper algorithms, the ones with proven Big O-characteristics so you know the performance you'll get plus you know the algorithm will work. The time taken by the algorithm implementing code is inevitable: you already implemented the best algorithm. You might find some optimizations on the technical level but in general these are minor.

Let's look at the four steps to see how they guide us through the quest to find and fix performance problems.

Isolate

The first thing you need to do is to isolate the areas in your application which are assumed to be slow. For example, if your application is a web application and a given page is taking several seconds or even minutes to load, it's a good candidate to check out. It's important to start with the isolate step because it allows you to focus on a single code path per area with a clear begin and end and ignore the rest. The rest of the steps are taken per identified problematic area. Keep in mind that isolation focuses on tasks in an application, not code snippets. A task is something that's started in your application by either another task or the user, or another program, and has a beginning and an end. You can see a task as a piece of functionality offered by your application.

Analyze

Once you've determined the problem areas, you have to perform analysis on the code paths of each area, to see where the performance problems occur and which areas are not the problem. This is a multi-layered effort: an application which uses an O/R mapper typically consists of multiple parts: there's likely some kind of interface (web, webservice, windows etc.), a part which controls the interface and business logic, the O/R mapper part and the RDBMS, all connected with either a network or inter-process connections provided by the OS or other means. Each of these parts, including the connectivity plumbing, eat up a part of the total time it takes to complete a task, e.g. load a webpage with all orders of a given customer X.

To understand which parts participate in the task / area we're investigating and how much they contribute to the total time taken to complete the task, analysis of each participating part is essential. Start with the code you wrote which starts the task, analyze the code and track the path it follows through your application. Check what the code does along the way, and verify whether it's correct or not.

Analyze whether you have implemented the right algorithms in your code for this particular area. Remember we're looking at one area at a time, which means we're ignoring all other code paths, just the code path of the current problematic area, from begin to end and back. Don't dig in and start optimizing at the code level just yet. We're just analyzing. If your analysis reveals big architectural stupidity, it's perhaps a good idea to rethink the architecture at this point. For the rest, we're analyzing which means we collect data about what could be wrong, for each participating part of the complete application.

Reviewing the code you wrote is a good way to get a deeper understanding of what is going on for a given task, but ultimately it lacks precision and an overview of what really happens: humans aren't good code interpreters, computers are. We therefore need to utilize tools to get a deeper understanding of which parts contribute how much time to the total task, which other parts trigger them and, for example, how many times they are called. There are two different kinds of tools which are necessary: .NET profilers and O/R mapper / RDBMS profilers.

.NET profiling

.NET profilers (e.g. dotTrace by JetBrains or Ants by Red Gate software) show exactly which pieces of code are called, how many times they're called, and the time it took to run that piece of code, at the method level and sometimes even at the line level. The .NET profilers are essential tools for understanding whether the time taken to complete a given task / area in your application is consumed by .NET code, where exactly in your code, the path to that code, how many times that code was called by other code and thus reveals where hotspots are located: the areas where a solution can be found. Importantly, they also reveal which areas can be left alone: remember our penny wise pound foolish saying: if a profiler reveals that a group of methods are fast, or don't contribute much to the total time taken for a given task, ignore them. Even if the code in them is perhaps complex and looks like a candidate for optimization: you can work all day on that, it won't matter.

As we're focusing on a single area of the application, it's best to start profiling right before you actually activate the task/area. Most .NET profilers support this by starting the application without starting the profiling procedure just yet. You navigate to the particular part which is slow, start profiling in the profiler, in your application you perform the actions which are considered slow, and afterwards you get a snapshot in the profiler. The snapshot contains the data collected by the profiler during the slow action, so most data is produced by code in the area to investigate. This is important, because it allows you to stay focused on a single area.
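The same idea of switching profiling on only around the slow task can be tried with any profiler that has a programmatic API. The sketch below is an illustration only: it uses Python's standard `cProfile` module rather than a .NET profiler, and `profile_task` and `load_orders` are hypothetical names.

```python
import cProfile
import io
import pstats


def profile_task(task, *args, **kwargs):
    """Run `task` under the profiler and return (result, report text).

    Profiling is enabled just before the task starts and disabled right
    after it ends, so the snapshot contains mostly that task's calls.
    """
    profiler = cProfile.Profile()
    profiler.enable()
    try:
        result = task(*args, **kwargs)
    finally:
        profiler.disable()
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Show the ten entries with the highest cumulative time.
    stats.sort_stats("cumulative").print_stats(10)
    return result, buffer.getvalue()


def load_orders(count):
    # Hypothetical stand-in for the slow task being investigated.
    return [n * n for n in range(count)]
```

Calling `profile_task(load_orders, 1000)` returns the task's result together with a report limited to calls made while the task ran, which keeps the rest of the application out of the snapshot.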

O/R mapper and RDBMS profiling

.NET profilers give you a good insight in the .NET side of things, but not in the RDBMS side of the application. As this article is about O/R mapper powered applications, we're also looking at databases, and the software making it possible to consume the database in your application: the O/R mapper. To understand which parts of the O/R mapper and database participate how much to the total time taken for task T, we need different tools.

There are two kinds of tools focusing on O/R mappers and database performance profiling: O/R mapper profilers and RDBMS profilers. For O/R mapper profilers, you can look at LLBLGen Prof by Hibernating Rhinos or the Linq to Sql/LLBLGen Pro profiler by Huagati. Hibernating Rhinos also has profilers for other O/R mappers like NHibernate (NHProf) and Entity Framework (EFProf), which work the same way as LLBLGen Prof. For RDBMS profilers, you have to check whether the RDBMS vendor has a profiler. For example, for SQL Server the profiler is shipped with SQL Server, and for Oracle it's built into the RDBMS; there are also 3rd party tools.

Which tool you're using isn't really important, what's important is that you get insight in which queries are executed during the task / area we're currently focused on and how long they took. Here, the O/R mapper profilers have an advantage as they collect the time it took to execute the query from the application's perspective so they also collect the time it took to transport data across the network. This is important because a query which returns a massive resultset or a resultset with large blob/clob/ntext/image fields takes more time to get transported across the network than a small resultset and a database profiler doesn't take this into account most of the time.

Another tool to use in this case, which is more low level and which not all O/R mappers support (though LLBLGen Pro and NHibernate do), is tracing: most O/R mappers offer some form of tracing or logging system which you can use to collect the SQL generated and executed, and often also other activity behind the scenes. While tracing can produce a tremendous amount of data in some cases, it also gives insight into what's going on.
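To make the idea of tracing concrete, here is a minimal sketch that collects the SQL a connection executes and times the call from the application's side. It is an illustration, not an O/R mapper feature: it uses Python's standard `sqlite3` module, whose `set_trace_callback` hook reports each statement, and the helper name `run_traced` is hypothetical.

```python
import sqlite3
import time


def run_traced(connection, sql, parameters=()):
    """Execute `sql`, returning (rows, traced statements, elapsed seconds).

    Elapsed time is measured from the caller's side, so it includes
    fetching the rows, not just the time the engine spent on the query.
    """
    statements = []
    connection.set_trace_callback(statements.append)
    started = time.perf_counter()
    try:
        rows = connection.execute(sql, parameters).fetchall()
    finally:
        elapsed = time.perf_counter() - started
        # Switch tracing off again so later calls are not recorded.
        connection.set_trace_callback(None)
    return rows, statements, elapsed
```

The list of traced statements answers "which queries ran during this task, and how many", while the elapsed time reflects the application's perspective described above.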

Interpret

After we've completed the analysis step it's time to look at the data we've collected. We've done code reviews to see whether we've done anything stupid and which parts actually take place and if the proper algorithms have been implemented. We've done .NET profiling to see which parts are choke points and how much time they contribute to the total time taken to complete the task we're investigating. We've performed O/R mapper profiling and RDBMS profiling to see which queries were executed during the task, how many queries were generated and executed and how long they took to complete, including network transportation.

All this data reveals two things: which parts are big contributors to the total time taken and which parts are irrelevant. Both aspects are very important. The parts which are irrelevant (i.e. don't contribute significantly to the total time taken) can be ignored from now on, we won't look at them.

The parts which contribute a lot to the total time taken are important to look at. We first have to look at the .NET profiler results, to see whether the time taken is consumed in our own code, in .NET framework code, in the O/R mapper itself or somewhere else. For example, if most of the time is consumed by DbCommand.ExecuteReader, the time it took to complete the task depends on the time it takes to fetch the data from the database. If there was just 1 query executed, according to tracing or O/R mapper profilers / RDBMS profilers, check whether that query is optimal, uses indexes or has to deal with a lot of data.
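Checking whether a query uses an index usually means asking the database for its execution plan. Every RDBMS has its own tooling for this; the sketch below uses SQLite through Python's `sqlite3` module purely as a stand-in, and the table, index and helper names are hypothetical.

```python
import sqlite3


def query_plan(connection, sql, parameters=()):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, parameters)
    return [row[3] for row in rows]


connection = sqlite3.connect(":memory:")
connection.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
sql = "SELECT * FROM orders WHERE customer_id = ?"

# Without an index on customer_id the plan is a full table scan.
plan_without_index = query_plan(connection, sql, (42,))

# After adding the index, the plan names it as the access path.
connection.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_with_index = query_plan(connection, sql, (42,))
```

Comparing the two plans shows the scan being replaced by a search that names `idx_orders_customer`. That is the kind of evidence to collect before deciding that a query is, or isn't, the problem.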

Interpret means that you follow the path from begin to end through the data collected and determine where, along the path, the most time is contributed. It also means that you have to check whether this was expected or is totally unexpected. My previous example of the 10 row resultset of a query which groups millions of rows will likely reveal that a long time is spent inside the database and almost no time is spent in the .NET code, meaning the RDBMS part contributes the most to the total time taken; the rest is, compared to that time, irrelevant. Considering the vastness of the source data set, it's expected this will take some time. However, does it need tweaking? Perhaps all possible tweaks are already in place. In the interpret step you then have to decide whether further action in this area is necessary or not, based on what the analysis results show: if the analysis results were unexpected and there is room for improvement in the area which contributes the most to the total time taken, action should be taken. If not, you can only accept the situation and move on.

In all cases, document your decision together with the analysis you've done. If you decide that the perceived performance problem is actually expected due to the nature of the task performed, it's essential that in the future when someone else looks at the application and starts asking questions you can answer them properly and new analysis is only necessary if situations changed.

Fix

After interpreting the analysis results you've concluded that some areas need adjustment. This is the fix step: you're actively correcting the performance problem with proper action targeted at the real cause. In many cases related to O/R mapper powered applications it means you'll use different features of the O/R mapper to achieve the same goal, or apply optimizations at the RDBMS level. It could also mean you apply caching inside your application (trading memory consumption for performance) to avoid unnecessarily re-querying data and re-consuming the results.

After applying a change, it's key that you re-do the analysis and interpretation steps: compare the results and expectations with what you had before, to see whether your actions had any effect or whether they moved the problem to a different part of the application. Don't fall into the trap of doing a partial analysis: do the full analysis again, both .NET profiling and O/R mapper / RDBMS profiling. It might very well be that the changes you've made make one part faster but another part significantly slower, in such a way that the overall problem hasn't changed at all.

Performance tuning is dealing with compromises and making choices: to use one feature over the other, to accept a higher memory footprint, to go away from the strict-OO path and execute queries directly onto the RDBMS, these are choices and compromises which will cross your path if you want to fix performance problems with respect to O/R mappers or data-access and databases in general. In most cases it's not a big issue: alternatives are often good choices too and the compromises aren't that hard to deal with. What is important is that you document why you made a choice, a compromise: which analysis data, which interpretation led you to the choice made. This is key for good maintainability in the years to come.

Most common performance problems with O/R mappers

Below is an incomplete list of common performance problems related to data-access / O/R mappers / RDBMS code. It will help you with fixing the hotspots you found in the interpretation step.

- SELECT N+1: (Lazy-loading specific). Lazy loading triggered performance bottlenecks. Consider a list of Orders bound to a grid. You have a Field mapped onto a related field in Order, Customer.CompanyName. Showing this column in the grid will make the grid fetch (indirectly) for each row the Customer row. This means you'll get for the single list not 1 query (for the orders) but 1+(the number of orders shown) queries. To solve this: use eager loading using a prefetch path to fetch the customers with the orders. SELECT N+1 is easy to spot with an O/R mapper profiler or RDBMS profiler: if you see a lot of identical queries executed at once, you have this problem.

- Prefetch paths using many path nodes or sorting, or limiting. Eager loading problem. Prefetch paths can help with performance, but as 1 query is executed per node, the amount of data fetched in a child node can be bigger than you think. Also consider that data in every node is merged on the client within the parent. This is fast, but it can also take some time if you fetch massive amounts of entities. If you keep fetches small, you can use tuning parameters like the ParameterizedPrefetchPathThreshold setting to get more optimal queries.

- Deep inheritance hierarchies of type Target Per Entity/Type. If you use inheritance of type Target per Entity / Type (each type in the inheritance hierarchy is mapped onto its own table/view), fetches will join subtype- and supertype tables in many cases, which can lead to a lot of performance problems if the hierarchy has many types. With this problem, keep inheritance to a minimum if possible, or switch to a hierarchy of type Target Per Hierarchy, which means all entities in the inheritance hierarchy are mapped onto the same table/view. Of course this has its own set of drawbacks, but it's a compromise you might want to take.

- Fetching massive amounts of data by fetching large lists of entities. LLBLGen Pro supports paging (and limiting the # of rows returned), which is often key to processing large sets of data. Use paging on the RDBMS if possible (so a query is executed which returns only the rows in the page requested). When using paging in a web application, be sure that you switch on server-side paging on the datasourcecontrol used. In this case, paging on the grid alone is not enough: this can lead to fetching a lot of data which is then loaded into the grid and paged there. Keep in mind that analyzing queries for paging could lead to the false assumption that paging doesn't occur, e.g. when the query contains a field of type ntext/image/clob/blob and DISTINCT can't be applied while it should have been (e.g. due to a join): the datareader will do DISTINCT filtering on the client. This is a little slower, but it does perform paging functionality on the data-reader, so it won't fetch all rows even if the query suggests it does.

- Fetching massive amounts of data because blob/clob/ntext/image fields aren't excluded. LLBLGen Pro supports field exclusion for queries. You can exclude fields (also in prefetch paths) per query to avoid fetching all fields of an entity, e.g. when you don't need them for the logic consuming the resultset. Excluding fields can greatly reduce the amount of time spent on data-transport across the network. Use this optimization if you see that there's a big difference between query execution time on the RDBMS and the time reported by the .NET profiler for the ExecuteReader method call.

- Doing client-side aggregates/scalar calculations by consuming a lot of data. If possible, try to formulate a scalar query or group by query using the projection system or GetScalar functionality of LLBLGen Pro to do data consumption on the RDBMS server. It's far more efficient to process data on the RDBMS server than to first load it all in memory, then traverse the data in-memory to calculate a value.

- Using .ToList() constructs inside linq queries. It might be you use .ToList() somewhere in a Linq query which makes the query be run partially in-memory. Example:

    var q = from c in metaData.Customers.ToList() where c.Country=="Norway" select c;

This will actually fetch all customers in-memory and do an in-memory filtering, as the linq query is defined on an IEnumerable<T>, and not on the IQueryable<T>. Linq is nice, but it can often be a bit unclear where some parts of a Linq query might run.

- Fetching all entities to delete into memory first. To delete a set of entities it's rather inefficient to first fetch them all into memory and then delete them one by one. It's more efficient to execute a DELETE FROM ... WHERE query on the database directly to delete the entities in one go. LLBLGen Pro supports this feature, and so do some other O/R mappers. It's not always possible to do this operation in the context of an O/R mapper however: if an O/R mapper relies on a cache, these kinds of operations are likely not supported because they make it impossible to track whether an entity is actually removed from the DB and thus can be removed from the cache.

- Fetching all entities to update with an expression into memory first. Similar to the previous point: it is more efficient to update a set of entities directly with a single UPDATE query using an expression instead of fetching the entities into memory first and then updating the entities in a loop, and afterwards saving them. It might however be a compromise you don't want to take as it is working around the idea of having an object graph in memory which is manipulated and instead makes the code fully aware there's a RDBMS somewhere.
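The SELECT N+1 item at the top of this list is the easiest one to reproduce. The sketch below is an illustration in Python with an in-memory SQLite database, not LLBLGen Pro code; the schema, sample rows and function names are hypothetical. A JOIN is used as the simplest stand-in for eager loading, whereas a prefetch path as described above issues one query per node instead.

```python
import sqlite3


def build_sample_database():
    """Create a small in-memory database with hypothetical sample data."""
    connection = sqlite3.connect(":memory:")
    connection.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, company_name TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER);
        INSERT INTO customers VALUES (1, 'Alpha'), (2, 'Beta');
        INSERT INTO orders VALUES (10, 1), (11, 2), (12, 1);
        """
    )
    return connection


def orders_lazy(connection):
    """SELECT N+1: one query for the orders, then one more per order."""
    queries = 1
    result = []
    orders = connection.execute(
        "SELECT id, customer_id FROM orders ORDER BY id"
    ).fetchall()
    for order_id, customer_id in orders:
        name = connection.execute(
            "SELECT company_name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()[0]
        queries += 1
        result.append((order_id, name))
    return result, queries


def orders_eager(connection):
    """Fetch the same data with a single joined query."""
    result = connection.execute(
        "SELECT o.id, c.company_name FROM orders o "
        "JOIN customers c ON c.id = o.customer_id ORDER BY o.id"
    ).fetchall()
    return result, 1
```

With three orders, `orders_lazy` issues four queries and `orders_eager` issues one, yet both return the same rows. In a profiler the lazy variant shows up as a run of near-identical customer queries.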

Conclusion

Performance tuning is almost always about compromises and making choices. It's also about knowing where to look and how the systems in play behave and should behave. The four steps I provided should help you stay focused on the real problem and lead you towards the solution. Knowing how to optimally use the systems participating in your own code (.NET framework, O/R mapper, RDBMS, network/services) is key for success as well as knowing what's going on inside the application you built. I hope you'll find this guide useful in tracking down performance problems and dealing with them in a useful way.

Visual Studio LightSwitch depends on the Entity Framework v4 O/RM, so Frans’ article belongs here.

<Return to section navigation list>

Windows Azure Infrastructure

My Windows Azure Compute Extra-Small VM Beta Now Available in the Cloud Essentials Pack and for General Use post of 1/8/2011 (updated 1/9/2011) reported:

On 1/8/2011 (or, perhaps, a day earlier) the Extra-Small virtual machine (VM) instance became available in a beta version. This means that members of the Microsoft Partner Network can finally take advantage of the 750 hours/month of free Windows Azure Compute instance benefit of the Cloud Essentials Pack, as described in the Extra-Small VMs in Microsoft Cloud Partner – Windows Azure Build and Develop Benefits section.

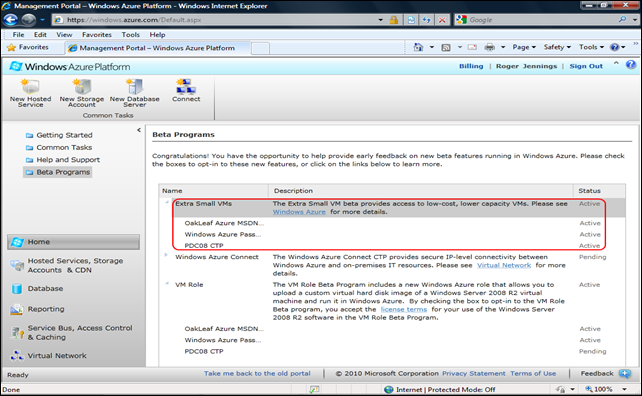

If you signed up for the Extra-Small VM beta and have been on-boarded, you’ll see a Beta page similar to this in the new Windows Azure Portal (click images for full-size 1024x768-px screen captures):

Note: I still haven’t been on-boarded to the Windows Azure Connect beta.

The Extra-Small VM is enabled on each Hosted Service of your account (even a disabled service). In my case:

- OakLeaf Azure MSDN: A free single-instance benefit from my MSDN Ultimate subscription, which hosts the OakLeaf Systems Azure Table Services Sample Project - Paging and Batch Updates Demo in the South Central US data center

- Windows Azure Pass: A free 30-day single Windows Azure and SQL Azure instance pass, which currently hosts a similar OakLeaf Systems Azure Table Services Sample Project (the pass expires on 1/10/2011).

- PDC08 CTP: A disabled instance from the PDC 2008 era that I can’t remove from the Portal

Clicking the Windows Azure link for more details opens the main Windows Azure landing page with cycling Available Now: Extra Small Instance, Windows Azure SDK v1.3, and Virtual Machine Role images:

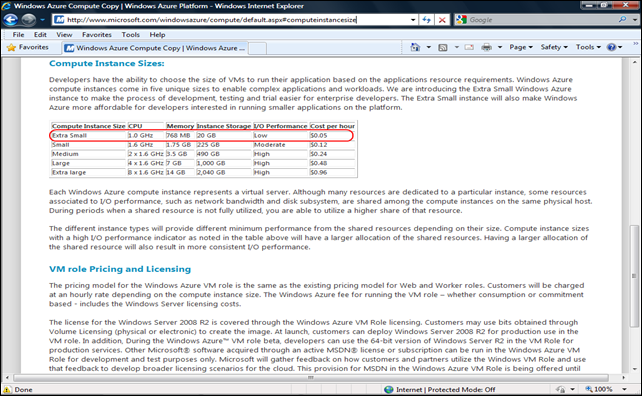

Clicking the Extra Small Instance Learn More Button opens the Windows Azure Compute Copy | Windows Azure … page at the Compute Instance Sizes topic:

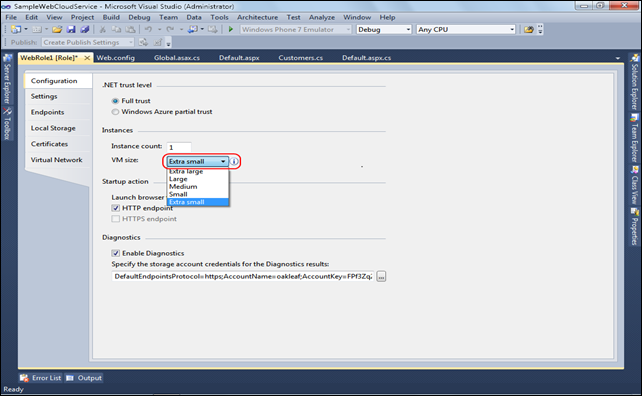

The Windows Azure SDK v1.3 added an Extra Small VM choice to Visual Studio 2010 Web Role’s VM Size list:

Before the Extra Small VM size was activated, attempting to deploy a solution with an Extra Small VM instance to the Staging slot of the Production Fabric threw a “The subscription is not authorized for this feature” error. See Dimitri Lyalin’s ExtraSmall instances cannot deploy “The subscription is not authorized for this feature” blog post of 12/1/2010. …

The post continues with a detailed and fully illustrated Extra-Small VMs in Microsoft Cloud Partner – Windows Azure Build and Develop Benefits section about the Cloud Essentials Pack’s offer of a free full-time Extra-Small VM compute instance for members of the Microsoft Partner Network (MPN).
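
As a quick sanity check on the “full-time” claim, the following minimal Python sketch compares the 750 free hours/month cited above with the number of clock hours in each month of 2011. It assumes that compute time is metered per instance-hour and that a single instance stays deployed for the whole month; the function names are hypothetical and exist only for this illustration.

```python
import calendar

# Free compute hours per month cited for the Cloud Essentials Pack benefit.
FREE_HOURS_PER_MONTH = 750


def hours_in_month(year: int, month: int) -> int:
    """Return the number of clock hours in the given month."""
    days = calendar.monthrange(year, month)[1]
    return days * 24


def instances_covered(year: int, month: int,
                      free_hours: int = FREE_HOURS_PER_MONTH) -> int:
    """Return how many instances can run all month within the free hours.

    Assumes one instance-hour is consumed per deployed instance per clock hour.
    """
    return free_hours // hours_in_month(year, month)


if __name__ == "__main__":
    for month in range(1, 13):
        hours = hours_in_month(2011, month)
        spare = FREE_HOURS_PER_MONTH - hours
        print(f"2011-{month:02d}: {hours} h used, {spare} h spare, "
              f"{instances_covered(2011, month)} full-time instance(s)")
```

Under these assumptions, even a 31-day month needs only 744 hours, so the benefit covers one continuously running instance with a few hours to spare, but not a second one.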

Bruce Guptill, Robert McNeill and Bill McNee authored a 2011 Spending Will Drive Cloud Surge Research Alert for Saugatuck Technology on 1/6/2011. From the summary page:

Based on current economic trends and recent research, Saugatuck expects to see a significant release of cash throughout 2011 by businesses of all types and sizes. Spending will include a significant increase in M&A activity, as well as infrastructure, product, channel and technology investments that increase productivity and foster new revenue opportunities, including expansion into new markets. Contrary to those that see this as a return to traditional IT spending, Saugatuck expects this growth to increasingly favor Cloud IT at the expense of traditional on-premises IT.

Besides the providers of IT, the clear winners of this technology upturn will be those CIOs and business leaders that leverage Cloud IT to develop competitive advantages. Those that lose will include executives and managers who return to, or stand pat on, long-term beliefs of how IT should be acquired, staffed, operated, and funded. Indeed, our research indicates that the fastest growing companies in any marketplace will be those that heavily invest in Cloud IT, whether to extend, expand or replace the capabilities and effectiveness of on-premises alternatives.

In short, based on an expected uptick in economic growth, and a global release of cash for business and IT spending, 2011 should be a banner year – perhaps a watershed year – for Cloud IT, and another important milepost charting the long decline of new investment in traditional IT. As a result, look for accelerated investment in Cloud not only by user firms, but by traditional IT Master Brands as well.

Reading the entire article as a PDF file requires site registration.

Tony Bailey (a.k.a. tbtechnet) wrote Scale for Spikes on 1/8/2011:

The Windows Azure platform is clearly well suited for web projects that need to be fired up in a day or so.

More importantly, Windows Azure is superb for meeting spikes in demand without having to configure multiple servers and make numerous back-end services decisions.

If you’re a custom web development business and you have mid to large sized clients looking for fast delivery of applications into the market, check out the Windows Azure platform.

Outback Steakhouse is a great example: http://www.microsoft.com/casestudies/Case_Study_Detail.aspx?CaseStudyID=4000005861

Try out Azure now with a no-cost 30-day account – no credit card required.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Pat Romanski reported Cloud Security Alliance Appoints EMEA Strategy Advisor on 1/5/2011:

Raj Samani [pictured] has been appointed as the Cloud Security Alliance (CSA) EMEA Strategy Advisor. The role is a non-compensated role that is intended to advise the CSA on best practices and a roadmap within EMEA, as well as support existing and developing CSA Chapters where appropriate within EMEA. The CSA is a non-profit organization formed to promote the use of best practices for providing security assurance within Cloud Computing, and provide education on the uses of Cloud Computing to help secure all other forms of computing.

Jim Reavis, Co-founder & Executive Director of the Cloud Security Alliance, said, "The CSA is strongly committed to furthering our work in this region, building on the wonderful work already being done. We look forward to Raj's contributions to help support our mission throughout EMEA."

On appointment of the role, Samani commented, "I am really excited about working closer with the CSA. The Cloud represents a fundamental shift in the way organisations will do business in the 21st century, and the work that the CSA (and CAMM) are doing is fundamental toward widescale secure adoption of cloud computing."

Raj Samani is the EMEA CTO of McAfee, the world's largest dedicated computer security company, and is the Founder of the Common Assurance Maturity Model (CAMM - www.common-assurance.com). He joins having previously been the Vice President for Communications with the ISSA UK chapter, in which he presided over the award for Chapter Communications Programme of the Year (2008, 2009).

The Cloud Security Alliance continues to gain mindshare.

See The Cloud Security Alliance announced that its CSA Summit 2011 event will take place on 2/14/2011 at Moscone Center, San Francisco, CA during the RSA 2011 Conference in the Cloud Computing Events section below.

<Return to section navigation list>

Cloud Computing Events

The Cloud Security Alliance announced that its CSA Summit 2011 event will take place on 2/14/2011 at Moscone Center, San Francisco, CA during the RSA 2011 Conference:

About the Cloud Security Alliance Summit

Featured Keynote: Marc Benioff, CEO Salesforce.com

The CSA Summit 2011 provides the most timely and relevant education for securing cloud computing. This year's Summit serves as the venue for the global introduction of several research projects, including research on governance, cloud security reference architectures and cloud-specific computer security incident response teams. The Summit is kicked off with a keynote from the CEO of one of the industry's leading cloud service providers, Marc Benioff of Salesforce.com and includes session presentations and panels from the industry's foremost thought leaders.

The CSA Summit 2011 provides a fantastic opportunity for you to ask questions and learn from experts who are designing and implementing cloud security technologies and governance programs.

CSA Activities and Promotions at RSA

CSA Summit

Located in Red Room 103 (Moscone South), the CSA Summit is 9:00am to 1:00pm. Last year’s summit was an overwhelmingly popular event and although we have doubled the occupancy to accommodate the growing interest in Cloud Security, be sure to arrive early to guarantee yourself a seat. Doors open at 8:30am!

CSA “Secure the Cloud” Alliance Bingo-card Promotion

RSA attendees can enter to win one of four Kinects which will be raffled off during the RSA Conference. Find out more details at the CSA Summit or stop by the CSA Booth # 2718.

CCSK Certification Discount

CSA will be offering a limited number of coupons for $100 off CCSK certification, a substantial savings off the regular price of $295. Please inquire for more information when visiting the CSA booth.

The Certificate of Cloud Security Knowledge (CCSK) is the industry’s first user certification program for secure cloud computing. The online certification is designed to ensure that professionals with a responsibility related to cloud computing have a demonstrated awareness of security threats and best practices for securing the cloud. For certification information please visit http://cloudsecurityalliance.org/certifyme.html

Certificate of Cloud Security Knowledge (CCSK) Training Course February 13

A one-day, instructor-led course that provides the key concepts of cloud computing security. The objective of this course is to enable the student to successfully pass the Certificate of Cloud Security Knowledge (CCSK) examination. The course will include other important areas of practical cloud security knowledge. The cost for this course is $400, which will include a free token to take the CCSK test, a $295 value.

CSA Cocktail Party

Join CSA founders and corporate members on Wednesday February 16th from 4:00pm to 6:00pm for drinks and networking in Red Room 102 (Moscone South).

The North Bay .NET Users Group announced that Bruno Terkaly will present a Windows Phone 7 Meets Cloud Computing event to be held on 1/18/2011 at O'Reilly Media, 1003-1005 Gravenstein Highway North, Sebastopol, CA:

When: Tuesday, 1/18/2011 at 7:00 PM

Where: O'Reilly Media, 1003-1005 Gravenstein Highway North, Sebastopol, Tarsier Conference Room (between Building B and Building C) (8 miles west of Santa Rosa)

Event Description:

No phone application is an island. Mobile applications are hungry for a couple of things. First, they need data. Second, they need computing power to process the data. The obvious solution to computing power and connected data is the 'cloud.' If you plan to connect mobile applications to the cloud, then this session is for you. We will start by migrating on-premise data to the cloud, specifically SQL Azure. Next, we will need to create web services to expose that data and make it available to Windows Phone 7 applications. Rather than just show you how to connect to some 'already created' data source, I show you how to build your own infrastructure to expose cloud based data to the world. This is a soup to nuts session that builds everything from scratch and gives you a limitless ability to consume data from Windows Phone 7 applications. I presented this session to 1000's during the Visual Studio 2010 launch and it was very well received. Join me in what I consider to be absolutely essential Windows Phone 7 development skills.

Agenda:

7:00 - 8:00 Presentation by Bruno Terkaly

8:00 - 8:10 Q&A

8:10 - 8:20 book raffle

8:20 - 8:30 wrap up

Presenter's Bio:

Bruno is used to sleeping with his passport. As a former Microsoft Premier Field Engineer, he has traveled to distant locations to help customers with problem isolation and correction, live and post-mortem debugging, application design and code reviews, performance tuning (IIS, SQL Server, .NET), application stability, troubleshooting, porting / migration assistance, configuration management, pre-rollout testing and general development consulting. Starting with Turbo C in the late 80’s, Bruno has kept busy teaching and writing code in a multitude of platforms, languages, frameworks, SDKs, libraries, and APIs. He claims that he’d read and write code even if he won the lottery. He is excited about rich applications powering the web, and the technologies that are evolving, morphing and merging – currently Virtualization, SOA, Web 2.0 and SaaS applications, using Visual Studio as the glue to manage these diverse computer worlds. Bruno started his computing pursuits while getting his accounting and finance degree from UC Berkeley’s School of Business. Bruno now works as a developer evangelist, focusing on new and emerging technologies. You can read more about him at www.brunoterkaly.com

Driving Directions:

From highway 101 North, get onto 116 West in the town of Cotati. O'Reilly is on highway 116 as you're just leaving the town of Sebastopol. (About 8 miles west of Santa Rosa.)

Jason Baker, Chris Bond, James C. Corbett, JJ Furman, Andrey Khorlin, James Larson, Jean Michel Leon, Yawei Li, Alexander Lloyd and Vadim Yushprakh (all Google employees) authored a Megastore: Providing Scalable, Highly Available Storage for Interactive Services paper to be presented at the 5th Biennial Conference on Innovative Data Systems Research (CIDR ’11) on 1/9/2011 at Asilomar, California, USA. From the Abstract and Introduction:

Abstract

Megastore is a storage system developed to meet the requirements of today's interactive online services. Megastore blends the scalability of a NoSQL datastore with the convenience of a traditional RDBMS in a novel way, and provides both strong consistency guarantees and high availability. We provide fully serializable ACID semantics within fine-grained partitions of data. This partitioning allows us to synchronously replicate each write across a wide area network with reasonable latency and support seamless failover between datacenters. This paper describes Megastore's semantics and replication algorithm. It also describes our experience supporting a wide range of Google production services built with Megastore.

Introduction

Interactive online services are forcing the storage community to meet new demands as desktop applications migrate to the cloud. Services like email, collaborative documents, and social networking have been growing exponentially and are testing the limits of existing infrastructure. Meeting these services' storage demands is challenging due to a number of conflicting requirements.

First, the Internet brings a huge audience of potential users, so the applications must be highly scalable. A service can be built rapidly using MySQL [10] as its datastore, but scaling the service to millions of users requires a complete redesign of its storage infrastructure. Second, services must compete for users. This requires rapid development of features and fast time-to-market. Third, the service must be responsive; hence, the storage system must have low latency. Fourth, the service should provide the user with a consistent view of the data: the result of an update should be visible immediately and durably. Seeing edits to a cloud-hosted spreadsheet vanish, however briefly, is a poor user experience. Finally, users have come to expect Internet services to be up 24/7, so the service must be highly available. The service must be resilient to many kinds of faults ranging from the failure of individual disks, machines, or routers all the way up to large-scale outages affecting entire datacenters.