Windows Azure and Cloud Computing Posts for 5/2/2011+

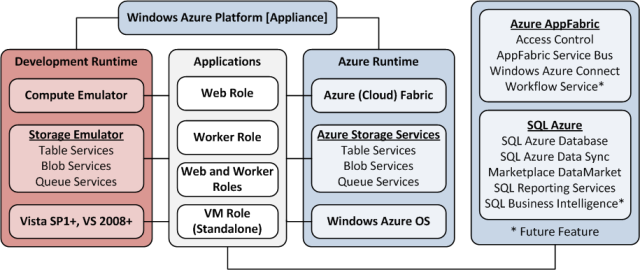

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Steve Yi reported Just Released: Windows Azure AppFabric Caching Service on 5/2/2011:

In case you missed the news, the Windows Azure AppFabric blog announced on Friday that the Windows Azure AppFabric Caching service is now available as a production service. This service is a distributed, in-memory, application cache service that accelerates the performance of Windows Azure and SQL Azure applications by providing the ability to keep data in-memory, which eliminates the need to retrieve that data from storage or a database. Six different cache size options are available ranging from 128MB to 4GB.

To make it easier to start using this new service, users will not be charged for billing periods prior to August 1, 2011. Some Windows Azure Platform offers include the 128MB cache option for free for a certain period of time for current customers. You can find more details on the Windows Azure Platform Offers page.

To learn more about the Windows Azure AppFabric Caching service, read the blog post on the Windows Azure AppFabric blog.

For questions about the Caching service, please visit the Windows Azure Storage, CDN and Caching Forum.

Neil MacKenzie (@mknz) posted SQL Azure Federations on 5/2/2011:

SQL Azure Federations is a forthcoming technology that supports elastic scalability of SQL Azure databases. It provides Transact SQL support for managing the automated federation of data across multiple SQL Azure databases. It also supports the automatic routing of SQL statements to the appropriate SQL Azure database.

This post differs from other posts on the blog since I have not used SQL Azure Federations. Essentially, the post represents my notes of the many excellent posts on SQL Azure Federations that Cihan Biyikoglu has published on his blog. I also learned a lot from the PDC 10 presentation by Lev Novik on Building Scale-out Database Applications with SQL Azure.

Scalability with State

Elastic scalability is an important driver behind cloud computing. Elasticity, the ability to scale services up and down, allows resource provision to be better matched to resource demand. This allows services to be provided less expensively.

Windows Azure supports elasticity through the provision of role instances. This elasticity is simplified by the requirement that an instance be stateless. Each new instance of a role is identical to every other instance of the role. In a Windows Azure service, state is at the role level and is kept in a durable store such as Windows Azure Table or SQL Azure.

There is, however, a need to support the elastic scalability of durable stores. A common technique is sharding – in which multiple copies of the data store are created, and data distributed to a specific copy or shard of the data store. A simple distribution algorithm is used to allocate all data for which some key is within a given range to the same shard.

Although sharding solves the elastic scalability problem from a theoretical perspective it introduces two practical problems: the routing of data requests to a specific shard; and shard maintenance. An application using a sharded datastore must discover to which shard a particular request is to be routed. The datastore must manage the partitioning of the data among the different shards.
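The range-based allocation and request routing described above can be sketched in a few lines of Python. This is only an illustration of the general technique: the `RangeShardMap` class, the shard names and the boundary values are hypothetical and are not part of SQL Azure or Windows Azure.

```python
from bisect import bisect_right


class RangeShardMap:
    """Maps integer keys to shards by key range (hypothetical helper)."""

    def __init__(self, boundaries, shard_names):
        # boundaries[i] is the lowest key served by shard_names[i + 1];
        # shard_names[0] serves every key below boundaries[0].
        if len(shard_names) != len(boundaries) + 1:
            raise ValueError("need exactly one more shard than boundaries")
        if list(boundaries) != sorted(boundaries):
            raise ValueError("boundaries must be sorted")
        self.boundaries = list(boundaries)
        self.shard_names = list(shard_names)

    def shard_for(self, key):
        # bisect_right counts the boundaries <= key, which is the shard index.
        return self.shard_names[bisect_right(self.boundaries, key)]


# Assumed layout: three shards split at keys 50000 and 100000.
shard_map = RangeShardMap([50000, 100000], ["shard_a", "shard_b", "shard_c"])
```

An application would call `shard_map.shard_for(key)` before each request to pick the connection to use. Keeping this map correct as shards are added or removed is the shard maintenance problem.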

The Windows Azure Table Service solves these problems through auto-sharding. It allocates entities to shards based on the value of the PartitionKey for an entity. The Windows Azure Table Service manages the distribution of these shards (or partitions) across different storage nodes in a manner that is totally transparent to applications using it. These applications route all requests to the same RESTful endpoint regardless of the shard containing the data.

SQL Azure currently provides no built-in support for sharding. Data can be sharded across multiple SQL Azure databases but shard maintenance is the responsibility of the SQL Azure DBA. Furthermore, an application must manage request routing since there is no support for it in SQL Azure. The lack of support for request routing causes problems with connection management since the routing of requests to many different databases fragments the connection pool thereby diminishing its utility.

However, Microsoft is developing SQL Azure Federations to provide native sharding support to SQL Azure. SQL Azure Federations provides simple Transact SQL extensions supporting shard management and provides a single connection endpoint for requests against a SQL Azure database. This feature will bring stateful elastic scalability to a relational environment just as the Windows Azure Table Service provides stateful elastic scalability in a NoSQL environment.

Federations

SQL Azure Federations introduces the concept of a federation which serves as the scalability unit for data in the database. A federation is identified by name, and specified by the datatype of the federation key used to allocate individual rows to different federation members (or shards) of the database. Each federation member is a distinct SQL Azure database. However, SQL Azure Federations exposes a single connection endpoint for the database and all federation members in it. Consequently, applications route all requests to the database regardless of which federation member data resides in. Note that a database can have more than one federation allowing it to support different scalability requirements for different types of data.

A federation is created using a CREATE FEDERATION statement parameterized by the federation name and the datatype of the federation key, as follows:

CREATE FEDERATION FederationName (federationKey RANGE BIGINT)

In SQL Azure Federations v1, the supported datatypes for federation key are: INT, BIGINT, UNIQUEIDENTIFIER, and VARBINARY(900). This statement creates a single federation member into which all federated data is inserted.

An application indicates that subsequent statements should be routed to a particular federation member through the USE FEDERATION statement, as follows:

USE FEDERATION FederationName (federationKey = 1729) WITH RESET, FILTERING = ON

(Your guess as to the meaning of WITH RESET is as good as mine.) Setting FILTERING to ON, as opposed to OFF, causes all SQL statements routed subsequently to the partition to be filtered implicitly by the specified value of the federation key. This is useful when migrating a single tenant database to a multi-tenant, federated database since it allows a federation member to mimic a single tenant database.
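The effect of the FILTERING option can be modelled with a small Python sketch. It assumes a federation member is just a list of rows and that `customer_id` is the federation key column. The function name and the sample rows are made up for illustration and say nothing about how SQL Azure implements the filter.

```python
def visible_rows(member_rows, key_column, key_value, filtering):
    """Rows a connection sees after connecting to one federation member."""
    if not filtering:
        # FILTERING = OFF: every row stored in the member is visible.
        return list(member_rows)
    # FILTERING = ON: only rows matching the connection's key value are visible.
    return [row for row in member_rows if row[key_column] == key_value]


# Hypothetical contents of one federation member holding two customers.
member_rows = [
    {"customer_id": 1729, "order_id": 1},
    {"customer_id": 1729, "order_id": 2},
    {"customer_id": 4104, "order_id": 3},
]
```

With filtering on and a key value of 1729, the connection sees only that customer's rows, which is what lets a member mimic a single-tenant database.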

Federation Tables

A federated database comprises various types of tables:

- federation tables – large-scale data that scales elastically in federation members.

- reference tables – small-scale lookup data copied to each federation member.

- central tables – resident in the root database that is not distributed to federation members.

Federation tables are those to be sharded across different federation members. A table is declared to be federated when it is created, as follows:

CREATE TABLE tableName
( … )
FEDERATED ON (federationKey = columnName)

Reference tables are distributed in toto to each federation member and are not sharded. They are created in a federation member by a normal CREATE TABLE statement that has no decoration for SQL Azure Federation. This means that the connection must currently be USING a federation member.

Central tables exist in the root database and are not distributed to federation members. They are created in the federation root by a normal CREATE TABLE statement that has no decoration for SQL Azure Federation. The USE FEDERATION statement can be used to route connections to the root database as follows:

USE FEDERATION ROOT

Each federation table in a federation must have a column matching the federation key. Every unique index on the table must also contain this federation key. All federation tables in a federation member contain data only for those records in the federation key range allocated to that federation member.

The records with a specific value of federation key in the different federation tables of a federation form an atomic unit since they form an indivisible set of records that are always allocated to the same federation member. In practice, these records are likely related since they likely represent, for example, all the data for a single customer. Furthermore, it may be worth adding the federation key to denormalize a table precisely so that its records belong to the same atomic unit as related data. An obvious example is adding a customer Id to an OrderDetail table so that the order details are federated along with the order and customer information in a federation where the federation key is customer Id.
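As a rough illustration of the atomic unit idea, the following Python sketch groups rows from several federated tables by their federation key value. The table names, the `customer_id` column and the `atomic_units` helper are hypothetical.

```python
from collections import defaultdict


def atomic_units(tables, key_column):
    """Group the rows of every table by their federation key value."""
    units = defaultdict(lambda: defaultdict(list))
    for table_name, rows in tables.items():
        for row in rows:
            units[row[key_column]][table_name].append(row)
    # Convert to plain dicts so lookups do not create empty entries.
    return {key: dict(by_table) for key, by_table in units.items()}


# Hypothetical federated tables; OrderDetail carries a denormalized customer_id.
tables = {
    "Customers": [{"customer_id": 1}, {"customer_id": 2}],
    "Orders": [{"customer_id": 1, "order_id": 10}],
    "OrderDetail": [
        {"customer_id": 1, "order_id": 10, "line": 1},
        {"customer_id": 1, "order_id": 10, "line": 2},
    ],
}
units = atomic_units(tables, "customer_id")
```

Each entry in `units` is the set of rows that would always move together. Without the extra `customer_id` column, the `OrderDetail` rows could not be assigned to a unit at all.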

Cihan Biyikoglu goes much deeper into the rules surrounding federation tables in a post describing Considerations When Building Database Schema with Federations in SQL Azure.

Elastic Scalability of a Federation

A single federation member adds little to scalability, so SQL Azure Federations provides the ALTER FEDERATION statement to support elastic scalability of a federation. This statement has a SPLIT keyword indicating that a federation member should be split into two new federation members with the data being divided at a specified value of federation key. Similarly a MERGE parameter can be used to merge all the data in two federation members into a single federation member. For example, the following statement splits a federation member:

ALTER FEDERATION federationName SPLIT AT (federationKey = 50000)

The ALTER FEDERATION SPLIT statement is central to the elastic scalability provided by SQL Azure Federations. As individual federation members fill up, this statement can be used repeatedly to distribute federation data among more and more federation members. This is not automated as it is with Windows Azure Table, but it is a relatively simple administrative process.

Cihan Biyikoglu has a post – Federation Repartitioning Operations: Database SPLIT in Action – in which he provides a detailed description of the split process. To maintain consistency and high-availability while a federation member is split, the data in it is fully migrated into two new federation members before they are made active federation members. The original federation member is deleted after the split federation members become active. Once the ALTER FEDERATION statement has been issued, the split process is fully automated by SQL Azure Federations.
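The split process described above can be approximated in Python as follows. This is a conceptual sketch only. It assumes each member covers a half-open key range `[low, high)` and that the row at the split value goes to the upper member, and it models a member as a tuple. None of this is confirmed by the posts summarized here.

```python
def split_member(members, index, split_key):
    """Return a new member list with members[index] split at split_key.

    Each member is a (low, high, rows) tuple covering keys low <= k < high,
    where rows maps a federation key value to its data.
    """
    low, high, rows = members[index]
    if not low < split_key < high:
        raise ValueError("split key must fall inside the member's range")
    # Build both new members completely before touching the member list,
    # mirroring "fully migrated ... before they are made active".
    lower = (low, split_key, {k: v for k, v in rows.items() if k < split_key})
    upper = (split_key, high, {k: v for k, v in rows.items() if k >= split_key})
    # The original member is dropped only in the returned list; the input
    # list is left untouched so readers of the old layout are not disturbed.
    return members[:index] + [lower, upper] + members[index + 1:]


# Hypothetical single-member federation before the split.
before = [(0, 100000, {1729: "a", 50000: "b", 70000: "c"})]
after = split_member(before, 0, 50000)
```

Returning a new list instead of mutating the old one is a deliberate choice here: it keeps the old layout usable until the caller switches over, which is the property the real split process is said to preserve.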

Note

SQL Azure Federations is not yet available and, as stated at the beginning, this post is based primarily on blog posts written over a period of months. There appear to have been slight syntax changes during that period so it is quite likely that the statements described above may not be completely correct.

In his MIX 11 presentation, David Robinson listed SQL Azure Federations as one of the “CY11 Investment Themes” for the SQL Azure team. Hopefully, this gives some idea of the timeframe when we will finally get our hands on at least a test version of SQL Azure Federations.

My Build Big-Data Apps in SQL Azure with Federation cover story for Visual Studio Magazine’s March 2011 issue covers the same ground.

Steve Yi reported MSDN Article: How to Connect to SQL Azure through ASP.NET on 5/2/2011:

MSDN has written an article on how to bind data from Microsoft SQL Azure Database to ASP.NET controls. The article provides a basic walkthrough and an example of how to do it. It also shows how similar working with SQL Azure is to working with SQL Server. Your web application can continue running within an existing datacenter or with a hoster, which is a great example showing that in many cases the only change necessary to use the SQL Azure cloud database is changing the connection string.

I'd like to hear what people think. Take a look at the article and give the walkthrough a try.

Visual Studio Magazine posted My (@rogerjenn) SQL or No SQL for Big Data in the Cloud? article for the May 2011 issue on 5/1/2011:

The relational model and SQL dominate today's database landscape. But the Web's "big data" revolution is forcing architects and programmers to consider newer, unfamiliar models when designing and implementing Web-scale applications. Before diving into comparing data models, it's a good idea to agree on a definition of "big data." Adam Jacobs, a senior software engineer at 1010data Inc., describes it as "data whose size forces us to look beyond the tried-and-true methods that are prevalent at that time" in his article, "The Pathologies of Big Data," in the July 2009 issue of ACM Queue. Jacobs explains:

In the early 1980s, it was a dataset that was so large that a robotic "tape monkey" was required to swap thousands of tapes in and out. In the 1990s, perhaps, it was any data that transcended the bounds of Microsoft Excel and a desktop PC, requiring serious software on Unix workstations to analyze. Nowadays, it may mean data that is too large to be placed in a relational database and analyzed with the help of a desktop statistics/visualization package -- data, perhaps, whose analysis requires massively parallel software running on tens, hundreds, or even thousands of servers.

The performance of SQL queries against relational tables decreases as the number of rows increases, leading to a requirement for partitioning, usually by a process called sharding. In the case of SQL Azure, a cloud-based database based on SQL Server 2008 R2, the maximum database size is 50GB, so individual partitions (shards) of 50GB or less in size are linked by a process called federation.
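The arithmetic behind that size limit is simple. The Python sketch below takes the 50GB maximum from the paragraph above and computes the minimum number of shards for a given data size. The function name is hypothetical, and the calculation ignores headroom for growth and uneven key distribution.

```python
import math

MAX_DB_GB = 50  # maximum SQL Azure database size cited above


def min_shards(total_gb, max_db_gb=MAX_DB_GB):
    """Smallest number of shards that can hold total_gb of data."""
    return max(1, math.ceil(total_gb / max_db_gb))
```

For example, 120GB of data needs at least `min_shards(120)` shards, which works out to three under these assumptions.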

Not Only SQL

Some developers are turning to NoSQL, said to stand for "not SQL" or "not only SQL," to model data for Web-scale applications. NoSQL includes non-relational databases in the following categories:

Key/Value, also called Entity/Attribute/Value (EAV), stores are schema-less collections of entities that don't need the same properties. Windows Azure Table Storage and Amazon Web Services SimpleDB are proprietary, cloud-based examples, while BerkeleyDB, Redis and MemcacheDB are open source implementations.

Document stores, typified by open source CouchDB, MongoDB, RavenDB and Riak, can contain complex data structures, which usually are stored in JavaScript Object Notation (JSON) format. RavenDB is designed to run under Windows with the Microsoft .NET Framework. It has RESTful and .NET client APIs, supports LINQ queries, and can use System.Transaction to enforce ACID transactions. RavenDB requires a commercial license when used with proprietary software.

Column, also called column-family or wide-column, stores define columns in a configuration file and hold column families in rows accessed by a key value. Most column stores are modeled on Google BigTable architecture. Popular open source column-family implementations are Cassandra and Hypertable. Steve Marx, a Microsoft technical strategist on Windows Azure, has a live demonstration of Cassandra running in a Windows Azure project.

Graph stores, or graph databases, are an emerging NoSQL category based on graph theory that use nodes (standalone objects or entities) and edges (lines used to connect nodes and properties) to represent and store information. GraphDB is an open source graph store written in C# that can run in a Windows Azure Worker Role. Microsoft Research offers academic and commercial licenses for community technology previews of its Dryad distributed graph store and DryadLINQ parallel query language, both of which are destined for deployment in Windows Azure. Neo4j is a popular open source graph database for Java.

Co-Relational Model

The head of the Microsoft Cloud Programmability Team, Erik Meijer, whom I call the "father of LINQ," and Gavin Bierman, a senior researcher at Microsoft Research, Cambridge, propose to drain the gulf between the relational and NoSQL data models. In the March 2011 ACM Queue article, "A Co-Relational Model of Data for Large Shared Data Banks", the coauthors apply category theory to prove that "the NoSQL category is the dual of the SQL category -- NoSQL is really coSQL." They assert:

The implication of this duality is that coSQL and SQL are not in conflict, like good and evil. Instead they are two opposites that coexist in harmony and can transmute into each other like yin and yang. Interestingly, in Chinese philosophy yin symbolizes open and hence corresponds to the open world of coSQL, and yang symbolizes closed and hence corresponds to the closed world of SQL.

Meijer and Bierman conclude, "Because of the common query language [LINQ] based on monads, both can be implemented using the same principles."

Kash Data Consulting LLC posted Using SQL Azure Data Sync – Data Synchronization in Cloud on 3/10/2011 as part of its SQL Azure Tutorials series (missed when posted):

SQL Azure Database is a cloud database from Microsoft. Using SQL Azure can deliver cost savings, especially for a small business that does not want a full-scale in-house SQL Server setup. Its greatest advantage is built-in high availability, scalability, multi-user support and fault tolerance. For a small to medium business, all these critical technologies can be challenging not only to configure but also to implement.

Today we are going to look at another SQL Azure technology, SQL Azure Data Sync. This is a service built on Microsoft Sync Framework technology. With SQL Azure Data Sync, you can not only synchronize databases between an on-premises SQL Server and another one in the SQL Azure cloud environment, you can also synchronize two or more SQL Azure databases between different cloud servers. When we talk about database synchronization, we are primarily talking about keeping the data in sync between databases in different physical SQL Azure locations. In this respect, it is similar in concept to replication on a local on-premises SQL Server.

Benefits of SQL Azure Data Sync:

Using SQL Azure data sync will give you the following benefits:

- If you have a distributed database environment, SQL Azure Data Sync may be a cost-effective way to manage the architecture.

- Azure Data Sync gives end users located at separate physical offices easy, up-to-date access to the database in the Azure cloud environment.

- The master (HUB) database could be on a physical on-premises SQL Server that pushes changes to one or more separate SQL Azure cloud member databases.

- Azure Data Sync also runs on a regular maintenance schedule, which applies data changes and keeps all the databases synchronized.

Setting up SQL Azure Data Sync between Cloud Servers

We are going to walk you through setting up SQL Azure data sync between two separate databases in the cloud.

The first thing you need to do is to go to this website which is SQL Azure labs, http://sqlAzurelabs.com

Next go ahead and click on SQL Azure data sync in the left navigation bar. This is shown in the screen capture right below

Next it will ask you to log into SQL Azure portal. It will do this by bringing up Live.com website. After you are logged in, you will be prompted for SQL Azure data sync service registration. Go ahead and check the agreement and then click Register. After that you will be taken to the Azure data sync management area.

We have included a screen shot on it as follows.

Here you can click Add New to add a new Azure Sync Group. An Azure Sync Group is really a collection of cloud SQL Azure databases and on-premises SQL Server databases. In our case we named it “Northwind–local–cloud” even though we will be synchronizing between two online cloud SQL Azure databases. Click on Register New Server under Member Information. After you click Next you can add your primary (Hub) server information. Next we click on Save.

We have included what our primary Hub server looks like right below.

After you have added the primary Azure server you have to select the database to synchronize and then click on Add HUB to make this the primary server. This is going to be the primary Azure Server that all the member Azure servers will be synchronizing data with.

Next we are going to add a Member SQL Azure server so we redo the process as shown above and include the member server information. Click on Save to commit the changes.

Here is our member server shown below:

In a similar fashion to the HUB server we have to pick an Azure database and then click Add Member. The screen capture below illustrates this point on Azure data sync.

After you complete the process, you should have two SQL Azure servers, one Hub and one member.

This is what the screens look like after we’re done with both our SQL Azure cloud servers

At this point we are able to select the particular SQL tables we need to synchronize. In our case we are using the Northwind cloud database, so we will pick the CUSTOMERS and ORDERS tables by clicking the green right arrow. Finally we click on Finish to complete the Azure Sync Group procedure.

This is shown right below for your understanding.

Now comes the true test as we put the data sync to work. Before we set up the data synchronization schedule, we are going to log into the Member server and make sure the CUSTOMERS table does not exist. We have included the following screen capture from our computer. Notice that we are getting an invalid object error.

Next we switch back to the online SQL Azure Data Sync management area. We click on Schedule Sync and then select a daily schedule for 12:00 AM. In summary, this will synchronize the two tables from the Northwind database on the Hub SQL Azure server to the Northwind database on the Member SQL Azure server.

Here is what the Schedule Sync looks like for us.

If you click run Dashboard option on the bottom, it will take you to a new screen. This is shown below and is titled Scheduled Synch jobs. Notice that we set up our Azure sync job and it is scheduled.

In order to test, we are going to click on Sync Now. This will kick off the Azure sync process. Next we click on the View Log option to see that changes from the primary Azure server actually cascade down to the secondary Azure server.

Here is a screen capture of what the Sync job log looks like.

After the Sync process completes we are going to log in to the SQL Azure member server and make sure the data is synchronized. We logged into the server using SQL Server Management Studio and verified that the data is actually there.

This is shown right below

Notice now the data at the member server sbyfn2c9rh.database.windows.net is synchronized with the Hub server.
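The outcome of the walkthrough can be summarized with a small conceptual sketch in Python. It treats each database as a dictionary of tables and copies the selected tables one way, from hub to members. It is not the Sync Framework API, and the function name and sample rows are invented for illustration.

```python
def sync_hub_to_members(hub, members, tables):
    """Copy the selected tables from the hub to every member (one-way)."""
    for member in members:
        for table in tables:
            # Replace the member's copy with a snapshot of the hub's rows.
            member[table] = dict(hub.get(table, {}))


# Hypothetical hub with three tables; only two are in the sync group.
hub = {
    "CUSTOMERS": {"ALFKI": "Alfreds Futterkiste"},
    "ORDERS": {10248: "VINET"},
    "EMPLOYEES": {1: "Davolio"},
}
member = {}  # the member starts without a CUSTOMERS table
sync_hub_to_members(hub, [member], ["CUSTOMERS", "ORDERS"])
```

After the call, `member` holds CUSTOMERS and ORDERS but not EMPLOYEES, matching the before-and-after check performed against the member server above.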


<Return to section navigation list>

Marketplace DataMarket and OData

Glenn Gailey (@ggailey777) reported MSDN Data Services Developer Center Redesign—Lots of [OData] Videos on 5/2/2011:

The WCF Data Service developer center has been redesigned; the purpose of this update is to provide a much more hands-on experience and to make it easier to get started with OData services, and with WCF Data Services in particular. This means, in part, less random content and more videos.

Now, the scenario-based content is broken down into beginners guides, which are all videos, and links to more advanced content, including MSDN documentation and interesting blog posts.

Here are some of the featured “beginner’s guide” videos, all of which were, I believe, created for us by Shawn Wildermuth:

- Building an OData Service—basic OData with WCF Data Service quickstart using the Entity Framework provider, which parallels the WCF Data Services quickstart.

- Building an OData Service from Any Data Source—featuring the reflection provider

- Consuming OData using .NET—a basic WCF Data Services client quickstart, again paralleling the WCF Data Services quickstart.

- Consuming OData using Silverlight—parallels the Silverlight quickstart.

- Consuming OData using Windows Phone 7—parallels the OData for Windows Phone 7 quickstart

- Consuming OData using PHP—Brian Swan did this first, but glad to see it in video form.

- Consuming OData using Objective C—for those iPhone app lovers out there.

These video pages will also (eventually) link to other related MSDN library and blog content, and I’m hoping that Shawn will also publish his sample code projects from these videos to MSDN Code Gallery. I was also excited to find the new article Complete Guide to Building Custom Data Providers up there as well.

Look for more OData-related content going up here in the coming months.

Turker Keskinpala reported an Update to OData Service Validation Tool in a 5/2/2011 post to the OData Blog:

As we pointed out when we announced the OData Service Validation Tool at MIX the other week, we are actively working on it and will add more rules to the service on a regular cadence.

We just pushed an update to the service with the following:

- Added 3 new metadata rules

- Fix for https endpoint support

- Fixes for correctly highlighting error/warning lines in payload

- Several other bug fixes

Since the release of the tool, the feedback that we received has been great. Thank you all for trying it and providing feedback.

It's also great to hear that there is interest in the source of the tool and potentially being able to contribute new rules. We heard that feedback and are in the process of making the tool open source. We will update you when the process is completed and the source code is available.

As always, please let us know what you think through the OData mailing list.

Alex James (@adjames) requested OData users to complete an OData Survey in a 5/2/2011 post to the OData blog:

The OData ecosystem is evolving and growing rapidly. So much so that it isn't easy to keep up with developments - a nice problem to have I'm sure you'll agree!

To try to get a more accurate picture of the OData ecosystem we put together a survey. It would be great if you could take a little time (it is a short survey) and let us know how you are using OData.

Pablo Castro posted the slides for his MIX 2011 OData Roadmap Services Powering Next Generation Experiences on 5/1/2011. From the abstract:

At home and work, the way we experience the web (share, search and interact with data) is undergoing an industry-changing paradigm shift from “the web of documents” to the “web of data” which enables new data-driven experiences to be easily created for any platform or device.

Come to this session to see how OData is helping to enable this shift through a hands-on look at the near term roadmap for the Open Data Protocol and see how it will enable a new set of user experiences. From support for offline applications, to hypermedia-driven UI and much more, join us in this session to see how OData is evolving based on your feedback to enable creating immersive user experiences for any device.

Watch the video here.

The WCF Data Services Team posted a Reference Data Caching Walkthrough on 4/13/2011 (missed when posted):

This walkthrough shows how a simple web application can be built using the reference data caching features in the “Microsoft WCF Data Services For .NET March 2011 Community Technical Preview with Reference Data Caching Extensions” aka “Reference Data Caching CTP”. The walkthrough isn’t intended for production use but should be of value in learning about the caching protocol additions to OData as well as to provide practical knowledge in how to build applications using the new protocol features.
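The walkthrough does not spell out the caching protocol itself, so the following Python sketch only illustrates the general idea of change tracking with a delta token: a client caches data, remembers a token, and later asks only for what changed since that token. The `ChangeTrackedFeed` class and its methods are hypothetical, are not part of the CTP, and do not handle deletions.

```python
class ChangeTrackedFeed:
    """In-memory feed that records a version number for every write."""

    def __init__(self):
        self._version = 0
        self._rows = {}  # key -> (version of last write, value)

    def upsert(self, key, value):
        # Every write bumps the feed version and stamps the row with it.
        self._version += 1
        self._rows[key] = (self._version, value)

    def changes_since(self, token=0):
        """Return (rows written after token, new token for the next call)."""
        changed = {
            key: value
            for key, (version, value) in self._rows.items()
            if version > token
        }
        return changed, self._version
```

A client would call `changes_since()` once to fill its cache, store the returned token, and pass it back on later calls so that only newer rows cross the wire.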

Walkthrough Sections

The walkthrough contains five sections as follows:

- Setup: This defines the pre-requisites for the walkthrough and links to the setup of the Reference Data Caching CTP.

- Create the Web Application: A web application will be used to host an OData reference data caching-enabled service and HTML 5 application. In this section you will create the web app and configure it.

- Build an Entity Framework Code First Model: An EF Code First model will be used to define the data model for the reference data caching-enabled application.

- Build the service: A reference data caching-enabled OData service will be built on top of the Code First model to enable access to the data over HTTP.

- Build the front end: A simple HTML5 front end that uses the datajs reference data caching capabilities will be built to show how OData reference data caching can be used.

Setup

The pre-requisites and setup requirements for the walkthrough are:

- This walkthrough assumes you have Visual Studio 2010, SQL Server Express, and SQL Server Management Studio 2008 R2 installed.

- Install the Reference Data Caching CTP. This setup creates a folder at “C:\Program Files\WCF Data Services March 2011 CTP with Reference Data Caching\Binaries\” that contains:

  - A .NETFramework folder with:

    - i. An EntityFramework.dll that allows creating off-line enabled models using the Code First workflow.

    - ii. A System.Data.Services.Delta.dll and System.Data.Services.Delta.Client.dll that allow creation of delta enabled Data Services.

  - A JavaScript folder with:

    - i. Two delta-enabled datajs OData library files: datajs-0.0.2 and datajs-0.0.2.min.js.

    - ii. A .js file that leverages the caching capabilities inside of datajs for the walkthrough: TweetCaching.js

Create the web application

Next you’ll create an ASP.NET Web Application where the OData service and HTML5 front end will be hosted.

- Open Visual Studio 2010 and create a new ASP.NET web application and name it ConferenceReferenceDataTest. When you create the application, make sure you target .NET Framework 4.

- Add the .js files in “C:\Program Files\WCF Data Services March 2011 CTP with Reference Data Caching\Binaries\Javascript” to your scripts folder.

- Add a reference to the new reference data caching-enabled data service libraries found in “C:\Program Files\WCF Data Services March 2011 CTP with Reference Data Caching\Binaries\.NETFramework”:

  - Microsoft.Data.Services.Delta.dll

  - Microsoft.Data.Services.Delta.Client.dll

- Add a reference to the reference data caching-enabled EntityFramework.dll found in “C:\Program Files\WCF Data Services March 2011 CTP with Reference Data Caching\Binaries\.NETFramework”.

- Add a reference to System.ComponentModel.DataAnnotations.

Build your Code First model and off-line enable it.

In order to build a Data Service we need a data model and data to expose. You will use Entity Framework Code First classes to define the delta enabled model and a Code First database initializer to ensure there is seed data in the database. When the application runs, EF Code First will create a database with appropriate structures and seed data for your delta-enabled service.

- Add a C# class file to the root of your project and name it model.cs.

- Add the Session and Tweet classes to your model.cs file. Mark the Tweet class with the DeltaEnabled attribute. Marking the class with this attribute forces Code First to generate additional database structures to support change tracking for Tweet records.

    public class Session
    {
        public int Id { get; set; }
        public string Name { get; set; }
        public DateTime When { get; set; }
        public ICollection<Tweet> Tweets { get; set; }
    }

    [DeltaEnabled]
    public class Tweet
    {
        [Key, DatabaseGenerated(DatabaseGeneratedOption.Identity)]
        [Column(Order = 1)]
        public int Id { get; set; }

        [Key]
        [Column(Order = 2)]
        public int SessionId { get; set; }

        public string Text { get; set; }

        [ForeignKey("SessionId")]
        public Session Session { get; set; }
    }

Note the attributes used to further configure the Tweet class for a composite primary key and foreign key relationship. These attributes will directly affect how Code First creates your database.

3. Add a using statement at the top of the file so the DeltaEnabled annotation will resolve:

    using System.ComponentModel.DataAnnotations;

4. Add a ConferenceContext DbContext class to your model.cs file and expose Session and Tweet DbSets from it.

    public class ConferenceContext : DbContext
    {
        public DbSet<Session> Sessions { get; set; }
        public DbSet<Tweet> Tweets { get; set; }
    }

5. Add a using statement at the top of the file so the DbContext and DbSet classes will resolve:

    using System.Data.Entity;

6. Add seed data for sessions and tweets to the model by adding a Code First database initializer class to the model.cs file:

    public class ConferenceInitializer : IncludeDeltaEnabledExtensions<ConferenceContext>
    {
        protected override void Seed(ConferenceContext context)
        {
            Session s1 = new Session() { Name = "OData Futures", When = DateTime.Now.AddDays(1) };
            Session s2 = new Session() { Name = "Building practical OData applications", When = DateTime.Now.AddDays(2) };
            Tweet t1 = new Tweet() { Session = s1, Text = "Wow, great session!" };
            Tweet t2 = new Tweet() { Session = s2, Text = "Caching capabilities in OData and HTML5!" };
            context.Sessions.Add(s1);
            context.Sessions.Add(s2);
            context.Tweets.Add(t1);
            context.Tweets.Add(t2);
            context.SaveChanges();
        }
    }

7. Ensure the ConferenceInitializer is called whenever the Code First DbContext is used by opening the global.asax.cs file in your project and adding the following code:

    void Application_Start(object sender, EventArgs e)
    {
        // Code that runs on application startup
        Database.SetInitializer(new ConferenceInitializer());
    }

Calling the initializer will direct Code First to create your database and call the ‘Seed’ method above, placing data in the database.

8. Add a using statement at the top of the global.asax.cs file so the Database class will resolve:

    using System.Data.Entity;

Build an OData service on top of the model

Next you will build the delta enabled OData service. The service allows querying for data over HTTP just as any other OData service but in addition provides a “delta link” for queries over delta-enabled entities. The delta link provides a way to obtain changes made to sets of data from a given point in time. Using this functionality an application can store data locally and incrementally update it, offering improved performance, cross-session persistence, etc.

1. Add a new WCF Data Service to the Project and name it ConferenceService.

2. Remove the references to System.Data.Services and System.Data.Services.Client under the References treeview. We are using the Data Service libraries that are delta enabled instead.

3. Change the service to expose sessions and tweets from the ConferenceContext and change the protocol version to V3. This tells the OData delta enabled service to expose Sessions and Tweets from the ConferenceContext Code First model created previously.

    public class ConferenceService : DataService<ConferenceContext>
    {
        // This method is called only once to initialize service-wide policies.
        public static void InitializeService(DataServiceConfiguration config)
        {
            config.SetEntitySetAccessRule("Sessions", EntitySetRights.All);
            config.SetEntitySetAccessRule("Tweets", EntitySetRights.All);
            config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V3;
        }
    }

4. Right-click on ConferenceService.svc in Solution Explorer and click “View Markup”. Change the Data Services reference as follows.

Change:

<%@ ServiceHost Language="C#" Factory="System.Data.Services.DataServiceHostFactory, System.Data.Services, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089" Service="ConferenceReferenceDataTest.ConferenceService" %>

To:

<%@ ServiceHost Language="C#" Factory="System.Data.Services.DataServiceHostFactory, Microsoft.Data.Services.Delta, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089" Service="ConferenceReferenceDataTest.ConferenceService" %>

6. Change the ConferenceService.svc to be the startup file for the application by right clicking on ConferenceService.svc in Solution Explorer and choosing “Set as Start Page”.

7. Run the application by pressing F5.

- In your browser settings ensure feed reading view is turned off. Using Internet Explorer 9 you can turn feed reading view off by choosing Options->Content then clicking “Settings for Feeds and Web Slices”. In the dialog that opens uncheck the “Turn on feed reading view” checkbox.

- Request all Sessions with this OData query: http://localhost:11497/ConferenceService.svc/Sessions (note the port, 11497, will likely be different on your machine) and note there’s no delta link at the bottom of the page. This is because the DeltaEnabled attribute was not placed on the Session class in the model.cs file.

- Request all tweets with this OData query: http://localhost:11497/ConferenceService.svc/Tweets and note there is a delta link at the bottom of the page:

<link rel="http://odata.org/delta" href="http://localhost:11497/ConferenceService.svc/Tweets?$deltatoken=2002" />

The delta link is shown because the DeltaEnabled attribute was used on the Tweet class.

- The delta link will allow you to obtain any changes made to the data from that point in time onward. You can think of it as a reference to what your data looked like at a specific point in time. For now, copy just the delta link to the clipboard with Control-C.

8. Open a new browser instance, paste the delta link into the nav bar: http://localhost:11497/ConferenceService.svc/Tweets?$deltatoken=2002, and press enter.

9. Note the delta link returns no new data. This is because no changes have been made to the data in the database since we queried the service and obtained the delta link. If any changes are made to the data (Inserts, Updates or Deletes) changes would be shown when you open the delta link.

10. Open SQL Server Management Studio, create a new query file and change the connection to the ConferenceReferenceDataTest.ConferenceContext database. Note the database was created by a Code First convention based on the classes in model.cs.

11. Execute the following query to add a new row to the Tweets table:

insert into Tweets (Text, SessionId) values ('test tweet', 1)

12. Refresh the delta link and note that the newly inserted test tweet record is shown. Notice also that an updated delta link is provided, giving a way to track any changes from the current point in time. This shows how the delta link can be used to obtain changed data. A client can hence use the OData protocol to query for delta-enabled entities, store them locally, and update them as desired.

13. Stop debugging the project and return to Visual Studio.
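The client side of the delta workflow in the steps above can be sketched as follows. This is a minimal illustration, not the datajs or TweetCaching.js API: the `deltaTokenOf`, `keyOf` and `applyDelta` helpers, the `deleted` flag on change records, and the in-memory `Map` standing in for a local store are all assumptions made for the example. Only the composite key (Id, SessionId) and the `$deltatoken` query option come from the walkthrough.

```javascript
// Hypothetical helpers showing how a client might apply a delta response
// to locally stored tweets. Not part of datajs or the CTP libraries.

// Extract the $deltatoken value from a delta link URL.
function deltaTokenOf(deltaLink) {
  return new URL(deltaLink).searchParams.get("$deltatoken");
}

// Tweets use a composite key (Id, SessionId) in the walkthrough's model.
function keyOf(tweet) {
  return tweet.Id + ":" + tweet.SessionId;
}

// Merge a list of changes into the local store.
// Assumed change shape: a tweet object, with deleted === true for removals.
function applyDelta(store, changes) {
  for (const change of changes) {
    if (change.deleted) {
      store.delete(keyOf(change));
    } else {
      store.set(keyOf(change), change); // insert or update
    }
  }
  return store;
}

// Initial load: the full Tweets feed.
const store = new Map();
applyDelta(store, [
  { Id: 1, SessionId: 1, Text: "Wow, great session!" },
  { Id: 2, SessionId: 2, Text: "Caching capabilities in OData and HTML5!" }
]);

// Later: the delta link returns only what changed since the token was issued.
applyDelta(store, [
  { Id: 3, SessionId: 1, Text: "test tweet" },
  { Id: 2, SessionId: 2, deleted: true }
]);

const token = deltaTokenOf(
  "http://localhost:11497/ConferenceService.svc/Tweets?$deltatoken=2002"
);
console.log(token, [...store.values()].map(t => t.Text));
```

The point of the sketch is that the client never re-downloads the full Tweets set; it keeps the last delta link and merges whatever that link returns.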

Write a simple HTML5 front end

We’re going to use HTML5 and the reference data caching-enabled datajs library to build a simple front end that allows browsing sessions and viewing session detail with tweets. We’ll leverage a pre-written library that uses the reference data capabilities added to OData and leverages the datajs local store capabilities for local storage.

1. Add a new HTML page to the root of the project and name it SessionBrowsing.htm.

2. Add the following HTML to the <body> section of the .htm file:

    <body>
        <button id='ClearDataJsStore'>Clear Local Store</button>
        <button id='UpdateDataJsStore'>Update Tweet Store</button>
        <br />
        <br />
        Choose a Session:
        <select id='sessionComboBox'>
        </select>
        <br />
        <br />
        <div id='SessionDetail'>No session selected.</div>
    </body>

3. At the top of the head section, add the following script references immediately after the head element:

    <head>
        <script src="http://ajax.aspnetcdn.com/ajax/jQuery/jquery-1.5.2.min.js" type="text/javascript"></script>
        <script src=".\Scripts\datajs-0.0.2.js" type="text/javascript"></script>
        <script src=".\Scripts\TweetCaching.js" type="text/javascript"></script>
        …

4. In the head section, add the following javascript functions. These functions:

- Pull sessions from our OData service and add them to your combobox.

- Create a javascript handler for when a session is selected from the combobox.

- Create handlers for clearing and updating the local store.

    <script type="text/javascript">
        //load all sessions through OData
        $(function () {
            //query for loading sessions from OData
            var sessionQuery = "ConferenceService.svc/Sessions?$select=Id,Name";

            //initial load of sessions
            OData.read(sessionQuery,
                function (data, request) {
                    $("#sessionComboBox").html("");
                    $("<option value='-1'></option>").appendTo("#sessionComboBox");
                    var i, length;
                    for (i = 0, length = data.results.length; i < length; i++) {
                        $("<option value='" + data.results[i].Id + "'>" + data.results[i].Name + "</option>").appendTo("#sessionComboBox");
                    }
                },
                function (err) {
                    alert("Error occurred " + err.message);
                }
            );

            //handler for combo box
            $("#sessionComboBox").change(function () {
                var sessionId = $("#sessionComboBox").val();
                if (sessionId > 0) {
                    var sessionName = $("#sessionComboBox option:selected").text();
                    //open the datajs local store named TweetStore
                    var localStore = datajs.createStore("TweetStore");
                    document.getElementById("SessionDetail").innerHTML = "";
                    $('#SessionDetail').append('Tweets for session ' + sessionName + ":<br>");
                    AppendTweetsForSessionToForm(sessionId, localStore, "SessionDetail");
                } else {
                    var detailHtml = "No session selected.";
                    document.getElementById("SessionDetail").innerHTML = detailHtml;
                }
            });

            //handler for clearing the store
            $("#ClearDataJsStore").click(function () {
                ClearStore();
            });

            //handler for updating the store
            $("#UpdateDataJsStore").click(function () {
                UpdateStoredTweetData();
                alert("Local Store Updated!");
            });
        });
    </script>

5. Change the startup page for the application to the SessionBrowsing.htm file by right clicking on SessionBrowsing.htm in Solution Explorer and choosing “Set as Start Page”.

6. Run the application. The application should look similar to:

- Click the ‘Clear Local Store’ button: This removes all data from the cache.

- Click the ‘Update Tweet Store’ button. This refreshes the cache.

- Choose a session from the combo box and note the tweets shown for the session.

- Switch to SSMS and add, update or remove tweets from the Tweets table.

- Click the ‘Update Tweet Store’ button then choose a session from the drop down box again. Note tweets were updated for the session in question.

This simple application uses OData delta enabled functionality in an HTML5 web application using datajs. In this case datajs local storage capabilities are used to store Tweet data and datajs OData query capabilities are used to update the Tweet data.

Conclusion

This walkthrough offers an introduction to how a delta enabled service and application could be built. The objective was to build a simple application in order to walk through the reference data caching features in the OData Reference Data Caching CTP. The walkthrough isn’t intended for production use but hopefully is of value in learning about the protocol additions to OData as well as in providing practical knowledge in how to build applications using the new protocol features. Hopefully you found the walkthrough useful. Please feel free to give feedback in the comments for this blog post as well as in our prerelease forums here.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, Caching, WIF and Service Bus

David Hardin reported on 5/2/2011 the availability of two new articles about Silverlight and Claims-Based Authentication in Windows Azure:

I wrote a series of lessons learned articles which Daniel Odievich helped me publish over on the TechNet Wiki. If you’re planning on Azure hosting Silverlight business applications you’ll want to check out these links before you get started:

Wade Wegner (@wadewegner) posted Cloud Cover Episode 45 - Windows Azure AppFabric Caching with Karandeep Anand on 4/29/2011 (missed when posted):

Join Wade and Steve each week as they cover the Windows Azure Platform. You can follow and interact with the show at @CloudCoverShow.

In this episode, Karandeep Anand joins Steve to discuss the newly-released Windows Azure AppFabric Caching service and show you exactly how to get started using Caching in your application.

In the news:

- Windows Azure SDK 1.4 refresh

- NewsGator Channel 9 interview

- DeployToAzure project on CodePlex: deploy as part of your TFS build

- Windows Azure AppFabric Caching released!

Get the full source code for http://cloudcovercache.cloudapp.net.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Bruce Kyle offered Tips on How to Earn the ‘Powered by Windows Azure’ Logo in a 5/2/2011 post to the US ISV Evangelism blog:

The ‘Powered by Windows Azure’ logo is designed for applications that have been verified as running on Windows Azure and meet the requirements outlined here.

Earning the logo is one of the important steps to earning the Silver ISV Competency in the Microsoft Partner Network (MPN). Benefits of earning the ISV Competency include Internal-use rights for software relevant to the Silver ISV/Software competency, marketing campaigns, and more.

How to Earn the ‘Powered by Windows Azure’ Logo

Directions/Steps to get the “Powered By Windows Azure” Logo (and use it for Silver Competency):

1) Create a Microsoft Platform Ready (MPR) account.

NOTE if you are a Partner Network member please use the same LiveID for MPR as is used as the master LiveID of your MPN account (this is required to get credit in MPN for your MPR application(s)) ref: http://www.microsoftplatformready.com/us/Dashboard.aspx

2) Add your Windows Azure application(s) to your MPR Account (this will be the default page after creating your MPR account; otherwise via the “Develop” tab).

3) Click on the “Test” tab

4) View the update “Microsoft Platform Ready Test Tool: Training Video” – it is 11 minutes long and will save you more than 11 minutes after viewing it…

5) Click on “Test My Apps” then click on the “Microsoft Platform Ready Test Tool” link to download it to install and run on Windows 2008 R2 or Windows 7. NOTE: you will need your “Application ID” when running the test so make sure you have it available.

6) Run the test.

7a) If your Windows Azure application test does NOT pass the test then please email devspec@microsoft.com who will help you troubleshoot issues.

7b) If your Windows Azure application test passes the test then package the “Microsoft Platform Ready Test Tool” results as in the screen shots below.

Then browse to and upload the test “Toolkit Results File” (via the [Browse] and [Upload] buttons in the screen capture above).

8) You can now download the Powered by Windows Azure logo artwork from the MPR website.

Go to “Test” in the middle tab; and “View My Reports” on the left tab.

9) View the Marketing Benefits tab > My Marketing Benefits for any additional benefits that are now unlocked.

Next Steps to Earn the ISV Competency

Review the requirements to attain the ISV/Software competency.

Next:

- Provide three verifiable customer references. (Get details.)

- Complete a full profile.

- Pay the silver membership fee.

Special Limited Time Offer for US MPN Members

Get your application compatible with SQL Server 2008, SQL Server Express, SQL Server 2008 R2 or Windows Azure between now and May 31, 2011 to receive a free copy of Microsoft Office Professional 2010! Office 2010 gives you the best-in-class tools to help you grow your business. Get your application compatible today! View Promotion Terms & Conditions.

Offer good for US ISVs only. Available for MPN partners only.

About ‘Powered by Windows Azure’ Logo

The Powered By Windows Azure program is designed to indicate to the public that your application meets a set of requirements set out by Microsoft. The logo is reserved for applications that meet the Microsoft requirements as outlined below.

- Completion of the Powered By Windows Azure program application

- Verification by Microsoft that the application or service runs within the valid Windows Azure IP address space

- Validation by submitting parties that application or service is not in violation of law or otherwise engaged in, promoting, or abetting unlawful activities, hate speech, pornography, libel, directly competitive or otherwise objectionable content

- Functionality and performance meets minimum specifications as determined by Microsoft

- Once the submission is accepted, it is the Licensee’s responsibility to ensure the quality of its application or service is maintained, and the eligibility requirements are continuously met.

Getting Started with Windows Azure

See the Getting Started with Windows Azure site for links to videos, developer training kit, software developer kit and more. Get free developer tools too.

For free technical help in your Windows Azure applications, join Microsoft Platform Ready.

Learn What Other ISVs Are Doing on Windows Azure

For other videos about independent software vendors (ISVs) on Windows Azure, see:

- Accumulus Makes Subscription Billing Easy for Windows Azure

- Azure Email-Enables Lists, Low-Cost Storage for SharePoint

- Crowd-Sourcing Public Sector App for Windows Phone, Azure

- Food Buster Game Achieves Scalability with Windows Azure

- BI Solutions Join On-Premises To Windows Azure Using Star Analytics Command Center

- NewsGator Moves 3 Million Blog Posts Per Day on Azure

- How Quark Promote Hosts Multiple Tenants on Windows Azure

I went through this process a few months ago to earn the Powered By Windows Azure logo for my OakLeaf Systems Azure Table Services Sample Project - Paging and Batch Updates Demo.

Brian Hitney described overcoming scalability issues in a Rock, Paper, Azure Deep Dive: Part 2 post of 5/2/2011:

In part 1, I detailed some of the specifics in getting the Rock, Paper, Azure (RPA) up and running in Windows Azure. In this post, I’ll start detailing some of the other considerations in the project – in many ways, this was a very real migration scenario of a reasonably complex application. (This post doesn’t contain any helpful info in playing the game, but those interested in scalability or migration, read on!)

The first issue we had with the application was scalability. Every time players are added to the game, the scalability requirements of course increase. The original purpose of the engine wasn’t to be some big open-ended game played on the internet; I imagine the idea was to host small games (10 or fewer players). While the game worked fine for < 10 players, we started to hit some brick walls as we climbed to 15, and then some dead ends around 20 or so.

This is not a failing of the original app design because it was doing what it was intended to do. In my past presentations on scalability and performance, the golden rule I always discuss is: you have to be able to benchmark and measure your performance. Whether it is 10 concurrent users or a million, there should always be some baseline metric for the application (requests/sec., load, etc.). In this case, we wanted to be able to quickly run (within a few minutes) a 100 player round, with capacity to handle 500 players.

The problem with reaching these numbers is that as the number of players goes up, the number of games played goes up drastically (N * (N - 1) / 2). Even for just 50 players, the curve looks like this:

Now imagine 100 or 500 players! The first step in increasing the scale was to pinpoint the two main problem areas we identified in the app. The primary one was the threading model around making a move. In an even match against another player, roughly 2,000 games will be played. The original code would spin up a thread for each move for each game in the match. That means that for a single match, a total of 4,000 threads are created, and in a 100-player round, 4,950 matches = 19,800,000 threads! For 500 players, that number swells to 499,000,000.

The advantage of the model, though, is that should a player go off into the weeds, the system can abort the thread and spin up a new thread in the next game.

What we decided to do is create a single thread per player (instead of a thread per move). By implementing 2 wait handles in the class (specifically a ManualResetEvent and AutoResetEvent) we can accomplish the same thing as the previous method. (You can see this implementation in the Player.cs file in the DecisionClock class.)

The obvious advantage here is that we go from 20 million threads in a 100 player match to around 9,900 – still a lot, but significantly faster. In the first tests, 5 to 10 player matches would take around 5+ minutes to complete. Factored out (we didn’t want to wait) a 100 player match would take well over a day. In this model, it’s significantly faster – a 100 player match is typically complete within a few minutes.
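The thread counts quoted above can be checked with a short sketch. The function and constant names are made up for illustration; the 4,000 threads-per-match figure is the one quoted in the post, and the two-threads-per-match figure follows from having one thread per player in each match.

```javascript
// Round-robin: every player meets every other player once.
function matchesInRound(players) {
  return (players * (players - 1)) / 2;
}

// Original model: the post quotes about 4,000 threads per match
// (one thread per move, for each game in the match).
const THREADS_PER_MATCH_THREAD_PER_MOVE = 4000;

// Revised model: one long-lived thread per player, two players per match.
const THREADS_PER_MATCH_THREAD_PER_PLAYER = 2;

function threadCounts(players) {
  const matches = matchesInRound(players);
  return {
    matches: matches,
    perMove: matches * THREADS_PER_MATCH_THREAD_PER_MOVE,
    perPlayer: matches * THREADS_PER_MATCH_THREAD_PER_PLAYER
  };
}

const round100 = threadCounts(100);
const round500 = threadCounts(500);
console.log(round100, round500);
```

For 100 players this reproduces the 4,950 matches, the 19.8 million threads of the original model, and the roughly 9,900 threads of the revised one.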

The next issue was multithreading the game thread itself. In the original implementation, games would be played in a loop that would match all players against each other, blocking on each iteration. Our first thought was to use the Parallel Extensions (or PFx) libraries built into .NET 4 and kick off each game as a Task. This did indeed work, but the problem was that games are so CPU intensive that creating more than 1 thread per processor is a bad idea. If the system decided to context switch when it was your move, it could create a problem with the timing, and we had an issue with a few timeouts from time to time. Since modifying the underlying thread pool thread count is generally a bad idea, we decided to implement a smart thread pool like the one here on The Code Project. With this, we have the ability to auto scale the threads dynamically based on a number of conditions.
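The idea of capping how many games run at once can be sketched as a bounded task queue. This is not the Smart Thread Pool the post refers to, and JavaScript promises are not threads; `runWithLimit` and the simulated `playGame` task are hypothetical names used only to show the shape of the technique, which is that no more than `limit` tasks are in flight at any time.

```javascript
// Run async tasks with at most `limit` of them in flight at any time.
async function runWithLimit(tasks, limit) {
  const results = new Array(tasks.length);
  let next = 0;

  // Each worker repeatedly claims the next unstarted task until none remain.
  async function worker() {
    while (next < tasks.length) {
      const index = next++; // claimed synchronously, so no two workers share a task
      results[index] = await tasks[index]();
    }
  }

  const workers = [];
  for (let i = 0; i < Math.min(limit, tasks.length); i++) {
    workers.push(worker());
  }
  await Promise.all(workers);
  return results;
}

// Demo: ten simulated games, limit of two, tracking peak concurrency.
let running = 0;
let peak = 0;

function playGame(id) {
  return async function () {
    running++;
    peak = Math.max(peak, running);
    await new Promise(resolve => setTimeout(resolve, 5)); // stand-in for game work
    running--;
    return id;
  };
}

const games = [];
for (let id = 0; id < 10; id++) {
  games.push(playGame(id));
}

const done = runWithLimit(games, 2).then(results => ({ results: results, peak: peak }));
done.then(summary => console.log(summary));
```

In the post's setting the limit would be tied to the processor count, so that a CPU-bound game is not preempted in the middle of a timed move.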

The final issue was memory management. This was solved by design: the issue was that the original engine (and Bot Lab) don’t store any results until the round is over. This means that all the log files really start to eat up RAM…again, not a problem for 10 or 20 players – we’re talking 100-200+ players and the RAM just bogs everything down. The number of players in the Bot Lab is small enough that this wasn’t a concern, and the game server handles this by design by using SQL Azure, recording results as the games are played.

Next time in the deep dive series, we’ll look at a few other segments of the game. Until next time!

The Windows Azure Team posted Orion Energy Systems Moves to Windows Azure To Shed Light on Customer Data and Save Money on 5/2/2011:

Orion Energy Systems today announced that it is using the Windows Azure platform to host InteLite, a sensor-and-control solution designed to help its customers save energy and control lighting costs. This move will enable building managers to view energy-consumption data from multiple facilities from one dashboard on the Windows Azure platform, while saving Orion over $57,000 each year in hardware, labor and maintenance costs.

Read the press release to learn more about this announcement. Read the case study to learn more about how the Windows Azure platform has helped Orion Energy Systems provide an improved solution to customers. Click here to learn how other customers are using the Windows Azure platform.

Toddy Mladenov (@toddysm) described Using DebugView to Troubleshoot Windows Azure Deployments in a 5/1/2011 post:

Recently I fell into the same trap a lot of other developers fall into – my Windows Azure role instances were cycling, and the logs were not helpful in finding out what was happening. Fortunately I was able to connect via Remote Desktop to my instance, and use DebugView to troubleshoot the issue.

Here are the steps you need to go through to troubleshoot your deployment:

- Configure your deployment for Remote Desktop access; for more details see my previous post Remote Desktop Connection to Windows Azure Instance

- In Windows Azure Management Portal select your failing instance and click on the Connect button. Note that the button will not be enabled all the time; normally it becomes enabled when the Guest VM that is hosting your code is up and running (normally, just before your instance is about to become ready)

- Download DebugView from Microsoft’s TechNet site. You may decide to download it directly on the VM if you want to deal with the Enhanced Security for IE. If not you can download it on your local machine and just copy it via the Remote Desktop session.

I copied it under D:\Packages\GuestAgent but you can choose any other folder you have access to.

- Start Dbgview.exe and select what you want to capture from the Capture menu. You can start and stop the capturing by clicking on the magnifying glass in the toolbar.

In my particular case DebugView was very helpful in troubleshooting the command line script that I used as startup task.

David Pallman announced the availability of Azure Storage Explorer 4.0.0.9 Refresh with Blob Security Features on 4/30/2011:

An update to Azure Storage Explorer 4 is now available. The Refresh 9 (4.0.0.9) update provides expanded security support for blobs and containers.

Previous versions of Azure Storage Explorer allowed you to set a container's default access level at time of creation (Private, Public Blob, or Public Container) but you couldn't change the access level after the fact. Nor was there any support for Shared Access Signatures or Shared Access Policies. This release provides all of that. Simply click the new Security button in the Blob toolbar and a Blob & Container Security dialog will open.

There are three tabs on the Blob & Container Security dialog: Container Access, Shared Access Signatures, and Shared Access Policies.

To change a container's default access level, use the Container Access tab. Select the access level you wish and click Update Access Level.

On the Shared Access Signatures tab, you can generate custom URLs that override the default access for container and blobs with custom permission settings (read/write/delete/list) and a time window for validity. You can generate both ad-hoc Shared Access Signatures and policy-based Shared Access Signatures.

For ad-hoc Shared Access Signatures, specify the permissions and time window. You are limited to a maximum of 60 minutes. Click Generate Signature to generate the signature. Once generated, you can use action buttons to copy the signature URL to the clipboard or open it in a browser to test it.

Shared Access Signatures based on policies give you the option to change or revoke the privileges even after you have distributed Shared Access Signatures based on them. To generate a Shared Access Signature based on policy, select a policy on the Shared Access Signature tab and click Generate Signature.

The Shared Access Policies tab allows you to manage your shared access policies. Each policy has a name, permissions, and a validity time window. Unlike ad-hoc Shared Access Signatures, Shared Access Policies aren't limited to a 60-minute maximum time window.
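The 60-minute rule for ad-hoc signatures described above can be expressed as a small check. `validateAdHocWindow` is a hypothetical helper, not part of Azure Storage Explorer or any storage client library; it only illustrates the constraint that an ad-hoc Shared Access Signature's validity window may not exceed one hour, a cap that policy-based signatures do not share.

```javascript
// Maximum validity window for an ad-hoc Shared Access Signature, per the post.
const MAX_AD_HOC_MINUTES = 60;

// Returns true when the window from start to expiry is positive
// and no longer than the ad-hoc maximum.
function validateAdHocWindow(start, expiry) {
  const minutes = (expiry.getTime() - start.getTime()) / 60000;
  return minutes > 0 && minutes <= MAX_AD_HOC_MINUTES;
}

const start = new Date("2011-05-02T12:00:00Z");
const within = validateAdHocWindow(start, new Date("2011-05-02T12:45:00Z"));
const tooLong = validateAdHocWindow(start, new Date("2011-05-02T14:00:00Z"));
console.log(within, tooLong);
```

A window longer than an hour would need a Shared Access Policy instead, which also allows the permissions to be changed or revoked later.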

What's next for Azure Storage Explorer? Probably a maintenance release to address issues and requested features.

Ben Lobaugh (@benlobaugh) explained Connecting to SQL Azure with Excel 2010 in a 4/28/2011 post (missed when posted):

If you need to quickly view data from a SQL Azure database, Excel 2010 can do the heavy lifting and handle connecting to SQL Azure and viewing all your tables with ease. From there you can filter and mash your data however you would like.

2. Select the Data tab and click From Other Sources

3. Choose From Data Connection Wizard

4. From the wizard select Other/Advanced and click Next

5. In the “Data Link Properties” dialog that comes up choose Microsoft OLE DB Provider for ODBC Drivers and click Next

6. Click the radio button Use connection string then click Build…

7. The “Select Data Source” dialog will pop up. Click New…

8. From the “Create New Data Source” list choose SQL Server Native Client 10.0 and click Next

9. Choose a name to save the connection file as and click Next. On the following screen click Finish

10. On the following “Create a New Data Source to SQL Server” dialog enter your connection Description, Server address, and click Next

11. Choose With SQL Server authentication…. Input the Login ID specified as the Uid from your database connection string in the Windows Azure Portal. Input your password then click Next

12. For the next few wizard screens I left everything at defaults and simply clicked Next. When the “ODBC Microsoft SQL Server Setup” dialog popped up I clicked Test Data Source.. to ensure my connection was successful.

13. Click OK until you get back to the “Data Link Properties” dialog and a “SQL Server Login” box should pop up asking for your password. Enter it and click OK

14. You will be returned to the “Data Link Properties” dialog finally and your connection should be all set to go now. You can click Test Connection if you want to make sure the final product works (never a bad idea), but at this point all you need to do to start using your SQL Azure DB connection is click OK

15. From here on out you can use your connection just as you would any other connection
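The wizard steps above end up producing an ODBC connection string. A sketch of what such a string typically looks like for SQL Azure follows. The server, database and login values are placeholders, and `buildConnectionString` is a hypothetical helper; the `login@server` form of the Uid, the `tcp:` prefix with port 1433, and `Encrypt=yes` reflect commonly used SQL Azure connection strings rather than anything stated in the post.

```javascript
// Build an ODBC connection string for the SQL Server Native Client 10.0 driver.
// All argument values used below are placeholders.
function buildConnectionString(options) {
  const parts = [
    "Driver={SQL Server Native Client 10.0}",
    "Server=tcp:" + options.server + ".database.windows.net,1433",
    "Database=" + options.database,
    "Uid=" + options.user + "@" + options.server, // SQL Azure logins take the form login@server
    "Pwd=" + options.password,
    "Encrypt=yes"
  ];
  return parts.join(";") + ";";
}

const connectionString = buildConnectionString({
  server: "myserver",     // placeholder server name
  database: "mydb",       // placeholder database name
  user: "mylogin",        // placeholder login
  password: "<password>"  // placeholder, never hard-code real credentials
});
console.log(connectionString);
```

The Uid here corresponds to the Login ID entered in step 11, taken from the connection string shown in the Windows Azure Portal.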

<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi (@bethmassi) posted on 5/3/2011 a 01:15:54 video clip of her Introduction to Visual Studio LightSwitch session for DevDays 2011 Netherlands:

In this demo-heavy session, you will see, end-to-end, how to build and deploy a data-centric business application using LightSwitch.

This is the first of Beth’s four presos at DevDays 2011 Netherlands. Stay tuned for video clips of the remaining LightSwitch sessions.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Tim Anderson (@timanderson) rings in with a Microsoft’s Scott Guthrie moving to Windows Azure post of 5/3/2011:

According to an internal memo leaked to ZDNet’s Mary Jo Foley, Microsoft’s Scott Guthrie who is currently Corporate VP of the .NET Developer Platform is moving to lead the Azure Application Platform team. This means he will report to Ted Kummert who is in charge of the Business Platform Division, instead of S Somasegar who runs the Developer Division; however both divisions are part of the overall Server and Tools Division. Server and Tools is the division from which Bob Muglia was ousted as president in January; the reason for this is still not clear to me, though I would guess at some significant strategy disagreement with CEO Steve Ballmer.

Guthrie was co-inventor of ASP.NET and is one of the most approachable of senior Microsoft execs; he is popular and respected by developers and his blog is one of the first places I look for in-depth and hands-on explanations of new features in Microsoft’s developer platform, such as ASP.NET MVC and Entity Framework.

I have spent a lot of time researching and using Visual Studio 2010, and while not perfect it is among the most impressive developer products I know, from the detail of the editor and debug features right through to ALM (Application Lifecycle Management) aspects like Team Foundation Server, testing in various forms, and build management. Some of that quality is likely due to Guthrie’s influence. The successful evolution of ASP.NET from web forms towards the leaner and more flexible ASP.NET MVC is another achievement in which I am sure he played a significant role.

Is it wise to take Guthrie away from his first love and over to the Azure platform? Only Microsoft can answer that, and of course he will still be responsible for an ASP.NET platform. I’d guess that we will see further improvement in the Visual Studio tools for Azure as well.

Still, it is a bold move and one that underlines the importance of Azure to the company. In my own research I have gained increasing respect for Azure and I would expect Guthrie’s arrival there to be successful in winning attention from the Microsoft platform developer community.

Mary Jo Foley (@maryjofoley) reported More Microsoft cloud changes coming around identity and commerce on 5/2/2011 to ZDNet’s All About Microsoft blog:

The Microsoft Server and Tools Business reorg announced on May 2 has a lot of moving parts. But the overarching goal is plainly to tighten up Microsoft’s cloud positioning and strategy.

Satya Nadella, the newly appointed President of Microsoft’s Server and Tools Business, already is setting up his team and making his game plan known. At the highest level, Microsoft’s cloud strategy continues to focus around customer choice among public cloud, private cloud, virtualization, platform and tools for new cloud applications — or any mix of these offerings.

Just a couple of months into the job, Nadella is tweaking his organization at a more granular level. I’ve already blogged about the new Azure Application Platform that will be headed, on the engineering side, by Corporate Vice President Scott Guthrie. (Guthrie is overseeing a team that brings together Azure developer, Web and the App Fabric teams.) And then there’s the newly expanded Developer Division, headed by Senior Vice President Soma Somasegar.

But there are some other changes Nadella is instigating, as announced internally today. These include:

* Moving the Commerce Transaction Platform team from the Online Services Division (OSD) to the Server and Tools division. The existing Business Online Services Group (BOSG) Commerce Team will merge with the Commerce Transaction Platform Team, creating a single Commerce team some time after Office 365 becomes generally available (expected this June). Corporate VP of advertising and commerce Rajat Taneja will be the head of this joint team that will support all commerce services across the company, including those that are part of Office 365, Xbox Live, Ad Center and the Windows Azure platform. Taneja will report directly to Nadella.

* Maintaining the current Identity group, which will continue to work on security/identity products for both server and cloud. Lee Nackman, who joined Microsoft in 2009, has been Corporate Vice President, Directory, Access, and Information Protection, but is not going to stay in this role. Corporate VP Dave Thompson, who is leaving Microsoft this summer, is leading the identity team while Microsoft searches for a new head for the division. Nackman is looking for new opportunities, Nadella told his troops today. (I’m not sure if that means that Nackman is no longer going to be heading up the billing and provisioning parts of the Online Services organization, as was the plan just a couple months ago. I’ve asked Microsoft for comment.) …

Read the entire post here.

Mary Jo Foley (@maryjofoley) described Microsoft's plan to increase its focus on developers: The full internal memo on 5/2/2011:

The move of Microsoft Corporate Vice President Scott Guthrie from the .Net developer platform to heading up the newly created Azure Application Platform team is just one part of the Server and Tools Business reorg announced on May 2 inside Microsoft.

There are changes happening on the tools side of the house, as well. Microsoft is consolidating its developer tools and developer evangelism groups under Developer Division Senior Vice President Soma Somasegar. It seems kind of odd to me that these teams weren’t already lumped together, but they weren’t. …

Click here to read Somasegar’s full memo to the troops about today’s moves.

Michael Desmond riffed on the news that Scott Guthrie [Will] Lead Azure Application Platform Team on 5/2/2011 with quotes from me:

Microsoft confirms that .NET Platform head Scott Guthrie is leaving to lead a new Azure group as part of a May reorg.

As previously reported, Scott Guthrie, corporate vice president of the .NET Platform at Microsoft, will move to the Windows Azure group at Microsoft, as part of a May reorganization. All About Microsoft blogger (and Redmond magazine columnist) Mary Jo Foley is reporting that Guthrie will take the reins of the new Azure Application Platform team.

Foley reports that she obtained an internal memo written by Developer Division Senior Vice President S. "Soma" Somasegar, which outlined Guthrie's move and some of the shuffling that will take place with his departure. As Somasegar wrote in the memo, Microsoft needed "a strong leader to help drive the development of our Cloud Application Platform and help us win developers for Azure."

…

Guthrie will report to Ted Kummert, senior vice president of the Business Platform Division. The new Azure Application Platform team will be made up of several existing teams, according to the internal memo. These include the Web Platform and Tools team under Bill Staples, the Application Server Group under Abhay Parasnis, and the Portal and Lightweight Role teams drawn from the Windows Azure team.

Roger Jennings, principal at OakLeaf Systems, said the move makes a lot of sense for an Azure team that faces ramped up competition from IBM and Amazon.

"The Windows Azure Platform team needs the charisma and energy that Scott Guthrie brought to Visual Studio," Jennings wrote in an email interview. "Despite the plethora of new CTPs and feature releases, it seems to me that Windows Azure has been somnolent since Bob Muglia relinquished management of the Server and Tools business and Satya Nadella took over with Bill Laing continuing as the head of the Server and Cloud Division."

…

"Microsoft often takes 'stars' from one team and sends them to another team to try to 'goose' it," Foley wrote in an email exchange. "Azure sales need goosing."

Foley noted that her followers on Twitter offered up ideas for what Guthrie could accomplish in the Azure group, from enabling more open source language support to making the platform "more friendly for developers."

Jennings believes Guthrie will be well prepared for his new role. "Visual Studio has more and longer tentacles than Azure, and certainly a wider range and more users," he said, adding that it's critical that the Azure team "coordinate marketing with development, especially if Microsoft Research will be spending 90 percent of their proceeds on the cloud starting next fiscal year."

Michael posted a related Guthrie’s Gone story to his Desmond File column for VSM on the same day.

Mary Jo Foley (@maryjofoley) confirmed her earlier scoop with a Microsoft reorg: Scott Guthrie to head new Azure Application Platform team post of 5/2/2011 to ZDNet’s All About Microsoft blog:

The expected Microsoft Server and Tools Business (STB) reorg was announced inside the company on May 2.

And as my sources indicated last week, Scott Guthrie, the current Corporate Vice President of the .Net platform at Microsoft, is now going to be running the Azure Application Platform team under Senior Vice President of the Business Platform Division, Ted Kummert.

The Windows Azure team remains under Bill Laing, the Corporate Vice President of the Server and Cloud division. Laing has been heading up the division since Amitabh Srivastava resigned from Microsoft last month.

Here’s a bit from today’s internal memo from Developer Division Senior Vice President Soma Somasegar on Guthrie’s new role and his team:

“Sharpening our Focus around Azure and Cloud Computing. Azure and the cloud are incredibly important initiatives that will play a huge role in the future success of STB and the company. Given the strategic importance of Cloud Computing for STB and Microsoft, we need a strong leader to help drive the development of our Cloud Application Platform and help us win developers for Azure. We’ve asked Scott Guthrie to take on this challenge and lead the Azure Application Platform team that will report to Ted Kummert in BPD… This team will combine the Web Platform & Tools team led by Bill Staples, the Application Server Group led by Abhay Parasnis and the Portal and Lightweight Role teams from the Windows Azure team. Scott’s transition is bittersweet for me. I personally will miss him very much, but I’m confident that Scott will bring tremendous value to our application platform. With Scott’s current organization finishing up important milestones, the timing is right for Scott to take on this role.

“With Scott’s transition, the Client Platform team led by Kevin Gallo will report directly to me and will continue its focus on the awesome work that the team is doing for the different Microsoft platforms. The .NET Core Platform team led by Ian Carmichael will report to Jason Zander which will bring the managed languages and runtime work closer together. Patrick Dussud will report to Ian Carmichael and will continue being the technical leader for .NET.”

To date, Kummert’s Business Platform Division was in charge of SQL Server, SQL Azure, BizTalk Server, Windows Server AppFabric and Windows Azure platform AppFabric, Windows Communication Foundation, and Windows Workflow Foundation. These products are core to Microsoft’s private-cloud strategy and set of deliverables. …

Click here to read the rest of the story.

Kevin Remde (@kevinremde) posted The Cloudy Summary (“Cloudy April” - Part 30) on 4/30/2011:

If you’re living under a rock (or have better things to do) you may have missed the fact that all this month (April 2011) I’ve been creating one post each day having something to do with “the cloud”.

“Yeah. I saw that.”

And today, as a grand finale, I thought I’d list out titles and link to each and every one of them, so that you can save this link and have fast access to all the goodness that was my “Cloudy April” series. So without further adieu…

“’without further adieu’? What does that mean, really? Who says that? You never do...”

Yeah.. I don’t know. Sorry. Take two:

So here they are – My “Cloudy April” series blog posts:

- “Cloudy April” Part 1- Full of I.T. with my head in the clouds

- The Cloud Ate My Job! ( Cloudy April - Part 2)

- SaaS, PaaS, and IaaS.. Oh my! ( Cloudy April - Part 3)

- Getting SAASy ( Cloudy April - Part 4)

- Those stupid To the Cloud! Commercials ( Cloudy April - Part 5)

- No thanks. I'll PaaS. ( Cloudy April - Part 6)

- Windows Azure - the SQL! ( Cloudy April - Part 7)

- Was it cloudy at MMS 2011? (“Cloudy April”–Part 8)

- But what about security? (“Cloudy April”–Part 9)

- Your Cloud Browser (“Cloudy April”–Part 10)

- Let’s Chat Business (“Cloudy April”–Part 11)

- Are You Cloud-Certified? (“Cloudy April” - Part 12)

- Cloudy TechNet Events (“Cloudy April” - Part 13)

- The Springboard Series Tour Is Back, and Partly Cloudy! (“Cloudy April” - Part 14)

- InTune with the Cloud (“Cloudy April” - Part 15)

- I want my.. I want my.. I want my Hyper-V Cloud (“Cloudy April” - Part 16)

- More Hyper-V Goodness (“Cloudy April” - Part 17)

- Show me the money! (“Cloudy April” - Part 18)

- TechEd North America Cloudiness (“Cloudy April” - Part 19)

- Cloud Power-to-the-People! (“Cloudy April”–Part 20)

- Are you subscribed? (the “15-for-12” deal) (“Cloudy April” - Part 21)

- Your Office in the Clouds. 24x7. “365” (“Cloudy April” - Part 22)

- Cloud-in-a-Box (“Cloudy April” - Part 23)

- Building Cloudy Apps (“Cloudy April” - Part 24)

- Manage Your Windows Azure Cloud (“Cloudy April” - Part 25)

- The Role of the VM Role Role (“Cloudy April” - Part 26)

- What’s new in SCVMM 2012 - (“Cloudy April” - Part 27)

- Serve Yourself (“Cloudy April” - Part 28)

- Cloudy Server Configuration Advice (“Cloudy April” - Part 29)

- The Cloudy Summary (“Cloudy April” – Part 30)

CAUTION: Clicking that last link will cause your browser to enter an infinite loop. Fortunately, Windows Azure can complete that loop in 0.04 seconds.

---

Have you found the series useful? I sincerely hope so. I’d love to discuss any of these topics further with you in the comments.

Thanks for reading, participating, and hopefully enjoying these articles. If you’ve enjoyed reading them just half as much as I’ve enjoyed writing them, then I’ve enjoyed them twice as much as you.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

James Staten asked CIOs: At What Stage is Your Thinking on Cloud Economics? in his Forrester blog post of 5/1/2011: