Windows Azure and Cloud Computing Posts for 8/22/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

• Updated 8/24/2012 with new articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Cloud Numerics, Big Data and OData

- Windows Azure Service Bus, Access Control, Caching, Active Directory, and Workflow

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

• Mike Wheatley described Facebook’s Secret Project To Contain It’s Big Data in an 8/24/2012 post to the DevOpsANGLE blog:

The amount of big data that Facebook handles never ceases to amaze. At a subdued press conference this week, attended by only a few reporters, the social media giant revealed a whole host of impressive stats about its data operations.

As thing stand right now, its system processes something close to 500+ terabytes of data and 2.5 billion pieces of content every single day. This includes more than 300 million new photos uploaded each day, plus a staggering 2.7 billion likes every 24 hours!

Such a voluminous amount of data means that Facebook is presented with some unique challenges, one of the biggest of them being how to create server clusters that can operate as a single entity even when they’re located in different parts of the globe.

At this week’s press conference, Facebook gave us some details on their latest infrastructure project, which they’ve codename “Project Prism”.

Jay Parikh, Facebook’s Vice President of Engineering kicked off by speaking about the huge importance that the social media company placed in the project:

“Big data really is about having insights and making an impact on your business. If you aren’t taking advantage of the data you’re collecting, then you just have a pile of data, you don’t have big data.”

Parikh explained that ‘taking advantage’ of data was something that had to be done in a matter of minutes, so that Facebook would be able to instantly understand user reactions and respond to them in something close to real time.

“With 950 million users, every problem is a big data problem, and one of the biggest challenges… is with MapReduce,” added Parikh.

For those who don’t know, MapReduce is one of the most widely-used implementations of Apache Hadoop, a model for processing large data sets using distributed computing and clusters of servers that was created by Facebook in conjunction with Yahoo. To begin with, MapReduce was the perfect system for Facebook to be able to handle the massive quantities of big data it handles, but as its mountain of data grew exponentially each year, it became clear that it was no permanent solution.

“As we got more data and servers over the years, we said, ‘Oh, crap, this isn’t going to fit in our data center. We’re running out of space,’” said Parikh.

“One of the big limitations in Hadoop today is, for the whole thing to work, the servers have to be next to each other. They can’t be geographically dispersed… The whole thing comes crashing to a halt.”

Project Prism has been designed to overcome this challenge. Essentially, the idea is that it will Facebook to take apart its monolithic storage warehouse and scatter it across different locations, whilst still maintaining a single view of all of its data.

“Prism basically institutes namespaces, allowing anyone to access the data regardless of where the data actually resides. … We can move the warehouses around, and we get a lot more flexibility and aren’t bound by the amount of power we can wire up to a single cluster in a data center,” Parikh explained.

Admittedly, Facebook are being kind of vague about how it all works; for now, their engineers are still trying to document the project, although they have promised to publish an engineering blog post about how it all works at a later date. We know one thing though – Facebook will likely make Project Prism open source soon enough.

“Given the other things we’ve done, we want to open source this stuff. These are the next scaling challenges other folks are going to face,” concluded Parikh.

And few can argue with that.

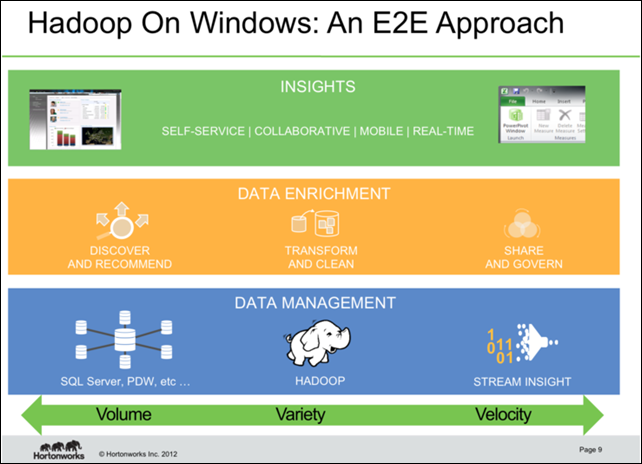

Wenming Ye (@wenmingye) posted Hadoop On WindowsAzure Updated on 8/22/2012:

HadoopOnAzure allows a user to run Hadoop on Microsoft Windows Azure as a service. It is currently in private CTP with very limited capacity, and by invitation only. You may attempt to sign up at https://connect.microsoft.com/SQLServer/Survey/Survey.aspx?SurveyID=13697

To get a feel of what the service currently looks like, please take a look at these learning resources on WindowsAzure.com that I have authored a few months ago. I would also love to get your feedback on addition content you are interested in for learning about Hadoop.

- Hadoop on Windows Azure Tutorials

- Introduction to Hadoop on Windows Azure

- Running Hadoop Jobs on Windows Azure and Analyzing the

Data with the Excel Hive Add-In- Hadoop on Windows Azure - Working With Data

- Analyzing Twitter Movie Data with Hive (additional source at: https://github.com/wenming/BigDataSamples/tree/master/twittersample)

- Simple Recommendation Engine using Apache Mahout

- Additional Learning Resources

I have also given talks at techEd this year, one of the sessions discusses the use scenarios for big data and Hadoop. (samples at https://github.com/wenming/BigDataSamples)

- TechEd Talk Video: Learn Big Data Application Development on Windows Azure

Now, onto the announcement by Henry Zhang on our engineering team today [link added]:

We just updated the Hadoop on Azure site with SU3 bits. Please see below for a list of changes.

If you create a new cluster now, you will be running on the 1.01 Hadoop core bits. We now provide access to the cluster dashboard on the master node directly for you to manage your cluster and schedule jobs. You can simply go to https://<clustername>.cloudapp.net, type in the cluster user name/password you created the cluster with and log in. You will find a familiar experience in the cluster dashboard as before. You will also find preview bits of the Powershell cmdlets and C# SDK for job submission to your Azure cluster. Both tool kits can be downloaded in the 'Download' tab while you are on your cluster. Feedback will be highly welcome!

SU3: Publicly Visible Improvements

- Hadoop core revved from 0.20.203.1 to 1.0.1 on Azure

- Hadoop on Azure dashboard running on cluster master node, accessible via .cloudapp.net">.cloudapp.net">.cloudapp.net">.cloudapp.net">.cloudapp.net">.cloudapp.net">.cloudapp.net">.cloudapp.net">https://<clustername>.cloudapp.net

- REST APIs for hadoop job submission, progress inquery, kill job (no web UI or remote desktop needed) (check out the download link on your cluster)

- Powershell Cmdlet and C# SDK support (SDK 1.0 + Cmdlets are available on the download page for your cluster)

- Same familiar job submission experience in the web UI with dashboard backed up by the REST API in the background

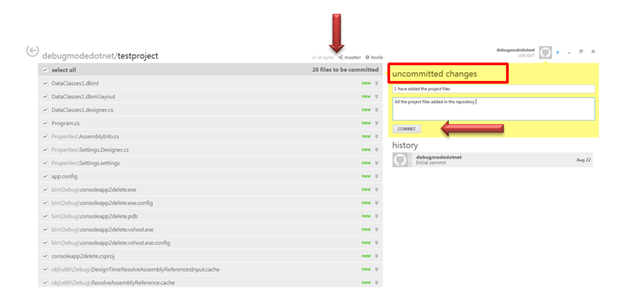

I wasn’t able to find the preceding two download links when clicking the Downloads tile. Here’s a capture of the new URL-accessible dashboard for an OakLeaf Systems cluster:

<Return to section navigation list>

SQL Azure Database, Federations and Reporting

Gregory Leake posted Announcing Enhancements to SQL Data Sync in Windows Azure to the Windows Azure blog on 8/22/2012:

We are happy to announce new service updates for the SQL Data Sync service which are now operational in all Windows Azure data centers. The past two service updates (June and August) have brought the following enhancements to the preview in addition to general reliability improvements:

- Added support for spatial data types including geography and geometry.

- Added ability for user to cancel a data synchronization in progress.

- Enhanced overall performance on initial provisioning and sync tasks

- Enhanced sync performance between on-premises databases and Windows Azure SQL Databases

SQL Data Sync enables creating and scheduling regular synchronizations between Windows Azure SQL Database and either SQL Server or other SQL Databases. You can read more about SQL Data Sync on MSDN. We have also published SQL Data Sync Best Practices on MSDN.

The team is hard at work on future updates as we march towards General Availability, and we really appreciate your feedback to date! Please keep the feedback coming and use the Windows Azure SQL Database Forum to ask questions or get assistance with issues. Have a feature you’d like to see in SQL Data Sync? Be sure to vote on features you’d like to see added or updated using the Feature Voting Forum.

Iain Hunter (@hunt3ri) described SQL Azure - Disaster Recovery in an 8/21/2012 post:

In this post I look at how to set up some tooling to help implement a Disaster Recovery plan for your SQL Azure database.

Fundamentals

The key to any successful DR plan is that it has to be a fire and forget process. If your DR process involves any manual components – ie Bob from infrastructure needs to push a button at 3pm on Wednesdays, you can guarantee that when disaster strikes you’ll discover Bob hasn’t pushed the button since February.

Thus you want to make sure everything is automated, and you want to hear about it if anything goes wrong.

It’s worth pointing out that every SQL Azure instance is mirrored twice, therefore it is highly unlikely you’re going to suffer an actual outage or data loss from unexpected downtime. So what we’re doing here is creating a backup in-case someone inadvertently deletes the Customers table. Of course it never hurts to have a backup under your pillow (so to speak) if it’s going to help you get to sleep at night.

Tooling

Tools you will need:

- Azure Import Export Service Client v 1.6

- Azure Storage Explorer

- Server or PC to set up the scheduled task

Exporting your SQL Azure DB

The first thing we’re going to do is to export your SQL Azure DB to a blob file. The blob file can be used to import your backup into a new DB in the event of disaster.

- If you haven’t already got one, create a new Azure Storage account. It’s a good idea to create this in a different location from your SQL Azure DB, so in the event of a catastropic data-centre melt-down your backup will be located far away. Eg if your database is in North-Europe setup your Storage Account in East-Asia.

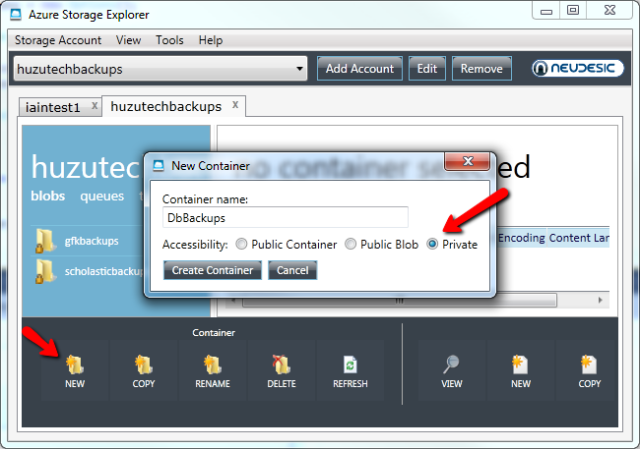

- Now fire-up Azure Storage Explorer and connect to your new storage account. Create a new private container for sticking the backups in. If you don’t create a container you can’t actually save anything into your storage account

- Now we can configure Azure Import Export Client to download your DB into your newly created storage account. This is a command line util which is ideal for automating but for now we’ll just run manually. Run the following, editing for your specific account details:

- Important – Make sure you the BLOBURL argument correctly specifies your container name, ie -BLOBURL http://iainsbackups.blob.core.windows.net/dbbackups/MyDb_120820.bacpac

- If all has gone well you want to see something like below. Note – this command simply kicks off the backup process it may take some time before your backup file is complete, you can actually monitor the backup jobs on the portal if you want.

Importing your SQL Azure DB

A DR plan is of little use if you don’t test your backup, so we want to ensure that our backup file can actually be used to create a rescue DB from. So lets import our .bacpac file to see if we can recreate our DB and connect our app to it.

- We basically reverse the process. This time create a new empty SQL Azure DB

- Now we can configure Azure Import Export Service to import our .bacpac file as follows:

- If it works as expected we should see

- Now you want to connect your app to your DB to ensure it works as expected.

Automating your backups

Now we’ve proven we can export and import our db we want to make sure the process happens automatically so we can forget about it. The easiest way of doing that is to create a simple powershell script that runs the above commands for us, and then schedule it on the task manager.

Here’s a basic script that will run the Import/Export service for us, you can tailor as you see fit. Note that I’m creating a timestamped backup file so we should get a new file every day

Now we have the script we can call it from the task scheduler, I created a Basic Task to run every night at 23:30, to call our script we can just run powershell from the schedular, as so:

Important - You will have to set your powershell executionpolicy to Remotesigned or the script won’t run when called.

Next Steps

So that’s it we’re backing up our Azure DB and storing in Blob storage all for the cost of a few pennies. Next we might want to create a more sophisticated script/program that would email us in event of failure, or tidy up old backups – I’ll leave it up to you

Useful Links

http://msdn.microsoft.com/en-us/library/windowsazure/383f0cb9-0647-4e67-985d-e88369ef0508

<Return to section navigation list>

Marketplace DataMarket, Cloud Numerics, Big Data and OData

• Dan English (@denglishbi) reported Excel Graduates to a Complete and Powerful Self-Service BI Tool in an 8/24/2012 post to the Microsoft Business Intelligence blog:

Today we have a guest post from Microsoft SQL Server MVP Dan English. Dan shares a walkthrough using Power View in Excel 2013. You can find him on twitter @denglishbi and follow his blog at http://denglishbi.wordpress.com/.

It’s been an exciting year for Microsoft’s self-service reporting tool Power View – the feature officially released with SQL Server 2012 in March, and just this last month Power View was integrated into Excel 2013 through the Office 2013 Preview release. With the Excel integration, everyone that uses Excel will be able to create insightful and highly interactive reports that can easily be explored. Let’s take a quick walkthrough of this new capability included in Excel 2013 and explore new features along the way.

Getting Started

In order to take advantage of this new feature, download the Office Professional Plus Preview that can be found here. The only other item required is Silverlight, and if you don’t already have it installed, you will be prompted to do so once you try and use the new Power View report option.

Before we start using Power View, we need some data to work with, so let’s go grab some. For this demonstration I am going to a set of data from the US government that is free to download and explore: A general raw data set, and since the school season is right around the corner, a school-related dataset titled National School Lunch Assistance Program Participation and Meals Served Data. The data is available in an Excel format and after a bit of manipulation and consolidation – basically unpivoting the data provided, cleaning up some blank records and formatting data values – I came up with a table of data that we can start to work with.

Creating Reports

Now without doing anything with my data, I first need to switch over to the Insert tab in the Ribbon and click on the Power View button in the Reports section.

As I mentioned previously, Power View does require Silverlight, so you will be prompted and provided an option to download and install Silverlight 5 if you haven’t already. After this is downloaded and installed, we can click the Reload button and see what we have to work with.

Now we can start exploring the data, look for anomalies, and see if there are any trends.

When we first add items from the Power View Fields area onto the canvas area they will start out in a table layout. You can alter the format of the numeric data and change the layout into other visualizations such as charts. After doing that we end up with a report that looks like this:

As you can see, the numbers have been steadily increasing since 2007 (the fiscal year starts in October). One thing that we spot when comparing the overall totals by state/territory is that Georgia has a fairly high meals served ranking versus the number of participants.

Performing Analysis

We can quickly create another report and filter down to see the data just for Georgia. After we create a new Power View report worksheet we can then add a filter for Georgia in the Filters area and then copy and paste items over from the first report.

Now we can see what was going on specific to Georgia – over the past few years the number of meals served is decreasing.

How about we take the data, map it out, and see what we have for last year?

We can see that California, Texas, and New York served the most meals last year. The east coast of the country seems to have more activity than the central, as well.

Conclusion

So far we have only scratched the surface as to what we could do with this data. We haven’t even gone into Power Pivot yet, but we have been working with a model (and yes, that model does reside in Power Pivot inside Excel). The data that we have been using could easily be related to other sources and we could go into Power Pivot and start to add additional measures or key performance indicators (KPIs) to determine the Average Meals per Participant, Total Cost, or the Year-over-Year Growth values and percentages. The sky is the limit with what we can do with the data, and amazingly we are able to do all of this inside Microsoft Excel. Now we can perform analysis on our desktop and share this with others and it is only a few clicks away!

Resources

For more information about Power View and Microsoft Business Intelligence you can check out the following:

- Visualizing Data with Microsoft Power View (book)

- Power View Overview

- What’s new in Power View in Excel 2013

- Sean Boon’s Blog (great series on Olympic data)

- Microsoft BI (web site)

- Microsoft BI Blogs (consolidated list)

- Dan English, Microsoft SQL Server MVP, Principal BI Consultant at Superior Consulting Services, LLC.

Bought a copy of Dan’s book from Amazon.

• Paul Miller (@PaulMiller) posted Data Markets, revisited on 8/24/2012:

Earlier this year, I conducted a series of podcasts with some of the leading lights in the Data Market business.

We delved into the things that differentiated them from one another, and we searched for the areas of commonality that might provide some boundaries to the rather fluid concept of a market for a non-rival good like data. Through ten separate conversations, I had opportunities to talk with old friends and acquaintances, and to explore the ideas of people whom I had previously only admired from afar.

Some of the insights from that process have now been written up in a report for GigaOM Pro; Data Markets: In search of new business models.

As the blurb states,

From information on U.S. census returns to the location of every Starbucks in Canada, the demand for data to support decision making is increasing. Fittingly, a number of new data markets have emerged in the past few years that provide access to this data.

A wide range of companies exists in this space, and often there are more differences than similarities in the various products on offer, not to mention the many different financial models. This report describes the basics of a data market, explores the ways in which various companies are beginning to position their offerings, and looks for evidence that there is sufficient demand for this market segment to prove sustainable.

GigaOM Pro reports are only available to subscribers. If you aren’t (yet!) a subscriber and want to read this report, you can sign up for a week’s free trial access to the site.

Full disclosure: I’m a registered GigaOm analyst.

Julie Lerman (@julielerman) reported You can now start building WinRT apps with OData in an 8/23/2012 post:

Many devs have been waiting for a toolkit so they can consume OData from [Metro] “Windows Store” apps they are building. The Release Candidate of these tools is now on the download center:

WCF Data Services Tools for Windows Store Apps RC:

“The WCF Data Services Tools for Windows Store Apps installer extends the Add Service Reference experience with client-side OData support for Windows Store Apps in Visual Studio 2012.”

http://www.microsoft.com/en-us/download/details.aspx?id=30714

Mark Stafford from the OData team has written two blog posts that walk you through using the tooling and creating a WinRT (aka Windows Store) app:

Julie = Lucy? Check out the OData 101 series below:

The WCF Data Services Team (formerly the Astoria Team) continued their series with OData 101: Building our first OData-based Windows Store app (Part 2) on 8/23/2012:

In the previous blog post, we walked through the steps to build an OData-enabled client using the new Windows UI. In this blog post, we’ll take a look at some of the code that makes it happen.

ODataBindable, SampleDataItem and SampleDataGroup

In the walkthrough, we repurposed SampleDataSource.cs with some code from this gist. In that gist, ODataBindable, SampleDataItem and SampleDataGroup were all stock classes from the project template (ODataBindable was renamed from SampleDataCommon, but otherwise the classes are exactly the same).

ExtensionMethods

The extension methods class contains two simple extension methods. Each of these extension methods uses the Task-based Asynchronous Pattern (TAP) to allow the SampleDataSource to execute an OData query without blocking the UI.

For instance, the following code uses the very handy Task.Factory.FromAsync method to implement TAP:

public static async Task<IEnumerable<T>> ExecuteAsync<T>(this DataServiceQuery<T> query) { return await Task.Factory.FromAsync<IEnumerable<T>>(query.BeginExecute(null, null), query.EndExecute); }SampleDataSource

The SampleDataSource class has a significant amount of overlap with the stock implementation. The changes I made were to bring it just a bit closer to the Singleton pattern and the implementation of two important methods.

Search

The Search method is an extremely simplistic implementation of search. In this case it literally just does an in-memory search of the loaded movies. It is very easy to imagine passing the search term through to a .Where() clause, and I encourage you to do so in your own implementation. In this case I was trying to keep the code as simple as possible.

public static IEnumerable<SampleDataItem> Search(string searchString) { var regex = new Regex(searchString, RegexOptions.CultureInvariant | RegexOptions.IgnoreCase | RegexOptions.IgnorePatternWhitespace); return Instance.AllGroups .SelectMany(g => g.Items) .Where(m => regex.IsMatch(m.Title) || regex.IsMatch(m.Subtitle)) .Distinct(new SampleDataItemComparer()); }LoadMovies

The LoadMovies method is where the more interesting code exists.

public static async void LoadMovies() { IEnumerable<Title> titles = await ((DataServiceQuery<Title>)Context.Titles .Expand("Genres,AudioFormats,AudioFormats/Language,Awards,Cast") .Where(t => t.Rating == "PG") .OrderByDescending(t => t.ReleaseYear) .Take(300)).ExecuteAsync(); foreach (Title title in titles) { foreach (Genre netflixGenre in title.Genres) { SampleDataGroup genre = GetGroup(netflixGenre.Name); if (genre == null) { genre = new SampleDataGroup(netflixGenre.Name, netflixGenre.Name, String.Empty, title.BoxArt.LargeUrl, String.Empty); Instance.AllGroups.Add(genre); } var content = new StringBuilder(); // Write additional things to content here if you want them to display in the item detail. genre.Items.Add(new SampleDataItem(title.Id, title.Name, String.Format("{0}\r\n\r\n{1} ({2})", title.Synopsis, title.Rating, title.ReleaseYear), title.BoxArt.HighDefinitionUrl ?? title.BoxArt.LargeUrl, "Description", content.ToString())); } } }The first and most interesting thing we do is to use the TAP pattern again to asynchronously get 300 (Take) recent (OrderByDescending) PG-rated (Where) movies back from Netflix. The rest of the code is simply constructing SimpleDataItems and SimpleDataGroups from the entities that were returned in the OData feed.

SearchResultsPage

Finally, we have just a bit of calling code in SearchResultsPage. When a user searches from the Win+F experience, the LoadState method is called first, enabling us to intercept what was searched for. In our case, the stock implementation is okay aside from the fact that we don’t any additional quotes embedded, so we’ll modify the line that puts the value into the DefaultViewModel to not append quotes:

this.DefaultViewModel["QueryText"] = queryText;When the filter actually changes, we want to pass the call through to our implementation of search, which we can do with the stock implementation of Filter_SelectionChanged:

void Filter_SelectionChanged(object sender, SelectionChangedEventArgs e) { // Determine what filter was selected var selectedFilter = e.AddedItems.FirstOrDefault() as Filter; if (selectedFilter != null) { // Mirror the results into the corresponding Filter object to allow the // RadioButton representation used when not snapped to reflect the change selectedFilter.Active = true; // TODO: Respond to the change in active filter by setting this.DefaultViewModel["Results"] // to a collection of items with bindable Image, Title, Subtitle, and Description properties var searchValue = (string)this.DefaultViewModel["QueryText"]; this.DefaultViewModel["Results"] = new List<SampleDataItem>(SampleDataSource.Search(searchValue)); // Ensure results are found object results; ICollection resultsCollection; if (this.DefaultViewModel.TryGetValue("Results", out results) && (resultsCollection = results as ICollection) != null && resultsCollection.Count != 0) { VisualStateManager.GoToState(this, "ResultsFound", true); return; } } // Display informational text when there are no search results. VisualStateManager.GoToState(this, "NoResultsFound", true); }Item_Clicked

Optionally, you can implement an event handler that will cause the page to navigate to the selected item by copying similar code from GroupedItemsPage.xaml.cs. The event binding will also need to be added to the resultsGridView in XAML. You can see this code in the published sample.

The WCF Data Services Team (formerly the Astoria Team) began a series with OData 101: Building our first OData-based Windows Store app (Part 1) on 8/23/2012:

In this OData 101 we will build a Windows Store app that consumes and displays movies from the Netflix OData feed. Specifically, we will focus on getting data, displaying it in the default grid layout, and enabling search functionality.

Because there’s a lot of details to talk about in this blog post, we’ll walk through the actual steps to get the application functional first, and we’ll walk through some of the code in a subsequent post.

Before you get started, you should ensure that you have an RTM version of Visual Studio 2012 and have downloaded and installed the WCF Data Services Tools for Windows Store Apps.

1. Let’s start by creating a new Windows Store Grid App using C#/XAML. Name the application OData.WindowsStore.NetflixDemo:

2. [Optional]: Open the Package.appxmanifest and assign a friendly name to the Display name. This will make an impact when we get around to adding the search contract:

3. [Optional]: Update the AppName in App.xaml to a friendly name. This value will be displayed when the application is launched.

3. [Optional]: Replace the images in the Assets folder with the images from the sample project.

4. Build and launch your project. You should see something like the following:

5. Now it’s time to add the OData part of the application. Right-click on your project in the Solution Explorer and select Add Service Reference…:

6. Enter the URL for the Netflix OData service in the Address bar and click Go. Set the Namespace of the service reference to Netflix:

(Note: If you have not yet installed the tooling for consuming OData services in Windows Store apps, you will be prompted with a message such as the one above. You will need to download and install the tools referenced in the link to continue.)

7. Replace the contents of SampleDataSource.cs from the DataModel folder. This data source provides sample data for bootstrapping Windows Store apps; we will replace it with a data source that gets real data from Netflix. This is the code that we will walk through in the subsequent blog post. For now, let’s just copy and paste the code from this gist.

8. Add a Search Contract to the application. This will allows us to integrate with the Win+F experience. Name the Search Contract SearchResultsPage.xaml:

9. Modify line 58 of SearchResultsPage.xaml.cs so that it doesn’t embed quotes around the queryText:

10. Insert the following two lines at line 81 of SearchResultsPage.xaml.cs to retrieve search results:

(Note: The gist also includes the code for SearchResultsPage.xaml.cs if you would rather replace the entire contents of the file.)

11. Launch the application and try it out. Note that it will take a few seconds to load the images upon application launch. Also, your first search attempt may not return any results. Obviously if this were a real-world application, you would want to deal with both of these issues.

So that’s it – we have now built an application that consumes and displays movies from the Netflix OData feed in the new Windows UI. In the next blog post, we’ll dig into the code to see how it works.

Glenn Gailey (@ggailey777) explained OData Client for Windows Store Apps in an 8/23/2012 post:

I’m happy to announce that OData client support for Windows Store apps (“formerly Metro apps”) in Windows 8 RTM has been released. This didn’t actually get chained into Visual Studio 2012 like it was in the pre-release, but it may be even easier to use in the new incarnation:

WCF Data Services Tools for Windows Store Apps RC

Despite the somewhat confusing title, this installation supports the RTM version of Windows 8 and Visual Studio 2012. I have heard that there could be tweaks to the installer behavior itself, but that the client library itself shouldn’t change, since Win8 is done and shipped.

In this version of support for Windows Store apps, the client library is actually obtained from NuGet.org by the new tool, which is integrated with Visual Studio 2012 so that Add Service Reference will work again, as you would expect it to. Without this package installed, Visual Studio gives you a cryptic message about the service not being valid, because it’s looking for a WSDL and not the data service definition. This client supports all versions of the OData protocol.

For an overview of how this client works in Visual Studio 2012, see the post OData 101: Building our first OData-based Windows Store app (Part 1).

If you are jazzed about writing Windows Store apps…stay tuned. Next week, I am going to be able to talk about some very cool stuff that I have been working on that will be great for folks who are trying to figure out what to do with data for their Windows Store app users.

Wade Wegner (@WadeWegner) described Generating C# Classes from JSON in an 8/22/2012 post:

I’ve long advocated using JSON when building mobile and cloud applications. If nothing else, the payload size makes it extremely efficient when transferred over the wire – take a look at the size of the same information formatted as OData, REST-XML, and lastly JSON:

Despite the use of JSON – and great frameworks like JSON.NET and SimpleJson – I always struggled with creating my C# classes when working with an existing web service that returned JSON. It can take a long time to create these C# classes correctly, and often time I’d take a lazy approach and either use the JObject or an IDictionary such that I didn’t have to have a C# class – something like:

var json = (IDictionary<string, object>)SimpleJson.DeserializeObject(data);Yesterday I stumbled upon a tool that makes this SO amazingly easy. In many ways I’m bothered by the fact that it’s taken me so long to find it – has this been one of the best kept secrets on the Internet or did I just miss it?

This website is as simple as it is powerful. Simply paste your JSON into the textbox, click Generate, and voilà you have C# objects!

Take a look. Here’s some JSON returned back from the Untappd API:

{ "meta": { "code": 200, "response_time": { "time": 0.109, "measure": "seconds" } }, "notifications": [], "response": { "pagination": { "next_url": "http://api.untappd.com/v4/thepub?max_id=11697698", "max_id": 11697698, "since_url": "http://api.untappd.com/v4/thepub?min_id=11697724" }, "checkins": { "count": 2, "items": [ { "checkin_id": 11697724, "created_at": "Wed, 22 Aug 2012 12:56:41 +0000", "checkin_comment": "", "user": { "uid": 205218, "user_name": "asiahobo", "first_name": "Bum", "last_name": "", "location": "", "url": "0", "relationship": null, "bio": "0", "user_avatar": "https://untappd.s3.amazonaws.com/profile/7d21ba831edb33341b98f86e09795ed7_thumb.jpg", "contact": { "twitter": "asiahobo", "foursquare": 31652652 } }, "beer": { "bid": 9652, "beer_name": "Maredsous 8° Brune", "beer_label": "https://untappd.s3.amazonaws.com/site/beer_logos/beer-maredsous.jpg", "beer_style": "Belgian Dubbel", "auth_rating": 0, "wish_list": false }, "brewery": { "brewery_id": 6, "brewery_name": "Abbaye de Maredsous (Duvel Moortgat)", "brewery_label": "https://untappd.s3.amazonaws.com/site/brewery_logos/brewery-AbbayedeMaredsousDuvelMoortgat_6.jpeg", "country_name": "Belgium", "contact": { "twitter": "", "facebook": "www.facebook.com/pages/Abbaye-De-Maredsous/208016262548587fine", "url": "www.maredsous.be/" }, "location": { "brewery_city": "", "brewery_state": "Denée", "lat": 50.3044, "lng": 4.77149 } }, "venue": [], "comments": { "count": 0, "items": [] }, "toasts": { "count": 0, "auth_toast": null, "items": [] }, "media": { "count": 0, "items": [] } }, { "checkin_id": 11697723, "created_at": "Wed, 22 Aug 2012 12:56:35 +0000", "checkin_comment": "", "user": { "uid": 137722, "user_name": "Mjoepp", "first_name": "Christoffer", "last_name": "", "location": "Linköping", "url": "", "relationship": null, "bio": "", "user_avatar": "http://gravatar.com/avatar/f1672535a7caa3bd686267257d33c588?size=100&d=https%3A%2F%2Funtappd.s3.amazonaws.com%2Fsite%2Fassets%2Fimages%2Fdefault_avatar.jpg", "contact": { "foursquare": 25958771 } }, "beer": { "bid": 12145, "beer_name": "Chocolate", "beer_label": "https://untappd.s3.amazonaws.com/site/beer_logos/beer-ChocolatePorter_12145.jpeg", "beer_style": "English Porter", "auth_rating": 0, "wish_list": false }, "brewery": { "brewery_id": 844, "brewery_name": "Meantime Brewing Company", "brewery_label": "https://untappd.s3.amazonaws.com/site/brewery_logos/brewery-MeantimeBrewingCompanyLimited_844.jpeg", "country_name": "England", "contact": { "twitter": "MeantimeBrewing", "facebook": "http://www.facebook.com/meantimebrewing", "url": "http://www.meantimebrewing.com" }, "location": { "brewery_city": "London", "brewery_state": "", "lat": 51.5081, "lng": -0.128005 } }, "venue": [], "comments": { "count": 0, "items": [] }, "toasts": { "count": 0, "auth_toast": null, "items": [] }, "media": { "count": 0, "items": [] } } ] } } }I’m sad to admit that, in the past, I’d like create my C# objects by hand and then either conform to the JSON or map between the two. It requires a TON of time and is extremely error prone. With http://json2csharp.com/ all I do is paste this into the textbox and click Generate. I’ll get the following output:

public class ResponseTime { public double time { get; set; } public string measure { get; set; } } public class Meta { public int code { get; set; } public ResponseTime response_time { get; set; } } public class Pagination { public string next_url { get; set; } public int max_id { get; set; } public string since_url { get; set; } } public class Contact { public string twitter { get; set; } public int foursquare { get; set; } } public class User { public int uid { get; set; } public string user_name { get; set; } public string first_name { get; set; } public string last_name { get; set; } public string location { get; set; } public string url { get; set; } public object relationship { get; set; } public string bio { get; set; } public string user_avatar { get; set; } public Contact contact { get; set; } } public class Beer { public int bid { get; set; } public string beer_name { get; set; } public string beer_label { get; set; } public string beer_style { get; set; } public int auth_rating { get; set; } public bool wish_list { get; set; } } public class Contact2 { public string twitter { get; set; } public string facebook { get; set; } public string url { get; set; } } public class Location { public string brewery_city { get; set; } public string brewery_state { get; set; } public double lat { get; set; } public double lng { get; set; } } public class Brewery { public int brewery_id { get; set; } public string brewery_name { get; set; } public string brewery_label { get; set; } public string country_name { get; set; } public Contact2 contact { get; set; } public Location location { get; set; } } public class Comments { public int count { get; set; } public List<object> items { get; set; } } public class Toasts { public int count { get; set; } public object auth_toast { get; set; } public List<object> items { get; set; } } public class Media { public int count { get; set; } public List<object> items { get; set; } } public class Item { public int checkin_id { get; set; } public string created_at { get; set; } public string checkin_comment { get; set; } public User user { get; set; } public Beer beer { get; set; } public Brewery brewery { get; set; } public List<object> venue { get; set; } public Comments comments { get; set; } public Toasts toasts { get; set; } public Media media { get; set; } } public class Checkins { public int count { get; set; } public List<Item> items { get; set; } } public class Response { public Pagination pagination { get; set; } public Checkins checkins { get; set; } } public class RootObject { public Meta meta { get; set; } public List<object> notifications { get; set; } public Response response { get; set; } }Pretty amazing! Now, note that it’s not perfect – there’s both a Contact and Contact2 class, but that’s easy to fix by merging the two and updating references. I’ll gladly perform this little bit of cleanup given the hours this tool just saved me.

Now that I have these classes, it’s really easy to use JSON.NET to load them with data.

RootObject publicFeed = new RootObject(); using (StreamReader reader = new StreamReader(response.GetResponseStream())) { data = reader.ReadToEnd(); publicFeed = JsonConvert.DeserializeObject<RootObject>(data); }Now it’s a simple matter of using my RootObject within my applications.

I feel like I may be the last person to have heard of this tool, in which case I’m both embarrassed and bitter – couldn’t you all have told me about this years ago?

The SD Times NewsWire (@sdtimes) reported Big Data is big driver for cloud development, new Evans Data survey of developers shows on 8/21/2012:

Over half of all developers (55.4%) active in development for Cloud platforms say that the need to manage large data sets that cannot be handled on traditional database systems (Big Data) is a major driver in getting their organizations to use Cloud services, according to Evans Data’s recently released survey of over 400 developers developing for or in the Cloud. Big Data, or large, unstructured data sets that may measure in petabytes, exabytes, or beyond, promises to provide new insights to businesses and is an ideal candidate for the Cloud where scalability and infrastructure are featured.

In addition, the most important components for implementing services that allow for management of Big Data are integration tools, cited by over sixty percent of developers working with

Big Data in the Cloud. Security tools, were the next most commonly cited components for Big Data in the Cloud.

“Cloud service providers have to realize the need to provide specific tools to developers in their Cloud environments,” said Janel Garvin, CEO of Evans Data, “and those tools should include ones designed to handle Big Data, as the scalability and cost effectiveness of the Cloud paradigm are particularly cogent when related to Big Data.”The Cloud Development Survey is a worldwide survey conducted twice a year amongst developers actively developing for the Cloud, in the Cloud, or both. It includes topics such as: Cloud adoption and targeting, Development tools in the Cloud, Big Data and Database Technology, Cloud Security and Governance, Cloud Clients, Private Cloud, Public Cloud and more.

See complete Table of Contents here:

http://www.evansdata.com/reports/viewRelease.php?reportID=27.

Read more: http://sdt.bz/36886#ixzz24UHkqwko

Windows Azure Service Bus, Access Control Services, Caching, Active Directory and Workflow

• My (@rogerjenn) Windows Azure Active Directory enables single sign-on with cloud apps post of 8/24/2012 for TechTarget’s SearchCloudComputing.com begins:

Microsoft’s Windows Azure Active Directory (WAAD) Developer Preview provides simple user authentication and authorization for Windows Azure cloud services. The preview delivers online demonstrations of Web single sign-on (SSO) services for multi-tenanted Windows Azure .NET, Java and PHP applications, and programmatic access to WAAD objects with a RESTful graph API and OData v3.0.

The preview extends the choice of IPs to include WAAD, the cloud-based IP for Office 365, Dynamics CRM Online and Windows InTune. It gives Windows Azure developers the ability to synchronize and federate with an organization’s on-premises Active Directory.

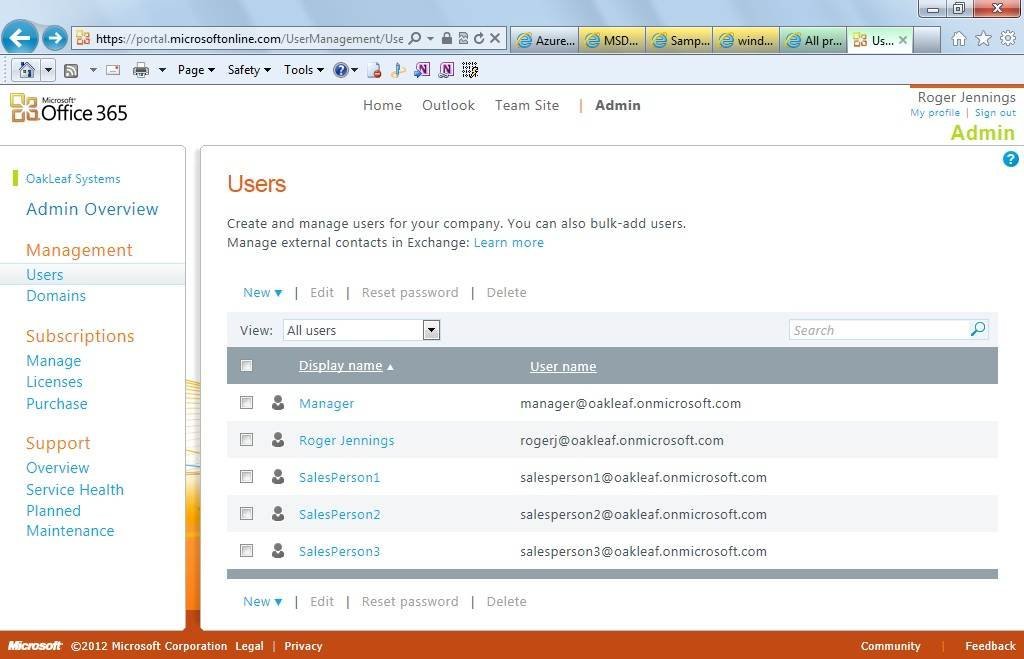

Figure 1. The Users page of the Office 365 Administrative portal enables adding detailed user accounts to an organization’s domain, oakleaf.onmicrosoft.com for this example.

Traditionally, developers provided authentication for ASP.NET Web applications with claims-based identity through Windows Azure Access Control Services (WA-ACS), formerly Windows Azure AppFabric Access Control Services.

According to Microsoft, WA-ACS integrates with Windows Identity Foundation (WIF); supports Web identity providers (IPs) including Windows Live ID, Google, Yahoo and Facebook; supports Active Directory Federation Services (AD FS) 2.0; and provides programmatic access to ACS settings through an Open Data Protocol (OData)-based management service. A management portal also enables administrative access to ACS settings.

Running online Windows Azure Active Directory demos

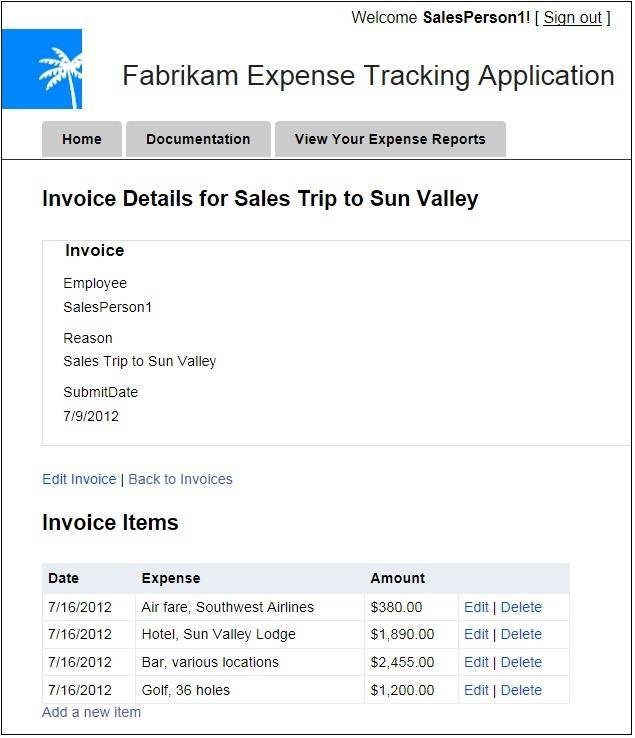

Taking full advantage the preview’s two online demonstration apps requires an Office 365 subscription with a few sample users (Figure 1). Members of the Microsoft Partner Network get 25 free Office 365 Enterprise licenses from the Microsoft Cloud Essentials benefit; others can subscribe to an Office 365 plan for as little as $6.00 per month. According to online documentation, the WAAD team plans to add a dedicated management portal to the final version to avoid reliance on Office 365 subscriptions. Note: The preview does not support Windows 8, so you’ll need to use Windows 7 or Windows Server 2008 R2 for the demo.Figure 2. Use the Fabrikam demo to add or edit detail items of an expense report.

The preview also requires users to download an updated Microsoft Online Services Module for Windows PowerShell v1.0 for 32-bit or 64-bit systems. You’ll also need to download and save a prebuilt PowerShell authorization script, which you execute to extract the application’s identifier (Application Principal ID), as well as the tenant identifier (Company ID) for the subscribing organization.

The Fabrikam Expense report demo is a tool used to show interactive cloud Web apps to prospective Windows Azure users (Figure 2). The preview also includes open source code developers can download from GitHub and use under an Apache 2.0 license. Working with the source code in Visual Studio 2010 or later requires the Windows Azure SDK 1.7, MVC3 Framework, WIF runtime and SDK, as well as Windows Communication Framework (WCF) Data Services 5.0 for OData v3 and .NET 4.0 or higher. With a bit of tweaking, this ASP.NET MVC3 app could manage expense reports for small- and medium-sized companies. …

and concludes with “Traversing Office 365 AD with OData queries.”

Full disclosure: I’m a paid contributor to TechTarget’s SearchCloudComputing.com blog.

Manfred Steyer (@ManfredSteyer) reported on 8/24/2012 publication of his EAI and EDI in the Cloud: Prospects of Azure Service Bus EAI & EDI – Part I article in Service Technology Magazine’s August 2012 issue:

Abstract: This is the first article in a two-part series that discusses how in the future, the EAI features of Azure Service Bus will offer the transformation and routing of messages to integrated systems on a pay-per-use basis while maintaining its usual high availability. Moreover, standards like EDIFACT and X12 will also be supported in the same way as the integration into local systems via relaying.

Introduction

System integration solutions are a single point of failure and, as such, must be designed to be fail-save. This results in higher hardware and software licensing costs and, consequently, often makes professional ESB offers unattractive. Azure Service Bus's pay-per-use solution provides a remedy for this problem.The current version concentrates on message mediation and, among other features, offers queues to enable reliable communication as well as topics for publish/subscribe scenarios. However, looking at the CTP [REF-1] which is currently available hints that this is just the beginning. Hence we can expect to see possibilities for transforming and routing messages in the future. Moreover, flat files, especially for X12 and EDIFACT, will be widely supported, and the integration of local business applications and databases will be facilitated.

It is always advisable to install CTPs on a test system, such as a virtual machine. With regard to the CTP in question, special attention must be paid to documented installation requirements and dependencies, otherwise the setup routine may not be performed successfully.

Message Transformations Using Maps

If two systems that are to be connected expect messages in different formats, these must be transformed, which can be done using maps rather than programming. A map assigns elements of a message definition to its counter pieces in another message definition. Thereby, an XML schema is to be given for the source message and for the target message. Fig. 1 depicts a map which maps the elements of the PlaceOrder message to elements of the the SendOrder message. The last three elements are not mapped 1:1 to the respective elements of the target schema, but serve as an access parameter for a String Concatenate (symbol labeled A+B) type map operation. As the name suggests, this operation brings different values together in a string. In the present case, this string is assigned to the ShipTo field. A number of other map operators are to be found in the Toolbox.

This particular map may be used when a client who generates a PlaceOrder type message is meant to communicate with a service expecting a SendOrder type message. For the sake of completeness, Fig. 2 depicts another map mapping the response of SendOrder to the response belonging to PlaceOrder.

Figure 1 – Converting a request.

Figure 2 – Converting a response.

Connecting Systems Using Bridges

Bridges help to connect two heterogeneous systems. A distinction is made between one-way bridges and two-way bridges. In the case of a one-way bridge, the application only sends a message to the recipient, whilst when a two-way bridge is used, the application sends a message to the recipient and the recipient sends a response back. Bridges can be used to validate, enrich and transform messages using maps.

Fig. 3 displays a bridge configuration which was set up using the drag-and-drop function in Visual Studio. A two-way bridge is used here which routes the received messages to a service. Being a two-way bridge, it does not ignore the response message of this service. To define via which address the bridge should be accessible later on, the desired value is entered in the properties window under Relative Address. The complete address is composed of the combination of the chosen Azure namespace (see below) and this relative address.

The service in this example is a SOAP service connected through a relay binding. This means that the service is performed locally and registers with the service bus when booting up. The service bus assigns a public address to the service and from then on any and all messages sent to this address are routed to the service. Therefore, the service bus uses the connection which was initially set up by the local service thereby rendering the entire process firewall-safe.

Figure 3 – Bridge configuration.

The SOAP message to be sent to the service must have a SOAP action header so it knows with which service operation to associate the message. This can be created in Visual Studio using the Route Action function which is offered to connect the bridge to the service. Fig. 4 shows the dialog connected with this property. The Property (Read From) section defines where the value to be used can be obtained. Either a dropdown list with so-called context variables (Property Name) or a textbox in which an expression can be entered are to be selected.

In this particular case the latter is used; we will, however, look at context variables later on. That is why the desired value, SendOrder, is in this textbox. But as the textbox expects to receive an expression, SendOrder must be entered in inverted commas showing that it is a string.

The Destination (Write To) section is used to clarify how the value which has previously been defined should be used. The Type option defines if the value is to be assigned to an HTTP header or a SOAP header. The name of this header must be entered into the Identifier field. In the given example, the SOAP header Action is addressed.

Figure 4 – Defining a route action.

In addition to this, a Filter property (Fig. 5) is offered by the connection between the bridge and the service. It defines under which circumstances the message should be routed from the bridge to the service. As the bridge is only connected to one single service in the present case, the MatchAll option was chosen.

Figure 5 – Definition of a filter.

As well as the relaying service used in this example, other types of message recipients can also be configured. An overview thereof is given in the Toolbox (Fig. 6). The elements given under On-Premise LOBs offer the possibility to send messages directly to a local system (an "on-premise line-of-business-system"). Here, relaying is complemented by LOB adapters which are to be installed locally and originate from the BizTalk Server universe. An example for this is given further below. In the Route-Destinations section the Toolbox offers elements which are also able to address public services (One-Way External Service Endpoint and Two-Way External Service Endpoint). In addition, messages can be sent to a queue or a topic.

Figure 6 – Elements needed when setting up a bridge configuration.

Manfred continues with

- Connecting Bridges

- Configuring Relay Bindings

- Sending Data to a Bridge

- Making the Local Service Available Via Relay Binding

sections and concludes:

The second article in this two-part series goes over the following topics: enriching data in a bridge, enriching data in maps, EDI and communication via flat files with the TPM Portal, support for flat files, using the Flat File Schema Wizard, retrieving data via FTP, tracking, importing XML schemas from WSDL, and the conclusion to this article.

References

- [REF-1] Azure Service Bus CTP http://www.microsoft.com/en-us/download/details.aspx?id=17691

- [REF-2] Labs Portal, http://www.microsoft.com/download/en/details.aspx?displaylang=en&id=17691

- [REF-3] TPM-Portal, https://edi.appfabriclabs.com

- [REF-4] NuGet, http://nuget.codeplex.com/

- [REF-5] LOB-Adapter http://www.microsoft.com/biztalk/en/us/adapter-pack.aspx

Jonathan Allen described A Microsoft Branded Service Bus without BizTalk in an 8/23/2012 post to the InfoQ blog:

For quite some time now BizTalk has been essentially on life support. Being both very complex and very expensive, it was never a particularly popular product. None the less, many companies used it because they trust the Microsoft name and actually do need some sort of enterprise service bus. Seeing this gap, Microsoft has created a new product called Microsoft Service Bus 1.0 for Windows Server.

Service Bus for Windows Server is based on Azure Service Bus and shares many of the same capabilities such as queue, topic, and subscription support, reliable message storage, and a variety of communication protocols. It is built with .NET 4.5 and requires a SQL Server or SQL Azure database for message persistence. This is a change from Microsoft Message Queues, which used a file-based persistence and couldn’t store more messages than what fit in RAM. [Emphasis added.]

The primary communication protocols are Net.TCP and REST over HTTP. Net.TCP is a binary format that is designed for high performance communication between WCF clients and servers. For non-.NET applications, REST over HTTP is the preferred protocol.

A Service Bus installation would normally have a set of message brokers. Each message broker in turn contains one or more message containers. The message container hosts the actual queues, topics, and subscriptions. Each container is backed by its own database for persistence. When a new queue, topic, or subscription is created a load balancer determines which container to put it in. After that, the queue, topic, or subscription cannot be moved. However, the container itself can be moved to another server during a failover or for load balancing scenario.

[Note:] If a messaging broker NT service crashes or recycles, or in the event of a complete node recycle/shutdown, the associated message containers that were placed in this broker instance before the crash are automatically moved to other machines in the farm. The message containers continue to service requests with a small interruption in the case of failover.

Windows Fabric provides the “core logic necessary for high availability, farm and cluster formation, and load balancing across machines in the farm.” It is important to note that this alone isn’t enough for actual high availability. The SQL Server databases will also need to be mirrored, clustered, or replicated in some fashion to ensure they too will survive hardware failure.

Service Bus 1.0 for Windows Server is currently available as a beta [since 7/16/2012].

Leandro Boffi (@leandroboffi) described an ADFS WS-Trust client for Node.js on 8/23/2012:

Joining two of my favorite topics, Node.js and Identity Federation I’ve created a very simple and minimalist WS-Trust client for Node.js, this module allows you to request a security token from ADFS using WS-Trust protocol.

If you want to know how this works you can see the detailed explanation here. Also you can find the source code on my github: https://github.com/leandrob/wstrust-client

Installation

$ npm install wstrust-clientHow to use it

var trustClient = require('wstrust-client'); trustClient.requestSecurityToken({ scope: 'https://yourapp.com', username: 'Your Username Here', password: 'Your Password Here', endpoint: 'https://your-ws-trust-endpoint-address-here' }, function (rstr) { // Access the token and enjoy it! var rawToken = rstr.token; console.log(rawToken); }, function (error) { // Error Callback console.log(error) });Remember that if you are using ADFS, the endpoint that you need to use is: /adfs/services/trust/13/UsernameMixed.

Leandro Boffi (@leandroboffi) posted Windows Azure Access Control Service Management Client for Node.js on 8/22/2012:

During the last months I’ve been working a lot with Node.js, I really like it. I’m building something huge, but I’ll talk about that later, the reason for this post is share with you a Node.js module in which I’m working on: a Windows Azure Access Control Service (recently renamed to Windows Azure Active Directory) management client for node.js.

As you probably know ACS or Windows Azure Active Directory has an OData API for manage Identity Providers, Relying Parties, Rules, etc, as a requirement in the project that I’m working on we needed to use that, so together with my teammate Gustavo Machado we build this nice Node.js module that allows to do that in a very simple manner.

You can find it on my github: https://github.com/leandrob/node-acs-cli

Installation

$ npm install acs-cliHow to use it….

var ManagementClient = require('acs-cli'); var client = new ManagementClient('[acsNamespace]', '[acs-management-key]'); client .from('RelyingParties') .top(2) .query(function (err, res) { ///res... });

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

• Nick Harris (@cloudnick) interviewed Michael Washam (@MWashamMS) in CloudCover Episode 88 - Tips and Tricks for Windows Azure Virtual Machines and Virtual Networks on 8/24/2012:

In this episode Nick is joined by Michael Washam who demonstrates a variety of tips and tricks for Windows Azure IaaS scenarios. Michael shows how to configure a site-to-site virtual network using Windows Azure Virtual Networks and a Cisco ASA 5505. Additionally, he shows how to setup a web farm with content synchronization using Windows Azure Virtual Machines and Web Deploy. Finally, you will see how to use the connect button in the Windows Azure management portal to remote desktop into a Linux virtual machine.

In the News:

- Windows Azure Media Services and the London 2012 Olympics

- Calling the Windows Azure Service Management API from Python

- Windows Azure Training Kit Update Released – August 2012

- iOS and Windows Azure Communication using SignalR

- Windows Azure Community News Roundup (Edition #32)

- Publishing and Synchronizing Web Farms using Windows Azure Virtual Machines

In the Tip of the Week, we discuss a blog post by Brady Gaster that shows how clean log files in Windows Azure Web Sites and walkthrough Mingfei Yan's Blog on Windows Azure Media Services.

See my Configuring a Windows Azure Virtual Network with a Cisco ASA 5505-BUN-K9 Adaptive Security Appliance preview of 6/21/2012 for more details about the Adaptive Security Appliance.

Michael Washam (@MWashamMS) described Deploying certificates with Windows Azure Virtual Machines and PowerShell in an 8/23/2012 post:

A common question around using the Windows Azure PowerShell cmdlets is how to deploy certificates with VMs? In this post I’ve put together two samples on how to do this on Windows and Linux VMs.

Windows VM Example

Select-AzureSubscription mysub $service = 'yourservicename1' $location = 'West US' ## Cloud Service must already exist New-AzureService -ServiceName $service -Location $location ## Add Certificate to the store on the cloud service (.cer or .pfx with -Password) Add-AzureCertificate -CertToDeploy 'D:\User-Data\development\Azure Samples\mlwdevcert.cer' -ServiceName $service ## Create a certificate setting for deploying the VM 'My' is the only supported store (goes into computer account) $cert1 = New-AzureCertificateSetting -Thumbprint D7BECD4D63EBAF86023BB4F1A5FBF5C2C924902A -StoreName 'My' ## Create the VM passing the certificate setting in the provisioning config New-AzureVMConfig -ImageName 'MSFT__Windows-Server-2012-Datacenter-201208.01-en.us-30GB.vhd' -InstanceSize 'Small' -Name 'win2012cert' | Add-AzureProvisioningConfig -Windows -Password 'somepass@1' -Certificates $cert1 | New-AzureVM -ServiceName $service

Linux VM Example

Select-AzureSubscription mysub $service = 'yourservicename1' $location = 'West US' ## Cloud Service must already exist New-AzureService -ServiceName $service -Location $location ## Add Certificate to the store on the cloud service (.cer or .pfx with -Password) Add-AzureCertificate -CertToDeploy 'D:\User-Data\development\Azure Samples\mlwdevcert.cer' -ServiceName $service ## Create a certificate in the users home directory $sshkey = New-AzureSSHKey -PublicKey -Fingerprint D7BECD4D63EBAF86023BB4F1A5FBF5C2C924902A -Path '/home/mwasham/.ssh/authorized_keys' New-AzureVMConfig -ImageName 'CANONICAL__Canonical-Ubuntu-12-04-amd64-server-20120528.1.3-en-us-30GB.vhd' -InstanceSize 'Small' -Name 'linuxwithcert' | Add-AzureProvisioningConfig -Linux -LinuxUser 'mwasham' -Password 'somepass@1' -SSHPublicKeys $sshKey | New-AzureVM -ServiceName $serviceNote: The -Certificates and -SSHPublicKeys parameters are arrays so they can accept multiple certificates.

-SSHPublicKeys $sshKey1,$sshKey2For Linux there is also the -SSHKeyPairs parameter for passing a key pair instead of just the public key. -Certificates can handle both types on Windows.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Bruno Terkaly (@brunoterkaly) explained How To Take Photographs From Windows 8 Applications And Automatically Upload Them To The Cloud–Part 1 of 6 in an 8/25/2012 post:

This post will provide techniques that you can use to take and automatically upload photographs from Windows 8 applications.

- The techniques presented can be used to upload practically anything to the cloud.

- PDFs, Videos, Word Documents, Web Pages, JavaScript js files, etc

- Nick Harris did a great job delivering this content (and more) at TechEd North America and Europe. You can read more here: http://www.nickharris.net/

- Windows 8 is a connected operating system.

- It has been from the ground up to interface with the web and networking in general.

- Storing Photographs in the Cloud

- The Windows Azure storage service offers two types of blobs, block blobs and page blobs.

- You specify the blob type when you create the blob.

- Page Blobs

- Page blobs are a collection of 512-byte pages optimized for random read and write operations.

- Writes to page blobs happen in-place and are immediately committed to the blob.

- The maximum size for a page blob is 1 TB.

- Block Blobs

- Block blobs let you upload large blobs efficiently.

- The maximum size for a block blob is 200 GB, and a block blob can include no more than 50,000 blocks.

- You can set the number of -threads used to upload the blocks in parallel using the ParallelOperationThreadCount property.

- Block Blobs are appropriate for media file serving, whereas Page Blobs are optimized for other work patterns.

- For this blog series we will use block blobs.

- You will need an Azure Account to do these 6 posts. Sign up now here.

Free 90-day trial for Windows Azure

http://www.microsoft.com/click/services/Redirect2.ashx?CR_CC=200114759Visual Studio 2012 RC For Windows 8

http://www.microsoft.com/click/services/Redirect2.ashx?CR_CC=200114760

Figure 1: The inefficient approach to uploading photos

- Figure 1 shows one approach (a bad one) to uploading images to the cloud is to create a web role (our code running inside an IIS process) that accepts a byte stream from a Windows 8 application and writes to Azure Blob Storage.

- But this is less than ideal because the Web Role will end up costing a lot of money for the bandwidth.

- It will also be less scalable because it has to manage all the potential byte streams coming from Windows 8 Applications.

- A better approach is for the Windows 8 application to directly write to blob storage.

- But to make this practical, the Windows 8 application should leverage a special kind of key (like a password of sorts).

- This special key that gives the Windows 8 application special permission is called a Shared Access Signature.

- Shared Access Signature

- A Shared Access Signature is a URL that grants access rights to blobs.

- By specifying a Shared Access Signature, you can grant users who have the URL access to a specific resource for a specified period of time.

- You can also specify what operations can be performed on a resource that's accessed via a Shared Access Signature.

- Once the Windows 8 application has the Shared Access Signature, it can write directly to blob storage.

- So the first thing the Windows 8 application need to do is to request a shared access signature.

Figure 2: Windows 8 Application Requesting and Receiving Shared Access Signature

- Figure 2 represents the steps needed to get the Shared Access Signature (SAS) to the Windows 8 Application.

- SASs have a limited amount of time that they are valid to use.

- In other words, they stop working when they expire.

- This means they should be requested only when they are ready to use.

- In short, the Windows 8 application requests an SAS from a web role.

- The web role then sends the SAS to the Windows 8 application.

- Once the Windows 8 application gets an SAS, it can start reading and writing from and to blob storage.

Figure 3 - Windows 8 Talking Directly to Blob Storage

- Once the Windows 8 application takes and uploads the photograph, it can mark it as public, meaning that anyone with the url to the photograph can view it.

- I'll show this with code.

- By specifying a Shared Access Signature, you can give the Windows 8 application access to a blob container for a specified period of time.

- Shared Access Signatures allow granular access to tables, queues, blob containers, and blobs.

- A SAS token can be configured to provide specific access rights, such as read, write, update, delete, etc. to a specific table, key range within a table, queue, blob, or blob container; for a specified time period or without any limit.

- The SAS token appears as part of the resource's URI as a series of query parameters.

5 More Posts

- We have 5 more posts to address

- We will need to sign up for an Azure Trial Account

- It will be needed to create two things at the portal

- A Hosted Service

- It will be used to host our forthcoming web service in IIS inside of one of several MS data centers

- A Storage Account

- It will be used to store pictures taken by our Windows 8 application.

- It will also be used by Internet users to view these uploaded photographs

- We will create a Web Service

- You can typically choose either of these two project types: (1) Windows Communication Foundation (WCF) ; or (2) ASP.NET Web API, which is included with MVC version 4.

- We will take the newer, more modern concepts that ASP.NET Web API brings to the table, truly embracing HTTP concepts (URIs and verbs).

- Also, the ASP.NET Web API can be used to create services that use more advanced HTTP features - such as request/response headers, hypermedia constructs.

- Once we create the web service, it will be ready to support the Windows 8 application.

- The ASP.NET Web API will return a SAS to the Windows 8 application. That is all it will do.

- Both projects can be tested on a single machine during development.

- We will deploy the ASP.NET Web API Web Service to the Hosted Service (created at the portal )indicated above.

- We will need to create a Windows 8 application that has access to either a USB or built in web cam.

- The Windows 8 application will automatically request the Shared Access Signature from the web service.

- It will then use the Shared Account Signature to upload the freshly taken photograph to the Storage Account previously created.

• John Casaretto asked Could the next Xbox be an Azure Cloud-Box? in an 8/22/2012 post to the SiliconANGLE blog:

Xbox meets cloud computing. Well, that’s hardly a stretch as it’s already happening on today’s Xbox. The Xbox is already a cloud-enabled device by virtue of having Xbox Live users able to cloud-upload their saved games for access from any Xbox 360 console. However, a quick look at some strategic factors appears to point to a more, better, always cloud enablement for a next generation Xbox.

Cloud-based gaming is relatively new on the scene of course, and there are a number of examples such as OnLive, Sony (Gaikai), and even Gamestop stepping in and rolling out their own services sometime next year. A cloud-based Xbox offering would be a strategic boon, for a number of reasons. The Xbox has been keen on service and portal based strategy for some time. The thought of applying virtualization technologies such as resource allocation based user load, game type, and network conditions can make this a very scalable and robust system. Ultimately, how these things all connect remain to be seen, but there are a number of quite open but somewhat neglected indicators that point to something big.

Some of the elements that come into play when contemplating this kind of shift really take a step back in perspective on the state of technology. For one, bandwidth has long been the bane of server-based computing. New mobile network and broadband capabilities have closed that gap considerably in the last few years. The Azure platform certainly comes to mind as another valuable property in this picture. The IaaS infrastructure service from Microsoft already is host to a number of the company’s service offerings. How that infrastructure relates to the Xbox will be an interesting thing to observe.

Another advantage point brings to mind long heard statistics of the losses that Microsoft has endured in subsidizing the hardware and technology in the Xbox. A cloud-based Xbox could alleviate the demands of hardware. But it gets more interesting than that and we are diving in to bring you how these things might be aligning in the market and for Microsoft as it continues to evolve its Xbox properties.

• Matt Tilchen described Motown: Easy JavaScript Apps for Metro (Part 1) in an 8/19/2012 post to the Vectorform blog (missed when published):

The Web has arrived. Microsoft has made a bold move with Windows 8 that makes Web technology (HTML/CSS/JS) a first class citizen for building professional and commercial grade applications. Up until now, even with the advent of HTML 5 and incredibly fast JavaScript engines, Web applications have always seemed to have limitations compared to native development stacks for creating fully integrated and cutting edge user experiences. These days are over with the arrival of Windows 8 as Microsoft blends the Chakra JS engine and the hardware accelerated rendering / DOM environment of IE 10 with the new WinRT OS-level abstractions.

The WinJS libraries and bindings to WinRT provide a solid foundation for building applications but I feel that a significant gap remains. MS rightly, and I presume intentionally, left most of this gap open for the development community to fill. I created the Motown JavaScript library for Metro-style applications (HTML/CSS/JS) to fill the gap.

Motown empowers you and your team to:

- Create apps that are easy to maintain and extend

- Be more productive, freeing you to focus on the details of your app

- Make small and simple apps easily and larger more complex apps a possibility

Motown accomplishes this by:

- Eliminating glue code and boilerplate

- Providing architectural structure and modularity, eliminating spaghetti-code

- Making application architecture implicit in the configuration

- Providing clear, thoughtful and flexible APIs

In this post I will briefly introduce you to Motown by walking you through the creation of a small application that illustrates how Motown addresses one of the largest weaknesses of what Microsoft delivers out-of-the-box with WinJS. This weakness lies in how WinJS implements page navigation with regards to what it attempts to do in addition to what it does not.

Single-Page Style Navigation

Microsoft recommends that developers use a “single-page” style of navigation instead of a “multi-page” style in their applications. The idea is to prevent the browser from navigating to a different page, as if you had entered a new URL into the location field of a regular web browser. Doing this causes the browser to unload the current document, including all the images, stylesheets and JavaScript that are part of it. In a web application, or in this case a Metro-style application in JavaScript, this is obviously problematic because the unloading of the document effectively unloads your entire app. You might be able to reestablish/reinitialize your application in the new page that you navigate to but to do so would be a waste of time and system resources.

To assist developers in implementing “single-page” style navigation Microsoft provides a control they call “PageControlNavigator” in projects you create with the “Navigation Application” project tempate. The control assumes your “pages” use Microsoft’s WinJS.UI.Pages API. The former provides integration with the WinJS.Navigation API so that calls to “navigate(), back()” etc. use the functions defined in the latter to dynamically load content from other HTML files into the main document. As you navigate to different pages the PageControlNavigator swaps in the new page for the old page.

Using the Fragment Loader

Under the hood, the calls PageControlNavigator makes to the WinJS.UI.Pages API are using the WinJS fragment loader (WinJS.UI.Fragments). The fragment loader is a clever piece of code that allows you to load the content of an arbitrary HTML file into an element in the existing document. When you call WinJS.UI.Fragments.render() the loader goes out and intelligently parses the specified HTML file and first takes everything between then <body> tags and appends it to the “target” element you passed in. Then it takes all of the stylesheet and script references it encounters during parsing and adds them to the existing document. This functionality is the key to implementing the “single-page” navigation style.

The advantages to this approach are that it allows you build your application in a modular way. You can lazily load your application a page at a time (which improves performance and memory consumption), and as mentioned before, you do not have to completely unload/load your app as you do in the “multi-page” style.

Problems with the WinJS.UI.Pages API and PageControlNavigator

The WinJS.UI.Pages API and PageControlNavigator, both new as of the Consumer Preview release of Windows 8, take some good steps towards reducing the complexity of implementing “single-page” style navigation in your application. However, I think there are some flaws in this approach and Motown presents you with a better solution.

The first problem lies in the semantics of specifying the Page control’s URI with WinJS.UI.Pages.define(uri). This feels awkward and redundant. Why do you need to specify the URI of the HTML file the Page control is supposed to work with if that HTML file is already including the script defining the Page control? If the intent is to relate the Page control to the particular HTML file with a URI then that information is already implicit due to the inclusion.

To be fair this implementation does allow you to move the inclusion of the Page control’s script to another file and still maintain the relationship. However, if you do move the inclusion of the script defining the Page control for the URI to a different file (e.g., the default.html or main page) you would sacrifice modularity and the performance advantages of lazily loading the script in the HTML file that it “belongs to”.Secondly, using WinJS.UI.Pages.define(uri) strongly couples your application’s logic to its presentation. In other words, with an MVC architecture in mind, the controller (the Page control) is strongly coupled to the view (HTML file) because of this hard-coded specification of the URI.

How would you reuse any of the logic defined in the Page control with a different view without introducing additional complexity? Ideally, you should be able to to use an arbitrary view with a particular controller and vice-versa, as long as the references the controller expects to find in the view are available using various querying functions (getElementById, queryAll, etc). If you want to share controller logic with this approach you will at least need to use “WinJS.UI.Pages.define(uri)” for each view you want to reuse the controller logic with and then come up with a way to pass the rest of the controller implementation into the second parameter of “WinJS.UI.Pages.define()”.Finally, PageControlNavigator as implemented does not allow your pages to maintain their state in between navigations. Every time you navigate to a page, whether you have previously loaded the page or not, the PageControlNavigator implementation loads the page from scratch every time.